Most applications today run in the cloud, but have you ever wondered how applications were deployed before cloud technologies arrived? Containers have become the norm for companies irrespective of their domain and the work they do, but they are the result of an evolution: from deploying applications directly on hardware, to virtual machines, and then to containers and container technologies.

In this article, we will discuss the evolution of virtualization: what the issues with bare metal computing were, why virtual machines and containers were introduced, and which problems they solved.

What is Virtualization?

Virtualization is the process of creating a software-based (virtual) representation of applications, storage, networks, or servers. In other words, virtualization lets us manage hardware such as servers as if it were a piece of software, which removes the need for physical interaction. For example, physical servers have their limitations, so rather than going to a server and working on it in person, we can manage it through software.

Evolution of Virtualization

Virtualization evolved from bare metal computing to virtual machines and then to containers. Let us start with bare metal computing, which was the only way of running applications until the 1960s.

Bare metal computing

Before the advent of containers and even virtual machines, we ran our applications directly on the hardware. This is called bare metal computing; that is the popular name today, but back then it was simply called computing.

In bare metal computing, the physical hardware layer sits at the bottom of the physical host. On top of the hardware we install an operating system suited to our needs, then the binaries and libraries needed to run the applications, and finally one or more applications installed directly on the host machine.

Features and Challenges

Following are the features and challenges of bare metal computing:

1. A single layer of binaries and libraries is shared across all the applications. If the applications need different versions of the same dependency, or have dependencies that are incompatible with each other, we might not get the expected results from them. This situation is known as dependency hell.

2. To tackle this problem, we need to either modify our applications so that their dependencies are compatible, or carve up the system so that both versions of a library are available. One way to do this is to set path environment variables (such as PATH or LD_LIBRARY_PATH) differently for the different processes.

3. Because of this dependency management challenge, developers tend to run fewer applications on each host machine, which makes it harder and harder to achieve high utilization with bare metal computing.

For example, say one application needs one CPU core, another application also needs one CPU core, and our server has 64 cores. Because of the challenges of managing dependencies for many applications on one host, it becomes very hard to use that compute capacity effectively.

4. Since we install multiple applications on the same system, an issue with one of them might break an underlying shared dependency and impact the other applications running on that host. This is called having a large blast radius, and it makes modifying anything on the host much more dangerous.

5. Starting up and shutting down a physical system takes on the order of minutes, so spinning down a server and rebooting it for any reason can take several minutes. This makes it hard to achieve high uptime and eliminate downtime for our applications.

6. Creating new physical machines, or provisioning a new system, is very slow. For large-scale bare metal computing we need our own data center: we have to order the hardware, get it installed and hook up networking, which can take days or weeks. Even with a co-location facility or a cloud provider that offers bare metal systems, provisioning might take hours, which is still slow compared with virtual machines and containers.
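The dependency hell and utilization points above can be made concrete with a small model. The Python sketch below is illustrative only: the application names, library names, versions, and the `/opt/app1/lib` path are hypothetical. `shared_layer_conflicts` reports which libraries a single shared layer cannot satisfy, `env_with_private_libs` shows the per-process LD_LIBRARY_PATH workaround from point 2, and `utilization` does the arithmetic for the 64-core example.

```python
import os
from collections import defaultdict

# Hypothetical applications and the library versions they require.
# On bare metal, all of them share ONE set of installed libraries.
APPS = {
    "app1": {"libssl": "1.1", "libxml": "2.9"},
    "app2": {"libssl": "3.0", "libxml": "2.9"},
}


def shared_layer_conflicts(apps):
    """Return {library: {version: [apps]}} for every library requested in more
    than one version, i.e. what a single shared layer cannot satisfy."""
    wanted = defaultdict(lambda: defaultdict(list))
    for app, deps in apps.items():
        for lib, version in deps.items():
            wanted[lib][version].append(app)
    return {
        lib: {v: sorted(a) for v, a in versions.items()}
        for lib, versions in wanted.items()
        if len(versions) > 1
    }


def env_with_private_libs(lib_dir):
    """Copy of the current environment with `lib_dir` first in LD_LIBRARY_PATH,
    so the dynamic linker searches it before the default library directories."""
    env = dict(os.environ)
    existing = env.get("LD_LIBRARY_PATH")
    env["LD_LIBRARY_PATH"] = lib_dir + (os.pathsep + existing if existing else "")
    return env


def utilization(cores_used, total_cores):
    """Fraction of a host's CPU cores that applications actually use."""
    return cores_used / total_cores


if __name__ == "__main__":
    print(shared_layer_conflicts(APPS))  # {'libssl': {'1.1': ['app1'], '3.0': ['app2']}}
    # Two 1-core applications on a 64-core server:
    print(f"{utilization(2, 64):.1%}")  # 3.1%
```

The environment returned by `env_with_private_libs` could be passed as `env=` to `subprocess.run` when launching one of the applications, which is exactly the kind of per-process carving the list describes, and exactly the kind of bookkeeping that virtual machines and containers remove.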

Virtual Machines

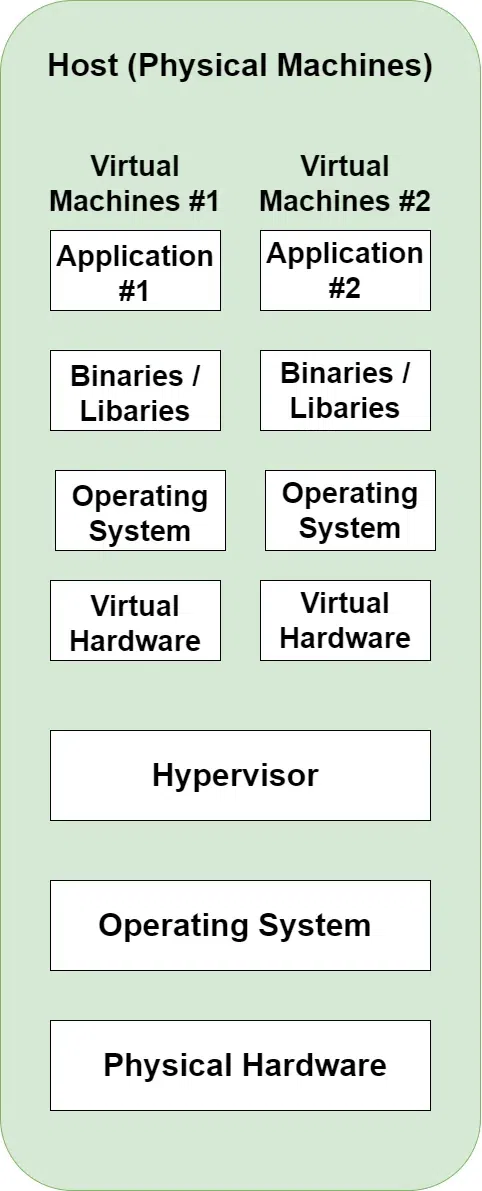

These challenges with bare metal computing prompted engineers to come up with virtualization in order to eliminate some of them. With virtual machines, the outer boundary is the host (the physical system or server). An operating system may or may not run on that hardware; above it (or directly on the hardware) runs the hypervisor, and on top of the hypervisor run the virtual machines.

Note: A hypervisor is a combination of software and hardware that allows us to carve up the physical resources.

The hypervisor carves up the pool of physical resources into isolated smaller parts that we can install our systems onto. It gives us a virtual hardware layer on which we install an operating system, binaries and libraries, and our applications. In bare metal computing both applications ran on a single physical host, while with virtual machines, application #1 and application #2 each get their own isolated virtual machine running on top of the hypervisor.
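As a rough illustration of "carving up" a pool of resources, here is a toy Python model of the bookkeeping a hypervisor performs. It is a sketch under simplifying assumptions, not how any real hypervisor works: the `Host` class, its method names, and the 64-core / 256 GiB host size are hypothetical, and only CPU cores and memory are modelled.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A physical host whose CPU cores and memory a toy hypervisor carves up."""
    cores: int
    memory_gib: int
    vms: dict = field(default_factory=dict)  # VM name -> (cores, memory_gib)

    def free(self):
        """Cores and memory not yet reserved by any VM."""
        used_cores = sum(c for c, _ in self.vms.values())
        used_mem = sum(m for _, m in self.vms.values())
        return self.cores - used_cores, self.memory_gib - used_mem

    def create_vm(self, name, cores, memory_gib):
        """Reserve a dedicated slice for a new VM; return False if it does not fit.

        Real hypervisors can over-commit resources; this sketch never does.
        """
        if name in self.vms:
            raise ValueError(f"VM {name!r} already exists")
        free_cores, free_mem = self.free()
        if cores > free_cores or memory_gib > free_mem:
            return False
        self.vms[name] = (cores, memory_gib)
        return True

    def delete_vm(self, name):
        """Release a VM's slice back to the host's pool."""
        self.vms.pop(name, None)


if __name__ == "__main__":
    host = Host(cores=64, memory_gib=256)
    host.create_vm("vm-app1", cores=2, memory_gib=8)
    host.create_vm("vm-app2", cores=2, memory_gib=8)
    print(host.free())  # (60, 240)
```

Each application now sits in its own slice of the host, which is the same idea that lets a cloud provider sell many small machine types out of a few large physical servers.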

Types of Hypervisors

There are two types of hypervisors:

Type 1 Hypervisor: A type 1 hypervisor runs directly on the physical hardware, with no underlying operating system. This is beneficial because we avoid the performance cost of an extra operating system layer beneath the virtualization software (there is still a small performance hit, but much less than when a hypervisor runs on top of an operating system installed on the hardware).

For example, AWS Nitro (the hypervisor used by Amazon Web Services) and VMware vSphere.

Type 2 Hypervisor: A type 2 hypervisor runs on top of an existing operating system and is mostly used to create virtual machines on a personal computer.

For example, VirtualBox. You can install VirtualBox and then within VirtualBox you can create virtual machines for various operating systems.

Features of Virtual Machines

- Virtual machines eliminate dependency hell. Each virtual machine has its own isolated set of binaries and libraries, so an application can specify whatever dependencies it needs without worrying about the needs of other applications.

- Virtual machines let us carve up a big server's CPU into many smaller chunks, each with its own virtual machine onto which we install our applications. This is what enables cloud providers to offer so many different machine types: they don't actually have all of those machine types installed in their data centers. Instead, they have much larger machines, and you are given part of one as a virtual machine.

- Virtual machines also significantly reduce the blast radius. Since applications run in isolated environments, if something goes wrong with the application in one virtual machine, applications in other virtual machines are not impacted. Because of this, developers can change one application without worrying about its impact on the others.

- Virtual machines start and stop faster than physical servers, whose startup and shutdown in bare metal computing take on the order of minutes.

- One very important feature of virtual machines is that we can provision and decommission them much faster. We can go to our favorite cloud provider, click a button or issue a command, and have a new virtual server ready within minutes. When we are done with it, we can decommission it and stop paying for it within minutes as well.
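The blast radius difference described in the list above can be expressed as a tiny model: a broken library only affects applications that live in the same environment. The Python sketch below is hypothetical throughout (the function name, environment names, and the `libssl` library are illustrative assumptions) and compares both applications sharing one bare metal host with one virtual machine per application.

```python
def blast_radius(environments, broken_env, broken_lib):
    """Apps affected when `broken_lib` breaks inside `broken_env`.

    `environments` maps an environment name to {app_name: set of libraries}.
    Only apps in the same environment can see the broken library.
    """
    apps = environments[broken_env]
    return sorted(app for app, libs in apps.items() if broken_lib in libs)


# Hypothetical layouts: both apps on one bare metal host vs. one VM per app.
BARE_METAL = {"host": {"app1": {"libssl"}, "app2": {"libssl"}}}
ONE_VM_PER_APP = {"vm1": {"app1": {"libssl"}}, "vm2": {"app2": {"libssl"}}}

if __name__ == "__main__":
    print(blast_radius(BARE_METAL, "host", "libssl"))     # ['app1', 'app2']
    print(blast_radius(ONE_VM_PER_APP, "vm1", "libssl"))  # ['app1']
```

The model ignores failures of the shared hardware or hypervisor itself, which would still affect every virtual machine on the host.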

Containers

In the case of containers, at the bottom we have either virtual or physical hardware with an operating system, and on top of that a container runtime.

Note: A container runtime is a piece of software that takes a container image and runs it within the host system.

The key difference between virtual machines and containers is that each virtual machine runs its own copy of the Linux kernel, while Linux containers share the kernel of the host operating system. In a virtual machine you have your own kernel and your own copy of the operating system; in containers, the kernel is shared with the host. As a result, containers don't get quite the same level of isolation as virtual machines, but they do get quite good isolation if things are configured properly.

Even though containers run on the same host and share its kernel, they are still isolated from each other and have their own copies of binaries and libraries.
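To see the shared kernel for yourself, the small Python sketch below prints the kernel release and the distribution name visible to the current process. The function name `kernel_and_userland` is hypothetical. The assumption is that if you run it on a Linux host and then inside a container on that host (for example, with an official Python image), the kernel release will be identical in both, while the distribution name reflects the container image's own files. On Docker Desktop for macOS or Windows, containers run inside a Linux virtual machine, so the shared kernel is that VM's kernel.

```python
import platform


def kernel_and_userland():
    """Return (kernel release, distribution name) as seen by this process.

    Inside a Linux container the kernel release matches the host's, because
    the kernel is shared, while the distribution name comes from the
    container image's own /etc/os-release file.
    """
    kernel = platform.release()  # same value as os.uname().release on Linux
    try:
        # Reads /etc/os-release (or /usr/lib/os-release); available in Python 3.10+.
        distro = platform.freedesktop_os_release().get("PRETTY_NAME", "unknown")
    except (OSError, AttributeError):
        # Non-Linux system, no os-release file, or Python older than 3.10.
        distro = "unknown"
    return kernel, distro


if __name__ == "__main__":
    print(kernel_and_userland())
```

A virtual machine on the same host would, by contrast, report its own guest kernel release.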


Types of Container Platforms

There are different types of container-related software:

Desktop container platforms: These are the container platforms you install on your development system to manage container images, run containers, set up the necessary networking, and so on. Docker and Podman fall into this category.

Container Runtimes: Container runtimes, on the other hand, are much more targeted software, designed specifically to take a container image that follows the specification and run it with the configuration options needed to provide the required isolated environment. Examples are containerd and CRI-O.
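From a desktop platform such as Docker or Podman, a container is usually started with a `run` command. The Python sketch below only builds the argument list for such a command; the function name `run_command` and the `alpine:3.19` image are illustrative assumptions. The `--rm`, `--cpus`, and `--memory` flags exist for `docker run`, and Podman's CLI is largely Docker-compatible, so the same flags are used for both.

```python
def run_command(engine, image, command, cpus=None, memory=None):
    """Build the argv for `<engine> run`, where engine is "docker" or "podman"."""
    if engine not in ("docker", "podman"):
        raise ValueError(f"unsupported engine: {engine}")
    argv = [engine, "run", "--rm"]  # --rm: delete the container when it exits
    if cpus is not None:
        argv += ["--cpus", str(cpus)]  # cap how much CPU the container may use
    if memory is not None:
        argv += ["--memory", memory]  # cap memory, e.g. "256m"
    return argv + [image, *command]


if __name__ == "__main__":
    print(" ".join(run_command("docker", "alpine:3.19", ["echo", "hello"], cpus=1, memory="256m")))
    # docker run --rm --cpus 1 --memory 256m alpine:3.19 echo hello
```

To actually start the container, the list could be passed to `subprocess.run`, provided the engine is installed and (for Docker) its daemon is running. Under the hood, the platform hands the image to a container runtime to create the isolated environment.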

Features of containers

- With containers, we don't have dependency conflicts, because each containerized application has its own binaries and libraries.

- Containerization improves utilization even further. A virtual machine needs an entire copy of an operating system, so virtual machine images are on the order of gigabytes. Container images can be much smaller because they share the host's kernel rather than carrying their own copy of the operating system, so they are generally on the order of megabytes.

- Because of this, we can use the resources of the system, and therefore the capability of the hardware, much more effectively.

- Containers have a small blast radius, since they provide some level of isolation, even if it is not as strong as the isolation offered by virtual machines.

- Containers start and stop even more quickly than virtual machines, on the order of seconds. Since starting a container from scratch is the equivalent of provisioning a new system in bare metal computing, we can bring up a new container and clear it out again within seconds.

- A key feature of containers is that they are small and light enough to use within our development environment, whereas creating a virtual machine that replicates the production environment is slow and clunky. Using containers in development gives us high confidence that our development environment is as similar as possible to production, which decreases the chances of hitting bugs caused by differences between the development and production environments.
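The "gigabytes versus megabytes" point above is easiest to appreciate with some arithmetic. The Python sketch below uses hypothetical image sizes (about 2 GiB for a VM image with a full guest operating system and 50 MiB for a container image); these numbers are assumptions chosen only to match the orders of magnitude in the text, not measurements.

```python
# Hypothetical image sizes, chosen only to match "gigabytes vs megabytes".
VM_IMAGE_MIB = 2 * 1024      # assumed: VM image with a full guest OS, ~2 GiB
CONTAINER_IMAGE_MIB = 50     # assumed: container image without its own kernel


def footprint_mib(instances, image_mib):
    """Disk needed when every instance stores its own full copy of the image."""
    return instances * image_mib


if __name__ == "__main__":
    vms = footprint_mib(10, VM_IMAGE_MIB)                # 20480 MiB (20 GiB)
    containers = footprint_mib(10, CONTAINER_IMAGE_MIB)  # 500 MiB
    print(f"VMs need {vms / containers:.0f}x more disk")  # VMs need 41x more disk
```

In practice container engines also store identical image layers only once and share them between containers, which this sketch ignores, so the real gap for many containers from the same image is usually larger.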

Bare metal computing vs Virtual machines vs Containers

| Bare metal computing | Virtual machines | Containers |
|---|---|---|
| poor | good | good |
| poor | fine | good |
| good | good | fine |
| poor | fine | good |
| poor | fine | good |
| good | fine | fine |
| poor | fine | good |

And this is how we went from running applications directly on hardware, to dividing the resources of a single hardware system among virtual machines, and finally to the world of small, lightweight containers.

FAQs On Virtualization in Cloud Computing

1. Which problems do containers solve?

Containers solve multiple issues around dependency management, scalability and portability of software.

2. What are the limitations of containers?

The main limitations of containers are security (their isolation is weaker than that of virtual machines) and increased software complexity. The learning curve of this technology is also quite steep.

3. How do you create containers?

You can create containers by using tools like Docker, Podman etc.

4. Which is better, containers or virtual machines?

Containers have an edge over virtual machines in many respects, but which one is better depends on the use case.

5. Is containerization really popular?

Yes, 37% of established tech companies use container technologies in their software.
