Deleting Files in HDFS using Python Snakebite

Last Updated: 14 Oct, 2020

Prerequisite: Hadoop Installation, HDFS

Snakebite is a popular Python library for communicating with HDFS. Its client library lets us write Python code that works directly on HDFS: it talks to the NameNode using protobuf messages, without making a system call to hdfs dfs. Note that Snakebite does not support Python 3, so the code in this article targets Python 2.

Deleting Files and Directories

Snakebite provides a method named delete() that removes one or more files or directories from HDFS. We will use the Python client library to perform the deletion. So, let's start with the hands-on.

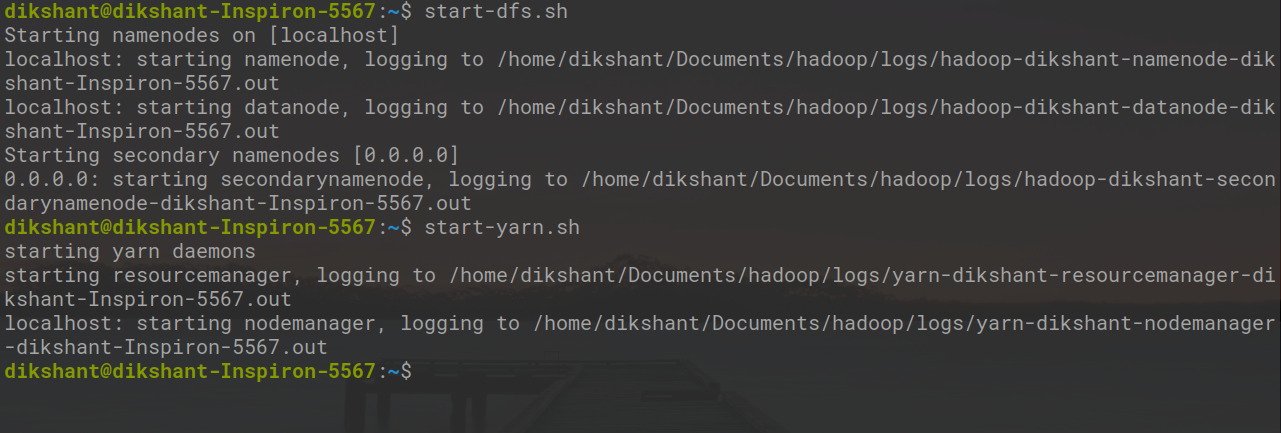

All the Hadoop daemons should be running. You can start them with the commands below.

start-dfs.sh # start the NameNode, DataNode and Secondary NameNode

start-yarn.sh # start the ResourceManager and NodeManager

Task: Recursively delete files and directories available on HDFS (in my case, I am removing the ‘/demo’ directory, which contains ‘/demo/demo1’, and the ‘/demo2’ directory).

Step 1: Let's list the files and directories that are available in HDFS with the command below.

hdfs dfs -ls /

In the above command, hdfs dfs is used to communicate with the Hadoop Distributed File System, and ‘-ls /’ lists the files and directories present in the root directory. We can also check the files available in HDFS manually.
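The same listing can also be done from Python. The following is a minimal sketch, assuming a client object that behaves like snakebite.client.Client, whose ls() method takes a list of paths and yields one dictionary per entry with a 'path' key. The helper name list_paths is hypothetical and only used for illustration.

```python
def list_paths(client, directory='/'):
    """Return the paths directly under `directory` as a sorted list.

    `client` is assumed to behave like snakebite.client.Client:
    ls() takes a list of paths and yields one dict per entry.
    """
    return sorted(entry['path'] for entry in client.ls([directory]))


# Usage against a running cluster (host and port as used in this article):
#   from snakebite.client import Client
#   print(list_paths(Client('localhost', 9000)))
```

Taking the client as an argument keeps the helper independent of how the connection is created, so it can be tried with a stand-in object before pointing it at a real cluster.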

Step 2: Create a file named remove_directory.py at the desired location in your local file system.

cd Documents/ # change directory to Documents (you can choose any location you like)

touch remove_directory.py # the touch command creates an empty file in a Linux environment

Step 3: Write the below code in the remove_directory.py python file.

Python

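from snakebite.client import Client

# Connect to the NameNode (use the host and RPC port of your cluster)
client = Client('localhost', 9000)

# delete() returns a generator, so iterate over it to perform the deletion
for p in client.delete(['/demo', '/demo2'], recurse=True):
    print(p)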

In the above program, recurse=True states that the directories will be deleted recursively: if a directory is not empty and contains subdirectories, those subdirectories are removed as well. In our case, /demo/demo1 is removed together with the /demo directory.
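Since delete() returns a generator, nothing is removed until the results are iterated. The sketch below wraps that loop in a small helper, assuming each yielded item is a dictionary with 'path' and 'result' keys. The name delete_paths is hypothetical and not part of Snakebite.

```python
def delete_paths(client, paths, recurse=True):
    """Delete `paths` and return (deleted, failed) lists of path strings.

    `client` is assumed to behave like snakebite.client.Client.
    """
    deleted, failed = [], []
    # delete() is lazy: the deletion only happens while we iterate.
    for item in client.delete(paths, recurse=recurse):
        if item.get('result'):
            deleted.append(item['path'])
        else:
            failed.append(item['path'])
    return deleted, failed


# Usage against a running cluster:
#   from snakebite.client import Client
#   deleted, failed = delete_paths(Client('localhost', 9000), ['/demo', '/demo2'])
```

Returning the two lists makes it easy to report which paths were actually removed instead of only printing each dictionary.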

Client() explanation:

The Client() constructor accepts the following arguments:

- host (string): Hostname or IP address of the NameNode.

- port (int): RPC port of the NameNode.

- hadoop_version (int): Hadoop protocol version (by default it is 9).

- use_trash (boolean): Use trash when removing the files.

- effective_user (string): Effective user for the HDFS operations (the default is the current user).
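As a rough illustration of these arguments, the sketch below passes them by keyword. Note that Snakebite's keyword for the effective user is effective_user. The helper name connect is hypothetical, the client class is passed in as a parameter so the helper can be tried without a cluster, and the 'hdfs' user name in the usage comment is an assumption.

```python
def connect(client_cls, host='localhost', port=9000,
            use_trash=True, effective_user=None):
    """Build a client using the arguments listed above.

    `client_cls` is expected to be snakebite.client.Client.
    With use_trash=True, deletions are asked to go through the HDFS trash.
    """
    return client_cls(host, port,
                      use_trash=use_trash,
                      effective_user=effective_user)


# Usage against a running cluster ('hdfs' is an assumed user name):
#   from snakebite.client import Client
#   client = connect(Client, effective_user='hdfs')
```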

If a path we specify is not found, the delete() method throws FileNotFoundException. If a path is a directory (for example, one that contains subdirectories) and recurse=True is not passed, delete() throws DirectoryException. Because delete() returns a generator, these exceptions are raised while the results are being iterated.
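These two exceptions can be caught around the loop that consumes the generator. The following is a minimal sketch, assuming the exception classes live in snakebite.errors. The helper name try_delete and its return strings are hypothetical, and the fallback classes are stand-ins so the sketch can be exercised on a machine without Snakebite.

```python
try:
    from snakebite.errors import DirectoryException, FileNotFoundException
except ImportError:
    # Stand-ins (not part of Snakebite) so the sketch can be read and
    # exercised where Snakebite is not installed.
    class DirectoryException(Exception):
        pass

    class FileNotFoundException(Exception):
        pass


def try_delete(client, path, recurse=False):
    """Delete one path; return 'deleted', 'failed', 'missing' or 'is-directory'."""
    try:
        # list() consumes the generator, so the exceptions surface here.
        results = list(client.delete([path], recurse=recurse))
    except FileNotFoundException:
        return 'missing'
    except DirectoryException:
        return 'is-directory'
    ok = bool(results) and all(item.get('result') for item in results)
    return 'deleted' if ok else 'failed'
```

A caller could, for instance, retry with recurse=True only after confirming that an 'is-directory' result is expected for that path.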

Step 4: Run the remove_directory.py file and observe the result.

python remove_directory.py # removes the directories passed to delete() recursively

In the output, ‘result’: True states that we have successfully removed the directory.

Step 5: We can check whether the directories have been removed, either manually or with the command below.

hdfs dfs -ls /

Now we can see that /demo and /demo2 are no longer available on HDFS.
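The same check can be scripted. The sketch below assumes a client that behaves like snakebite.client.Client, whose test() method returns False for a missing path when called with exists=True. The helper name still_present is hypothetical.

```python
def still_present(client, paths):
    """Return the subset of `paths` that still exist on HDFS.

    `client` is assumed to behave like snakebite.client.Client.
    """
    return [p for p in paths if client.test(p, exists=True)]


# Usage against a running cluster:
#   from snakebite.client import Client
#   leftover = still_present(Client('localhost', 9000), ['/demo', '/demo2'])
#   print(leftover)
```

An empty list would indicate that both directories are gone.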
