In this article, we will discuss how to create an MPI Cluster.

To set up a cluster in a local environment, the same version of Open MPI must be installed on every machine.

Prerequisites

- Operating System: Ubuntu 18.04.

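- MPI: Either Open MPI or MPICH can be used. This tutorial uses Open MPI (version 2.1.1). To list the Open MPI versions available in the package repositories:

apt list -a openmpi-bin

To install Open MPI, together with libopenmpi-dev, which provides the mpi.h header and the mpicc compiler wrapper used in Step 5:

sudo apt-get install openmpi-bin libopenmpi-dev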
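Since every node must run the same Open MPI version, it can be worth comparing versions across the cluster once it is wired up. The sketch below is a hypothetical helper (check_mpi_versions and the REMOTE_CMD testing hook are illustrative names, not part of Open MPI); it assumes the hostnames from Step 1 and the passwordless SSH from Step 3 are already in place, so run it after those steps.

```shell
# Hypothetical helper: compare the Open MPI version reported on each host.
# Assumes passwordless SSH (Step 3); REMOTE_CMD can replace ssh for testing.
check_mpi_versions() {
    expected=""
    for host in "$@"; do
        # The first line of "mpirun --version" names the version,
        # e.g. "mpirun (Open MPI) 2.1.1".
        version=$(${REMOTE_CMD:-ssh} "$host" mpirun --version 2>/dev/null | head -n 1)
        if [ -z "$version" ]; then
            echo "$host: could not read the Open MPI version" >&2
            return 1
        fi
        echo "$host: $version"
        if [ -z "$expected" ]; then
            expected="$version"
        elif [ "$version" != "$expected" ]; then
            echo "$host: version differs from the first host ($expected)" >&2
            return 1
        fi
    done
}
```

For example, check_mpi_versions manager worker1 worker2 worker3 prints one version line per host and returns a non-zero status at the first host whose version differs from the first one.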

Steps to Create an MPI Cluster

Step 1: Configure your hosts file

The computers will communicate with each other, and we don't want to type in IP addresses every time. Instead, we give a name to each node in the network that we want to communicate with. The hosts file (/etc/hosts) is used by the operating system to map hostnames to IP addresses.

Example of the hosts file on the manager (master) node. Open it with:

sudo nano /etc/hosts

and add the IP addresses of the manager and the workers:

#MPI CLUSTERS

172.20.36.120 manager

172.20.36.153 worker1

172.20.36.143 worker2

172.20.36.116 worker3

On a worker (slave) node, the hosts file lists the manager and the worker itself. Example of the hosts file on worker2:

#MPI CLUSTER SETUP

172.20.36.120 manager

172.20.36.143 worker2
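Editing /etc/hosts by hand on several machines is easy to get wrong. As a minimal sketch (the function name add_cluster_hosts is hypothetical), the helper below appends the example entries from the manager's hosts file above and skips any hostname that is already listed, so running it twice does no harm. Replace the addresses with your own; writing to /etc/hosts requires root, so call it from a root shell.

```shell
# Hypothetical helper: append the cluster's IP/hostname pairs to a hosts file,
# skipping any hostname that already has an entry.
# Usage: add_cluster_hosts [hosts-file]   (defaults to /etc/hosts; needs root)
add_cluster_hosts() {
    hosts_file="${1:-/etc/hosts}"
    while read -r ip name; do
        # Add the pair only if no line already contains this hostname as a field.
        if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done <<EOF
172.20.36.120 manager
172.20.36.153 worker1
172.20.36.143 worker2
172.20.36.116 worker3
EOF
}
```

Afterwards, getent hosts worker1 should print worker1's address, which confirms that the name resolves.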

Step 2: Create a new user

We could operate the cluster with existing users, but a dedicated user keeps things simpler. Create a user account with the same username on every machine, because SSH logins, the NFS path /home/mpiuser/cloud and mpirun all assume it.

To add a new user:

sudo adduser mpiuser

To make mpiuser a sudoer:

sudo usermod -aG sudo mpiuser
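With NFS's default sec=sys security, file ownership and permissions are checked by numeric user ID rather than by name, so files in the shared folder can appear with the wrong owner if mpiuser has a different UID on some machine. The hypothetical helper below (check_same_uid and the REMOTE_CMD testing hook are illustrative names) compares the UIDs; it assumes the passwordless SSH from Step 3 is already working.

```shell
# Hypothetical helper: check that USER has the same numeric UID on every host.
# Assumes passwordless SSH (Step 3); REMOTE_CMD can replace ssh for testing.
check_same_uid() {
    user="$1"
    shift
    expected=""
    for host in "$@"; do
        uid=$(${REMOTE_CMD:-ssh} "$host" id -u "$user") || {
            echo "$host: could not look up $user" >&2
            return 1
        }
        echo "$host: $user has UID $uid"
        if [ -z "$expected" ]; then
            expected="$uid"
        elif [ "$uid" != "$expected" ]; then
            echo "$host: UID differs from the first host ($expected)" >&2
            return 1
        fi
    done
}
```

For example, check_same_uid mpiuser manager worker1 worker2 worker3. If the UIDs differ, one option is to create the account with an explicit UID on every machine, e.g. sudo adduser --uid 1500 mpiuser (1500 is an arbitrary example value).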

Step 3: Setting up SSH

The machines talk to each other over the network via SSH and share data via NFS. Follow the steps below on the manager and on every worker node.

Install the SSH server:

sudo apt-get install openssh-server

Switch to the newly created user:

su - mpiuser

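Generate a key pair (accept the default location, ~/.ssh/id_rsa):

ssh-keygen -t rsa

Then move into ~/.ssh, authorize the key for logins to this machine, and copy the public key to each worker:

cd ~/.ssh/

cat id_rsa.pub >> authorized_keys

ssh-copy-id worker1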

For example, copying the key from the manager to worker2:

mpiuser@tele-h81m-s138:~/.ssh$ ssh-copy-id worker2

Now you can connect to the worker nodes without entering a password:

ssh worker2

On each worker node, after generating its own key pair the same way, copy its key to the manager:

ssh-copy-id manager
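To confirm that the keys work, a minimal sketch (check_passwordless_ssh and the SSH_CMD testing hook are hypothetical names) tries a trivial command on each host with ssh's BatchMode=yes option, which makes ssh fail instead of prompting for a password. A host whose key has never been accepted also fails in batch mode; ssh-copy-id normally takes care of that first connection.

```shell
# Hypothetical helper: confirm that ssh reaches each host without a password prompt.
# BatchMode=yes makes ssh fail instead of asking for a password.
# SSH_CMD can replace ssh for testing.
check_passwordless_ssh() {
    failed=0
    for host in "$@"; do
        if ${SSH_CMD:-ssh} -o BatchMode=yes "$host" true; then
            echo "$host: ok"
        else
            echo "$host: passwordless login failed" >&2
            failed=$((failed + 1))
        fi
    done
    # Succeed only if every host was reachable.
    [ "$failed" -eq 0 ]
}
```

On the manager, run check_passwordless_ssh worker1 worker2 worker3; on a worker, check_passwordless_ssh manager.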

Step 4: Setting up NFS

The manager shares a directory over NFS, and the workers mount it to exchange data.

NFS server on the manager node:

Install the required package:

$ sudo apt-get install nfs-kernel-server

We need to create a folder to share across the network. In our case, it is called “cloud” and lives in mpiuser's home directory (run this as mpiuser):

mkdir ~/cloud

To export the cloud directory, add an entry to /etc/exports:

sudo nano /etc/exports

Add

/home/mpiuser/cloud *(rw,sync,no_root_squash,no_subtree_check)

The * lets any host mount the folder. To restrict access, replace * with the IP address of the machine that is allowed to mount it.

For example:

/home/mpiuser/cloud 172.20.36.121(rw,sync,no_root_squash,no_subtree_check)

After making the entry, export the directory:

$ sudo exportfs -a

Run this command again every time /etc/exports changes; sudo exportfs -ra also removes exports that have been deleted from the file.

If required, restart the NFS server:

$ sudo service nfs-kernel-server restart
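If you set up the export from a script rather than in nano, a minimal sketch (add_export is a hypothetical name) appends the line with the same options used above, unless the directory is already exported. It must run as root when writing /etc/exports.

```shell
# Hypothetical helper: add an NFS export line for DIR unless DIR is already listed.
# Usage: add_export DIR CLIENT [exports-file]   (defaults to /etc/exports; needs root)
add_export() {
    dir="$1"
    client="$2"
    exports_file="${3:-/etc/exports}"
    opts="rw,sync,no_root_squash,no_subtree_check"   # options used in this article
    if grep -q "^${dir}[[:space:]]" "$exports_file" 2>/dev/null; then
        echo "$dir is already exported in $exports_file"
    else
        # Quote the client so a "*" is written literally instead of being globbed.
        printf '%s %s(%s)\n' "$dir" "$client" "$opts" >> "$exports_file"
    fi
}
```

For example, add_export /home/mpiuser/cloud '172.20.36.0/24' exports the folder to the whole example subnet (exports accepts network/prefix notation). Afterwards run sudo exportfs -ra, and sudo exportfs -v to list what is currently exported.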

NFS client on the worker nodes:

Install the required package:

$ sudo apt-get install nfs-common

On each worker, create a directory with the same name, “cloud”, in mpiuser's home directory:

$ mkdir ~/cloud

Then mount the shared directory:

$ sudo mount -t nfs manager:/home/mpiuser/cloud ~/cloud

To check the mounted directories,

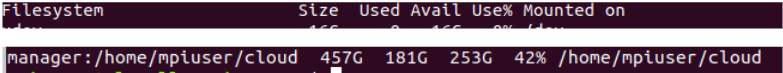

$ df -h

This is how it would show up
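df -h is fine for a quick look. For a scripted check, a minimal sketch (is_nfs_mounted is a hypothetical name) reads /proc/mounts, whose second and third columns are the mount point and the file system type. An NFS mount may be listed as nfs or nfs4 depending on the protocol version that was negotiated.

```shell
# Hypothetical helper: succeed if DIR is listed in the mount table as an NFS mount.
# Reads /proc/mounts by default; another file can be passed for testing.
is_nfs_mounted() {
    dir="$1"
    table="${2:-/proc/mounts}"
    # /proc/mounts columns: device mountpoint fstype options dump pass
    awk -v d="$dir" '$2 == d && ($3 == "nfs" || $3 == "nfs4") { found = 1 }
                     END { exit !found }' "$table"
}
```

For example, is_nfs_mounted /home/mpiuser/cloud && echo "cloud is mounted". Pass the absolute path, since /proc/mounts lists mount points that way.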

To make the mount permanent, so that you don't have to mount the shared directory manually after every reboot, add an entry to the file systems table, /etc/fstab:

$ sudo nano /etc/fstab

Add

#MPI CLUSTER SETUP

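manager:/home/mpiuser/cloud /home/mpiuser/cloud nfs defaults 0 0

To test the entry without rebooting, run sudo mount -a; it mounts every fstab entry that is not already mounted.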

Step 5: Running MPI programs

Navigate to the NFS shared directory (“cloud” in our case) and create the files there (or copy just the compiled executables there), so that every node sees the same files. For a source file named mpi_hello.c, compile it as shown below to produce an executable named mpi_hello.

$ mpicc -o mpi_hello mpi_hello.c

To run it on the manager machine only:

$ mpirun -np 2 ./mpi_hello

Here, -np sets the number of processes (2 in this example).

To run the code across the cluster:

$ mpirun -hostfile my_host ./mpi_hello

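Here, the my_host file lists the hosts (by hostname or IP address) and how many processes (slots) each one may run. Without -np, Open MPI starts one process per slot listed in the file.

Sample hostfile (my_host):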

manager slots=4 max_slots=40

worker1 slots=4 max_slots=40

worker2 max_slots=40

worker3 slots=4 max_slots=40
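A hostfile like the sample above can also be generated, and its slot count used for -np. In this minimal sketch, write_hostfile and total_slots are hypothetical names; total_slots adds up only explicit slots= values, so a line such as "worker2 max_slots=40" contributes nothing to the sum.

```shell
# Hypothetical helper: write a hostfile from HOST:SLOTS pairs.
# Usage: write_hostfile OUTPUT-FILE HOST:SLOTS...
write_hostfile() {
    out="$1"
    shift
    : > "$out"   # start with an empty file
    for entry in "$@"; do
        case "$entry" in
            *:*) ;;
            *) echo "expected HOST:SLOTS, got '$entry'" >&2; return 1 ;;
        esac
        printf '%s slots=%s\n' "${entry%%:*}" "${entry#*:}" >> "$out"
    done
}

# Hypothetical helper: sum the explicit slots=N values in a hostfile,
# ignoring comment lines and max_slots entries.
total_slots() {
    awk '/^[ \t]*#/ { next }
         { for (i = 2; i <= NF; i++) if ($i ~ /^slots=/) n += substr($i, 7) }
         END { print n + 0 }' "$1"
}
```

For example, write_hostfile my_host manager:4 worker1:4 worker2:4 worker3:4 followed by mpirun -np "$(total_slots my_host)" -hostfile my_host ./mpi_hello starts 16 processes.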

Alternatively, list the hosts directly on the command line with -host:

$ mpirun -np 2 -host worker1,localhost ./mpi_hello

Note: Hostnames can also be substituted with IP addresses.
