Bag-Of-Words Model In R

Last Updated :

14 Jan, 2024

Effectively representing textual data is crucial for training models in Machine Learning. The Bag-of-Words (BOW) model serves this purpose by transforming text into numerical form. This article comprehensively explores the Bag-of-Words model, elucidating its fundamental concepts and utility in text representation for Machine Learning.

What is Bag-of-Words?

Bag-of-words is useful for representing textual data in a passage when using text for training and modelling in Machine Learning. We represent the text in the form of numbers generally in Machine Learning. BOW allows to extract features from text using numerous ways to convert text into numbers. It provides two main features:

- Known vocabulary of words: BOW relies on a predefined vocabulary of words. Each unique word in the corpus is assigned a unique identifier.

- Frequency or probability of occurrence of words: BOW captures the frequency or probability of occurrence of each word in a document. This information is used to create a numerical representation of the text.

With the help of a bag of words, we can detect the type of document, useful for sentimental analysis, document classification, and spam filtering.

The BOW model treats each sentence as a vector, where each element of the vector corresponds to the frequency of a word in the dictionary converting a collection of text documents into a matrix, where each row represents a document, and each column represents a unique word.

But, BOW does not preserve the structure of sentences or consider word order. It treats each word as independent, ignoring semantic relationships.

Bag-of-Words Example

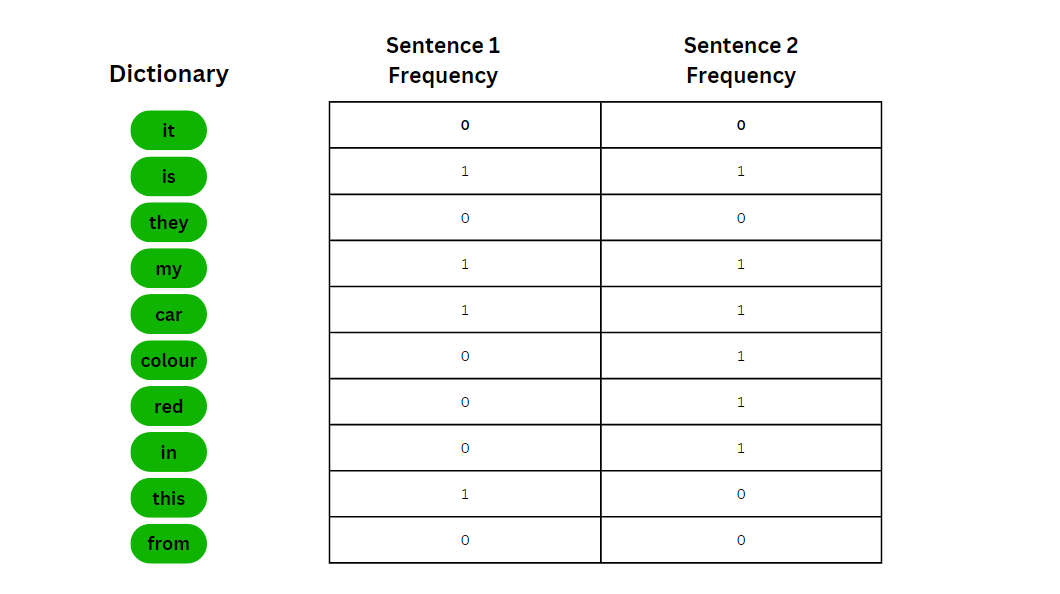

Suppose we have the following two sentences:

- This is my car.

- My car is red in colour.

So we would have a dictionary of some words and we track the frequency of words of each sentence.

With the frequency table, we can feed this vector into machine learning models and train them.

Text Classification using Bag of Words

We will be using the CSV file of Poems from poetryfoundation.org from kaggle.com.

Step 1: Install the libraries

install.packages("data.table")

install.packages("stringr")

install.packages("tm")

install.packages("caret")

Step 2: Import the data

R

library(data.table)

library(stringr)

library(tm)

library(slam)

data = read.csv("/kaggle/input/modern-renaissance-poetry/all.csv")$content

|

Preprocessing of Data

Before moving ahead, a text needs to be preprocessed before moving ahead. Here are two texts, for eg:

- Hello ,,, how are <b>you</b>

- I am fine, what about you?

First sentence contains lot of unnecessary characters which can make the model inaccurate. However the second sentence is quite perfect, still the comma, question mark is not required. Punctuation generally don’t add much information, similarly the case.

Second is stopwords. These words such as “and”, “in”, “on”, “the” don’t add much information and can skew the model. Hence we need to remove such words.

Step 3: Preprocess the data. It includes:

- Convert to lower case

- Remove punctuation

- remove numbers

- remove stopwords

- strip whitespace

- Finally create a matrix of words to document

We will be using tm and slam used for text mining and matrix usage.

R

corpus = Corpus(VectorSource(data))

corpus = tm_map(corpus, content_transformer(tolower))

corpus = tm_map(corpus, removePunctuation)

corpus = tm_map(corpus, removeNumbers)

corpus = tm_map(corpus, removeWords, stopwords("SMART"))

corpus = tm_map(corpus, stripWhitespace)

|

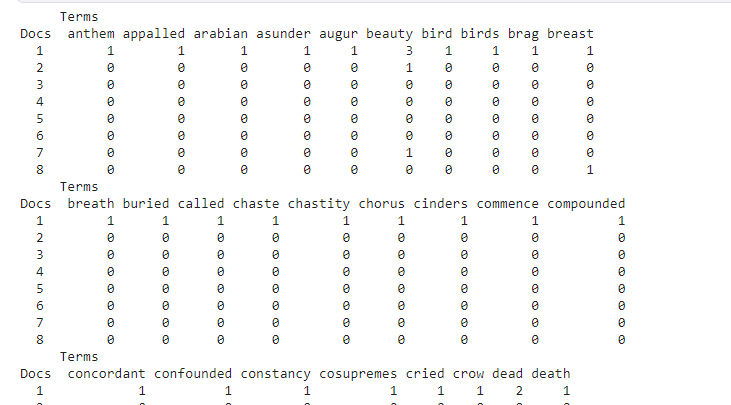

Document-Term Matrix

It is the frequency table with each document on one axis, and dictionary on the other. We will create a matrix using the DocumentTermMatrix method by passing the data corpus and then convert the object into matrix.

R

matrix = as.matrix(DocumentTermMatrix(corpus))

print(matrix)

|

Output:

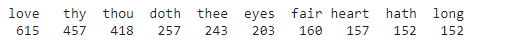

Step 4: First sum the columns of each word and then check the top ten words

R

word_frequencies = colSums(matrix)

N = 10

top_words = names(sort(word_frequencies, decreasing = TRUE)[1:N])

print(word_frequencies[top_words])

|

Output:

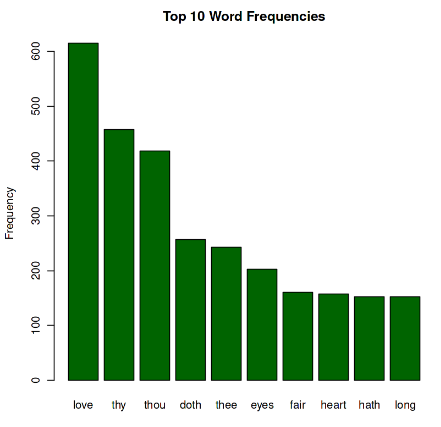

Step 5: We can also plot the word frequencies using barplot

R

top_frequencies = word_frequencies[top_words]

barplot(top_frequencies, main = "Top 10 Word Frequencies", xlab = "Words", ylab = "Frequency", col = "darkgreen")

|

Output:

Bag-Of-Words Model In R

In the following example, we use spam email dataset for the classification using bag of words. We use SVM classifier for classification of spam and ham(original). Download the dataset link.

Step 1: Load all required libraries

R

library(data.table)

library(stringr)

library(caret)

library(tm)

library(slam)

library(e1071)

|

Step 2: Load the dataset and preprocess the dataset similar to that of previous example

R

data = read.csv("/kaggle/input/spam-email/spam.csv")

corpus = Corpus(VectorSource(data$Message))

labels = as.numeric(factor(data$Category, levels = c("ham", "spam")))

labels = labels - 1

corpus = tm_map(corpus, content_transformer(tolower))

corpus = tm_map(corpus, removePunctuation)

corpus = tm_map(corpus, removeNumbers)

corpus = tm_map(corpus, removeWords, stopwords("SMART"))

corpus = tm_map(corpus, stripWhitespace)

dtm <- DocumentTermMatrix(corpus)

matrix <- as.matrix(dtm)

dtm_df <- as.data.table(matrix)

dtm_df$label <- labels

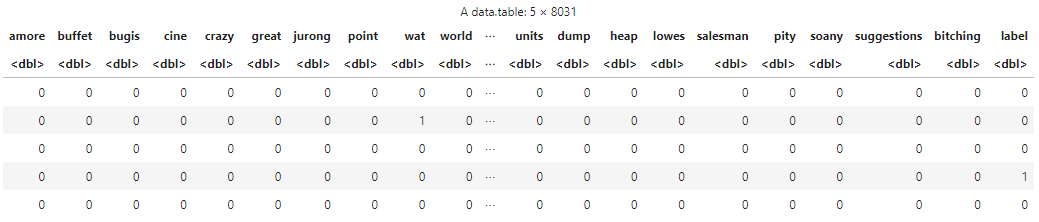

head(dtm_df)

|

Output:

Step 3: Perform the train test split in the ration of 80% 20% for train and test set respectively.

R

set.seed(42)

split_index <- sample(1:nrow(dtm_df), 0.8 * nrow(dtm_df))

train_set <- dtm_df[split_index]

test_set <- dtm_df[-split_index]

|

Step 4: Train the model and create predictions. Then create confusion matrix

R

model <- svm(label ~ ., data = train_set, kernel = "linear")

predictions <- predict(model, newdata = test_set[, -"label"])

threshold <- 0.5

binary_predictions <- ifelse(predictions > threshold, 1, 0)

confusion_matrix <- table(binary_predictions, test_set$label)

print(confusion_matrix)

accuracy <- sum(diag(confusion_matrix)) / sum(confusion_matrix)

print(paste("Accuracy:", accuracy))

|

Output:

binary_predictions 0 1

0 958 23

1 1 133

[1] "Accuracy: 0.97847533632287"

Hence, The model has a high accuracy of approximately 97.85%, indicating that it correctly predicts the class for a large proportion of instances.

Limitations to Bag-of-Words

- It loses the sequence information from the dataset. It just relies on the frequency of words appearing.

- It creates very sparse dataset since many words tend not to appear in a document.

- It ignores the context.

- It doesn’t relate the terms and hence loses the relationship among words.

- Tend to overfit since so many columns are formed with increasing vocabulary.

Conclusion

In conclusion, Bag-of-Words stands as a versatile tool for converting textual data into a format suitable for machine learning applications. While it excels in certain scenarios, its limitations, such as the loss of sequence information and sparse dataset creation, should be considered.

Share your thoughts in the comments

Please Login to comment...