Artificial Intelligence (AI) refers to the development of computer systems that perform tasks normally requiring human intelligence. These systems analyse large amounts of data to identify patterns and make decisions based on that information. The ultimate goal of AI is to create machines that can carry out a diverse range of such tasks.

Artificial Intelligence techniques are the methods and algorithms used to develop intelligent systems that can perform tasks requiring human-like intelligence. Some of the most widely used are:

- Machine Learning

- Natural Language Processing

- Computer Vision

- Deep Learning

- Data Mining

- Robotics

Machine Learning:

This approach involves building algorithms that learn patterns in data and make predictions based on those patterns. The main types are:

1. Unsupervised learning – AI systems analyse unlabelled data, where no predefined outcomes are provided. The objective is to uncover inherent structures or patterns within the data without any prior knowledge. For instance, it can group similar customer behaviour data to identify customer segments for targeted marketing strategies.

2. Supervised learning – AI systems learn from a labelled dataset, where each data point is associated with a known outcome, and infer from this training data a mapping between the inputs and the intended outputs. For instance, it enables email spam filters to distinguish between spam and legitimate emails based on learned patterns.

3. Semi-supervised learning – It is a method that uses a small amount of labelled data and a large amount of unlabelled data to train a model. The goal of semi-supervised learning is to learn a function that can accurately predict the output variable based on the input variables, similar to supervised learning. However, unlike supervised learning, the algorithm is trained on a dataset that contains both labelled and unlabelled data.

4. Reinforcement learning – In reinforcement learning (RL), the system gathers its own data through trial and error, learning from outcomes to decide which action to take next. After each action, the algorithm receives feedback that tells it whether the choice it made was correct, neutral, or incorrect. It acts with the aim of maximizing rewards; in other words, it learns by doing in order to achieve the best outcomes.
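
The trial-and-error loop described for reinforcement learning can be sketched with a toy problem. The example below is only an illustration under stated assumptions: the "environment" is a made-up set of three actions, each with a hidden chance of paying a reward, and the learner uses a simple explore-or-exploit rule (often called epsilon-greedy). The function name `run_bandit` and all numbers are hypothetical.

```python
import random


def run_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn by trial and error which action pays off most often.

    reward_probs holds the hidden chance that each action earns a reward of 1.
    The learner never reads it directly; it only sees the feedback.
    """
    rng = random.Random(seed)
    n_actions = len(reward_probs)
    counts = [0] * n_actions        # how often each action was tried
    estimates = [0.0] * n_actions   # running average reward per action
    total_reward = 0

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random action.
            action = rng.randrange(n_actions)
        else:
            # Exploit: take the action that currently looks best.
            action = max(range(n_actions), key=lambda a: estimates[a])

        # Feedback from the environment: 1 (rewarded) or 0 (not rewarded).
        reward = 1 if rng.random() < reward_probs[action] else 0
        total_reward += reward

        # Update the running average for the chosen action.
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]

    best_action = max(range(n_actions), key=lambda a: estimates[a])
    return best_action, estimates, total_reward


# Hypothetical environment: the third action is the most rewarding.
best, estimates, total = run_bandit([0.2, 0.5, 0.8])
print("action judged best:", best)
print("estimated reward per action:", [round(e, 2) for e in estimates])
```

The small amount of random exploration is what lets the learner discover that an action it has rarely tried is in fact better than its current favourite.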

Natural Language Processing:

Natural Language Processing involves programming computers to process human languages to facilitate interactions between humans and computers.

However, human languages are difficult for computers to process because the rules for conveying information in natural language are complex and often ambiguous. NLP leverages algorithms to recognize and abstract the rules of natural languages, converting unstructured human language data into a computer-understandable format.
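
One simple way to picture the conversion of unstructured text into a computer-understandable format is a bag-of-words count vector. This is a minimal sketch, not how any particular product works; the function names and sample sentences are made up for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def build_vocabulary(documents):
    """Map every distinct word to a fixed column index (sorted for stability)."""
    words = sorted({word for doc in documents for word in tokenize(doc)})
    return {word: index for index, word in enumerate(words)}


def vectorize(text, vocabulary):
    """Turn one text into a list of word counts, one slot per vocabulary word."""
    counts = Counter(tokenize(text))
    vector = [0] * len(vocabulary)
    for word, count in counts.items():
        if word in vocabulary:   # words outside the vocabulary are ignored
            vector[vocabulary[word]] = count
    return vector


# Hypothetical sample sentences.
documents = ["The agent is busy", "The agent will call you back"]
vocabulary = build_vocabulary(documents)
vectors = [vectorize(doc, vocabulary) for doc in documents]

print(sorted(vocabulary))  # column order of the vectors
print(vectors)
```

Each sentence becomes a fixed-length list of numbers that an algorithm can compare or feed into a model. Word order is lost in this representation, which is one reason real systems use richer encodings.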

Applications of Natural Language Processing can be found in interactive voice response (IVR) systems and other applications used in call centres, in language translation applications like Google Translate, and in word processors such as Microsoft Word, which use it to check the grammar of a text.

This AI technique has paved the way for virtual assistants, chatbots, and language translation tools, making communication between humans and machines more seamless than ever.

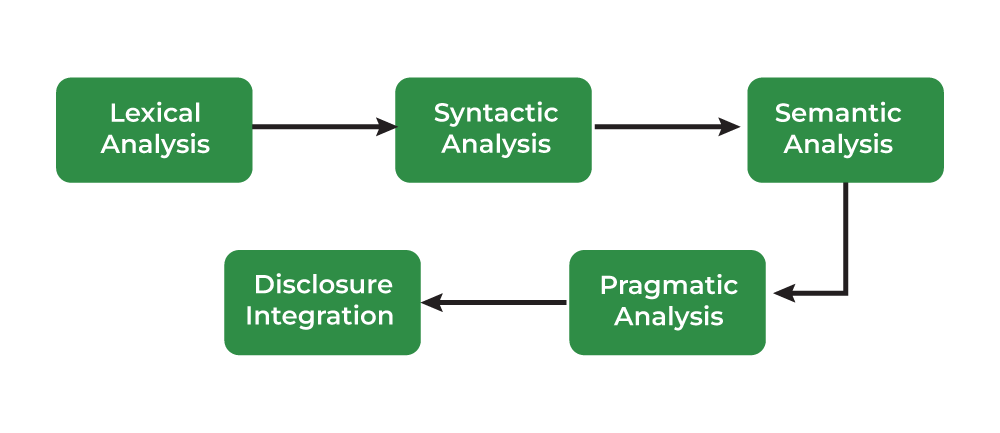

NLP commonly involves the following stages of analysis:

1. Lexical analysis – Lexical analysis is the process of converting a sequence of characters into a sequence of tokens. A lexer is generally combined with a parser, and together they analyse the syntax of programming languages, web pages, and so forth. Lexers and parsers are most often used for compilers. In NLP, the text or sound waves are segmented into words and other units.

2. Syntactic analysis – Syntactic analysis is the process of analysing a string of symbols, whether in natural language, computer languages, or data structures, to check that it conforms to the rules of a formal grammar. It is used in the analysis of computer languages to facilitate the writing of compilers and interpreters. Grammatical rules are applied to categories and groups of words, not individual words. It is a crucial part of NLP.

3. Semantic analysis – Semantic analysis attempts to understand the meaning of human language. It captures the meaning of the given text while considering context, the logical structure of sentences, and grammatical roles.

The two parts of semantic analysis are:

- Lexical Semantic Analysis

- Compositional Semantic Analysis

4. Pragmatic analysis – Pragmatic analysis is part of the process of extracting information from text. It takes a structured set of text and works out its actual, intended meaning, taking into account how the meaning of words depends on time and context.

Pragmatic analysis gauges these effects on interpretation by examining the communicative and social content of the given text.

5. Discourse analysis – Discourse analysis is used to uncover the motivation behind a text and is useful for studying the underlying meaning of a spoken or written text, as it considers the text’s social and historical context. It is a form of text or language analysis that involves interpreting the text and understanding social interactions and customs.
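
The first two stages in the list above, lexical and syntactic analysis, are easiest to see on a small formal language of the kind a compiler handles. The sketch below is an illustration only: it tokenizes and parses simple arithmetic rather than natural language, and the grammar, token names, and function names are assumptions made for the example.

```python
import re

TOKEN_PATTERN = re.compile(
    r"(?P<NUMBER>\d+)|(?P<OP>[-+*/()])|(?P<SPACE>\s+)|(?P<ERROR>.)"
)


def tokenize(text):
    """Lexical analysis: turn a character sequence into (kind, value) tokens."""
    tokens = []
    for match in TOKEN_PATTERN.finditer(text):
        kind = match.lastgroup
        if kind == "SPACE":
            continue
        if kind == "ERROR":
            raise ValueError(f"unexpected character {match.group()!r}")
        tokens.append((kind, match.group()))
    return tokens


def parse(tokens):
    """Syntactic analysis: check tokens against a small grammar and evaluate.

    Grammar:
        expr   -> term (("+" | "-") term)*
        term   -> factor (("*" | "/") factor)*
        factor -> NUMBER | "(" expr ")"
    """
    position = 0

    def peek():
        return tokens[position] if position < len(tokens) else ("END", "")

    def advance():
        nonlocal position
        token = peek()
        position += 1
        return token

    def factor():
        kind, value = advance()
        if kind == "NUMBER":
            return int(value)
        if value == "(":
            result = expr()
            if advance()[1] != ")":
                raise SyntaxError("expected ')'")
            return result
        raise SyntaxError(f"unexpected token {value!r}")

    def term():
        result = factor()
        while peek()[1] in ("*", "/"):
            operator = advance()[1]
            right = factor()
            result = result * right if operator == "*" else result / right
        return result

    def expr():
        result = term()
        while peek()[1] in ("+", "-"):
            operator = advance()[1]
            right = term()
            result = result + right if operator == "+" else result - right
        return result

    value = expr()
    if peek()[0] != "END":
        raise SyntaxError(f"unexpected token {peek()[1]!r}")
    return value


print(tokenize("2 + 3"))
print(parse(tokenize("2 + 3 * (4 - 1)")))
```

The lexer knows nothing about structure and the parser knows nothing about characters. Natural language is far less regular than this grammar, so the later stages (semantic, pragmatic, and discourse analysis) have no equally compact counterpart.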

Computer Vision:

Computer Vision equips machines with the ability to interpret visual information from the world. This technique has revolutionized industries like healthcare, automotive, and robotics, enabling tasks such as facial recognition, object detection, and autonomous driving. The extent to which it can discriminate between objects is an essential component of machine vision.

Sensitivity in computer vision is an AI application’s ability to pick out small details in visual information. A low-sensitivity system may miss subtle cues in images or work poorly in low lighting, whereas a high-sensitivity system can examine an image’s fine details and pick up on information other systems might miss. Surveillance systems are a common example.

Resolution is the level of detail a computer vision system can capture and process. High-resolution images are vital for correctly identifying an image’s detail.
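
A toy example can make sensitivity and resolution more concrete. The sketch below assumes a deliberately simplified model: an "image" is a single row of grayscale values, sensitivity is represented by a brightness-change threshold, and lowering the resolution means averaging neighbouring pixels. The function names and pixel values are made up for illustration.

```python
def detect_edges(pixels, threshold):
    """Return the positions where brightness jumps by at least `threshold`."""
    return [
        i for i in range(1, len(pixels))
        if abs(pixels[i] - pixels[i - 1]) >= threshold
    ]


def downsample(pixels, factor):
    """Lower the resolution by averaging each run of `factor` pixels."""
    return [
        sum(pixels[i:i + factor]) / len(pixels[i:i + factor])
        for i in range(0, len(pixels), factor)
    ]


# One row of grayscale values (0 = black, 255 = white), made up for illustration.
row = [10, 10, 12, 30, 30, 31, 200, 200, 30, 30]

strong_only = detect_edges(row, threshold=100)   # low sensitivity
with_subtle = detect_edges(row, threshold=15)    # high sensitivity
coarse_row = downsample(row, factor=2)           # half the resolution

print(strong_only)                                  # [6, 8]
print(with_subtle)                                  # [3, 6, 8]
print(detect_edges(coarse_row, threshold=15))       # [3, 4]
```

With the lower threshold the faint step at position 3 is detected as well as the two strong ones. After downsampling, that faint step is smeared across two smaller changes and is no longer found, even at the sensitive setting.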

Application of computer vision

Robotics & Automation:

Automation aims to enable machines to perform boring, repetitive jobs, increasing productivity and delivering more effective, efficient, and affordable results. To automate processes, many businesses employ machine learning, artificial neural networks, and graphs.

Combined with techniques such as CAPTCHA, automation can also help prevent fraud during online payments.

Robotic process automation is designed to carry out high-volume, repetitive jobs while being capable of adapting to changing conditions.

Deep Learning:

Deep learning is a branch of machine learning based on artificial neural network architectures. An artificial neural network (ANN) uses layers of interconnected nodes called neurons that work together to process and learn from the input data.

In a fully connected deep neural network, an input layer is followed by one or more hidden layers connected one after the other, ending in an output layer. Each neuron receives input from the previous layer neurons or the input layer. The output of one neuron becomes the input to other neurons in the next layer of the network, and this process continues until the final layer produces the output of the network. The layers of the neural network transform the input data through a series of nonlinear transformations, allowing the network to learn complex representations of the input data.
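
The layer-by-layer flow described above can be sketched in a few lines. This is a minimal illustration, assuming a tiny network with hand-picked weights, a ReLU activation in the hidden layer, and a sigmoid at the output. None of these choices come from the text, and a real network would learn its weights from data.

```python
import math


def relu(x):
    """Nonlinear activation: pass positive values, clamp negatives to zero."""
    return max(0.0, x)


def sigmoid(x):
    """Nonlinear activation that squashes any value into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: every neuron sees every input value."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the input through each layer in turn; each output feeds the next."""
    values = inputs
    for weights, biases, activation in layers:
        values = dense(values, weights, biases, activation)
    return values


# Hand-picked weights for illustration only.
layers = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, 0.0], relu),   # hidden layer, 2 neurons
    ([[1.0, -1.0]], [3.0], sigmoid),                 # output layer, 1 neuron
]
output = forward([1.0, 2.0], layers)
print(output)  # [0.5]
```

For the input `[1.0, 2.0]` the hidden layer produces `[0.0, 3.0]`, and the output neuron then computes `sigmoid(0.0)`, which is `0.5`. Without the nonlinear activations, the stacked layers would collapse into a single linear transformation.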

The main applications of deep learning can be divided into computer vision, natural language processing (NLP), and reinforcement learning.

In computer vision, deep learning models enable machines to identify and understand visual data. The main applications include identifying and locating objects within images and videos.

In NLP, deep learning models enable machines to understand and generate human language. The main applications include generating essays, translating languages, and sentiment analysis.

In reinforcement learning, deep learning is used to train agents to take actions in an environment so as to maximize a reward. The main applications include training robots to perform complex tasks such as grasping objects, navigation, and manipulation.

Data Mining:

Data mining is the process of extracting knowledge or insights from large amounts of data using various statistical and computational techniques. The data can be structured, semi-structured, or unstructured, and can be stored in various forms such as databases, data warehouses, and data lakes.

The primary goal of data mining is to discover hidden patterns and relationships in the data that can be used to make informed decisions or predictions. This involves exploring the data using various techniques such as clustering, classification, regression analysis, association rule mining, and anomaly detection.
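
Of the techniques listed, clustering is perhaps the simplest to show in code. The sketch below is a bare-bones version of k-means on one-dimensional data, written only to illustrate the idea. The function name, the deterministic starting centroids, and the spending figures are all assumptions made for the example.

```python
def k_means(points, k, iterations=20):
    """Group 1-D points into k clusters; returns (centroids, labels)."""
    ordered = sorted(points)
    # Deterministic start: spread the initial centroids across the sorted data.
    centroids = [
        ordered[(i * (len(ordered) - 1)) // max(k - 1, 1)] for i in range(k)
    ]
    labels = [0] * len(points)

    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: abs(p - centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its points.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            new_centroids.append(
                sum(members) / len(members) if members else centroids[c]
            )
        if new_centroids == centroids:
            break   # nothing moved, so the grouping is stable
        centroids = new_centroids

    return centroids, labels


# Hypothetical monthly spend per customer.
monthly_spend = [12.0, 15.0, 11.0, 95.0, 102.0, 99.0]
centroids, labels = k_means(monthly_spend, k=2)
print(labels)                             # [0, 0, 0, 1, 1, 1]
print([round(c, 2) for c in centroids])   # [12.67, 98.67]
```

No labels were supplied, yet the low and high spenders end up in separate groups. Production libraries add better initialisation and support for many dimensions, which this sketch leaves out.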

Data mining has a wide range of applications across various industries, including marketing, finance, healthcare, and telecommunications. For example, in marketing, data mining can be used to identify customer segments and target marketing campaigns, while in healthcare, it can be used to identify risk factors for diseases and develop personalized treatment plans.

However, data mining also raises ethical and privacy concerns, particularly when it involves personal or sensitive data. It’s important to ensure that data mining is conducted ethically and with appropriate safeguards in place to protect the privacy of individuals and prevent misuse of their data.
