Scrapy – Requests and Responses

Last Updated :

24 Aug, 2023

In this article, we will explore the Request and Response-ability of Scrapy through a demonstration in which we will scrape some data from a website using Scrapy request and process that scraped data from Scrapy response.

Scrapy – Requests and Responses

The Scrapy Framework, built using Python, is one of the most popular open-source web crawling frameworks. The motivation behind Scrapy is to make searching through large Web pages and extraction of data efficient and easy. No matter the size or complexity of the website, Scrapy is capable of crawling any website. One of the core features of Scrapy is its ability to send HTTP requests and handle the respective responses.

Required Modules

Before diving into the Scrapy requests and responses demonstration, the following modules are expected to be installed:

- Scrapy: The main module for web scraping. Introduction

- Python: The programming language in which Scrapy is built.

To install Scrapy we can use pip as follow,

pip install scrapy

Scrapy Syntax and Parameters

Scrapy follows a particular syntax in which it accepts quite a number of parameters mentioned and explained below,

Syntax: yield scrapy.Request(url, callback, method=’GET’, headers=None, body=None)

Parameter:

As you can see the above code line is a typical Scrapy Request with its parameters which are,

- `url`: This is the URL of the website you want to scrape.

- `callback`: The method or handler that will process the response received

- `method`: This is an optional parameter that represents the HTTP method to be used.

- `headers`: Again an optional parameter this includes the header in the request.

- `body`: This refers to the request body mostly used while performing a POST request.

Making Requests using Scrapy

Now, when sending Requests using Scrapy, we send a Request object which represents an HTTP request sent to a website. To understand the requests in Scrapy let’s consider the following example,

The consists of a class named `Myspider` in which we have 2 methods first `start_requests` which will make a scrapy request to the URL provided and the second `parse` which will be called when a response is received it is used for parsing the response but in this, we are just printing it.

Python

import scrapy

class MySpider(scrapy.Spider):

name = 'scrapy_example'

def start_requests(self):

def parse(self, response):

print(response.body)

|

To run the code use the following command,

$ scrapy runspider .\<script_filename>.py

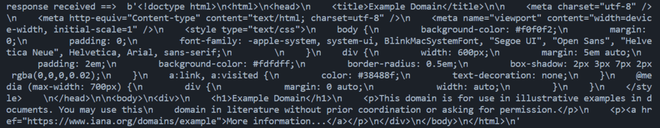

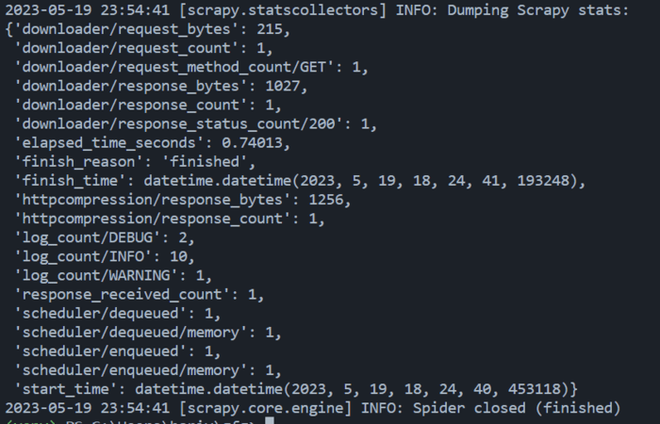

Once the script is executed it makes an HTTP request to the URL mentioned and after getting a response it will print the content of the response body as follow.

The first image contains and in the second one we can see all the stat related to this particular Scapy request.

The HTML of the website received as a response

Stat related to this particular Scapy request

Handling Responses

Once a request is made to a particular website using Scrapy and the request does not lead to any error then in return the website server sends a Response object that has a number of properties associated with it which can be used to extract data related to the website and the request-response performed. A Scrapy Request Object consists of the following information:

- HTTP status code.

- any headers if required.

- the response body containing all the data.

Now that we know how to make a scrapy request to a URL and get data, let’s see how we can parse the response data so we can extract the required information from it.

Python3

import scrapy

class MySpider(scrapy.Spider):

name = 'scrapy_example'

def start_requests(self):

callback=self.parse)

def parse(self, response):

headings = response.css('h1::text').getall()

paragraphs = response.css('p::text').getall()

print("Headings:")

for heading in headings:

print(heading)

print("\nParagraphs:")

for paragraph in paragraphs:

print(paragraph)

|

We’ve created a spider called MySpider in the above code. Once we execute the above code the `start_requests` method is called and makes the request to the URL provided. After the request response is received from the web server that contains the data it is passed to the parse() method.

In the parse method, we extract all the h1 and p tags data from the response and print it to the console as shown in the illustration below:

Share your thoughts in the comments

Please Login to comment...