Rate Limiting in System Design

Last Updated: 14 Mar, 2024

Rate limiting is an important concept in system design that involves controlling the rate of traffic or requests to a system. It plays a vital role in preventing overload, improving performance, and enhancing security. This article explores the importance of rate limiting in system design, the various rate-limiting strategies and algorithms, and how to implement rate limiting effectively to ensure the stability and reliability of a system.


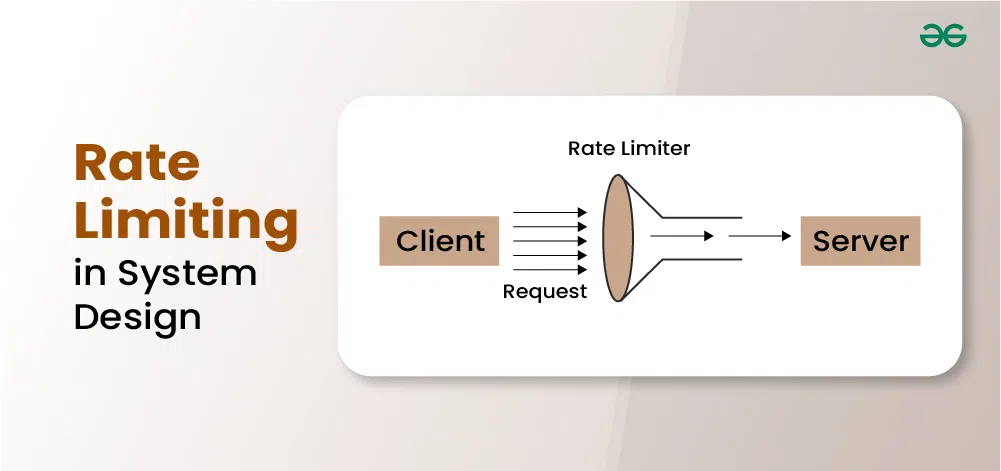

What is Rate Limiting?

Rate limiting is a technique used in system design to control the rate at which incoming requests or actions are processed or served by a system. It imposes constraints on the frequency or volume of requests from clients to prevent overload, maintain stability, and ensure fair resource allocation.

- By setting limits on the number of requests allowed within a specific timeframe, rate limiting helps to mitigate the risk of system degradation, denial-of-service (DoS) attacks, and abuse of resources.

- Rate limiting is commonly implemented in various contexts, such as web servers, APIs, network traffic management, and database access, to ensure optimal performance, reliability, and security of systems.

What is a Rate Limiter?

A rate limiter is a component that controls the rate of traffic or requests to a system. It is the specific implementation or tool used to enforce rate limiting.

Importance of Rate Limiting in System Design

Rate limiting plays a crucial role in system design for several reasons:

- Preventing Overload: Rate limiting helps prevent overload situations by controlling the rate of incoming requests or actions. By imposing limits on the frequency or volume of requests, systems can avoid becoming overwhelmed and maintain stable performance.

- Ensuring Stability: By regulating the flow of traffic, rate limiting helps ensure system stability and prevents resource exhaustion. It allows systems to handle incoming requests in a controlled manner, so that sudden spikes in demand do not lead to degradation or failure.

- Mitigating DoS Attacks: Rate limiting is an effective defense mechanism against denial-of-service (DoS) attacks, where attackers attempt to flood a system with excessive requests to disrupt its operation. By enforcing limits on request rates, systems can mitigate the impact of DoS attacks and maintain availability for legitimate users.

- Fair Resource Allocation: Rate limiting promotes fair resource allocation by ensuring that all users or clients have equitable access to system resources. By limiting the rate of requests, systems can prevent certain users from monopolizing resources and prioritize serving requests from a diverse user base.

- Optimizing Performance: Rate limiting can help optimize system performance by preventing excessive resource consumption and improving response times. By controlling the rate of incoming requests, systems can allocate resources more efficiently and provide better overall performance for users.

- Protecting Against Abuse: Rate limiting helps protect systems against abuse or misuse by limiting the frequency of requests from individual users or clients. It can prevent abusive behavior such as spamming, scraping, or unauthorized access, thereby safeguarding system integrity and security.

Types of Rate Limiting

Rate limiting can be implemented in various ways depending on the specific requirements and constraints of a system. Here are some common types of rate limiting techniques:

1. Fixed Window Rate Limiting

In fixed window rate limiting, a fixed time window (e.g., one minute, one hour) is used to track the number of requests or actions allowed within that window. Requests exceeding the limit are either rejected or throttled until the window resets.

Example: Allow up to 100 requests per minute.

2. Sliding Window Rate Limiting

Sliding window rate limiting tracks requests within a time window that continuously moves forward in time, rather than resetting at fixed boundaries. Requests are counted within the window, and if the limit is exceeded, subsequent requests are rejected or delayed until older requests fall out of the window.

Example: Allow up to 100 requests in any 60-second rolling window.
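A common, memory-cheap way to approximate a rolling window is the sliding window counter: keep one count for the current fixed window and one for the previous window, and weight the previous count by the fraction of it that still overlaps the rolling window. The sketch below assumes a single process and a caller-supplied timestamp in seconds; `SlidingWindowCounter` and its method are hypothetical names, not a library API.

```python
class SlidingWindowCounter:
    """Approximate sliding window limiter (hypothetical sketch)."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self.current_index = 0      # which fixed window the counters refer to
        self.current_count = 0      # requests accepted in the current window
        self.previous_count = 0     # requests accepted in the window before it

    def allow(self, now: float) -> bool:
        index = int(now // self.window)
        if index != self.current_index:
            # Roll forward. If more than one window has passed, the old
            # counts no longer overlap the rolling window at all.
            if index == self.current_index + 1:
                self.previous_count = self.current_count
            else:
                self.previous_count = 0
            self.current_index = index
            self.current_count = 0
        # Fraction of the previous window still inside the rolling window.
        elapsed = now - index * self.window
        overlap = 1.0 - elapsed / self.window
        estimated = self.previous_count * overlap + self.current_count
        if estimated < self.limit:
            self.current_count += 1
            return True
        return False
```

For example, with a limit of 10 per minute, a client that used all 10 requests at 0:30 can make about 5 more at 1:30, because half of the previous window still counts. The estimate assumes the previous window's requests were spread evenly, trading some precision for storing only two counters per client.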

3. Token Bucket Rate Limiting

Token bucket rate limiting allocates tokens at a fixed rate over time into a “bucket.” Each request consumes one or more tokens from the bucket. Requests are allowed only if there are sufficient tokens in the bucket. If not, requests are delayed or rejected until tokens become available.

Example: Add tokens at a rate of 100 per minute; each request consumes one token, and the bucket's capacity caps how many unused tokens can accumulate for a burst.

4. Leaky Bucket Rate Limiting

Leaky bucket rate limiting models a bucket with a hole in the bottom: incoming requests are added to the bucket at whatever rate they arrive, and they leak out (are processed) at a constant, controlled rate. Requests are accepted if the bucket has capacity, and excess requests are either delayed or rejected.

Example: A bucket that holds up to 100 pending requests and leaks (processes) 10 requests per second.

5. Distributed Rate Limiting

Distributed rate limiting enforces a consistent limit across multiple nodes or instances of a system, so that rate limiting keeps working under high traffic loads and scales with the system. Because a client's requests may be spread over many nodes, the nodes must coordinate their counts; techniques such as consistent hashing, token passing, or distributed caches are used for this.

Example: Distribute rate limiting across multiple API gateways or load balancers.
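To see why coordination matters, consider several gateway instances that each enforce a limit locally: a client whose requests are spread across three instances effectively gets three times its quota. The sketch below routes every instance's count through one shared store. `SharedCounterStore` is a hypothetical in-memory stand-in for a networked store such as Redis, where the atomic `INCR` command (typically paired with `EXPIRE` to discard old windows) plays the role of `incr()` here; the fixed-window keying scheme is likewise an illustrative assumption.

```python
import threading


class SharedCounterStore:
    """In-memory stand-in for a shared store such as Redis (hypothetical).

    incr() is atomic, mirroring the INCR command that distributed
    limiters typically rely on; expiry of old keys is omitted for brevity.
    """

    def __init__(self) -> None:
        self._counts = {}               # key -> number of requests seen
        self._lock = threading.Lock()   # makes increments atomic

    def incr(self, key: str) -> int:
        with self._lock:
            self._counts[key] = self._counts.get(key, 0) + 1
            return self._counts[key]


class GatewayInstance:
    """One of several gateway nodes enforcing a shared fixed-window limit."""

    def __init__(self, store: SharedCounterStore, limit: int, window: int) -> None:
        self.store = store
        self.limit = limit
        self.window = window

    def allow(self, client_id: str, now: float) -> bool:
        # Every node builds the same key for the same client and window,
        # so their increments land on one shared counter.
        key = f"{client_id}:{int(now // self.window)}"
        return self.store.incr(key) <= self.limit
```

With a limit of 5 per window, requests from one client spread round-robin over three instances are admitted five times in total; with per-instance counters, each instance would admit five on its own.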

6. Adaptive Rate Limiting

Adaptive rate limiting adjusts the rate limits dynamically based on system load, traffic patterns, or other factors. Machine learning algorithms, statistical analysis, or feedback loops may be used to adjust rate limits in real time.

Example: Automatically adjust rate limits based on server load or response times.
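One simple feedback loop for adaptive limits is additive increase/multiplicative decrease (AIMD), the same idea TCP congestion control uses: raise the limit slowly while the system is healthy and cut it sharply when a health signal degrades. The sketch assumes latency is the health signal and that something calls `update()` once per evaluation interval; the class name, thresholds, and step sizes are hypothetical.

```python
class AdaptiveLimit:
    """Adjusts a requests-per-second limit from observed latency using
    additive increase / multiplicative decrease (hypothetical sketch)."""

    def __init__(self, initial: float, minimum: float, maximum: float,
                 target_latency_ms: float, step: float = 10.0,
                 backoff: float = 0.5) -> None:
        self.limit = initial
        self.minimum = minimum
        self.maximum = maximum
        self.target_latency_ms = target_latency_ms
        self.step = step          # added per healthy interval
        self.backoff = backoff    # multiplier per unhealthy interval

    def update(self, observed_latency_ms: float) -> float:
        if observed_latency_ms > self.target_latency_ms:
            # Server is struggling: cut the limit sharply, but not below the floor.
            self.limit = max(self.minimum, self.limit * self.backoff)
        else:
            # Server is healthy: probe for more capacity slowly, up to the ceiling.
            self.limit = min(self.maximum, self.limit + self.step)
        return self.limit
```

The returned value would then be fed to an enforcing limiter, for example as the refill rate of a token bucket. The minimum and maximum clamps keep a run of slow responses from driving the limit to zero and a quiet period from raising it without bound.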

Use Cases of Rate Limiting

Below are the use cases of Rate Limiting:

- API Rate Limiting: APIs often implement rate limiting to control the rate of requests from clients, ensuring fair access to resources and preventing abuse. For example, social media platforms limit the number of API requests per user per hour to prevent spamming and ensure system stability.

- Web Server Rate Limiting: Web servers use rate limiting to protect against DoS attacks and prevent server overload. By limiting the rate of incoming requests, web servers can ensure availability and maintain performance during traffic spikes or malicious attacks.

- Database Rate Limiting: Rate limiting is applied to database queries to prevent excessive load on the database server and maintain database performance. For example, an e-commerce website may limit the number of database queries per user to prevent resource exhaustion and ensure smooth operation.

- Login Rate Limiting: Rate limiting is used in login systems to prevent brute-force attacks and password guessing. By limiting the number of login attempts per user or IP address, systems can protect against unauthorized access and enhance security.

- Payment Processing Rate Limiting: Payment processing systems employ rate limiting to curb fraudulent transactions and to support compliance with payment regulations. Limiting the rate of payment requests or transactions (for example, per card or per account) slows down suspicious activity, such as many rapid attempts with different card numbers, and gives fraud-detection systems time to flag it in real time.
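For login endpoints, a useful pattern is to count failed attempts under two keys at once, the username and the client IP, so that both a single account attacked from many addresses and one address guessing many accounts get throttled. The sketch below is hypothetical: the class name, the key prefixes, and the thresholds (for instance 5 failures per user and 20 per IP per 15 minutes) are illustrative assumptions, not recommended values.

```python
from collections import defaultdict, deque


class LoginThrottle:
    """Counts failed login attempts per username and per IP address
    within a rolling window (hypothetical sketch)."""

    def __init__(self, max_per_user: int, max_per_ip: int, window: float) -> None:
        self.max_per_user = max_per_user
        self.max_per_ip = max_per_ip
        self.window = window
        self.failures = defaultdict(deque)  # key -> timestamps of recent failures

    def _recent(self, key: str, now: float) -> int:
        # Drop failures that are older than the window, then count the rest.
        log = self.failures[key]
        while log and log[0] <= now - self.window:
            log.popleft()
        return len(log)

    def may_attempt(self, username: str, ip: str, now: float) -> bool:
        return (self._recent("user:" + username, now) < self.max_per_user
                and self._recent("ip:" + ip, now) < self.max_per_ip)

    def record_failure(self, username: str, ip: str, now: float) -> None:
        self.failures["user:" + username].append(now)
        self.failures["ip:" + ip].append(now)
```

After five failed attempts against one account from five different addresses, further attempts for that account are refused until the failures age out of the window, while other accounts attempted from a fresh address are unaffected.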

Rate Limiting Algorithms

Several rate limiting algorithms are commonly used in system design to control the rate of incoming requests or actions. Here are some popular rate limiting algorithms:

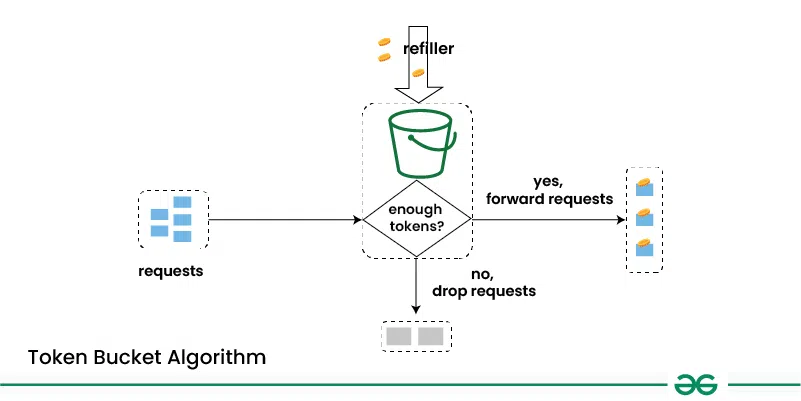

1. Token Bucket Algorithm

- The token bucket algorithm allocates tokens at a fixed rate into a “bucket.”

- Each request consumes a token from the bucket, and requests are only allowed if there are sufficient tokens available.

- Unused tokens are stored in the bucket, up to a maximum capacity.

- This algorithm provides a simple and flexible way to control the average rate of requests while still permitting short bursts, up to the bucket's capacity.
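The token bucket steps above can be sketched in a few lines. This version assumes a single process and takes the current time explicitly (in seconds) so its behaviour is easy to test; a production version would read a monotonic clock and add locking. `TokenBucket` is a hypothetical name.

```python
class TokenBucket:
    """Token bucket limiter (sketch). Tokens refill continuously at `rate`
    per second up to `capacity`; each request spends `cost` tokens."""

    def __init__(self, rate: float, capacity: float, now: float = 0.0) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity      # start full so an initial burst is allowed
        self.last = now             # time of the previous refill

    def allow(self, now: float, cost: float = 1.0) -> bool:
        # Add the tokens earned since the last call, never above capacity.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

With a rate of 1 token per second and a capacity of 5, a client can burst 5 requests immediately and then sustain roughly one request per second: capacity bounds the burst, and rate bounds the long-run average.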

2. Leaky Bucket Algorithm

- The leaky bucket algorithm models a bucket with a hole in the bottom: requests are added as they arrive, at whatever rate, and leak out (are processed) at a constant, controlled rate.

- Incoming requests are added to the bucket, and if the bucket exceeds a certain capacity, excess requests are either delayed or rejected.

- This algorithm enforces a steady maximum processing rate: bursts in incoming traffic are absorbed by the bucket, up to its capacity, and smoothed into a constant outflow.
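A leaky bucket is often implemented as a bounded queue drained by a worker at a fixed rate. The sketch below expresses that queue as a schedule: each accepted request is assigned a release time at least `1 / leak_rate` seconds after the previous one, and a request is rejected when `capacity` requests are already waiting. This scheduling formulation, the class name, and the choice to reject rather than delay when the bucket is full are assumptions made for illustration.

```python
from collections import deque
from typing import Optional


class LeakyBucketQueue:
    """Leaky bucket expressed as a release schedule (hypothetical sketch)."""

    def __init__(self, capacity: int, leak_rate: float) -> None:
        self.capacity = capacity              # max requests allowed to wait
        self.interval = 1.0 / leak_rate       # fixed gap between releases
        self.pending = deque()                # release times not yet reached
        self.last_release = float("-inf")     # most recently scheduled release

    def submit(self, now: float) -> Optional[float]:
        """Return the time the request will be processed, or None if rejected."""
        # Requests whose release time has arrived have leaked out of the bucket.
        while self.pending and self.pending[0] <= now:
            self.pending.popleft()
        if len(self.pending) >= self.capacity:
            return None  # bucket full: reject
        # Leak at a constant rate: never release sooner than one interval
        # after the previous release.
        release = max(now, self.last_release + self.interval)
        self.last_release = release
        self.pending.append(release)
        return release
```

With a capacity of 3 and a leak rate of 2 per second, four requests arriving together at t=0 are released at 0.0, 0.5, 1.0, and 1.5 seconds (the first leaves immediately, so three wait) and a fifth is rejected. However bursty the input, the output never exceeds two requests per second.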


3. Fixed Window Counting Algorithm

- The fixed window counting algorithm tracks the number of requests within a fixed time window (e.g., one minute, one hour).

- Requests exceeding a predefined threshold within the window are rejected or delayed until the window resets.

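- This algorithm provides a straightforward way to limit the rate of requests, but it handles bursts at window boundaries poorly: a client can send a full quota at the end of one window and another full quota at the start of the next, briefly passing up to twice the intended rate.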

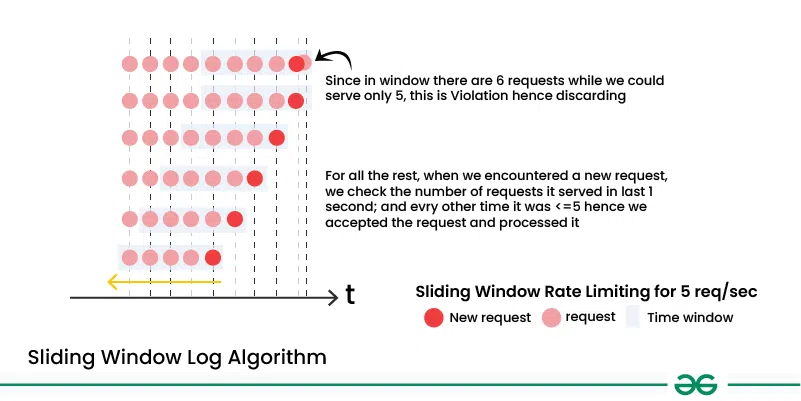
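A minimal fixed window counter, assuming a single process and an explicit timestamp in seconds (`FixedWindowCounter` is a hypothetical name), also makes the boundary weakness concrete.

```python
class FixedWindowCounter:
    """Fixed window counter (sketch): at most `limit` requests per window,
    with the count reset at each window boundary."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self.window_index = None   # which window the count belongs to
        self.count = 0

    def allow(self, now: float) -> bool:
        index = int(now // self.window)
        if index != self.window_index:
            # A new window has started: reset the counter.
            self.window_index = index
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False
```

With a limit of 100 per minute, 100 requests at 59.9 s and another 100 at 60.0 s are all accepted, so 200 requests pass within a tenth of a second.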

4. Sliding Window Log Algorithm

- The sliding window log algorithm maintains a log of timestamps for each request received.

- Timestamps older than the window length (for example, 60 seconds) are removed from the log, and new requests are added.

- The rate of requests is calculated based on the number of requests within the sliding window.

- This algorithm allows for more precise rate limiting and better handling of bursts of traffic compared to fixed window counting, at the cost of storing a timestamp for every request in the window.
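A sliding window log can be sketched with a double-ended queue of timestamps. This version records only accepted requests (some implementations also log rejected ones, which penalizes clients that keep retrying); the class name and the explicit timestamps are assumptions for illustration.

```python
from collections import deque


class SlidingWindowLog:
    """Sliding window log (sketch): keeps one timestamp per accepted request
    and allows a new one only if fewer than `limit` fall in the last `window`."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self.log = deque()  # timestamps of accepted requests, oldest first

    def allow(self, now: float) -> bool:
        # Evict timestamps that have slid out of the window (now - window, now].
        while self.log and self.log[0] <= now - self.window:
            self.log.popleft()
        if len(self.log) < self.limit:
            self.log.append(now)
            return True
        return False
```

With a limit of 100 per minute, 100 requests at 59.9 s are accepted, but another 100 at 60.0 s are all rejected because the earlier timestamps are still inside the 60-second window. The cost is memory: up to `limit` timestamps per client.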

Client-Side vs. Server-Side Rate Limiting

Below are the differences between Client-Side and Server-Side Rate Limiting:

| Aspect | Client-Side Rate Limiting | Server-Side Rate Limiting |
|---|---|---|
| Location of Enforcement | Enforced by the client application or client library. | Enforced by the server infrastructure or API gateway. |
| Request Control | Requests are throttled or delayed before reaching the server. | Requests are processed by the server, which decides whether to accept, reject, or delay them based on predefined rules. |
| Flexibility | Limited flexibility as it relies on client-side implementation and configuration. | Offers greater flexibility as rate limiting rules can be centrally managed and adjusted on the server side without client-side changes. |
| Security | Less secure as it can be bypassed or manipulated by clients. | More secure as enforcement is centralized and controlled by the server, reducing the risk of abuse or exploitation. |
| Scalability | May impact client performance and scalability, especially in distributed environments with a large number of clients. | Better scalability as rate limiting can be applied globally across all clients and adjusted dynamically based on server load and resource availability. |
| Client Dependency | Relies on client compliance and may be circumvented by malicious or misbehaving clients. | Independent of client behavior and can be enforced consistently across all clients, regardless of their implementation. |
| Overhead | Potential overhead on client resources and network bandwidth due to client-side processing and communication. | Minimal overhead on clients as rate limiting is handled server-side, reducing the burden on client resources and bandwidth. |
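Client-side throttling is usually a cooperative measure layered on top of server-side enforcement. A minimal sketch, assuming a client that wants to stay under a known request rate: it sleeps just long enough to keep calls at least `1 / max_per_second` seconds apart. The clock and sleep functions are injectable so the behaviour can be tested without real waiting; `ClientThrottle` is a hypothetical name.

```python
import time


class ClientThrottle:
    """Client-side throttle (sketch): spaces outgoing calls at least
    1 / max_per_second seconds apart by sleeping before each call."""

    def __init__(self, max_per_second: float, clock=time.monotonic,
                 sleep=time.sleep) -> None:
        self.interval = 1.0 / max_per_second
        self.clock = clock                    # returns the current time in seconds
        self.sleep = sleep                    # blocks for the given number of seconds
        self.next_allowed = float("-inf")     # earliest time the next call may go out

    def wait(self) -> None:
        now = self.clock()
        if now < self.next_allowed:
            # Too soon since the previous call: wait out the remaining gap.
            self.sleep(self.next_allowed - now)
            now = self.next_allowed
        self.next_allowed = now + self.interval
```

A client would call `wait()` before each outgoing request. Because nothing forces a client to do this, the server must still enforce its own limits, as the comparison above notes.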

Rate Limiting in Different Layers of the System

Below is how Rate Limiting can be applied at different layers of the system:

1. Application Layer

Rate limiting at the application layer involves implementing rate limiting logic within the application code itself. It applies to all requests processed by the application, regardless of their source or destination.

- Provides fine-grained control over rate limiting rules and allows for customization based on application-specific requirements.

- Useful for enforcing application-level rate limits, such as limiting the number of requests per user or per session.
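At the application layer, per-user limits are typically surfaced to clients as an HTTP 429 Too Many Requests response (defined in RFC 6585), often with a Retry-After header telling the client how many seconds to wait. The framework-agnostic sketch below assumes a fixed window per user and returns a hypothetical `(status, headers)` pair that a real web framework handler would translate into its own response object.

```python
import math


class PerUserLimiter:
    """Application-layer limiter (hypothetical sketch): `limit` requests per
    user per fixed window, answering with HTTP 429 and a Retry-After header."""

    def __init__(self, limit: int, window: int) -> None:
        self.limit = limit
        self.window = window
        self.counters = {}  # user_id -> (window_index, count)

    def check(self, user_id: str, now: float) -> tuple[int, dict]:
        index = int(now // self.window)
        window_index, count = self.counters.get(user_id, (index, 0))
        if window_index != index:
            count = 0  # the user's previous window has expired
        if count < self.limit:
            self.counters[user_id] = (index, count + 1)
            return 200, {}
        # Seconds until the current window ends, rounded up to a whole second.
        retry_after = math.ceil((index + 1) * self.window - now)
        return 429, {"Retry-After": str(retry_after)}
```

For a limit of 2 per minute, a user's third request at 30 s gets 429 with Retry-After: 30, while other users are unaffected.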

2. API Gateway Layer

Rate limiting at the API gateway layer involves configuring rate limiting rules within the API gateway infrastructure. It applies to incoming requests received by the API gateway before they are forwarded to downstream services.

- Offers centralized control over rate limiting policies for all APIs and services exposed through the gateway.

- Suitable for enforcing global rate limits across multiple APIs, controlling access to public APIs, and protecting backend services from excessive traffic.

3. Service Layer

Rate limiting at the service layer involves implementing rate limiting logic within individual services or microservices. It applies to requests processed by each service independently, allowing for fine-grained control and customization.

- Each service can enforce rate limits tailored to its specific workload and resource requirements, enabling better scalability and resource utilization.

- Effective for controlling traffic to individual services, managing resource consumption, and preventing service degradation or failure due to overload.

4. Database Layer

Rate limiting at the database layer involves controlling the rate of database queries or transactions. It applies to database operations performed by the application or services, such as read and write operations.

- Helps prevent database overload and ensures efficient resource utilization by limiting the rate of database access.

- Useful for protecting the database from excessive load, preventing performance degradation, and ensuring fair resource allocation among multiple clients or services.

Benefits of Rate Limiting

Below are the benefits of Rate Limiting:

- Stability: Rate limiting helps maintain system stability by preventing overload situations. By controlling the rate of incoming requests, rate limiting ensures that resources are not exhausted, and the system can operate within its capacity limits.

- Scalability: Rate limiting facilitates better scalability by regulating the flow of traffic so that sudden spikes in demand do not overwhelm backend resources. By enforcing rate limits, systems can handle increased load more predictably and scale resources accordingly.

- Security: Rate limiting enhances security by protecting against denial-of-service (DoS) attacks, brute-force attacks, and other forms of abuse. By limiting the rate of incoming requests, rate limiting mitigates the impact of malicious actors and helps maintain system integrity.

- Fair Resource Allocation: Rate limiting ensures fair resource allocation by preventing certain users or clients from monopolizing resources. By enforcing rate limits, systems can distribute resources more evenly and prioritize serving requests from a diverse user base.

- Optimized Performance: Rate limiting helps optimize performance by preventing excessive resource consumption and improving response times. By controlling the rate of incoming requests, rate limiting reduces the likelihood of bottlenecks and ensures smoother operation.

Challenges of Rate Limiting

Below are the challenges of Rate Limiting:

- Latency: Rate limiting can introduce latency, especially when requests are delayed or throttled due to rate limits being exceeded. This delay can increase response times for clients, leading to a degraded user experience.

- False Positives: Rate limiting may inadvertently block legitimate requests if rate limits are too restrictive or if there are issues with the rate limiting logic. False positives can result in service disruptions and frustration for users.

- Configuration Complexity: Configuring rate limiting rules and thresholds can be complex, especially in systems with diverse traffic patterns and use cases. Finding the right balance between strictness and flexibility requires careful consideration and testing.

- Scalability Challenges: Rate limiting mechanisms themselves can become a bottleneck under high load if not properly scaled. Ensuring that rate limiting systems can handle increasing traffic volumes while maintaining performance is a significant challenge.

- Adaptability: Static rate limiting rules may not always adapt well to changing traffic patterns or evolving threats. Dynamic and adaptive rate limiting mechanisms are needed to effectively respond to fluctuations in demand and emerging security risks.
