R Squared | Coefficient of Determination

Last Updated :

03 Apr, 2024

R-squared, or the coefficient of determination, is a statistical measure used in regression analysis. Regression deals with dependent and independent variables, where a change in an independent variable is associated with a change in the dependent variable.

The R-squared coefficient represents the proportion of variation in the dependent variable (y) that is accounted for by the regression line, compared to the variation explained by the mean of y. Essentially, it measures how much more accurately the regression line predicts each point’s value compared to simply using the average value of y.

In this article, we shall discuss R squared and its formula in detail. We will also learn about the interpretation of r squared, adjusted r squared, beta R squared, etc.

What is R-squared?

The coefficient of determination, or R-squared, is used to explain how much of the variation in a dependent variable is accounted for by variation in the independent variable. In other words, it expresses the extent to which the variance of one variable is explained by the other.

R-squared Meaning

R-squared, also known as the coefficient of determination, is a statistical measure that represents the proportion of the variance for a dependent variable that’s explained by one or more independent variables in a regression model. In simpler terms, it shows how well the data fit a regression line or curve.

The coefficient of determination, represented by R², is determined using the following formula:

R² = 1 – (RSS/TSS)

Where,

- R² represents the required R-squared value,

- RSS represents the residual sum of squares, and

- TSS represents the total sum of squares.

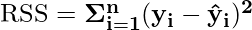

If we are not provided with the residual sum of squares (RSS), it can be calculated as follows:

RSS = ∑(yi – ŷi)²

Where,

- yi is the ith observation, and

- ŷi is the estimated value of yi.
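The calculation can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `r_squared` and the sample values are made up for the example, and TSS is assumed to follow the standard definition ∑(yi – ȳ)², where ȳ is the mean of the observed values.

```python
def r_squared(y, y_hat):
    """Return (rss, tss, r2) for observed values y and predictions y_hat."""
    if len(y) != len(y_hat) or len(y) == 0:
        raise ValueError("y and y_hat must be non-empty and of equal length")
    y_mean = sum(y) / len(y)
    # Residual sum of squares: squared gaps between observations and predictions.
    rss = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))
    # Total sum of squares: squared gaps between observations and their mean.
    tss = sum((yi - y_mean) ** 2 for yi in y)
    if tss == 0:
        raise ValueError("TSS is zero: y is constant, so R^2 is undefined")
    return rss, tss, 1 - rss / tss


# Hypothetical observations and model predictions, for illustration only.
y = [3.0, 5.0, 7.0, 9.0]
y_hat = [2.5, 5.5, 6.5, 9.5]
rss, tss, r2 = r_squared(y, y_hat)
print(f"RSS = {rss:.2f}, TSS = {tss:.2f}, R^2 = {r2:.2f}")
# prints: RSS = 1.00, TSS = 20.00, R^2 = 0.95
```

Here the predictions miss each observation by 0.5, so RSS is small relative to TSS and R² is close to 1.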

For simple linear regression with a single independent variable, the coefficient of determination can also be calculated using another formula, which is given by:

R² = r²

Where r represents the correlation coefficient and is calculated using the following formula:

![Rendered by QuickLaTeX.com \bold{r = \frac{n\Sigma(xy)-\Sigma x \Sigma y}{\sqrt{[n\Sigma x^2 - (\Sigma x)^2][n\Sigma y^2 - (\Sigma y)^2]}}}](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-a8da03530051a20b96e14f500b1bcc78_l3.png)

Where,

- n is the total observations,

- x is the first variable, and

- y is the second variable.
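As a rough check that the two formulas agree for a straight-line fit, the sketch below computes r from the sums in the formula above and compares r² with 1 – (RSS/TSS) for a least-squares line. The helper names `pearson_r` and `fit_line` and the data are assumptions made for this example only.

```python
import math


def pearson_r(x, y):
    """Correlation coefficient from the raw sums used in the formula above."""
    n = len(x)
    sum_x, sum_y = sum(x), sum(y)
    sum_xy = sum(xi * yi for xi, yi in zip(x, y))
    sum_x2 = sum(xi * xi for xi in x)
    sum_y2 = sum(yi * yi for yi in y)
    numerator = n * sum_xy - sum_x * sum_y
    denominator = math.sqrt((n * sum_x2 - sum_x ** 2) * (n * sum_y2 - sum_y ** 2))
    return numerator / denominator


def fit_line(x, y):
    """Least-squares slope and intercept for y = intercept + slope * x."""
    n = len(x)
    x_mean, y_mean = sum(x) / n, sum(y) / n
    s_xy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_mean) ** 2 for xi in x)
    slope = s_xy / s_xx
    return slope, y_mean - slope * x_mean


# Hypothetical data, for illustration only.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]

r = pearson_r(x, y)
slope, intercept = fit_line(x, y)
y_hat = [intercept + slope * xi for xi in x]
y_mean = sum(y) / len(y)
rss = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))
tss = sum((yi - y_mean) ** 2 for yi in y)
r2_from_fit = 1 - rss / tss

print(f"r^2 = {r ** 2:.4f}, 1 - RSS/TSS = {r2_from_fit:.4f}")
```

Both routes give the same value for this data set. The equality relies on the line being fitted by least squares with an intercept; it is not expected to hold for arbitrary predictions.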

R-Squared Value Interpretation

The R-squared value tells us how well a regression model accounts for the variation in the dependent variable. An R-squared value of 20% means that the model explains 20% of the variability of the dependent variable around its mean; the remaining 80% is unexplained. A higher value of R-squared therefore means that more of the variability is taken into account by the model. A large value of R-squared is often good, but it may also point to problems with the regression model, such as overfitting. Similarly, a low value of R-squared may sometimes be obtained even for a well-fit regression model, for example when the data are inherently noisy. Thus we need to consider other factors as well when judging a regression model.

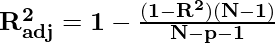

What is Adjusted R Squared?

The R-squared value never decreases when more independent variables are added to a model, even if those variables have no real relationship with the dependent variable. A model can therefore look better simply because it has more predictors. Adjusted R-squared compensates for this by penalizing the number of predictors, which makes it more useful for spotting overfitting when comparing models. Its formula is discussed as follows:

Adjusted R-square formula is given as follows:

Adjusted R² = 1 – [(1 – R²)(N – 1) / (N – p – 1)]

Where,

- R² is the ordinary R-squared value,

- N is the sample size, and

- p is the number of predictors.
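The effect of the number of predictors can be seen with a small sketch. It assumes the standard adjusted R-squared formula, 1 – (1 – R²)(N – 1)/(N – p – 1); the function name `adjusted_r_squared` and the input values are hypothetical.

```python
def adjusted_r_squared(r2, n, p):
    """Adjusted R^2 for an ordinary R^2, sample size n and p predictors."""
    if n - p - 1 <= 0:
        raise ValueError("sample size must exceed the number of predictors plus one")
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


# Hypothetical case: the same R^2 of 0.80 on 30 observations,
# obtained once with 2 predictors and once with 10 predictors.
few = adjusted_r_squared(0.80, n=30, p=2)
many = adjusted_r_squared(0.80, n=30, p=10)
print(f"p = 2:  adjusted R^2 = {few:.4f}")
print(f"p = 10: adjusted R^2 = {many:.4f}")
```

With the same ordinary R², the model that needed more predictors receives the lower adjusted value, which is the penalty described above.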

Beta R-Square

R-squared and adjusted R-squared measure how much of the variability in the value of a variable is explained, whereas beta R-square is used to measure how large the variation in the value of the variable is.

R-Squared vs Adjusted R-Squared

The key differences between R-Squared and Adjusted R-Squared, are listed as follows:

| Parameter | R-squared | Adjusted R-squared |
|---|---|---|
| Meaning | It counts every independent variable in the model when calculating the coefficient of determination, whether or not the variable is useful. | It penalizes each added independent variable, so it rises only when a new variable genuinely improves the fit. |
| Use | It is suited to simple linear regression, or to comparing models with the same number of predictors. | It is used for simple as well as multiple regression, especially to compare models with different numbers of predictors. |
| Range of Value | For a least-squares fit with an intercept, its value ranges from 0 to 1. | Its value is never greater than R-squared and may be negative if the value of R-squared is very near to zero. |

Advantages and Disadvantages of the R Squared Value

The R-squared value has several advantages and disadvantages, some of which are listed as follows:

Advantages of the R Squared Value

The coefficient of determination or R square has the following advantages:

- It helps to predict the value of one variable according to the other.

- It helps to check the accuracy of making predictions from a given data model.

- It helps to predict the degree of association among various variables.

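- For a least-squares fit with an intercept, the coefficient of determination lies in the range [0,1].

- If the coefficient of determination is 0, then the model explains none of the variation, and the value of a variable cannot be predicted from the value of the second variable any better than by using the mean. This does not by itself prove that the variables are independent; it only shows that the fitted model captures no relationship.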

- If the coefficient of determination is 1, then the variables are completely dependent and the value of a variable can be accurately predicted from the value of the second variable.

- Any other value tells the extent of the determination of the value of the variable. The higher the value, the higher is the accuracy of the determination.

Disadvantages of the R Squared Value

The coefficient of determination has the following disadvantages:

- It does not reveal whether the model is biased; a model can have a high R-squared value and still fit the data systematically badly.

- On its own, it does not indicate how reliable the model's predictions are.

- The value of R square can be low even for a very good model.

R Squared Solved Examples

Problem 1: Calculate the coefficient of determination from the following data:

Solution:

To calculate the coefficient of determination from the above data we need to calculate ∑x, ∑y, ∑(xy), ∑x², ∑y², (∑x)², (∑y)².

| X | Y | XY | X² | Y² |
|---|---|---|---|---|
| 1.2 | 0 | 0 | 1.44 | 0 |
| 1 | 5 | 5 | 1 | 25 |
| 2 | 2 | 4 | 4 | 4 |
| 3 | 0 | 0 | 9 | 0 |
| ∑x = 7.2 | ∑y = 7 | ∑xy = 9 | ∑x² = 15.44 | ∑y² = 29 |

(∑x)² = 51.84 and (∑y)² = 49 and n = 4

Using ![Rendered by QuickLaTeX.com r = \frac{n\Sigma(xy)-\Sigma x \Sigma y}{\sqrt{[n\Sigma x^2 - (\Sigma x)^2][n\Sigma y^2 - (\Sigma y)^2]}}](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-933491ce892646f391bdead6bff11685_l3.png)

⇒ ![Rendered by QuickLaTeX.com r = \frac{4(9)-(7.2*7)}{\sqrt{[4(15.44) - 51.84][4(29) - 49]}}](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-33e06a0854a0f48c5716a411f1babf5e_l3.png)

⇒ ![Rendered by QuickLaTeX.com r = \frac{36-50.4}{\sqrt{[61.76 - 51.84][116 - 49]}}](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-5deb72bd9e9c8e904daa35e537c77b34_l3.png)

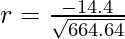

⇒ ![Rendered by QuickLaTeX.com r = \frac{-14.4}{\sqrt{[9.92][67]}}](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-c009682be9dd971abd401ab2654669f4_l3.png)

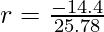

⇒ r = -14.4 / √664.64

⇒ r = -14.4 / 25.78

⇒ r ≈ -0.5586

Thus, R² = r² = (-0.5586)²

⇒ R² ≈ 0.312 ≈ 31.2%

Problem 2: Calculate the coefficient of determination from the following data:

Solution:

To calculate the coefficient of determination from the above data we need to calculate ∑x, ∑y, ∑(xy), ∑x², ∑y², (∑x)², (∑y)².

| X | Y | XY | X² | Y² |
|---|---|---|---|---|
| 1 | 1 | 1 | 1 | 1 |
| 2 | 2 | 4 | 4 | 4 |
| 3 | 3 | 9 | 9 | 9 |
| ∑x = 6 | ∑y = 6 | ∑xy = 14 | ∑x² = 14 | ∑y² = 14 |

(∑x)² = 36 and (∑y)² = 36 and n = 3

Using ![Rendered by QuickLaTeX.com r = \frac{n\Sigma(xy)-\Sigma x \Sigma y}{\sqrt{[n\Sigma x^2 - (\Sigma x)^2][n\Sigma y^2 - (\Sigma y)^2]}}](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-933491ce892646f391bdead6bff11685_l3.png)

⇒ ![Rendered by QuickLaTeX.com r = \frac{3(14)-(6*6)}{\sqrt{[3(14) - 36][3(14) - 36]}}](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-635f20232bf80964e2ed4a8784836d73_l3.png)

⇒ ![Rendered by QuickLaTeX.com r = \frac{42-36}{\sqrt{[6][6]}}](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-9989b094712e34b30177a6a25ea85feb_l3.png)

⇒ r = 6 / √36

⇒ r = 6 / 6

⇒ r = 1

Thus, R² = r² = (1)²

⇒ R² = 1 = 100%

Problem 3: Calculate the coefficient of determination from the following data:

Solution:

To calculate the coefficient of determination from the above data we need to calculate ∑x, ∑y, ∑(xy), ∑x², ∑y², (∑x)², (∑y)².

| X | Y | XY | X² | Y² |
|---|---|---|---|---|
| 1 | 1 | 1 | 1 | 1 |
| 2 | 4 | 8 | 4 | 16 |
| 3 | 6 | 18 | 9 | 36 |
| ∑x = 6 | ∑y = 11 | ∑xy = 27 | ∑x² = 14 | ∑y² = 53 |

(∑x)² = 36 and (∑y)² = 121 and n = 3

Using ![Rendered by QuickLaTeX.com r = \frac{n\Sigma(xy)-\Sigma x \Sigma y}{\sqrt{[n\Sigma x^2 - (\Sigma x)^2][n\Sigma y^2 - (\Sigma y)^2]}}](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-933491ce892646f391bdead6bff11685_l3.png)

⇒ ![Rendered by QuickLaTeX.com r = \frac{3(27)-(6*11)}{\sqrt{[3(14) - 36][3(53) - 121]}}](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-3d868333b1d74a6ec2050f5e6e9f51cd_l3.png)

⇒ ![Rendered by QuickLaTeX.com r = \frac{81-66}{\sqrt{[42-36][159-121]}}](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-7a129351c2c95cc646c678468388365f_l3.png)

⇒ r = 15 / √(6 × 38)

⇒ r = 15 / √228

⇒ r ≈ 0.9934

Thus, R² = r² = (0.9934)² ≈ 0.987 ≈ 98.7%
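The arithmetic in Problems 1 to 3 can be cross-checked by feeding the tabulated sums into the correlation formula. The snippet below is only a verification sketch; the function name `r_from_sums` is made up for this example, and the inputs are the values n, ∑x, ∑y, ∑xy, ∑x² and ∑y² from each problem's table.

```python
import math


def r_from_sums(n, sum_x, sum_y, sum_xy, sum_x2, sum_y2):
    """Correlation coefficient computed directly from the tabulated sums."""
    numerator = n * sum_xy - sum_x * sum_y
    denominator = math.sqrt((n * sum_x2 - sum_x ** 2) * (n * sum_y2 - sum_y ** 2))
    return numerator / denominator


# (n, sum x, sum y, sum xy, sum x^2, sum y^2) as listed in each problem.
problems = {
    "Problem 1": (4, 7.2, 7, 9, 15.44, 29),
    "Problem 2": (3, 6, 6, 14, 14, 14),
    "Problem 3": (3, 6, 11, 27, 14, 53),
}

results = {}
for name, sums in problems.items():
    r = r_from_sums(*sums)
    results[name] = (r, r ** 2)
    print(f"{name}: r = {r:.4f}, R^2 = {r ** 2:.4f}")
```

Note that r is negative in Problem 1, since y tends to fall as x rises, while R² is positive because it is the square of r.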

Problem 4: Calculate the coefficient of determination if RSS = 1.5 and TSS = 1.9.

Solution:

Given RSS = 1.5, TSS = 1.9

Using R² = 1 – (RSS/TSS)

⇒ R² = 1 – (1.5/1.9)

⇒ R² = 1 – 0.7895

⇒ R² = 0.2105 ≈ 21%

Problem 5: Calculate the coefficient of determination if RSS = 1.479 and TSS = 1.89734.

Solution:

Given RSS = 1.479, TSS = 1.89734

Using R² = 1 – (RSS/TSS)

⇒ R² = 1 – (1.479/1.89734)

⇒ R² = 1 – 0.7795

⇒ R² = 0.2205 ≈ 22%
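For Problems 4 and 5, the formula can be evaluated directly from the given RSS and TSS. The short sketch below does this; the function name `r_squared_from_sums` is an assumption made for this example. The point to watch is that the ratio RSS/TSS is the unexplained share, and R² is what remains after subtracting it from 1.

```python
def r_squared_from_sums(rss, tss):
    """R^2 = 1 - RSS/TSS for a given residual and total sum of squares."""
    if tss <= 0:
        raise ValueError("TSS must be positive")
    return 1 - rss / tss


r2_problem_4 = r_squared_from_sums(1.5, 1.9)
r2_problem_5 = r_squared_from_sums(1.479, 1.89734)
print(f"Problem 4: RSS/TSS = {1.5 / 1.9:.4f}, R^2 = {r2_problem_4:.4f}")
print(f"Problem 5: RSS/TSS = {1.479 / 1.89734:.4f}, R^2 = {r2_problem_5:.4f}")
```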

FAQs on R Squared

What is R Squared Formula?

Formula for R Squared is given as follows:

R² = 1 – (RSS/TSS)

Where,

- R² represents the required R-squared value,

- RSS represents the residual sum of squares, and

- TSS represents the total sum of squares.

What Is Considered As Good Value Of R Squared?

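There is no universal threshold, because what counts as a good R-squared value depends on the field and the purpose of the model. As a rough guide, a value between 50% and 70% is often considered good.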

What Is The Significance Of R Squared Value?

The coefficient of determination helps us identify how closely two variables are related to each other when fitted with a regression line.

Can R Squared Value Be Negative Or Zero?

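When R-squared is computed as r², or from a least-squares fit with an intercept, it cannot be negative, but it may be zero. When it is computed as 1 – (RSS/TSS) for a model that fits worse than simply predicting the mean of y, for example a regression forced through the origin or a model evaluated on new data, the value can be negative.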

What is R squared in regression?

R-squared in regression is a statistical measure that quantifies the proportion of the variance in the dependent variable that is predictable from the independent variable(s). In the context of a regression model, it provides a numerical indicator of how well the model fits the observed data.
