How to import structtype in Scala?

Last Updated :

01 Apr, 2024

Scala stands for scalable language. It was developed in 2003 by Martin Odersky. It is an object-oriented language that provides support for a functional programming approach as well. Everything in scala is an object e.g. – values like 1,2 can invoke functions like toString(). Scala is a statically typed language although unlike other statically typed languages like C, C++, or Java, it doesn’t require type information while writing the code. The type verification is done at the compile time. Static typing allows to building of safe systems by default. Smart built-in checks and actionable error messages, combined with thread-safe data structures and collections, prevent many tricky bugs before the program first runs.

Understanding StructType and its need

StructType is used to define the schema in Spark. The schema is used to define the structure of the data stored in data frames and datasets. StructType is the base of the schema in dataframe. It represents a structure that will have data in it. In simpler terms, it defines a table that will have some columns in it. The StructType will always have a StructField inside it. The StructField simply stands for the column in the table. The StructField will then have a type for the data stored in that column like Array, String, nested struct, and other types.

Here is an example of StructType and its fields in Scala. In the following code, we have implemented a function named printSchemaTree to print the schema in a formatted manner. No function in Spark prints the schema in a tree-like manner unless it is used in a dataframe. Since the subject of this article is structtype and its import, the custom function has been implemented to avoid the use of other data structures.

Scala

def printSchemaTree(structType: StructType, indent: String = ""): Unit = {

structType.foreach { field =>

val fieldType = field.dataType

val fieldTypeName = if (fieldType.isInstanceOf[StructType]) "StructType" else fieldType.typeName

val nullable = if (field.nullable) "true" else "false"

println(s"$indent- ${field.name}: $fieldTypeName ($nullable)")

if (fieldType.isInstanceOf[StructType]) {

printSchemaTree(fieldType.asInstanceOf[StructType], indent + " ") // Indent for nested structures

}

}

}

val schema = StructType(Seq(

StructField("Class", StringType, true),

StructField("Count", IntegerType, true),

StructField("Students", StructType(Seq(

StructField("Name", StringType, true),

StructField("Id", IntegerType, true)

)), true)

))

printSchemaTree(schema, " ")

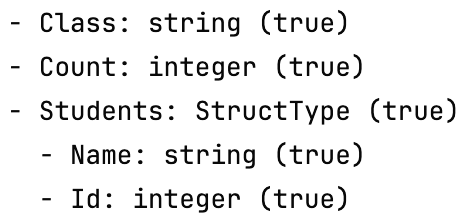

Output:

Output

As seen in the pic above the StructType defines the base of the schema and can be nested inside the StructFields as well.

Importing StructType in Scala

The only command to import modules in scala is the import statement. However, there are various methods of using the import command to import the StructType class. Let us see each of them.

Method 1: Importing the entire package

We can import the entire types package into our code and use all the types inside it to build our schema. Let us import the entire package in the following example:

Scala

import org.apache.spark.sql.types._

val schema = StructType(Seq(

StructField("Name", StringType, true),

StructField("Id", IntegerType, true)

))

printSchemaTree(schema, " ")

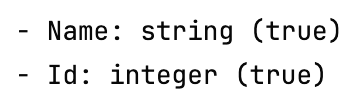

Output:

Output

In the above, the entire package was imported.

Method 2: Importing only specific types

We can specify only the types we require to be imported from the types package. This will help to reduce the memory requirement and help in building concise code. Let us follow this method in the following example:

Scala

import org.apache.spark.sql.types.{StringType, IntegerType, StructField, StructType}

val schema = StructType(Seq(

StructField("Type", StringType, true),

StructField("Serial No.", IntegerType, true)

))

printSchemaTree(schema, " ")

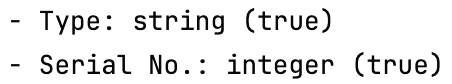

Output:

Output

Here we just imported the required types.

Method 3: Alias the name while importing

The names might seem too lengthy when we have to define alot of schema. To avoid using such long names we can alias them to acronyms or other short names that suit the needs. Let us alias the names while importing in the following example:

Scala

import org.apache.spark.sql.types.{StringType => st, IntegerType => it, StructField => sf, StructType => sty}

val schema = sty(Seq(

sf("Case", st, true),

sf("Serial No.", it, true)

))

printSchemaTree(schema, " ")

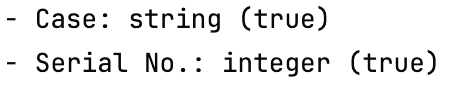

Output:

Output

Here we used alias names for the classes to define the schema.

Conclusion

In the end, we have seen that we can import the StructType only by using the import statement. However, there are various ways of importing the StructType using the import statement only. The first is to directly import the entire package and then use the names of the types we need. The second is to specify only the types we need in the import statement. This method results in less imports, thus reducing the memory requirement and increasing execution speed. The last is to use alias to avoid name collisions or the use of lengthy names.

Share your thoughts in the comments

Please Login to comment...