Code optimization is performed in the fifth phase of compilation. Many optimization techniques exist, and the order in which instructions execute also affects performance. Global Code Scheduling is the process of rearranging the execution order of code across basic blocks to improve performance. It analyzes the different code segments and determines the dependencies among them.

The goals of Global Code Scheduling are:

- Optimize the execution order

- Improve performance

- Reduce idle time

- Maximize the utilization of resources

There are various techniques to perform Global Code Scheduling:

- Primitive Code Motion

- Upward Code Motion

- Downward Code Motion

Primitive Code Motion

Primitive Code Motion is one of the techniques used to improve performance in Global Code Scheduling. As the name suggests, it moves code from one place to another. Code segments are moved out of basic blocks or loops, which reduces repeated work such as memory accesses and thus improves performance.

Goals of Primitive code motion:

- Eliminates repeated calculations

- Reduces redundant computations

- Improves performance by reducing the number of operations

Primitive Code Motion can be done in 3 ways as follows:

Code Hoisting: In this technique, a code segment is moved from inside a loop to outside the loop. It is done when the result of the code segment does not change from one iteration to the next. It reduces loop overhead and redundant computation.

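C++

Before hoisting:

    #include <iostream>
    using namespace std;

    int main() {
        int x, y, b, a;
        x = 1, y = 2, a = 0;
        while (a < 10) {
            b = x + y;   // same value in every iteration
            cout << a;
            a++;
        }
    }

After hoisting:

    #include <iostream>
    using namespace std;

    int main() {
        int x, y, b, a;
        x = 1, y = 2, a = 0;
        b = x + y;       // hoisted: computed once, before the loop
        while (a < 10) {
            cout << a;
            a++;
        }
    }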

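Code Sinking: In this technique, a code segment is moved later in the program, closer to the point where its result is used, for example into a loop or a branch. This avoids doing work on paths that never need it. The example below moves the statement of the second loop into the body of the first loop (a transformation usually called loop fusion), which removes the overhead of one loop. Note that the merged version prints 0112233445 instead of 0123412345, so a compiler may apply it only when the order of these operations does not matter.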

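C++

Before:

    #include <iostream>
    using namespace std;

    int main() {
        int a, b;
        a = 0, b = 1;
        for (int i = 0; i < 5; i++) {
            cout << a++;
        }
        for (int i = 0; i < 5; i++) {
            cout << b++;
        }
    }

After:

    #include <iostream>
    using namespace std;

    int main() {
        int a, b;
        a = 0, b = 1;
        for (int i = 0; i < 5; i++) {
            cout << a++;
            cout << b++;   // moved in from the second loop
        }
    }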

Memory Access Optimization: In this technique, memory read or write operations are moved out of loops or blocks. This eliminates redundant memory accesses and improves cache utilization.
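As a rough illustration of moving memory operations out of a loop, the sketch below keeps a running sum in a local variable instead of updating it through a reference on every iteration. The function names `sumBefore` and `sumAfter` are made up for this example, and the rewrite assumes that `total` does not refer to an element of `data`; a compiler has to prove that before it can do the same.

```cpp
#include <cstddef>
#include <vector>

// Before: `total` is read and written through the reference on every iteration.
void sumBefore(const std::vector<int>& data, int& total) {
    for (std::size_t i = 0; i < data.size(); ++i) {
        total += data[i];
    }
}

// After: the running sum lives in a local variable (likely a register).
// Memory behind `total` is read once before the loop and written once after it.
// Assumption: `total` does not alias any element of `data`.
void sumAfter(const std::vector<int>& data, int& total) {
    int local = total;                 // single read, moved above the loop
    for (std::size_t i = 0; i < data.size(); ++i) {
        local += data[i];
    }
    total = local;                     // single write, moved below the loop
}
```

Both functions leave the same value in `total` under the stated assumption; only the number of memory accesses to `total` differs.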

Upward Code Motion

Upward Code Motion is another technique used to improve performance in Global Code Scheduling. Here, a code segment is moved out of a block or loop to a position above it, so that it executes earlier and, in the case of a loop, only once.

Goals of Upward Code Motion:

- Reduces computational overheads

- Eliminates repeated calculations

- Improves performance

Steps involved in Upward Code Motion:

- Identification of loop invariant code

- Moving the invariant code upward

- Updating variable dependencies accordingly

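C++

Before:

    #include <iostream>
    using namespace std;

    void sum(int a, int b) {
        int ans = 0;
        for (int i = 0; i < 4; i++) {
            ans += a + b;   // a + b is recomputed in every iteration
        }
        cout << ans;
    }

After:

    #include <iostream>
    using namespace std;

    void sum(int a, int b) {
        int z = a + b;      // moved above the loop, computed once
        int ans = 0;
        for (int i = 0; i < 4; i++) {
            ans += z;
        }
        cout << ans;
    }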

Downward Code Motion

This is another technique used to improve performance in Global Code Scheduling. Downward Code Motion is the mirror image of Upward Code Motion: a code segment is moved down to a later position, closer to where its result is used, for example from before a loop to after it or into one branch of a conditional. It must be applied in a way that does not add new dependencies or change the values that other statements read.

Goals of Downward Code Motion:

- Reduces computational overheads

- Eliminates repeated calculations

- Improves performance

Steps involved in Downward Code Motion:

1. Identification of code whose result is not needed until later

2. Moving that code downward

3. Updating variable dependencies accordingly

C++

Before:

    #include <iostream>
    using namespace std;

    void add(int a, int b) {
        int ans = a + b;        // not used inside the loop
        int total = 0;
        for (int i = 0; i < 100; i++) {
            total += i;
        }
        cout << ans + total;
    }

After:

    #include <iostream>
    using namespace std;

    void add(int a, int b) {
        int total = 0;
        for (int i = 0; i < 100; i++) {
            total += i;
        }
        int ans = a + b;        // moved down, next to its only use
        cout << ans + total;
    }

Updating Data Dependencies

Code motion techniques help improve the performance of the code, but they can also introduce errors. To prevent this, data dependencies must be updated after each move, which makes it possible to check that the moved code still behaves correctly.

Steps involved:

Step 1: Analyze the code segment that is being moved and note all the variables that depend on it.

Step 2: If a code segment is moved from outside a block to inside it, some variables or functions may become unavailable because the scope of the code has changed. In that case, new declarations must be introduced inside the block.

Step 3: Update the references made by the moved code. Replace references to variables that were defined outside the block with references to the new declarations inside the block.

Step 4: If the code segment includes assignments to variables, make sure they are updated accordingly. For example, every later use of an assigned variable must still read the value written by the moved assignment.

Step 5: Finally, verify that the moved code gives the correct result and does not produce any error.
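The dependency check behind these steps can be sketched as follows. The `Stmt` record and the `canSwap` function are hypothetical names for this illustration, and each statement is reduced to the set of variables it writes and the set it reads, which is a simplification of what a real compiler tracks.

```cpp
#include <set>
#include <string>

// Hypothetical statement summary: variables written (defs) and read (uses).
struct Stmt {
    std::set<std::string> defs;
    std::set<std::string> uses;
};

// True if the two sets share at least one variable name.
static bool overlaps(const std::set<std::string>& a,
                     const std::set<std::string>& b) {
    for (const auto& name : a) {
        if (b.count(name) != 0) return true;
    }
    return false;
}

// Returns true if `first` and `second` can be swapped without changing
// the values that either statement reads or leaves behind.
bool canSwap(const Stmt& first, const Stmt& second) {
    bool readAfterWrite  = overlaps(first.defs, second.uses);
    bool writeAfterRead  = overlaps(first.uses, second.defs);
    bool writeAfterWrite = overlaps(first.defs, second.defs);
    return !(readAfterWrite || writeAfterRead || writeAfterWrite);
}
```

For instance, `z = a + b` followed by `ans += z` cannot be swapped because the second statement reads `z`, while `z = a + b` and `k = a` touch no common written variable and can be reordered freely.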

Global Scheduling Algorithms

Global Scheduling Algorithms are used to improve performance by reducing the execution time or maximizing resource utilization.

1. Trace Scheduling:

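In the trace scheduling algorithm, instructions are rearranged along traces, which are frequently executed paths through the program. Each trace is scheduled as if it were one long basic block, and compensation code is added where other paths enter or leave the trace so that they still compute correct results. This improves the performance of the most common paths.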

Goals of Trace Scheduling Algorithm:

- Minimize branch misprediction

- Maximize instruction-level parallelism

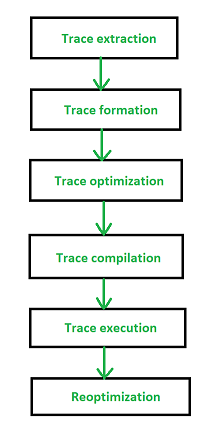

Steps in Trace Scheduling
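The first step, picking a trace, could look roughly like the sketch below. It assumes a control-flow graph with profiled edge probabilities and simply follows the most likely edge from the entry block. The `Edge` record and `selectTrace` function are hypothetical, and real trace selection uses more refined heuristics.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical control-flow edge with a profiled probability.
struct Edge {
    int target;
    double probability;
};

// succ[b] lists the outgoing edges of basic block b.
// Follows the most likely edge starting at `entry` and stops at a block
// without successors or before revisiting a block (so loops terminate).
std::vector<int> selectTrace(const std::vector<std::vector<Edge>>& succ,
                             int entry) {
    assert(entry >= 0 && static_cast<std::size_t>(entry) < succ.size());
    std::vector<bool> visited(succ.size(), false);
    std::vector<int> trace;
    int block = entry;
    while (block != -1 && !visited[block]) {
        visited[block] = true;
        trace.push_back(block);
        int best = -1;
        double bestProbability = -1.0;
        for (const Edge& e : succ[block]) {
            if (e.probability > bestProbability) {
                bestProbability = e.probability;
                best = e.target;
            }
        }
        block = best;   // -1 when the block has no successors
    }
    return trace;
}
```

On a diamond-shaped graph where block 0 branches to block 1 with probability 0.9 and to block 2 with probability 0.1, and both rejoin at block 3, the selected trace is 0, 1, 3.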

2. List Scheduling:

In the list scheduling algorithm, the overall execution time of a program is reduced by rescheduling instructions. In each cycle, the scheduler picks from a list of ready instructions, that is, instructions whose operands are available, subject to the resource constraints of the machine.

Advantages of List Scheduling Algorithm:

- It is a flexible algorithm

- Can handle multiple constraints like resource constraints and instruction latencies

- Used in modern compilers

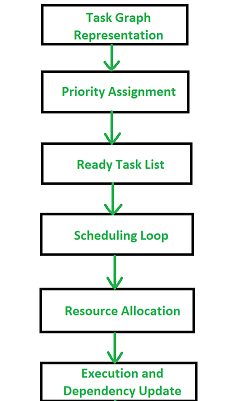

Steps in List scheduling
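A minimal sketch of a list scheduler is shown below. The `Instr` record, the `listSchedule` function, and the priority rule (longest latency first) are assumptions made for this example; production compilers typically use critical-path based priorities and model individual functional units rather than a single issue width.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical instruction record for this sketch.
struct Instr {
    int latency;              // cycles until the result is available
    std::vector<int> preds;   // indices of instructions this one depends on
};

// Returns the issue cycle chosen for each instruction.
// Assumes the dependence graph is acyclic.
std::vector<int> listSchedule(const std::vector<Instr>& code, int width) {
    assert(width >= 1);
    const int n = static_cast<int>(code.size());
    std::vector<int> issue(n, -1);
    int scheduled = 0;
    for (int cycle = 0; scheduled < n; ++cycle) {
        // Build the ready list: unscheduled instructions whose
        // predecessors have all finished by this cycle.
        std::vector<int> ready;
        for (int i = 0; i < n; ++i) {
            if (issue[i] != -1) continue;
            bool operandsReady = true;
            for (int p : code[i].preds) {
                if (issue[p] == -1 || issue[p] + code[p].latency > cycle) {
                    operandsReady = false;
                }
            }
            if (operandsReady) ready.push_back(i);
        }
        // Priority heuristic (an assumption): longest latency first.
        std::stable_sort(ready.begin(), ready.end(), [&](int a, int b) {
            return code[a].latency > code[b].latency;
        });
        // Resource constraint: issue at most `width` instructions per cycle.
        const std::size_t limit = static_cast<std::size_t>(width);
        for (std::size_t k = 0; k < ready.size() && k < limit; ++k) {
            issue[ready[k]] = cycle;
            ++scheduled;
        }
    }
    return issue;
}
```

With two independent loads of latency 3, an add that needs both loads, and an unrelated multiply of latency 1 on a machine that issues one instruction per cycle, the scheduler issues the loads in cycles 0 and 1, fills cycle 2 with the multiply, and issues the add in cycle 4 once the second load has completed.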

3. Modulo Scheduling:

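Modulo scheduling is a form of software pipelining that exploits parallelism within loops. It starts a new loop iteration at a fixed interval of cycles, called the initiation interval, so that instructions from different iterations execute in parallel. The same schedule is reused for every iteration, and resource usage is checked modulo the initiation interval, which gives the algorithm its name. It is simplest to apply when the iteration count is known in advance.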

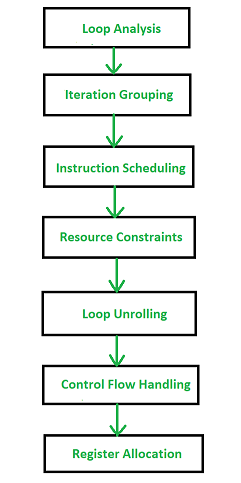

Steps in Modulo scheduling
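A modulo scheduler has to decide how many cycles separate the starts of consecutive iterations (commonly called the initiation interval). One well-known lower bound comes from resources alone: if one iteration needs a resource more often than the machine can supply it per cycle, iterations cannot start any faster. The sketch below computes only that bound. The function name `resourceMinII` and its parameters are hypothetical, and the second bound, which comes from dependence cycles between iterations, is ignored here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// uses[r]:  how many times one loop iteration needs resource r.
// units[r]: how many copies of resource r the machine has (must be >= 1).
// Returns the smallest initiation interval that the resources allow.
int resourceMinII(const std::vector<int>& uses, const std::vector<int>& units) {
    assert(uses.size() == units.size());
    int interval = 1;
    for (std::size_t r = 0; r < uses.size(); ++r) {
        assert(units[r] >= 1);
        // Ceiling division: cycles needed to serve all uses of resource r.
        int needed = (uses[r] + units[r] - 1) / units[r];
        interval = std::max(interval, needed);
    }
    return interval;
}
```

For example, a loop body with 5 memory operations on a machine with 2 memory ports cannot start a new iteration more often than every 3 cycles, whatever the other resources look like.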

4. Software Pipelining:

In this algorithm, loop iterations are overlapped to improve performance and reduce execution time. Instructions from several iterations of the same loop execute simultaneously. The main aim of this algorithm is to turn loop-level parallelism into instruction-level parallelism.

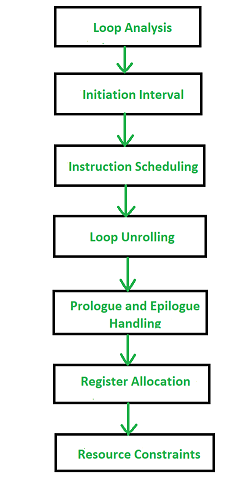

Steps in software pipelining
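The overlap of iterations can be imitated by hand in source code, as in the sketch below. It splits each iteration into a load stage and a compute-and-store stage, then places the load of iteration i+1 next to the compute stage of iteration i. The function names are invented for this example, and a real compiler performs this transformation on machine instructions rather than on C++ source.

```cpp
#include <cstddef>
#include <vector>

// Original loop: each iteration loads, then multiplies and stores.
std::vector<int> scaleSimple(const std::vector<int>& in) {
    std::vector<int> out(in.size());
    for (std::size_t i = 0; i < in.size(); ++i) {
        int x = in[i];       // stage 1: load
        out[i] = x * 2;      // stage 2: compute and store
    }
    return out;
}

// Pipelined by hand: stage 1 of iteration i+1 overlaps stage 2 of iteration i.
std::vector<int> scalePipelined(const std::vector<int>& in) {
    std::vector<int> out(in.size());
    if (in.empty()) return out;
    int x = in[0];                       // prologue: first load
    for (std::size_t i = 0; i + 1 < in.size(); ++i) {
        int next = in[i + 1];            // load for iteration i+1
        out[i] = x * 2;                  // compute and store for iteration i
        x = next;
    }
    out[in.size() - 1] = x * 2;          // epilogue: last compute and store
    return out;
}
```

Both functions return the same vector. In the pipelined version the load and the multiply inside the loop body belong to different iterations and do not depend on each other, which is what gives the hardware room to run them in parallel.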

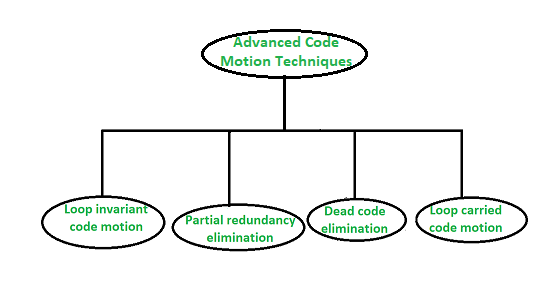

Advanced Code Motion Techniques:

In the code optimization phase of a compiler, the main aim is to increase the overall performance of the code. Code motion techniques are one way to achieve this.

Code motion techniques

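Loop Invariant Code Motion: A statement whose result is the same in every iteration is moved out of the loop.

C++

Before:

    #include <iostream>
    using namespace std;

    int main() {
        int sum = 0;
        for (int i = 0; i < 5; i++) {
            sum = 5;     // assigns the same value in every iteration
            cout << i;
        }
    }

After:

    #include <iostream>
    using namespace std;

    int main() {
        int sum = 0;
        sum = 5;         // moved out; safe because the loop runs at least once
        for (int i = 0; i < 5; i++) {
            cout << i;
        }
    }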

Partial Redundancy Elimination

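C++

Before:

    #include <iostream>
    using namespace std;

    void prod(int a, int b) {
        int ans = 0;
        for (int i = 0; i < 4; i++) {
            ans += a * b;   // a * b is recomputed in every iteration
        }
        cout << ans;
    }

After:

    #include <iostream>
    using namespace std;

    void prod(int a, int b) {
        int z = a * b;      // computed once
        int ans = 0;
        for (int i = 0; i < 4; i++) {
            ans += z;
        }
        cout << ans;
    }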

In this example, we remove the partial redundancy by computing a*b only one time instead of computing it repeatedly inside a loop.

Dead Code Elimination: Dead code is code that is unreachable or has no effect on the program. Dead code elimination removes it.

C++

Before:

    int sum(int a, int b) {
        int result = 0;
        if (a > 0) {
            result = a + b;
        }
        return result;
        {
            // ... (never executed: it follows the return statement)
        }
    }

After:

    int sum(int a, int b) {
        int result = 0;
        if (a > 0) {
            result = a + b;
        }
        return result;
    }

In this example, the block after the return statement is removed because it is an unreachable part of the code.

Loop Carried Code Motion: It works in the same way as loop invariant code motion. The loop invariant part of the loop is moved out of the loop to reduce the number of computations.

Interaction with Dynamic Schedulers

Dynamic schedulers are used in processors. They reorder instructions at run time and help maximize the utilization of resources.

Goals of Dynamic Scheduler

- Improves efficiency of the processor

- Exploits instruction-level parallelism

When compiled code runs on a processor with a dynamic scheduler, instruction dependencies must be analyzed correctly. A dynamic scheduler can determine a correct execution order only when its dependency information is accurate. This analysis covers data dependencies, control dependencies and resource dependencies. Dynamic schedulers work by assigning priorities to instructions so that they execute in a certain order, and they make decisions based on the availability of resources. They also resolve resource conflicts when several instructions compete for the same resource. Code optimization in the compiler in turn helps the dynamic scheduler improve instruction scheduling and resource utilization.
