Float Precision in Programming

Last Updated: 03 May, 2024

Float Precision refers to the way in which floating-point numbers, or floats, are represented and the degree of accuracy they maintain. Floating-point representation is a method used to store real numbers within the limits of finite memory in computers, maintaining a balance between range and precision.

Floating-Point Representation in Programming:

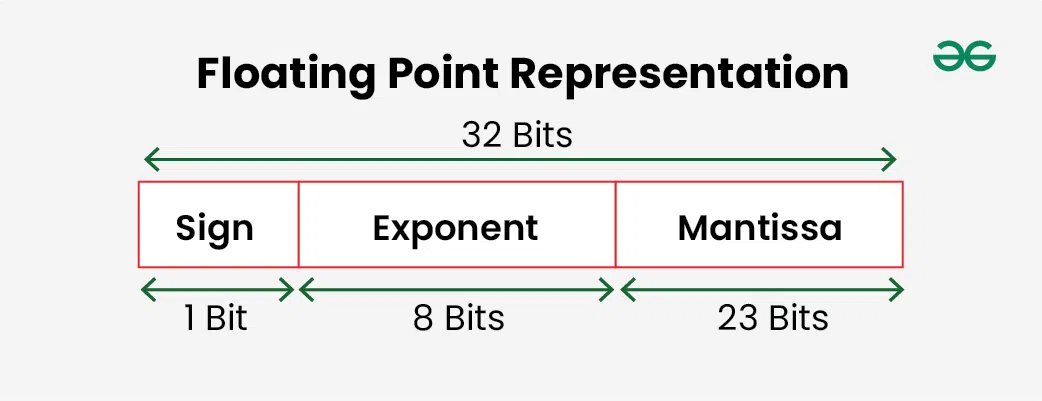

Floating-point numbers are represented in computers using a format specified by the IEEE 754 standard. This format includes three parts: the sign, the exponent, and the significand (or mantissa). The combination of these allows for the representation of a wide range of numbers, but not without limitations.
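To make the three fields concrete, here is a minimal Python sketch that reads a float's raw bits with the standard struct module. It assumes the binary64 (double-precision) layout, which is what a Python float uses: 1 sign bit, 11 exponent bits stored with a bias of 1023, and 52 stored fraction bits, with an implicit leading 1 for normal numbers. The helper name decompose is hypothetical.

```python
import struct

def decompose(x: float):
    """Hypothetical helper: split a double into its raw IEEE 754 fields."""
    # Reinterpret the 8 bytes of the double as an unsigned 64-bit integer (big-endian).
    bits = struct.unpack(">Q", struct.pack(">d", x))[0]
    sign = bits >> 63                    # 1 bit
    exponent = (bits >> 52) & 0x7FF      # 11 bits, stored with a bias of 1023
    fraction = bits & ((1 << 52) - 1)    # 52 stored significand bits
    return sign, exponent, fraction

sign, exponent, fraction = decompose(-6.5)
# -6.5 = -1.625 * 2**2: sign 1, unbiased exponent 2, significand 1 + fraction / 2**52.
print(sign, exponent - 1023, 1 + fraction / 2**52)   # 1 2 1.625
```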

The precision of a floating-point type is the number of significant decimal digits it can represent without losing information about the stored value. It depends on both the size of the type (double has greater precision than float) and the particular value being stored (some values can be represented more precisely than others).

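A float (IEEE 754 single precision, with a 24-bit significand) has 6 to 9 significant decimal digits of precision. Any decimal number with up to 6 significant digits (within the float's normal range) survives a round trip: converting it to float and printing the result to 6 significant digits gives back the original. A number with 7 to 9 significant digits may or may not survive, depending on the specific value. Numbers that need more than 9 significant digits cannot be reliably stored in a float and require a double, which provides roughly 15 to 17 significant digits. Even a double does not guarantee exact storage: a value such as 0.1 has no finite binary expansion and is always rounded.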
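The round-trip behaviour described above can be checked from Python, whose own float is a double. This sketch uses the struct module's "f" format, which packs a value into IEEE 754 binary32, to round a number to single precision and read it back; the helper name to_float32 is hypothetical.

```python
import struct

def to_float32(x: float) -> float:
    """Hypothetical helper: round a double to the nearest single-precision value."""
    # "f" packs into 4 bytes (IEEE 754 binary32); unpacking widens back to a double.
    return struct.unpack("f", struct.pack("f", x))[0]

# 6 significant digits survive the trip through single precision...
print(f"{to_float32(123.456):.6g}")   # 123.456
# ...but 9 may not: floats near this value are 8 apart, so it rounds to 123456792.
print(to_float32(123456789.0))        # 123456792.0
# A double stores the same integer exactly.
print(float(123456789))               # 123456789.0
```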

Float Precision Issues in Programming:

Because each floating-point number occupies a fixed number of bits, only finitely many real numbers can be represented exactly; every other value is rounded to the nearest representable one. This leads to rounding errors and to problems at the extremes of the range: results that are too large overflow to infinity, and results that are too close to zero lose precision or underflow to zero. Operations on floating-point numbers can accumulate these errors, leading to significant discrepancies in calculations.
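A short sketch of the range limits, assuming CPython with IEEE 754 doubles: multiplying the largest finite double overflows to infinity, and halving the smallest positive (subnormal) double underflows to zero.

```python
import sys

largest = sys.float_info.max     # about 1.8e308 for IEEE 754 doubles
tiny = 5e-324                    # smallest positive subnormal double

print(largest * 10)              # inf: the product exceeds the representable range
print(tiny / 2)                  # 0.0: the quotient is rounded down to zero
```

Python is not uniform here: some operations, such as `**` and `math.exp`, raise `OverflowError` instead of returning `inf`.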

Understanding Float Precision in Different Programming Languages:

Understanding float precision across different programming languages is crucial due to the ubiquitous nature of floating-point arithmetic in computing. Each language may handle floating-point numbers slightly differently, but all are subject to the limitations imposed by the IEEE 754 standard. Here, we explore how float precision manifests in Python, Java, and JavaScript, offering code examples and explanations to highlight the nuances in each.

Float Precision in Python:

In Python, floating-point numbers are represented according to the IEEE 754 double-precision format. This can lead to precision issues, especially when dealing with decimal fractions that cannot be precisely represented in binary.

Example:

```python
a = 0.1
b = 0.2
total = a + b  # named "total" to avoid shadowing the built-in sum()
print("Sum:", total)
```

Output:

Sum: 0.30000000000000004

Explanation: Despite expecting the sum of 0.1 and 0.2 to be exactly 0.3, the output reveals a slight discrepancy. Neither 0.1 nor 0.2 has a finite binary expansion, so each is stored as the nearest double, and their rounded sum lands on a different double from the one nearest to 0.3. This illustrates the inherent precision limitations of floating-point arithmetic.
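Python's decimal module can reveal exactly which binary value was stored. Converting a float to Decimal (rather than building the Decimal from a string) copies the stored double exactly, so this sketch shows what 0.1 really holds and that the computed sum sits above the double nearest to 0.3. sys.float_info reports the parameters of the platform's float type.

```python
import sys
from decimal import Decimal

# Exact decimal value of the double nearest to 0.1.
print(Decimal(0.1))
# 0.1000000000000000055511151231257827021181583404541015625

# The computed sum is a slightly larger double than the one nearest to 0.3.
print(Decimal(0.1 + 0.2) > Decimal(0.3))              # True

# Significand bits and reliably representable decimal digits of a double.
print(sys.float_info.mant_dig, sys.float_info.dig)    # 53 15
```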

Float Precision in Java:

Java, like Python, adheres to the IEEE 754 standard for floating-point arithmetic. Java provides two primary data types for floating-point numbers: float (single precision) and double (double precision).

Example:

```java
public class FloatPrecision {
    public static void main(String[] args) {
        double a = 0.1;
        double b = 0.2;
        double sum = a + b;
        System.out.println("Sum: " + sum); // Outputs: Sum: 0.30000000000000004
    }
}
```

Output:

Sum: 0.30000000000000004

Explanation: Java’s double data type also cannot precisely represent the sum of 0.1 and 0.2 due to the binary floating-point representation.
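Java's float type is IEEE 754 single precision. Python has no built-in single-precision scalar, so this sketch stands in for Java's float with the array module's "f" typecode, assuming (as on mainstream platforms) that the C float behind it is IEEE 754 binary32. It is not Java code; it compares how far 0.1 lands from its true value in each format, using exact Fraction arithmetic.

```python
from array import array
from fractions import Fraction

# array typecode "f" holds a C float; assumed here to be IEEE 754 binary32,
# the same format as Java's float.
single = array("f", [0.1])[0]   # 0.1 rounded to single precision, read back as a double
double = 0.1                    # 0.1 rounded to double precision

exact = Fraction(1, 10)
single_error = abs(Fraction(single) - exact)
double_error = abs(Fraction(double) - exact)

print(float(single_error))      # roughly 1.5e-09
print(float(double_error))      # roughly 5.6e-18
```

Both formats miss 0.1, but the single-precision error is about 2**28 times larger, which is why double is the usual default.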

Float Precision in JavaScript:

JavaScript represents ordinary numeric values with a single Number type, which follows the IEEE 754 double-precision format (BigInt is a separate type for arbitrary-size integers). It therefore shares the same floating-point precision issues as Python and Java.

Example:

```javascript
let a = 0.1;
let b = 0.2;
let sum = a + b;
console.log('Sum:', sum); // Outputs: Sum: 0.30000000000000004
```

Output:

Sum: 0.30000000000000004

Explanation: JavaScript also demonstrates the floating-point precision issue: the sum of 0.1 and 0.2 does not equal 0.3 exactly. This is a common source of confusion and bugs in web development, especially in applications requiring high numerical accuracy.
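Because every JavaScript Number is a double with a 53-bit significand, integers are exact only up to 2**53; JavaScript exposes 2**53 - 1 as Number.MAX_SAFE_INTEGER. Python floats are doubles too, so the same limit can be sketched in Python (the name max_safe is just an illustrative variable).

```python
# Mirrors JavaScript's Number.MAX_SAFE_INTEGER, 2**53 - 1.
max_safe = 2**53 - 1

print(float(max_safe) == max_safe)          # True: still exact as a double
print(float(2**53 + 1) == float(2**53))     # True: 2**53 + 1 rounds to 2**53
```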

Despite the differences in syntax and some language-specific optimizations, Python, Java, and JavaScript all exhibit the characteristic limitations of floating-point arithmetic as defined by the IEEE 754 standard. Understanding these limitations is essential for developers working across different languages, as it impacts everything from financial calculations to scientific computing. The key takeaway is to be mindful of precision issues and employ strategies such as using libraries for arbitrary precision arithmetic or applying suitable rounding techniques when exact decimal representation is required.
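As a minimal sketch of the rounding strategy, using only Python's built-in round() and format specifications, the sum from the earlier examples can be rounded before it is compared or displayed. The two-decimal precision is an arbitrary choice for illustration.

```python
total = 0.1 + 0.2

# Round to the precision the application needs before comparing...
print(round(total, 2) == 0.3)   # True
# ...or only when formatting for display, keeping full precision internally.
print(f"{total:.2f}")           # 0.30

# Caveat: round() operates on the stored binary value. 2.675 is stored as
# slightly less than 2.675, so it rounds down rather than up.
print(round(2.675, 2))          # 2.67
```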

Common Errors of Float Precision in Programming:

- Assuming Equality: Comparing floating-point results for exact equality can fail even when the values are mathematically equal, as the 0.1 + 0.2 examples show.

- Accumulation of Errors: Repeated operations can accumulate rounding errors, significantly affecting results.

- Loss of Precision: Adding or subtracting numbers of vastly different magnitudes can discard the smaller number's low-order digits, or the smaller number entirely.
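Each of these errors can be reproduced in a few lines of Python. The sketch accumulates with an explicit loop on purpose: since Python 3.12 the built-in sum() uses compensated summation for floats, which would hide the effect. math.fsum is shown as the standard library's exact-rounding alternative.

```python
import math

# Assuming equality: equal mathematically, but not as doubles.
print(0.1 + 0.2 == 0.3)          # False

# Accumulation of errors: every += rounds, and the small errors add up.
running = 0.0
for _ in range(10):
    running += 0.1
print(running)                   # 0.9999999999999999

# math.fsum tracks the low-order bits lost by each addition.
values = [0.1] * 10
print(math.fsum(values))         # 1.0

# Loss of precision: near 1e16 adjacent doubles are 2 apart,
# so adding 1.0 rounds straight back to 1e16.
big = 1e16
print((big + 1.0) - big)         # 0.0
```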

Best Practices for Handling Float Precision in Programming:

- Use of Arbitrary Precision Libraries: Libraries such as Python’s decimal module perform base-10 arithmetic with user-configurable precision, so decimal fractions like 0.1 can be represented exactly.

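- Rounding Operations: Rounding results to the precision the application actually needs (for example, two decimal places for currency) before displaying or comparing them hides small representation errors, although it cannot remove them.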

- Comparison with a Tolerance: Instead of testing for exact equality, check whether two floating-point numbers differ by less than a small tolerance, either relative to their magnitude or, for values near zero, absolute.
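The tolerance and decimal-library practices can be sketched with Python's standard library: math.isclose for comparisons and the decimal module for base-10 arithmetic. The absolute tolerance of 1e-12 and the precision of 50 digits are illustrative values, not recommendations.

```python
import math
from decimal import Decimal, localcontext

a = 0.1 + 0.2

# Tolerance comparison: math.isclose uses a relative tolerance (rel_tol=1e-09) by default.
print(math.isclose(a, 0.3))                         # True
# A relative tolerance is useless near zero, so supply an absolute one there.
print(math.isclose(a - 0.3, 0.0))                   # False
print(math.isclose(a - 0.3, 0.0, abs_tol=1e-12))    # True

# Decimal arithmetic: construct values from strings so they start out exact.
print(Decimal("0.1") + Decimal("0.2") == Decimal("0.3"))   # True

# Precision (significant digits) is set per context; localcontext leaves the global one untouched.
with localcontext() as ctx:
    ctx.prec = 50
    print(Decimal(1) / Decimal(7))
```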

Conclusion

While floating-point numbers are a fundamental part of computing, handling them requires an understanding of their limitations and precision issues. By employing best practices and understanding the nuances in different programming languages, developers can mitigate the risks associated with float precision and ensure more accurate and reliable calculations.
