Bernoulli Naive Bayes

Last Updated: 25 Oct, 2023

Supervised learning is a subcategory of machine learning in which models are trained on labeled datasets. It has two main categories: classification and regression. Classification is used to predict discrete labels, while regression is used to predict continuous values.

Naive Bayes

The Naive Bayes algorithm is a supervised machine learning algorithm. It uses Bayes' theorem to compute the posterior probability of a class given the observed evidence. Naive Bayes is used for classification and assumes that all the features are independent of one another given the class. Bayes' theorem calculates the conditional probability of an event, given that another event has already occurred.

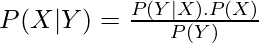

Let X and Y be two events. Then the probability of X occurring, given that Y has already occurred, is given by

$$P(X \mid Y) = \frac{P(Y \mid X)\,P(X)}{P(Y)}$$

Here, P(X|Y) is the posterior probability of X given Y.

P(Y|X) is the likelihood, the probability of Y given that X has occurred.

P(X) is the prior probability of X.

P(Y) is the evidence, the overall probability of Y.
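As a quick numerical check of the formula, the sketch below plugs hypothetical probabilities into Bayes' theorem. The numbers and the function name `bayes_posterior` are illustrative assumptions, not values from the email dataset; the evidence P(Y) is computed with the law of total probability.

```python
def bayes_posterior(likelihood: float, prior: float, evidence: float) -> float:
    """Return P(X|Y) = P(Y|X) * P(X) / P(Y)."""
    return likelihood * prior / evidence

# Hypothetical numbers (illustrative only, not taken from the dataset):
# X = "email is spam", Y = "email contains the word 'offer'"
p_x = 0.3              # prior P(X)
p_y_given_x = 0.6      # likelihood P(Y|X)
p_y_given_not_x = 0.1  # P(Y | not X)

# Evidence P(Y) via the law of total probability
p_y = p_y_given_x * p_x + p_y_given_not_x * (1 - p_x)

posterior = bayes_posterior(p_y_given_x, p_x, p_y)
print(round(p_y, 2), round(posterior, 2))  # 0.25 0.72
```

Observing the word raises the probability of spam from the prior 0.3 to a posterior of 0.72.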

Bernoulli distribution

The Bernoulli distribution is a discrete probability distribution that models a single trial with exactly two outcomes: success or failure. The random variable takes the value 1 (success) with probability p and the value 0 (failure) with probability 1 - p.

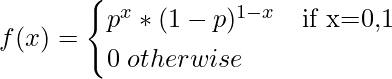

Its probability mass function is:

$$f(x) = p^{x}(1-p)^{1-x}, \quad x \in \{0, 1\}$$

Substituting x = 1 into this function gives f(1) = p, and substituting x = 0 gives f(0) = 1 - p. Here, p denotes the probability of success.
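A minimal sketch of the Bernoulli probability mass function in Python; the function name `bernoulli_pmf` and the value p = 0.7 are chosen only for illustration.

```python
def bernoulli_pmf(x: int, p: float) -> float:
    """Bernoulli probability mass function f(x) = p**x * (1 - p)**(1 - x)."""
    if x not in (0, 1):
        raise ValueError("x must be 0 or 1")
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    return p ** x * (1 - p) ** (1 - x)

p = 0.7
print(bernoulli_pmf(1, p))            # 0.7
print(round(bernoulli_pmf(0, p), 10))  # 0.3
```

The two values always sum to 1, since the variable can only take the values 0 and 1.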

Bernoulli Naive Bayes

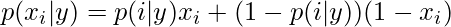

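Bernoulli Naive Bayes is a variant of the Naive Bayes algorithm designed for binary features, such as 'Yes' or 'No', '1' or '0', and 'True' or 'False'. As in all Naive Bayes models, the features are assumed to be independent of one another given the class. Bernoulli Naive Bayes is commonly used for spam detection, text classification and sentiment analysis, where each feature records whether a certain word is present in a document or not. The decision rule of Bernoulli NB is based on the following likelihood for each feature:

$$P(x_i \mid y) = P(i \mid y)\,x_i + \bigl(1 - P(i \mid y)\bigr)(1 - x_i)$$

Here, P(x_i | y) is the conditional probability of feature x_i given class y.

P(i | y) is the probability that feature i is present (equal to 1) in class y.

x_i holds a binary value, either 0 or 1. When x_i = 0 the rule contributes 1 - P(i | y), so the absence of a feature also counts as evidence.

The predicted class is the one that maximizes the prior multiplied by these likelihoods:

$$\hat{y} = \arg\max_{y} P(y) \prod_{i} P(x_i \mid y)$$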
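The following pure-Python sketch applies the decision rule directly so that the arithmetic is visible. It is not scikit-learn's implementation: the helper names `fit_bernoulli_nb` and `predict_bernoulli_nb` and the toy feature vectors are hypothetical, and it assumes Laplace smoothing with alpha = 1 (the default `alpha` of scikit-learn's `BernoulliNB`) so that no probability is exactly 0. Scores are summed in log space to avoid underflow when there are many features, using P(x_i|y) = P(i|y) x_i + (1 - P(i|y))(1 - x_i).

```python
import math
from collections import defaultdict

def fit_bernoulli_nb(X, y, alpha=1.0):
    """Estimate class priors P(y) and feature probabilities P(i|y).

    X is a list of binary (0/1) feature vectors and y a list of class labels.
    alpha is Laplace smoothing (an assumption of this sketch).
    """
    n_features = len(X[0])
    class_counts = defaultdict(int)
    feature_counts = defaultdict(lambda: [0] * n_features)
    for row, label in zip(X, y):
        class_counts[label] += 1
        for i, value in enumerate(row):
            feature_counts[label][i] += value
    priors = {c: n / len(y) for c, n in class_counts.items()}
    feature_probs = {
        c: [(feature_counts[c][i] + alpha) / (class_counts[c] + 2 * alpha)
            for i in range(n_features)]
        for c in class_counts
    }
    return priors, feature_probs

def predict_bernoulli_nb(x, priors, feature_probs):
    """Return the class maximizing log P(y) + sum_i log P(x_i|y)."""
    best_class, best_score = None, -math.inf
    for c, prior in priors.items():
        score = math.log(prior)
        for xi, p in zip(x, feature_probs[c]):
            # P(x_i|y) = P(i|y) * x_i + (1 - P(i|y)) * (1 - x_i)
            score += math.log(p * xi + (1 - p) * (1 - xi))
        if score > best_score:
            best_class, best_score = c, score
    return best_class

# Hypothetical features: [contains "free", contains "meeting", contains "winner"]
X = [[1, 0, 1], [1, 0, 0], [0, 1, 0], [0, 1, 0], [0, 0, 0]]
y = [1, 1, 0, 0, 0]  # 1 = spam, 0 = ham

priors, feature_probs = fit_bernoulli_nb(X, y)
print(predict_bernoulli_nb([1, 0, 1], priors, feature_probs))  # 1
print(predict_bernoulli_nb([0, 1, 0], priors, feature_probs))  # 0
```

For the first email, the unnormalized spam score is 0.4 × 0.75 × 0.75 × 0.5 = 0.1125 versus 0.6 × 0.2 × 0.4 × 0.2 = 0.0096 for ham, so it is classified as spam.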

Implementing Bernoulli Naive Bayes

To perform classification with Bernoulli Naive Bayes, we use an email dataset.

The email dataset comprises four columns: Unnamed: 0, label, label_num and text. The label is either ham or spam, and label_num encodes ham as 0 and spam as 1. The text column contains the body of the email. The dataset contains 5171 emails. The dataset can be downloaded from here.

```python
import numpy as np
import pandas as pd
from sklearn.naive_bayes import BernoulliNB
from sklearn.feature_extraction.text import CountVectorizer
```

In the above code, we import the necessary libraries: pandas, numpy and sklearn (scikit-learn). BernoulliNB is provided by scikit-learn's sklearn.naive_bayes module.

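```python
# Load the dataset (the /content path is where the file was uploaded, e.g. in Google Colab)
df = pd.read_csv("/content/spam_ham_dataset.csv")
print(df.shape)
print(df.columns)

# Drop the unnamed column, which is not needed for classification
df = df.drop(['Unnamed: 0'], axis=1)
```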

In the above code, we perform a quick inspection of the data: we read the CSV file, print its shape and column names, and drop the unnecessary Unnamed: 0 column.

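```python
# Raw email bodies (features) and numeric labels (0 = ham, 1 = spam)
x = df["text"].values
y = df["label_num"].values

# Convert the text into a sparse document-term matrix of word counts
cv = CountVectorizer()
x = cv.fit_transform(x)
```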

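Because the classifier is trained on text, we first convert the text into a numeric matrix with CountVectorizer, since the model requires numerical input. Each row of the matrix is an email and each column counts how often one vocabulary word appears in it. The binarize=0.0 setting of BernoulliNB then maps every nonzero count to 1, so the model only sees whether each word is present.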
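To illustrate what the vectorizer and the binarize=0.0 setting produce, here is a simplified pure-Python approximation. It only mimics CountVectorizer's default behavior (lowercasing, tokens of two or more word characters, alphabetically ordered vocabulary) and is not its actual implementation; the helper names `build_vocabulary`, `count_matrix` and `binarize` and the two example sentences are hypothetical. In scikit-learn itself, `CountVectorizer(binary=True)` is another way to obtain 0/1 features directly.

```python
import re

# Approximates CountVectorizer's default token pattern (tokens of 2+ word characters)
TOKEN_PATTERN = re.compile(r"(?u)\b\w\w+\b")

def build_vocabulary(docs):
    """Map each lowercased token to a column index, in alphabetical order."""
    tokens = {t for doc in docs for t in TOKEN_PATTERN.findall(doc.lower())}
    return {t: i for i, t in enumerate(sorted(tokens))}

def count_matrix(docs, vocab):
    """One row per document, one column per vocabulary word, holding counts."""
    rows = []
    for doc in docs:
        row = [0] * len(vocab)
        for t in TOKEN_PATTERN.findall(doc.lower()):
            if t in vocab:
                row[vocab[t]] += 1
        rows.append(row)
    return rows

def binarize(matrix, threshold=0.0):
    """Values above the threshold become 1 (word present), all others 0."""
    return [[1 if v > threshold else 0 for v in row] for row in matrix]

docs = ["Free offer, free prize!", "Meeting moved to Monday"]
vocab = build_vocabulary(docs)
counts = count_matrix(docs, vocab)
print(vocab)             # {'free': 0, 'meeting': 1, 'monday': 2, 'moved': 3, 'offer': 4, 'prize': 5, 'to': 6}
print(counts)            # [[2, 0, 0, 0, 1, 1, 0], [0, 1, 1, 1, 0, 0, 1]]
print(binarize(counts))  # [[1, 0, 0, 0, 1, 1, 0], [0, 1, 1, 1, 0, 0, 1]]
```

The word "free" appears twice in the first email, but after binarization the model only records that it is present.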

```python
from sklearn.model_selection import train_test_split
from sklearn.metrics import classification_report

# Hold out 20% of the emails for testing
X_train, X_test, y_train, y_test = train_test_split(x, y, test_size=0.20, random_state=0)

# binarize=0.0 maps every count > 0 to 1 (word present) and every 0 to 0
bnb = BernoulliNB(binarize=0.0)
bnb.fit(X_train, y_train)
y_pred = bnb.predict(X_test)

print(classification_report(y_test, y_pred))
```

Output:

```
              precision    recall  f1-score   support

           0       0.84      0.98      0.91       732
           1       0.92      0.56      0.70       303

    accuracy                           0.86      1035
   macro avg       0.88      0.77      0.80      1035
weighted avg       0.87      0.86      0.84      1035
```

In the above code, we split the data into training and test sets in an 80:20 ratio, train the model on the training data, and generate a classification report from the true test labels and the predicted labels. The report shows that class 0 (ham) has a precision, recall and F1 score of 0.84, 0.98 and 0.91 respectively, whereas class 1 (spam) has a precision, recall and F1 score of 0.92, 0.56 and 0.70. Spam is the minority class (303 of the 1035 test emails, about 29%), and its recall is much lower: only about 56% of the spam emails in the test set are detected, while the rest are misclassified as ham. The overall accuracy of the model is 86%, which is a reasonable baseline, although the low spam recall shows that accuracy alone hides how many spam emails are missed.
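The numbers in a classification report follow directly from comparing true and predicted labels. The sketch below computes per-class precision, recall and F1 on a small set of hypothetical labels (not the dataset's predictions); the helper name `per_class_metrics` is illustrative. It shows how accuracy can look reasonable while recall on the minority class stays low.

```python
def per_class_metrics(y_true, y_pred, positive):
    """Precision, recall and F1 for one class, treating it as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical toy labels (0 = ham, 1 = spam), not taken from the dataset
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 1, 1, 0, 0]

for label in (0, 1):
    print(label, [round(v, 2) for v in per_class_metrics(y_true, y_pred, label)])
# 0 [0.71, 0.83, 0.77]
# 1 [0.67, 0.5, 0.57]

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print("accuracy", accuracy)  # accuracy 0.7
```

Here half of the spam emails are missed (recall 0.5), yet 70% of all predictions are still correct.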

Advantages

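Bernoulli Naive Bayes has several advantages:

- It is simple and computationally efficient, and it can give good accuracy even on small datasets.

- It performs well on datasets with binary features.

- It works well for text classification, where each feature simply records whether a word is present, and the independence assumption keeps training fast even with a large vocabulary.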

Disadvantages

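This model also has some disadvantages:

- Because it is a Naive Bayes model, it assumes that all features are independent given the class. Real features, such as words that tend to appear together, are often correlated, which can lead to inaccurate probability estimates and wrong predictions.

- It only models binary features. Counts or continuous values must first be binarized, which discards information such as how many times a word occurs.

- It can be sensitive to class imbalance: the class priors favor the majority class, which can lower recall on the minority class, as seen for the spam class above.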
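The effect of imbalanced priors can be seen with a two-class example. In this hypothetical sketch (the function name `decide` and all probabilities are illustrative), the likelihoods P(x|y) of one email are fixed and only the class priors change; with a skewed prior the decision flips to the majority class even though the email is twice as likely under spam. scikit-learn's `BernoulliNB` exposes `fit_prior` and `class_prior` parameters for controlling the priors, which may help in such cases, though whether they improve results depends on the data.

```python
import math

def decide(likelihoods, priors):
    """Pick the class maximizing log P(y) + log P(x|y)."""
    scores = {c: math.log(priors[c]) + math.log(likelihoods[c]) for c in priors}
    return max(scores, key=scores.get)

# Hypothetical likelihoods P(x|y) of one email's binary feature vector
likelihoods = {"spam": 0.02, "ham": 0.01}

print(decide(likelihoods, {"spam": 0.5, "ham": 0.5}))  # spam
print(decide(likelihoods, {"spam": 0.2, "ham": 0.8}))  # ham
```

With equal priors the higher likelihood wins, but with a 20:80 prior the ham score (0.8 × 0.01 = 0.008) exceeds the spam score (0.2 × 0.02 = 0.004).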
