AWS S3 CP Recursive

Last Updated: 11 Mar, 2024

Amazon Web Services (AWS) is a comprehensive cloud computing platform offering many services, including storage, computing, databases, and more. Amazon S3 (Simple Storage Service) is a scalable object storage service from AWS. S3 provides a highly durable and cost-effective platform for storing various data types, including documents, images, videos, backups, and archives. S3 offers several storage classes, priced according to how frequently the objects are accessed. You can access your S3 data from anywhere with an internet connection, making it ideal for various cloud-based applications.

AWS CLI

The AWS Command Line Interface (AWS CLI) is a tool that lets you interact with AWS services from a terminal.

Installation

If you haven’t set up the AWS CLI on your system yet, follow the steps below.

Install AWS CLI

Assuming you already have an AWS account, follow the steps below to install the AWS CLI on your system (these steps are based on Ubuntu). You can either follow the instructions in the AWS documentation or run the command below in your terminal.

sudo apt-get install awscli -y


Configure AWS Credentials

- Login to AWS Console

- Click on your username at top right corner and click on Security Credentials

- Under Access keys, click Create access key, choose Command Line Interface (CLI), add a description for it, and click Create

- Either copy the Access key ID and Secret access key displayed on the screen or download the CSV file.

Then run the following command to store these credentials in a named profile:

aws configure --profile <profile-name>

For example:

aws configure --profile dillip-tech


Fill in the prompts with the access key ID and secret access key you copied in the steps above (the command also asks for a default region and output format). You are now ready to try the aws s3 cp --recursive command.
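The aws configure command stores the keys in an INI-style file at ~/.aws/credentials, with one section per profile. As a rough illustration, the Python sketch below parses a sample of that format and lists the profile names. The sample text, the placeholder key values, and the helper names list_profiles and has_profile are assumptions made for this example, not part of the AWS CLI.

```python
import configparser

# Sample text in the shape of ~/.aws/credentials.
# The key values are placeholders, not real credentials.
SAMPLE_CREDENTIALS = """
[default]
aws_access_key_id = AKIAEXAMPLEDEFAULT
aws_secret_access_key = exampleDefaultSecret

[dillip-tech]
aws_access_key_id = AKIAEXAMPLEPROFILE
aws_secret_access_key = exampleProfileSecret
"""


def list_profiles(credentials_text):
    """Return the profile names found in credentials-style INI text."""
    parser = configparser.ConfigParser()
    parser.read_string(credentials_text)
    return parser.sections()


def has_profile(credentials_text, profile_name):
    """Check whether a named profile exists in the given text."""
    return profile_name in list_profiles(credentials_text)


if __name__ == "__main__":
    print(list_profiles(SAMPLE_CREDENTIALS))
```

A check like this can be handy before running a long copy, since a misspelled profile name otherwise only fails when the command starts.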

S3 Recursive Copy

The aws s3 cp command with the --recursive flag copies multiple files or entire directories between your local machine and S3 buckets in a single step. Here is the basic syntax:

aws s3 cp <SOURCE_PATH> <DESTINATION_PATH> --recursive --profile <myprofile>

- --recursive: This flag enables recursive copying, meaning the command copies all files and sub-directories within the source path.

- SOURCE_PATH: The file or directory on your local machine, or an S3 path, to copy from.

- DESTINATION_PATH: The S3 bucket and key prefix to copy the data to (this can also be a local path).

Note: Both SOURCE_PATH and DESTINATION_PATH accept either a local path or an S3 path, but at least one of them must be an S3 URI (s3://...); aws s3 cp does not copy between two local paths.
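To make the path mapping concrete, here is a minimal Python sketch of how a recursive upload pairs each local file with a destination key: the file's path relative to the source directory is appended to the destination prefix. It only plans the copy and never contacts S3. The function names plan_recursive_upload and demo, and the sample file names, are hypothetical and chosen for illustration.

```python
import os
import tempfile
from pathlib import Path


def plan_recursive_upload(source_dir, destination_uri):
    """Return sorted (local_path, s3_uri) pairs for every file under source_dir."""
    source = Path(source_dir)
    # Keep exactly one "/" between the destination prefix and the relative path.
    prefix = destination_uri.rstrip("/")
    plan = []
    for root, _dirs, files in os.walk(source):
        for name in files:
            local_path = Path(root) / name
            # The key is the path relative to the source, with "/" separators.
            relative_key = local_path.relative_to(source).as_posix()
            plan.append((str(local_path), f"{prefix}/{relative_key}"))
    return sorted(plan)


def demo():
    """Build a small temporary tree and return the planned destination URIs."""
    with tempfile.TemporaryDirectory() as tmp:
        (Path(tmp) / "css").mkdir()
        (Path(tmp) / "index.html").write_text("<html></html>")
        (Path(tmp) / "css" / "site.css").write_text("body {}")
        plan = plan_recursive_upload(tmp, "s3://my-bucket/my-path/")
        return [uri for _local, uri in plan]


if __name__ == "__main__":
    for uri in demo():
        print(uri)
```

Only files appear in the plan. S3 stores objects under keys rather than in real directories, so the sub-directory structure survives only as part of each key.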

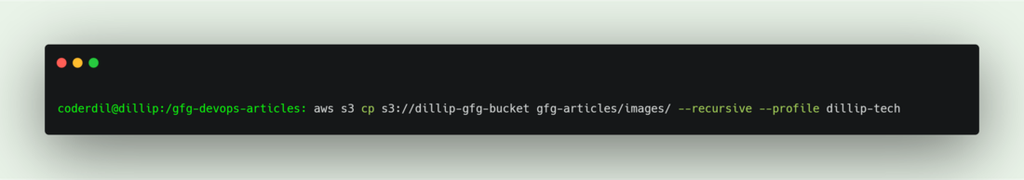

Sample Command:

aws s3 cp <local-dir-path> s3://my-bucket/my-path/ --recursive --profile <profile-name>

Common Scenarios

The following scenarios cover the most common uses of the command. Try each one hands-on with the commands below.

1. Uploading a Local Directory to S3:

aws s3 cp /path/to/local/directory s3://your-bucket-name/ --recursive --profile <myprofile>

This command copies the entire directory /path/to/local/directory and its contents to your S3 bucket named your-bucket-name. You can also filter the files to be uploaded using the additional parameters discussed in the sections below.


2. Downloading a Directory from S3:

aws s3 cp s3://your-bucket-name/directory-path /path/to/local/destination --recursive --profile <myprofile>

This command downloads everything under directory-path in the bucket your-bucket-name and places it in the local directory /path/to/local/destination. The same command form works in either direction, so you do not need to follow traditional steps such as compressing the data, transferring a zip/tar file, and then extracting it.


3. Uploading the Current Directory to S3:

To upload all the files in the current directory, use ‘.’ as the source location together with --recursive. Below is a sample command.

aws s3 cp . s3://your-bucket-name/ --recursive --profile <myprofile>


Dry Run

In the AWS CLI you can dry run the command using the --dryrun argument. It displays the operations that would be performed by the specified command without actually running them; in this case, the files that would be copied from local storage to S3.

aws s3 cp . s3://your-bucket-name/ --recursive --dryrun --profile <myprofile>
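When the copy is driven from a script, one cautious pattern is to build the same command twice: once with --dryrun to review the planned operations, then again without it. The Python sketch below only assembles the argument list and does not run anything. build_cp_command is a hypothetical helper written for this example, and myprofile is a placeholder profile name.

```python
import shlex


def build_cp_command(source, destination, profile=None, dryrun=False):
    """Build the argument list for a recursive `aws s3 cp` call."""
    command = ["aws", "s3", "cp", source, destination, "--recursive"]
    if dryrun:
        # Preview only: the CLI lists the operations without performing them.
        command.append("--dryrun")
    if profile:
        command += ["--profile", profile]
    return command


if __name__ == "__main__":
    preview = build_cp_command(".", "s3://your-bucket-name/",
                               profile="myprofile", dryrun=True)
    real = build_cp_command(".", "s3://your-bucket-name/", profile="myprofile")
    # shlex.join renders the list as a shell-safe command line for review.
    print(shlex.join(preview))
    print(shlex.join(real))
```

On a machine with the AWS CLI installed, either list could be passed to subprocess.run(command, check=True). Keeping the arguments as a list avoids shell quoting problems with unusual paths.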

Advanced parameters:

The aws s3 cp command also provides filtering options when copying files from local storage to S3 or vice versa, namely the --include and --exclude arguments.

- --include (string) Don’t exclude files or objects in the command that match the specified pattern. See Use of Exclude and Include Filters for details.

- --exclude (string) Exclude all files or objects from the command that match the specified pattern.

Patterns can use the following expressions:

- * Matches everything

- ? Matches any single character

- [sequence] Matches any character in sequence

- [!sequence] Matches any character not in sequence

The example below copies all files in the current directory to the S3 bucket, except the files in the .git folder.

aws s3 cp . s3://your-bucket-name/ --recursive --exclude ".git/*" --profile <myprofile>
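The order of the filters matters: every file starts out included, and a filter that appears later in the command takes precedence over an earlier one. The Python sketch below imitates that rule with the standard fnmatch module so the effect of a filter list can be checked offline. It is a simplified model that matches patterns against paths relative to the source directory; is_selected and select_files are hypothetical names, and the real CLI may differ in edge cases.

```python
import fnmatch


def is_selected(relative_path, filters):
    """Apply ordered ("exclude" | "include", pattern) filters to one path."""
    selected = True  # by default every file is included
    for kind, pattern in filters:
        if fnmatch.fnmatchcase(relative_path, pattern):
            # The most recent matching filter decides the outcome.
            selected = (kind == "include")
    return selected


def select_files(relative_paths, filters):
    """Return the paths that survive the given filter list."""
    return [path for path in relative_paths if is_selected(path, filters)]


if __name__ == "__main__":
    files = ["index.html", ".git/config", "img/logo.jpg", "img/notes.txt"]
    # Same effect as: --exclude ".git/*"
    print(select_files(files, [("exclude", ".git/*")]))
    # Same effect as: --exclude "*" --include "*.jpg"
    print(select_files(files, [("exclude", "*"), ("include", "*.jpg")]))
```

Swapping the two filters in the last call selects nothing, because the trailing exclude pattern "*" overrides the earlier include.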

S3 cp recursive - FAQs

Can I copy between different S3 buckets?

Yes. Specify S3 paths for both the source and the destination:

aws s3 cp --recursive s3://source-bucket/directory s3://destination-bucket/new-directory

How can I copy files with specific extensions?

The source path itself does not accept wildcards, so filter by extension with the --exclude and --include arguments: exclude everything first, then include the pattern you want. This is often useful when you work with static websites on S3, where you need to upload only CSS, images, JS, and so on, and ignore the rest of the redundant files.

aws s3 cp s3://source-bucket/source-path/ local-directory/ --recursive --exclude "*" --include "*.jpg"

How does AWS S3 CP Recursive handle large datasets efficiently?

The AWS CLI splits large files into parts using multipart uploads and runs several transfers in parallel, which makes copying large datasets considerably faster than sending one file at a time in a single request.
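As a rough illustration of the multipart arithmetic, the Python sketch below estimates how many parts a file would be split into. It assumes the documented AWS CLI defaults of an 8 MB multipart threshold and 8 MB chunk size, plus the S3 limit of 10,000 parts per upload. The function plan_parts is hypothetical, and the exact boundary handling and chunk-size adjustment in the real CLI are assumptions here.

```python
MIB = 1024 * 1024
DEFAULT_MULTIPART_THRESHOLD = 8 * MIB  # assumed CLI default
DEFAULT_MULTIPART_CHUNKSIZE = 8 * MIB  # assumed CLI default
MAX_PARTS = 10_000                     # S3 limit on parts per upload


def ceil_div(numerator, denominator):
    """Integer division rounded up, without floating point error."""
    return -(-numerator // denominator)


def plan_parts(file_size,
               threshold=DEFAULT_MULTIPART_THRESHOLD,
               chunksize=DEFAULT_MULTIPART_CHUNKSIZE):
    """Return (number_of_parts, part_size_in_bytes) for a file of file_size bytes."""
    if file_size < threshold:
        # Small files go up in a single request.
        return 1, file_size
    # Grow the part size when the default would exceed the part limit.
    part_size = max(chunksize, ceil_div(file_size, MAX_PARTS))
    return ceil_div(file_size, part_size), part_size


if __name__ == "__main__":
    for size in (5 * MIB, 100 * MIB, 100 * 1024 * MIB):
        parts, part_size = plan_parts(size)
        print(f"{size} bytes -> {parts} part(s) of up to {part_size} bytes")
```

The parts of one file can be uploaded concurrently, which is where most of the speed-up for large objects comes from.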
