What are In-Memory Caches?

Last Updated: 16 Apr, 2024

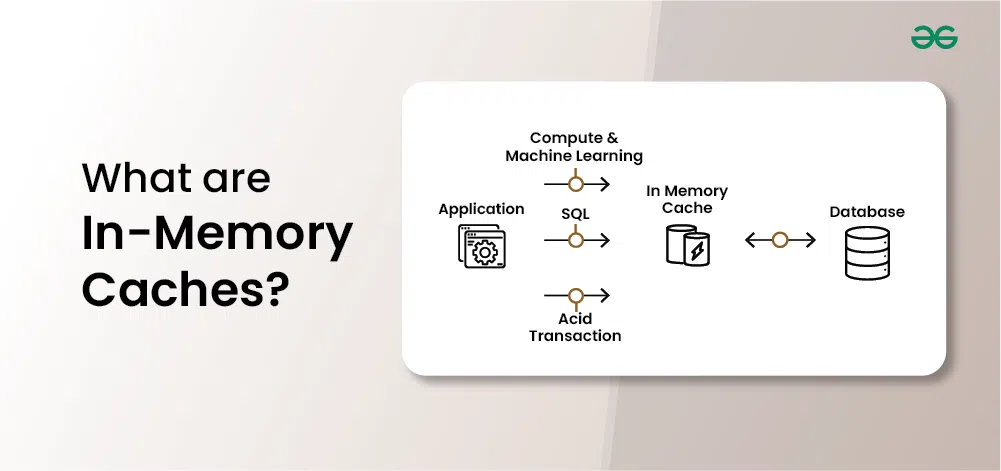

In-memory caches are essential tools in modern computing, providing fast access to frequently used data by storing it in memory. This article explores the concept of in-memory caches, their benefits, and their role in enhancing system performance and scalability.


What are In-Memory Caches?

In-memory caches, also known as memory caches, are high-speed data stores that keep frequently used data in a computer's main memory.

- This means the data is held in RAM rather than being retrieved from a slower storage system such as a solid-state drive.

- The main purpose of an in-memory cache is to reduce the time it takes to access and retrieve data, which improves the overall performance of the system.

Importance of Caching in System Design

Caching has a significant impact on system design, particularly in systems that handle a large volume of data and user requests. Below are some of the key reasons why caching is indispensable in system design:

- Improved Performance: As stated earlier, caching reduces the time it takes to access and retrieve data. This results in better system performance and a better user experience.

- Scalability: Caching also improves the scalability of a system. By reducing the load on the primary storage system, caching lets the system handle more data and user requests without slowing down.

- Cost-Effectiveness: Memory costs more per gigabyte than solid-state or hard drives, but a cache only needs to hold the frequently accessed subset of the data. Serving those requests from memory reduces the load on databases and other backend systems, which can lower the overall cost of handling large volumes of requests.

Purpose of In-Memory Caches

In-memory caches serve several key purposes:

- Faster Access Times: Data stored in memory can be accessed much faster than data stored on disk. In-memory caches allow applications to retrieve frequently accessed data with minimal latency, improving overall system responsiveness.

- Reduced Load on Backend Systems: By caching frequently accessed data in memory, in-memory caches reduce the number of requests that need to be served by backend systems, such as databases or external APIs. This helps alleviate the load on these systems, improving their scalability and performance.

- Improved Scalability: In-memory caches help distribute the workload across multiple instances of an application by reducing the need for each instance to access backend systems independently. This makes it easier to scale out applications horizontally to handle increasing levels of traffic or demand.

- Optimized Resource Utilization: By caching data in memory, in-memory caches make more efficient use of available resources, such as CPU and disk I/O. This can lead to cost savings by reducing the need for expensive hardware or cloud resources.

- Enhanced User Experience: Faster access times and improved system responsiveness resulting from in-memory caching lead to a better user experience for application users. Users experience shorter response times and smoother interactions, increasing satisfaction and engagement.

Key Components of In-Memory Caches

The key components of an in-memory cache include:

- Cache Store: The primary storage component where data is stored in memory.

- Cache Entries: Individual items of data stored in the cache, typically consisting of key-value pairs.

- Cache Eviction Policy: Determines which cache entries are removed when the cache reaches its maximum capacity.

- Cache Expiration Policy: Specifies how long cache entries are valid before they expire and need to be refreshed or removed.

- Concurrency Control: Mechanisms to ensure safe and efficient access to the cache in multi-threaded or distributed environments.

- Monitoring and Management: Tools for monitoring cache performance, usage, and health, and managing cache configurations.

- Integration with Application Frameworks: Support for integration with application frameworks and libraries to facilitate easy use of caching within applications.
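To make these components concrete, here is a minimal Python sketch that maps each one onto a small class. The class name `InMemoryCache`, the choice of LRU eviction, the single TTL per cache, and the injectable `clock` parameter are illustrative assumptions, not a reference design:

```python
import threading
import time
from collections import OrderedDict


class InMemoryCache:
    """Minimal sketch of the components listed above (not a production cache).

    - Cache store: an OrderedDict held in process memory.
    - Cache entries: key -> (value, expiry timestamp).
    - Eviction policy: least recently used (LRU), chosen here for illustration.
    - Expiration policy: one time-to-live (TTL) in seconds for every entry.
    - Concurrency control: a single lock around every operation.
    - Monitoring: simple hit/miss/eviction counters.
    """

    def __init__(self, max_entries=1000, ttl_seconds=60.0, clock=time.monotonic):
        self._store = OrderedDict()  # key -> (value, expires_at)
        self._max_entries = max_entries
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested deterministically
        self._lock = threading.Lock()
        self.stats = {"hits": 0, "misses": 0, "evictions": 0}

    def get(self, key, default=None):
        with self._lock:
            entry = self._store.get(key)
            if entry is None:
                self.stats["misses"] += 1
                return default
            value, expires_at = entry
            if self._clock() >= expires_at:
                # Expired: remove the entry and treat the lookup as a miss.
                del self._store[key]
                self.stats["misses"] += 1
                return default
            # Mark the entry as most recently used.
            self._store.move_to_end(key)
            self.stats["hits"] += 1
            return value

    def set(self, key, value):
        with self._lock:
            self._store[key] = (value, self._clock() + self._ttl)
            self._store.move_to_end(key)
            # Evict least recently used entries once over capacity.
            while len(self._store) > self._max_entries:
                self._store.popitem(last=False)
                self.stats["evictions"] += 1
```

For example, with `max_entries=2`, calling `set("a", 1)`, `set("b", 2)`, `get("a")`, then `set("c", 3)` evicts `"b"`, because reading `"a"` made it the more recently used entry. A single lock keeps the sketch simple; a busy multi-threaded cache would usually need finer-grained locking.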

How In-Memory Caches Work

In-memory caches work by storing frequently accessed data in the computer’s Random Access Memory (RAM), which is much faster to access than retrieving data from disk or external sources like databases.

Below is an explanation of how an in-memory cache works:

- Data Access: When an application needs certain data, it first checks the in-memory cache to see if the data is already stored there.

- Cache Hit: If the data is found in the cache (known as a cache hit), the application can retrieve it quickly, since accessing data from memory is much faster than accessing it from disk or external sources.

- Cache Miss: If the data is not found in the cache (known as a cache miss), the application retrieves the data from its original source, such as a disk or a database.

- Populating the Cache: After retrieving the data, the application stores it in the cache so that it’s readily available for future requests.

- Expiration and Eviction: In-memory caches often have mechanisms for managing the stored data, such as setting expiration times or evicting less frequently used data to make room for new data.

- Cache Efficiency: The effectiveness of an in-memory cache depends on factors like the cache size, the caching algorithm used, and the frequency of data access. Optimizing these factors can improve the cache hit rate and overall performance of the system.

Overall, in-memory caches work by keeping frequently accessed data readily available in fast-access memory, reducing the need to retrieve data from slower storage systems and improving the performance and scalability of applications.
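The read path described above is often called the cache-aside (or lazy-loading) pattern. Here is a minimal Python sketch, assuming a plain dictionary as the cache and a hypothetical `slow_database_lookup` function standing in for the original data source; expiration and eviction are left out to keep the hit, miss, and populate steps visible:

```python
import time


def slow_database_lookup(key):
    """Hypothetical stand-in for a disk- or network-bound data source."""
    time.sleep(0.05)  # simulate I/O latency
    return f"value-for-{key}"


cache = {}  # the in-memory cache: a plain dict held in RAM


def get_data(key, load=slow_database_lookup):
    # 1. Data access: check the in-memory cache first.
    if key in cache:
        # 2. Cache hit: return the value straight from memory.
        return cache[key], "hit"
    # 3. Cache miss: fall back to the original source.
    value = load(key)
    # 4. Populate the cache so later requests for this key are hits.
    cache[key] = value
    return value, "miss"
```

The first call to `get_data("x")` is a miss and pays the simulated 50 ms lookup; the second call is a hit served directly from the dictionary.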

Real-world Examples of In-Memory Cache

A real-world example of an in-memory cache is the caching layer used in web applications to store frequently accessed data, such as HTML fragments, database query results, or session information.

Let’s consider a simplified scenario of an e-commerce website to illustrate how an in-memory cache can be implemented:

- Product Catalog: The e-commerce website has a product catalog that contains information about various products, including their names, descriptions, prices, and availability.

- Product Page: When a user visits a product page on the website, the application needs to retrieve product information from the database to display on the page. This involves executing a database query to fetch the relevant data.

- Caching Strategy: To improve performance and reduce database load, the application implements an in-memory cache to store product information. When the application receives a request for a product page, it first checks the cache to see if the product information is already stored there.

- Cache Hit: If the product information is found in the cache (a cache hit), the application retrieves the data from the cache, avoiding the need to execute a database query. This reduces latency and improves the responsiveness of the website.

- Cache Miss: If the product information is not found in the cache (a cache miss), the application retrieves the data from the database and stores it in the cache for future requests. This ensures that frequently accessed product information is cached and readily available for subsequent requests.

- Cache Expiration: To ensure that cached data remains fresh and up-to-date, the application implements a cache expiration policy. Cached product information is periodically invalidated or refreshed based on predefined expiration times or triggers such as product updates or inventory changes.

- Cache Eviction: In scenarios where the cache reaches its maximum capacity, the application uses eviction policies to remove less frequently accessed or expired data from the cache to make room for new data.

- Session Management: The application also uses the in-memory cache to store session information for authenticated users. This includes user authentication tokens, session IDs, and user-specific data such as shopping cart contents or preferences.

By implementing an in-memory cache in this real-world example, the e-commerce website improves its performance, scalability, and user experience by reducing database load, minimizing latency, and efficiently managing data access.
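The product-page flow above, including cache expiration and invalidation on product updates, might look roughly like this in Python. `ProductCatalogCache`, the dict standing in for the product database, and the 300-second TTL are hypothetical; session storage is not shown:

```python
import time


class ProductCatalogCache:
    """Hypothetical product-page cache for the e-commerce scenario.

    `database` is any dict-like store standing in for the real product table;
    the TTL value is illustrative, not a recommendation.
    """

    def __init__(self, database, ttl_seconds=300.0, clock=time.monotonic):
        self.database = database
        self.ttl = ttl_seconds
        self.clock = clock
        self._cache = {}  # product_id -> (product_dict, expires_at)
        self.db_queries = 0  # counts how often the database had to be queried

    def get_product(self, product_id):
        entry = self._cache.get(product_id)
        if entry is not None and self.clock() < entry[1]:
            return entry[0]  # cache hit: no database query
        # Cache miss (or expired entry): query the database and cache the result.
        self.db_queries += 1
        product = self.database[product_id]
        self._cache[product_id] = (product, self.clock() + self.ttl)
        return product

    def update_product(self, product_id, **changes):
        # Write to the source of truth first, then invalidate the cached copy
        # so the next read fetches fresh price and stock data.
        self.database[product_id] = {**self.database[product_id], **changes}
        self._cache.pop(product_id, None)
```

Invalidating (deleting) the cached entry on update, rather than writing the new value into the cache, keeps the write path simple and lets the next read repopulate the cache from the database; the TTL acts as a safety net for changes that bypass `update_product`.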

Types of In-Memory Caches

1. Single-Level Cache

This is the simplest form of in-memory cache, where data is stored in a single cache layer. It’s suitable for applications with relatively small datasets or low access frequency.

2. Multi-Level Cache

In a multi-level cache, data is stored across multiple cache layers, with each layer serving as a cache for the layer below it. This hierarchy allows for more efficient use of memory and better performance by prioritizing frequently accessed data in higher-level caches.
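A simplified Python sketch of a two-level cache, assuming both levels are in-process LRU dictionaries (in a real system the second level might be a separate cache server). On an L1 miss the lookup falls through to L2 and then to the data source, and values found in L2 are promoted into L1. The class names and capacities are illustrative:

```python
from collections import OrderedDict


class LRULevel:
    """One cache layer with a fixed capacity and LRU eviction."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = OrderedDict()

    def get(self, key):
        # None marks "not cached", so this sketch assumes values are never None.
        if key not in self.data:
            return None
        self.data.move_to_end(key)
        return self.data[key]

    def put(self, key, value):
        self.data[key] = value
        self.data.move_to_end(key)
        if len(self.data) > self.capacity:
            self.data.popitem(last=False)  # drop the least recently used entry


class TwoLevelCache:
    """Sketch of a multi-level cache: a small, fast L1 in front of a larger L2."""

    def __init__(self, load, l1_capacity=2, l2_capacity=10):
        self.l1 = LRULevel(l1_capacity)
        self.l2 = LRULevel(l2_capacity)
        self.load = load  # fallback to the original data source

    def get(self, key):
        value = self.l1.get(key)
        if value is not None:
            return value, "L1"
        value = self.l2.get(key)
        if value is not None:
            self.l1.put(key, value)  # promote hot data to the faster level
            return value, "L2"
        value = self.load(key)
        self.l2.put(key, value)
        self.l1.put(key, value)
        return value, "source"
```

With `l1_capacity=1`, reading `"a"`, then `"b"`, then `"a"` again serves the last read from L2, because `"b"` pushed `"a"` out of the smaller L1.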

3. Distributed Cache

A distributed cache spans multiple nodes or servers, allowing for horizontal scalability and fault tolerance. Data is partitioned and replicated across multiple cache nodes to ensure high availability and reliability.
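The text does not prescribe a partitioning scheme; consistent hashing is one widely used option for spreading keys across cache nodes, shown here as a minimal Python sketch. Node names such as `cache-a` are hypothetical, and replication is omitted:

```python
import bisect
import hashlib


def _hash(value):
    # Stable hash (unlike Python's built-in hash(), which is randomized per process).
    return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)


class ConsistentHashRing:
    """Sketch of consistent hashing for partitioning keys across cache nodes.

    Each node is placed on the ring several times (virtual nodes) to even out
    the key distribution.
    """

    def __init__(self, nodes, vnodes=100):
        self.vnodes = vnodes
        self._ring = []  # sorted list of (hash, node)
        for node in nodes:
            self.add_node(node)

    def add_node(self, node):
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def remove_node(self, node):
        self._ring = [(h, n) for h, n in self._ring if n != node]

    def node_for(self, key):
        # First ring position at or after the key's hash, wrapping around.
        h = _hash(key)
        idx = bisect.bisect(self._ring, (h, ""))
        if idx == len(self._ring):
            idx = 0
        return self._ring[idx][1]
```

The property that makes consistent hashing attractive for caches is that removing (or adding) a node only remaps the keys owned by that node, so most cached entries stay on the same server instead of the whole cache being reshuffled.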

4. Near Cache

A near cache is a small cache located near the application process, typically within the same JVM or process. It acts as a front-end cache to reduce latency and overhead when accessing a remote or distributed cache.

5. Transactional Cache

A transactional cache supports atomicity, consistency, isolation, and durability (ACID) properties for cache operations within a transactional context. This ensures that cache operations are consistent and reliable, even in the presence of concurrent accesses and failures.

6. In-Memory Data Grid (IMDG)

An in-memory data grid is a distributed, scalable, and fault-tolerant data storage system that provides in-memory caching capabilities. IMDGs typically offer features such as distributed caching, data partitioning, replication, and distributed computing capabilities.

Use Cases and Applications of In-Memory Caches

In-memory caches have a wide range of use cases and applications, some of which include:

- E-commerce: In-memory caches are commonly used in e-commerce applications to store product data, pricing, and stock information. This allows fast and efficient retrieval of data, ensuring a seamless shopping experience for customers.

- Real-time Analytics: In-memory caches are well suited to real-time analytics because they can serve large volumes of data quickly enough for analysis to keep up. This is especially useful in industries such as finance, where real-time data analysis is necessary for making investment decisions.

- Ad Tech: In the advertising industry, in-memory caches are used to store real-time bidding data. This allows rapid decision-making and helps ensure that the right ad is served to the right person at the right time.

Considerations for using and implementing In-Memory Caches

Below are the main considerations for using and implementing in-memory caches.

- Data Access Patterns: Understand which data is frequently accessed and could benefit from caching.

- Cache Size and Capacity Planning: Determine the appropriate size and capacity of the cache based on available memory resources.

- Eviction Policies: Choose appropriate eviction policies to maximize cache hit rates and minimize cache misses.

- Concurrency Control: Implement mechanisms to handle concurrent access to the cache safely and efficiently.

- Cache Consistency: Ensure consistency between cached data and the underlying data source.

- Monitoring and Metrics: Implement monitoring to track cache usage, performance, and health for optimization and troubleshooting.
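Cache size and eviction policy matter because they determine the hit rate, and the hit rate determines the average latency. For a cache-aside read path, a rough model is: average latency = hit_rate × cache_latency + (1 − hit_rate) × (cache_latency + backend_latency). The Python helpers below encode that model plus a back-of-the-envelope memory estimate; the function names and the per-entry `overhead_bytes` default are assumptions for illustration, not measured values for any particular cache product:

```python
def expected_latency_ms(hit_rate, cache_ms, backend_ms):
    """Average read latency for a cache-aside read path.

    A hit costs one cache lookup; a miss costs the cache lookup plus a backend read.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    miss_rate = 1.0 - hit_rate
    return hit_rate * cache_ms + miss_rate * (cache_ms + backend_ms)


def estimated_cache_bytes(entries, avg_key_bytes, avg_value_bytes, overhead_bytes=50):
    """Rough capacity estimate; overhead_bytes is an assumed per-entry bookkeeping cost."""
    return entries * (avg_key_bytes + avg_value_bytes + overhead_bytes)
```

With illustrative numbers of 1 ms for a cache read and 20 ms for a database read, a 90% hit rate gives an average of about 3 ms and a 99% hit rate about 1.2 ms. Likewise, one million entries with 30-byte keys and 970-byte values come to roughly 1.05 GB under the assumed 50-byte overhead.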

Implementing Solutions for In-Memory Caches

Redis and Amazon ElastiCache are both popular solutions for implementing in-memory caching in distributed systems. Here’s an overview of each:

1. Redis

Redis is an open-source, in-memory data structure store that can be used as a database, cache, and message broker. It supports various data structures such as strings, hashes, lists, sets, and sorted sets, making it versatile for a wide range of use cases.

- Redis is known for its fast performance, high availability, and rich feature set, including built-in replication, persistence, clustering, and pub/sub messaging.

- It’s widely used in web applications, real-time analytics, caching layers, message queues, and more.
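A hedged sketch of cache-aside reads with Redis in Python. It assumes the `redis-py` client, whose `Redis.get(name)` and `Redis.set(name, value, ex=seconds)` methods it calls; `get_product_cached`, `load_from_db`, and the `product:<id>` key format are hypothetical names for this example:

```python
import json


def get_product_cached(client, product_id, load_from_db, ttl_seconds=300):
    """Cache-aside read using a Redis client (sketch).

    `client` is assumed to be a redis-py `redis.Redis` instance, or any object
    with compatible `get(name)` and `set(name, value, ex=...)` methods.
    `load_from_db` and the "product:<id>" key format are hypothetical.
    """
    key = f"product:{product_id}"
    cached = client.get(key)
    if cached is not None:
        return json.loads(cached)  # hit: deserialize the stored JSON
    product = load_from_db(product_id)  # miss: read from the database
    # Store as JSON with an expiry (ex = seconds) so stale entries age out.
    client.set(key, json.dumps(product), ex=ttl_seconds)
    return product
```

With `redis-py` installed and a Redis server running locally, a client could be created with `client = redis.Redis(host="localhost", port=6379)` and passed in. Values are stored as JSON strings, and `json.loads` accepts the bytes that `redis-py` returns by default.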

2. Amazon ElastiCache

Amazon ElastiCache is a fully managed, in-memory caching service provided by Amazon Web Services (AWS). It supports two popular in-memory caching engines: Redis and Memcached. With ElastiCache, you can easily deploy, operate, and scale in-memory caches in the cloud without the need to manage infrastructure.

- ElastiCache offers features such as automatic failover, data durability options, security controls, monitoring, and integration with other AWS services.

- It’s ideal for cloud-based applications, microservices architectures, and scenarios where high availability and scalability are paramount.

Benefits of In-Memory Caches

Below are the benefits of In-Memory Caches:

- Increased Speed and Performance: One of the most notable advantages of in-memory caches is faster data access. Because data is stored in main memory, it can be accessed much more quickly than data in traditional disk-based databases. This enables real-time data processing and analysis, allowing companies to make faster and better-informed decisions.

- Cost-Effectiveness: Memory is more expensive per gigabyte than disk, but an in-memory cache typically holds only the frequently accessed subset of the data while the full dataset remains in disk-based storage. By absorbing a large share of reads, a cache can reduce the database capacity and hardware needed to serve a given load, which can translate into cost savings.

- Reduced Data Processing Time: With traditional disk-based databases, data must be read from disk before it can be processed. In contrast, in-memory caches keep data in main memory, avoiding disk access for cached data. This reduces data processing time and improves system performance.

- Improved Scalability: In-memory caches are highly scalable, allowing organizations to handle large volumes of data without significant performance degradation. Capacity can be increased by adding memory to a server or, with a distributed cache, by adding more cache nodes.

Challenges and Trade-offs of In-Memory Cache

Implementing and using in-memory caches comes with several challenges and trade-offs that need to be carefully considered:

- Memory Overhead: In-memory caching consumes additional memory resources, which can be a concern for applications with large datasets or limited memory availability. Allocating excessive memory for caching may lead to increased operational costs and resource contention.

- Cache Invalidation: Ensuring cache consistency and validity can be challenging, especially when dealing with frequently changing data or distributed cache environments. Implementing effective cache invalidation strategies is crucial to maintain data integrity and prevent stale or outdated data from being served.

- Cache Coherency: In distributed cache environments, maintaining cache coherency across multiple cache nodes can be complex. Synchronization and coordination mechanisms are required to ensure that updates made to cached data are propagated consistently and efficiently across all cache replicas.

- Performance Trade-offs: While in-memory caches can improve application performance by reducing access latency, there may be trade-offs in terms of CPU overhead, cache eviction overhead, and memory management overhead. It’s essential to carefully balance these trade-offs to achieve optimal performance.

- Concurrency Control: Managing concurrent access to the cache from multiple threads or processes requires careful consideration of concurrency control mechanisms such as locking, synchronization, and transactional semantics. Inefficient concurrency control can lead to contention, deadlock, or performance degradation.
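As one example of lock-based concurrency control, the Python sketch below combines a short global lock for the shared dictionaries with a per-key lock around loading, so that many threads missing on the same key trigger only one backend load while threads working on different keys do not wait on each other. `ThreadSafeLoader` and its `load` callable are hypothetical, and the per-key locks are never cleaned up, which a real implementation would need to handle:

```python
import threading


class ThreadSafeLoader:
    """Sketch of lock-based concurrency control for a cache read path."""

    def __init__(self, load):
        self._load = load  # hypothetical backend loader
        self._cache = {}
        self._key_locks = {}  # note: never pruned in this sketch
        self._guard = threading.Lock()  # protects _cache and _key_locks

    def get(self, key):
        with self._guard:
            if key in self._cache:
                return self._cache[key]
            key_lock = self._key_locks.setdefault(key, threading.Lock())
        # Only threads interested in this key contend for key_lock.
        with key_lock:
            # Re-check: another thread may have loaded the value while we waited.
            with self._guard:
                if key in self._cache:
                    return self._cache[key]
            value = self._load(key)  # slow call made without holding _guard
            with self._guard:
                self._cache[key] = value
            return value
```

Holding the global lock only for quick dictionary operations, and never during the slow load, is what keeps contention low; a single lock held across the whole load would serialize every miss.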
