Types of Cache

Last Updated: 07 Mar, 2024

Cache plays an important role in enhancing system performance by temporarily storing frequently accessed data and reducing latency. Understanding the various types of cache is crucial for optimizing system design and resource utilization.


A cache is a high-speed storage layer that temporarily holds frequently accessed or recently used data and instructions. In a processor, it sits between the central processing unit (CPU) and main memory (RAM); the same idea applies at other layers, such as between RAM and disk or between a browser and a web server. By keeping frequently accessed data closer to where it is used, a cache shortens access times and reduces the latency of fetching data from the slower backing store.

Importance of Caching

Caching plays a crucial role in optimizing system performance and improving overall efficiency in various computing environments. Its importance lies in several key aspects:

- Faster Data Access: By storing frequently accessed data closer to the processor, caching reduces the time required to fetch data from slower storage mediums, such as disk drives or network servers. This results in faster data retrieval times and improved system responsiveness.

- Reduced Latency: Caching helps mitigate the latency associated with accessing data from distant or slower storage devices. By keeping frequently used data readily available in cache memory, systems can minimize the delay experienced by users when accessing resources.

- Bandwidth Conservation: Caching conserves network bandwidth by serving frequently requested content locally instead of fetching it repeatedly from remote servers. This reduces the load on network infrastructure and improves overall network performance.

- Scalability: Caching enhances system scalability by offloading processing and storage burdens from backend systems. By caching frequently accessed data, systems can efficiently handle increasing user loads without experiencing performance degradation.

- Improved User Experience: Faster data access and reduced latency contribute to a smoother and more responsive user experience. Whether it’s web browsing, accessing files, or running applications, caching helps deliver content and services quickly, leading to higher user satisfaction.

- Enhanced Reliability: Caching can improve system reliability by reducing the risk of service disruptions or downtime. By serving cached content during periods of high demand or network instability, systems can maintain service availability and mitigate the impact of potential failures.

Types of Cache

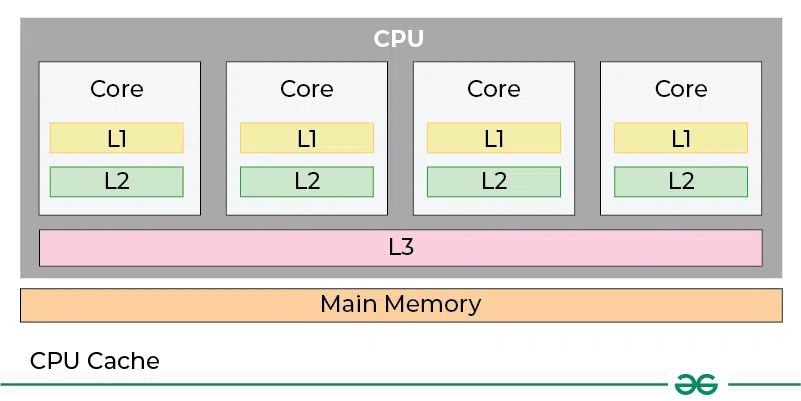

1. CPU Cache

CPU cache, a fundamental component of modern processors, significantly impacts system performance by reducing the time it takes to access frequently used data and instructions. Let’s explore CPU cache in-depth:

Characteristics

- Proximity to CPU: CPU cache is located directly on the processor chip, providing extremely fast access compared to accessing data from main memory (RAM).

- Hierarchical Structure: CPU cache is organized into multiple levels (L1, L2, L3), each with varying sizes, speeds, and proximity to the CPU cores. L1 cache is the smallest and fastest, located closest to the CPU cores, followed by L2 and L3 caches, which are larger but slower.

Functionality

- Data and Instruction Caching:

- CPU cache stores both frequently accessed data and instructions.

- When the CPU needs to access data or instructions, it first checks the cache.

- If the required data or instructions are found in the cache (cache hit), they can be retrieved quickly. If they are not found (cache miss), the CPU retrieves them from main memory and stores them in the cache for future use.

- Cache Coherency:

- To ensure data consistency, modern CPUs use cache coherency protocols to synchronize data between different cache levels and CPU cores. These protocols prevent inconsistencies that could arise from multiple cores accessing and modifying the same data.
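
The hit-and-miss behaviour described above can be sketched with a small simulation. This is only an illustrative model, not how any particular processor works: it assumes a direct-mapped layout (each memory block maps to exactly one cache line), and the class name `DirectMappedCache` and its parameters `num_lines` and `block_size` are hypothetical. Real CPU caches are implemented in hardware and are usually set-associative.

```python
class DirectMappedCache:
    """Toy direct-mapped cache: each memory block maps to exactly one line."""

    def __init__(self, num_lines=4, block_size=16):
        self.num_lines = num_lines
        self.block_size = block_size
        self.tags = [None] * num_lines  # tag held by each line; None means empty
        self.hits = 0
        self.misses = 0

    def access(self, address):
        """Return True on a cache hit, False on a miss (the line is then filled)."""
        block = address // self.block_size  # memory block containing the address
        index = block % self.num_lines      # the single line this block may occupy
        tag = block // self.num_lines       # distinguishes blocks sharing that line
        if self.tags[index] == tag:
            self.hits += 1
            return True
        # Miss: "fetch" the block from main memory and replace the line's contents.
        self.tags[index] = tag
        self.misses += 1
        return False


cache = DirectMappedCache()
# Addresses 0, 4 and 8 fall in the same block; 64 maps to the same line as 0.
results = [cache.access(addr) for addr in (0, 4, 8, 64, 0)]
```

In this run the second and third accesses hit because they fall in a block that is already cached, while address 64 evicts that block, so the final access to address 0 misses again.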

Types of CPU Cache

- L1 Cache: L1 cache is usually split into a separate instruction cache (L1i) and data cache (L1d). It is extremely fast but small, typically tens of kilobytes (occasionally a few hundred) per core.

- L2 Cache: L2 cache serves as a secondary cache, larger than L1 but slower in access speed. Depending on the processor design, each core has its own private L2 cache or a small cluster of cores shares one.

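- L3 Cache: L3 cache is usually the last level of cache in the hierarchy and is shared among the cores of a chip or core complex. It is larger than L2 cache but slower in access speed. In multi-socket systems each processor has its own L3 cache, and coherency protocols keep the sockets consistent.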

Use Cases and Benefits

- Improved Performance: CPU cache dramatically reduces the time required to access frequently used data and instructions, resulting in faster program execution and improved system responsiveness.

- Reduced Memory Latency: By storing frequently accessed data and instructions closer to the CPU cores, cache minimizes the latency associated with fetching data from main memory.

- Higher Throughput: Cache enables processors to execute instructions more efficiently by reducing the frequency of stalls caused by waiting for data from main memory.

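- Optimized Multithreading: In multithreaded applications, per-core caches let each thread keep its working set close to the core it runs on, which reduces contention for main-memory bandwidth. The benefit depends on access patterns: threads that repeatedly write to the same cache lines can still generate costly coherency traffic.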
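
The latency benefit listed above can be estimated with the standard average memory access time formula: hit time plus miss rate multiplied by miss penalty. The sketch below applies it to made-up numbers; the function name `average_access_time` and the figures (1 ns hit, 100 ns main-memory access, 5% miss rate) are assumptions chosen only for illustration, not measurements of any real system.

```python
def average_access_time(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# Hypothetical figures, for illustration only.
MAIN_MEMORY_NS = 100.0
with_cache = average_access_time(hit_time_ns=1.0, miss_rate=0.05,
                                 miss_penalty_ns=MAIN_MEMORY_NS)
speedup = MAIN_MEMORY_NS / with_cache  # compared with going to RAM every time
```

Under these assumptions the average access costs about 6 ns instead of 100 ns, which shows why even a small cache with a high hit rate matters so much.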

2. Disk Cache

Disk cache, also known as buffer cache, is a mechanism used to improve disk I/O performance by temporarily storing recently accessed data in memory. Let’s explore disk cache in-depth:

Characteristics

- RAM-based Storage: Disk cache utilizes a portion of random-access memory (RAM) to temporarily store data blocks that have been recently read from or written to a disk.

- Write-Back or Write-Through: Disk cache can operate in either write-back mode, where data is first written to cache and later flushed to disk, or write-through mode, where data is simultaneously written to cache and disk.

- Transparent Operation: Disk cache operates transparently to applications. The operating system or the disk controller intercepts disk I/O requests and caches data blocks without requiring explicit user intervention.

Functionality

- Read Caching:

- Disk cache caches recently read data blocks from disk into memory. When a read request is issued for a data block, the cache first checks if the block is present in memory (cache hit).

- If found, the data is quickly retrieved from cache. Otherwise, the block is read from the disk (cache miss) and stored in cache for future use.

- Write Caching:

- Disk cache can cache write operations to improve disk write performance. In write-back mode, data is initially written to cache and acknowledged to the application, reducing disk I/O latency.

- The cached data is later flushed to disk asynchronously. Write caching can improve application responsiveness and reduce write latency.

- Prefetching:

- Disk cache can prefetch data blocks into memory based on access patterns or predictive algorithms.

- By anticipating future access requests, prefetching helps reduce disk access latency and improve overall system performance.
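
The difference between write-back and write-through caching can be sketched as follows. This is a minimal model under stated assumptions: a plain dictionary stands in for the disk, and the class `CachedDisk` with its `write_back` flag and `flush` method is hypothetical rather than any operating system's actual interface.

```python
class CachedDisk:
    """Toy block cache in front of a 'disk' (a dict standing in for storage)."""

    def __init__(self, disk, write_back=True):
        self.disk = disk
        self.write_back = write_back
        self.cache = {}     # block number -> data held in memory
        self.dirty = set()  # blocks changed in cache but not yet written to disk

    def read(self, block):
        if block not in self.cache:      # cache miss: read the block from disk
            self.cache[block] = self.disk[block]
        return self.cache[block]

    def write(self, block, data):
        self.cache[block] = data
        if self.write_back:
            self.dirty.add(block)        # write-back: defer the disk write
        else:
            self.disk[block] = data      # write-through: update the disk now

    def flush(self):
        """Write all dirty blocks to disk and return how many were written."""
        for block in self.dirty:
            self.disk[block] = self.cache[block]
        count = len(self.dirty)
        self.dirty.clear()
        return count


disk = {0: b"old"}
cached = CachedDisk(disk, write_back=True)
cached.write(0, b"new")
before_flush = disk[0]   # the disk still holds the old data
flushed = cached.flush()
after_flush = disk[0]    # the deferred write has now reached the disk
```

The window between `write` and `flush` is what makes write-back fast and also what makes it risky: anything still marked dirty is lost if power fails before the flush.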

Types of Disk Cache

- Operating System Cache: Many operating systems maintain a disk cache as part of their file system implementation. The cache is managed by the operating system kernel and is used to buffer disk I/O operations.

- Disk Controller Cache: Some disk controllers feature built-in cache memory to accelerate disk operations. These controller caches can operate independently of the operating system cache and may offer additional features such as battery backup to protect cached data in case of power loss.

- Disk Cache Software: Third-party disk caching software can be installed on systems to augment or replace the built-in disk cache provided by the operating system. These software solutions often offer advanced caching algorithms and configuration options to optimize disk performance.

Use Cases and Benefits

- Improved Disk Performance: Disk cache reduces disk I/O latency by caching frequently accessed data blocks in memory, resulting in faster read and write operations.

- Enhanced System Responsiveness: By reducing the time required to access data from disk, disk cache improves overall system responsiveness and application performance.

- Reduced Disk Wear: Disk cache reduces the number of disk accesses, leading to less wear and tear on physical disk drives and potentially extending their lifespan.

- Optimized Resource Utilization: Disk cache helps optimize system resources by reducing the need for frequent disk accesses, freeing up CPU and disk bandwidth for other tasks.

3. Web Cache

Web cache is a mechanism used to store copies of web content such as HTML pages, images, and multimedia files locally on servers or client devices. When it runs on an intermediary server, it is also known as a web proxy cache. Let’s explore web cache in-depth:


Characteristics

- Proxy-based Storage: Web cache operates as an intermediary between clients (such as web browsers) and web servers, intercepting and caching web content requested by clients.

- Caching Policies: Web cache employs caching policies to determine which web content to cache and for how long. These policies may be based on factors such as content popularity, freshness, and cache size limitations.

- Hierarchical Caching: In large-scale deployments, web caches can be organized hierarchically, with lower-level caches serving individual clients and higher-level caches serving as intermediaries between lower-level caches and web servers.

Functionality

- Content Caching:

- Web cache stores copies of web content requested by clients locally on servers or client devices. When a client requests a web resource, the cache first checks if a cached copy is available (cache hit).

- If found, the cached content is served directly to the client, avoiding the need to fetch it from the original web server.

- If not found (cache miss), the cache retrieves the content from the web server and stores it locally for future requests.

- Cache Invalidation:

- Web cache implements mechanisms to invalidate stale or outdated cached content and ensure that clients receive the latest versions of web resources.

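- This may involve revalidating cached content with the origin server using HTTP validators such as Last-Modified or ETag: the cache sends a conditional request (If-Modified-Since or If-None-Match), and the server replies with 304 Not Modified if the cached copy is still current.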

- Content Compression:

- Some web caches support content compression techniques such as gzip or brotli to reduce the size of cached web content, thereby conserving storage space and improving cache efficiency.
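
ETag-based revalidation can be sketched without any network code. In the example below, the `Origin` class is a stand-in for a web server and `RevalidatingCache` for a cache; both names are hypothetical, and deriving the ETag from a SHA-256 hash of the body is just one possible choice. For simplicity the cache revalidates on every request, whereas real caches normally consult freshness information first and only revalidate once an entry is stale.

```python
import hashlib


class Origin:
    """Stand-in for a web server that serves one resource with an ETag."""

    def __init__(self, body):
        self.body = body
        self.full_responses = 0  # how many times the full body was sent

    def get(self, if_none_match=None):
        """Return (status, etag, body), mimicking HTTP 200 / 304 behaviour."""
        current = hashlib.sha256(self.body).hexdigest()
        if if_none_match == current:
            return 304, current, None  # Not Modified: no body is sent
        self.full_responses += 1
        return 200, current, self.body


class RevalidatingCache:
    """Keeps one cached copy and revalidates it with the origin on each fetch."""

    def __init__(self, origin):
        self.origin = origin
        self.etag = None
        self.body = None

    def fetch(self):
        status, etag, body = self.origin.get(if_none_match=self.etag)
        if status == 200:  # new or changed content: store it
            self.etag, self.body = etag, body
        return self.body   # on 304 the cached copy is still valid


origin = Origin(b"<h1>v1</h1>")
web_cache = RevalidatingCache(origin)
first = web_cache.fetch()    # full response, stored in the cache
second = web_cache.fetch()   # 304: served from the cached copy
origin.body = b"<h1>v2</h1>"
third = web_cache.fetch()    # ETag no longer matches: full response again
```

Only two of the three fetches transfer the body, which is where the bandwidth saving of revalidation comes from.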

Types of Web Cache

- Proxy Cache:

- Proxy caches are deployed at network edges or within organizations’ network infrastructure to intercept and cache web content requested by clients.

- They serve as intermediaries between clients and web servers, caching content to reduce latency and bandwidth usage.

- Browser Cache:

- Web browsers maintain their own local cache of web content, storing copies of previously visited web pages, images, and other resources.

- Browser cache helps speed up subsequent visits to the same websites by serving cached content directly from the local storage.

- Content Delivery Network (CDN) Cache:

- CDNs operate distributed networks of edge servers located in geographically dispersed locations.

- These edge servers cache copies of web content and serve them to users based on their geographic proximity, reducing latency and improving content delivery speed.

Use Cases and Benefits

- Faster Web Browsing: Web cache accelerates web browsing by delivering cached content directly to users, reducing the time required to fetch resources from remote web servers.

- Bandwidth Conservation: By serving cached content locally, web cache reduces the need for repeated downloads from remote servers, conserving network bandwidth and reducing internet traffic.

- Improved Website Performance: Web cache reduces server load and improves website performance by offloading content delivery tasks to local cache servers, thereby enhancing scalability and responsiveness.

- Reduced Latency: Caching frequently accessed web content locally minimizes the latency associated with fetching resources from distant web servers, resulting in faster page load times and improved user experience.

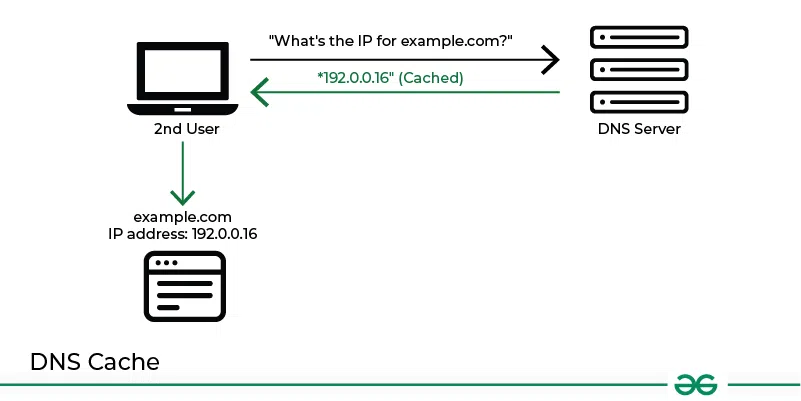

4. DNS Cache

DNS cache, also known as a resolver cache, is a mechanism used to temporarily store recently resolved domain name-to-IP address mappings locally on client devices or DNS servers. Let’s explore DNS cache in-depth:

Characteristics

- Local Storage: DNS cache resides locally on client devices or DNS servers, storing recently resolved domain name-to-IP address mappings for a configurable period.

- Time-to-Live (TTL): Each cached DNS record is associated with a Time-to-Live (TTL) value, specifying how long the record should be cached before it expires. Once the TTL expires, the cached record is discarded, and the DNS resolver must query the authoritative DNS servers to obtain updated information.

- Hierarchical Caching: DNS cache can be hierarchical, with local DNS caches serving individual client devices and intermediate DNS servers caching resolved DNS records on behalf of multiple clients.

Functionality

- DNS Resolution Caching:

- DNS cache stores recently resolved domain name-to-IP address mappings locally on client devices or DNS servers.

- When a client requests the IP address corresponding to a domain name, the DNS resolver first checks its local cache for a cached mapping (cache hit).

- If found, the resolver returns the cached IP address to the client, avoiding the need to query external DNS servers.

- If not found (cache miss), the resolver queries upstream DNS servers (typically a recursive resolver, which contacts the authoritative DNS servers as needed) and caches the resolved mapping for future use.

- Negative Caching:

- DNS cache also caches negative responses, such as DNS resolution failures (e.g., domain does not exist).

- This helps reduce the load on authoritative DNS servers and improves DNS resolution efficiency.
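
TTL-based expiry and negative caching can be sketched together. This is a simplified model, not a real resolver: the `DnsCache` class, the `lookup` callback, and the fixed `negative_ttl` are all assumptions made for illustration (real resolvers take the negative-caching lifetime from the zone's data), and `192.0.2.10` is an address from a range reserved for documentation.

```python
import time


class DnsCache:
    """Toy resolver cache with per-record TTLs and negative caching."""

    def __init__(self, lookup, negative_ttl=30.0, clock=time.monotonic):
        self.lookup = lookup  # hypothetical upstream: name -> (ip or None, ttl)
        self.negative_ttl = negative_ttl
        self.clock = clock
        self.records = {}     # name -> (ip or None, expiry time)

    def resolve(self, name):
        entry = self.records.get(name)
        if entry is not None and self.clock() < entry[1]:
            return entry[0]           # cache hit (may be a cached "no such name")
        ip, ttl = self.lookup(name)   # miss or expired entry: ask upstream
        if ip is None:
            ttl = self.negative_ttl   # negative answer: remember the failure briefly
        self.records[name] = (ip, self.clock() + ttl)
        return ip


now = [0.0]   # fake clock so the example does not need to sleep
queries = []  # names that actually reached the upstream server


def fake_upstream(name):
    queries.append(name)
    table = {"example.com": ("192.0.2.10", 60.0)}
    return table.get(name, (None, 0.0))


dns = DnsCache(fake_upstream, clock=lambda: now[0])
dns.resolve("example.com")   # miss: upstream is queried
dns.resolve("example.com")   # hit: answered from the cache
dns.resolve("missing.test")  # miss: the negative answer is cached
dns.resolve("missing.test")  # hit on the negative entry
now[0] = 61.0                # both entries have expired by now
dns.resolve("example.com")   # expired: upstream is queried again
```

Five lookups result in only three upstream queries, and the repeated lookup of a non-existent name is absorbed by the negative entry.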

Types of DNS Cache

- Client-Side DNS Cache:

- Client devices, such as computers, smartphones, and routers, maintain their own local DNS caches.

- These caches store resolved DNS records for recently visited domain names, speeding up subsequent DNS lookups and improving browsing performance.

- DNS Server Cache:

- DNS servers, including recursive DNS servers operated by internet service providers (ISPs) and public DNS resolvers like Google Public DNS and OpenDNS, maintain their own DNS caches.

- These caches store resolved DNS records on behalf of multiple clients, reducing DNS resolution latency and improving overall network performance.

Use Cases and Benefits

- Faster DNS Resolution: DNS cache accelerates DNS resolution by caching recently resolved domain name-to-IP address mappings locally, reducing the time required to fetch mappings from external DNS servers.

- Improved Network Performance: By caching DNS records locally, DNS cache reduces DNS resolution latency and improves overall network performance, particularly for frequently visited websites and domains.

- Reduced DNS Query Traffic: DNS cache reduces the volume of DNS queries sent to authoritative DNS servers by serving cached DNS records locally. This helps alleviate the load on DNS infrastructure and enhances DNS resolution efficiency.

- Enhanced Reliability: DNS cache enhances DNS resolution reliability by continuing to serve cached mappings while authoritative DNS servers are briefly unreachable or experiencing downtime, as long as the cached records have not yet expired. This improves system availability and helps maintain access to web services and resources during short outages.

Best Practices for Cache Management

Effective cache management is essential for optimizing system performance, ensuring data consistency, and minimizing resource usage. Here are some best practices for cache management:

- Identify Performance Hotspots:

- Analyze your application’s performance to identify areas where caching can provide the most significant performance improvements. Focus on frequently accessed data or computationally intensive operations that can benefit from caching.

- Use Caching Strategically:

- Implement caching selectively for data or operations that are accessed frequently but change infrequently. Avoid caching data that is volatile or frequently updated, as it may lead to stale cache entries and data inconsistency.

- Cache Invalidation:

- Implement cache invalidation mechanisms to ensure that cached data remains up-to-date. Use techniques such as time-based expiration, versioning, or event-driven invalidation to refresh cache entries when underlying data changes.

- Eviction Policies:

- Choose appropriate eviction policies to manage cache size and ensure efficient cache utilization. Common eviction policies include Least Recently Used (LRU), Least Frequently Used (LFU), and Time-to-Live (TTL) expiration.

- Select the eviction policy that best suits your application’s access patterns and data retention requirements.

- Cache Sizing:

- Size your cache appropriately based on available memory resources and expected workload characteristics.

- Oversized caches may lead to memory pressure and increased garbage collection overhead, while undersized caches may result in frequent cache misses and reduced performance.

- Fallback Mechanisms:

- Implement fallback mechanisms to handle cache misses gracefully.

- When a cache miss occurs, fall back to the underlying data source (e.g., database, file system) to retrieve the required data.

- Use caching as a performance optimization, but ensure that your application remains functional even in the absence of cached data.

- Security Considerations:

- Consider security implications when caching sensitive or confidential data. Implement appropriate access controls, encryption, and data masking techniques to protect cached data from unauthorized access or exposure.
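
Several of these practices can be combined in a few lines. The sketch below shows LRU eviction, a fallback to the underlying data source on a miss, and explicit invalidation. The `LRUCache` class and the `load_from_db` function are hypothetical names used for illustration, and the "database" is just a function that upper-cases the key.

```python
from collections import OrderedDict


class LRUCache:
    """Small LRU cache that falls back to a loader function on a miss."""

    def __init__(self, capacity, load):
        self.capacity = capacity
        self.load = load              # fallback to the underlying data source
        self.entries = OrderedDict()  # ordered from least to most recently used

    def get(self, key):
        if key in self.entries:
            self.entries.move_to_end(key)     # mark as most recently used
            return self.entries[key]
        value = self.load(key)                # cache miss: go to the source
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used
        return value

    def invalidate(self, key):
        """Drop an entry, for example after the underlying data has changed."""
        self.entries.pop(key, None)


loads = []  # keys that had to be fetched from the "database"


def load_from_db(key):
    loads.append(key)
    return key.upper()


lru = LRUCache(capacity=2, load=load_from_db)
lru.get("a")
lru.get("b")
lru.get("a")  # hit: "a" becomes the most recently used entry
lru.get("c")  # miss: the cache is full, so "b" is evicted
lru.get("a")  # still cached
lru.get("b")  # miss again: "b" was evicted earlier, and now "c" is evicted
```

Because every miss goes through `load`, the application keeps working even when the cache is empty or has just been invalidated; the cache only changes how often the data source is consulted.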
