Setup API for GeeksforGeeks user data using WebScraping and Flask

Last Updated: 25 Apr, 2019

Prerequisite: WebScraping in Python, Introduction to Flask

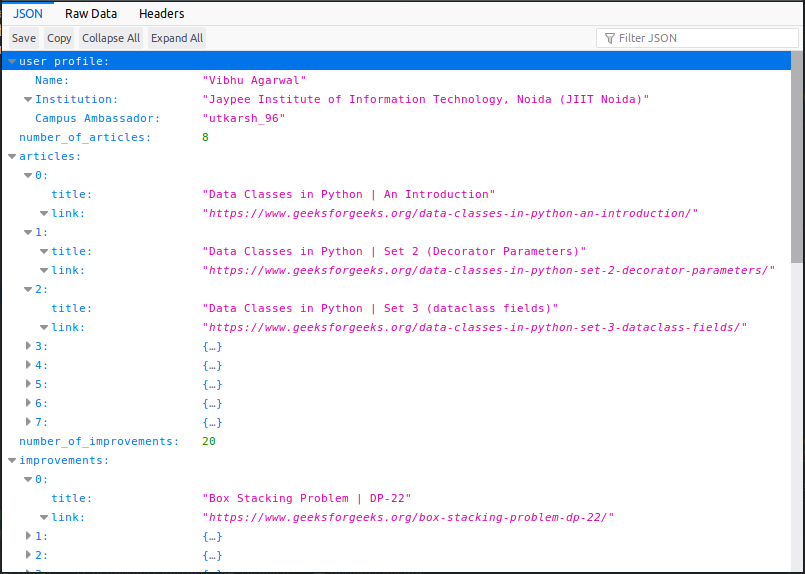

In this post, we will discuss how to get information about a GeeksforGeeks user using web scraping and serve the information as an API using Python’s micro-framework, Flask.

Step #1: Visit the auth profile

To scrape a website, the first step is to visit the website.

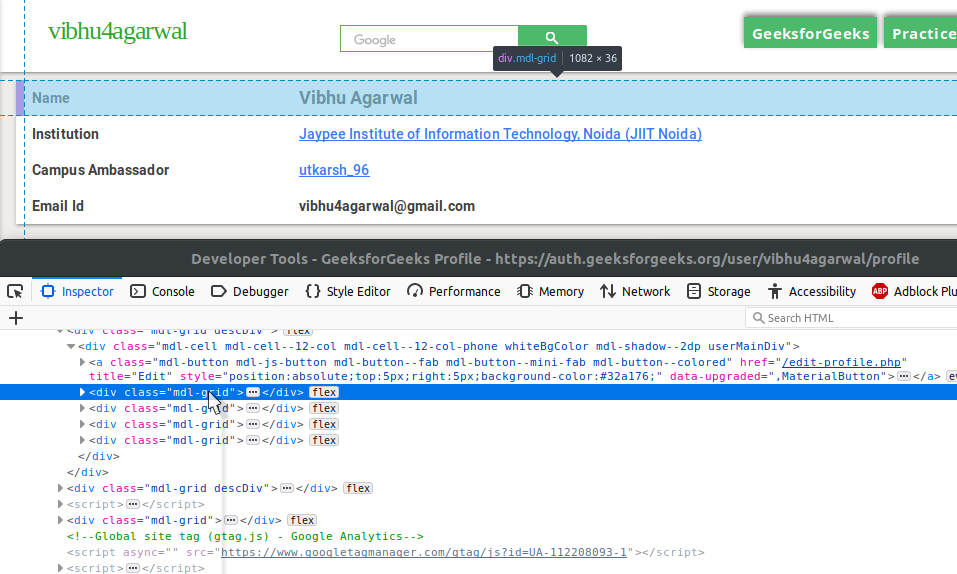
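Since every later step starts from the profile page's address, here is a minimal sketch of building that address from a user handle. The URL pattern and the helper name `build_profile_url` are assumptions made for illustration; check them against the page you actually visit in the browser.

```python
from urllib.parse import quote

# Assumed URL pattern for a user's auth profile page (not confirmed by the site).
PROFILE_URL_TEMPLATE = 'https://auth.geeksforgeeks.org/user/{}/profile'


def build_profile_url(user_handle):
    """Return the profile URL for a handle, percent-encoding unsafe characters."""
    return PROFILE_URL_TEMPLATE.format(quote(user_handle, safe=''))


print(build_profile_url('some_handle'))
```

Encoding the handle keeps characters such as spaces or slashes from changing the path that gets requested.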

Step #2: Inspect the Page Source

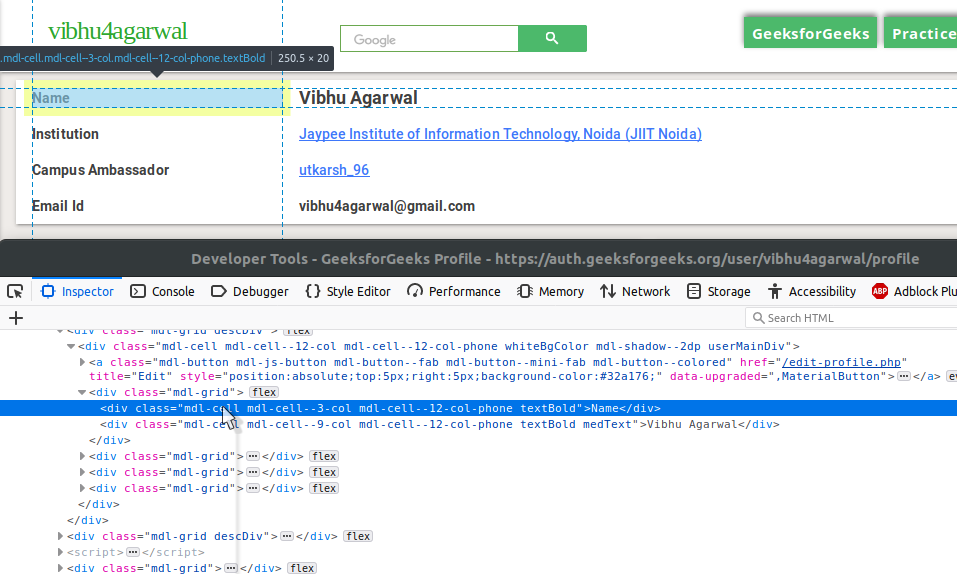

In the above image, you can spot the descDiv div, which contains the user data. In the following image, spot the four mdl-grid divs, one for each piece of information.

Look deeper and you will find two blocks inside each of them: the attribute and its corresponding value. Together they make up the user-profile data.

The following function collects all of this and returns it as a dictionary.

    def get_profile_detail(user_handle):
        # Assumed URL pattern for the auth profile page from Step #1;
        # adjust it if the site layout has changed.
        url = 'https://auth.geeksforgeeks.org/user/{}/profile'.format(user_handle)
        response = requests.get(url)
        soup = BeautifulSoup(response.content, 'html5lib')

        # The descDiv div wraps all of the user data.
        description_div = soup.find('div', {'class': 'descDiv'})
        if not description_div:
            return None

        user_details_div = description_div.find('div', {'class': 'mdl-cell'})
        specific_details = user_details_div.find_all('div', {'class': 'mdl-grid'})

        user_profile = {}
        for detail_div in specific_details:
            # Each mdl-grid holds two cells: the attribute and its value.
            block = detail_div.find_all('div', {'class': 'mdl-cell'})
            attribute = block[0].text.strip()
            value = block[1].text.strip()
            user_profile[attribute] = value

        return {'user profile': user_profile}

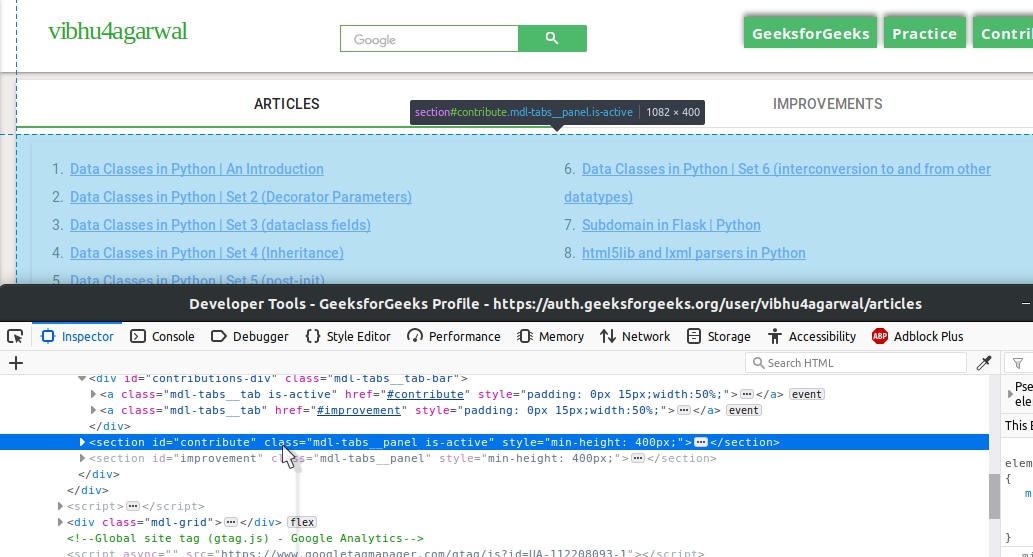

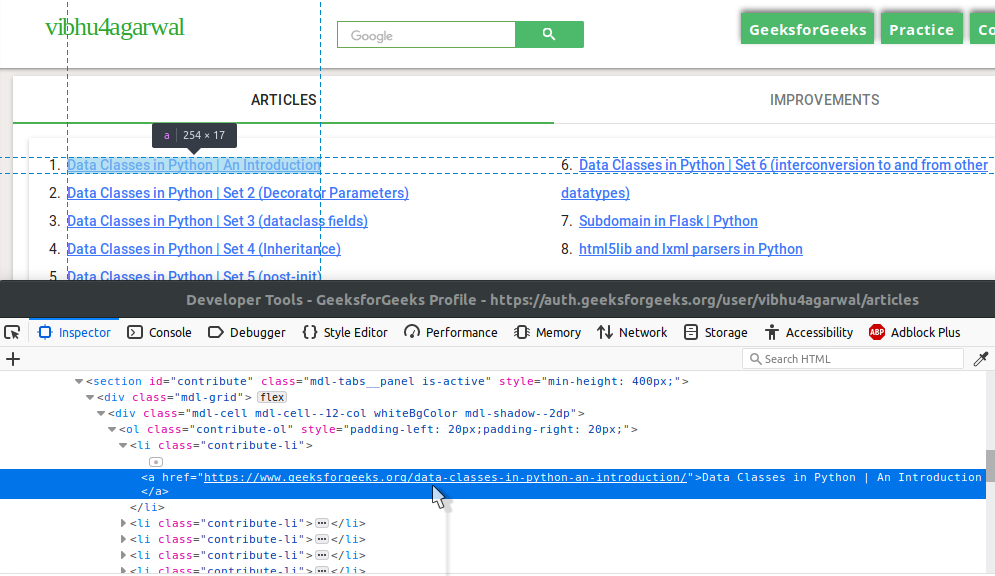
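To see the attribute/value pairing without sending a request, the sketch below runs on a small hand-written snippet that only imitates the nesting described above (descDiv, then mdl-cell, then mdl-grid rows with two cells each). The sample HTML and its values are hypothetical, and the parser is Python's built-in `html.parser` rather than BeautifulSoup, so that it runs without extra packages.

```python
from html.parser import HTMLParser

# Hypothetical markup that mimics the structure described in Step #2.
SAMPLE_HTML = """
<div class="descDiv">
  <div class="mdl-cell">
    <div class="mdl-grid">
      <div class="mdl-cell">Institution</div>
      <div class="mdl-cell">Example University</div>
    </div>
    <div class="mdl-grid">
      <div class="mdl-cell">Rank</div>
      <div class="mdl-cell">42</div>
    </div>
  </div>
</div>
"""


class ProfileParser(HTMLParser):
    def __init__(self):
        super().__init__()
        self.stack = []    # class lists of the <div> tags currently open
        self.row = []      # cell texts of the mdl-grid being read
        self.cell = []     # text fragments of the cell being read
        self.profile = {}  # attribute -> value

    def _in_value_cell(self):
        # True when the innermost div is an mdl-cell directly inside an mdl-grid.
        return (len(self.stack) >= 2
                and 'mdl-cell' in self.stack[-1]
                and 'mdl-grid' in self.stack[-2])

    def handle_starttag(self, tag, attrs):
        if tag == 'div':
            self.stack.append((dict(attrs).get('class') or '').split())

    def handle_data(self, data):
        if self._in_value_cell():
            self.cell.append(data)

    def handle_endtag(self, tag):
        if tag != 'div' or not self.stack:
            return
        if self._in_value_cell():
            # A cell just closed: store its stripped text in the current row.
            self.row.append(''.join(self.cell).strip())
            self.cell = []
        closed = self.stack.pop()
        if 'mdl-grid' in closed:
            # A row just closed: first cell is the attribute, second the value.
            if len(self.row) >= 2:
                self.profile[self.row[0]] = self.row[1]
            self.row = []


parser = ProfileParser()
parser.feed(SAMPLE_HTML)
print({'user profile': parser.profile})
```

The dictionary has the same shape as the one returned by get_profile_detail, which makes the snippet handy for checking the pairing logic offline.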

Step #3: Articles and Improvements list

This time, try to spot the various tags yourself.

If you could spot the relevant HTML elements, you can easily write the code for scraping them too.

If you couldn’t, here’s the code to help you.

    def get_articles_and_improvements(user_handle):
        articles_and_improvements = {}

        # Assumed URL pattern for the user's articles page; adjust if needed.
        url = 'https://auth.geeksforgeeks.org/user/{}/articles'.format(user_handle)
        response = requests.get(url)
        soup = BeautifulSoup(response.content, 'html5lib')

        contribute_section = soup.find('section', {'id': 'contribute'})
        improvement_section = soup.find('section', {'id': 'improvement'})

        # Guard against a missing section before looking for its list.
        contribution_list = contribute_section.find('ol') if contribute_section else None
        number_of_articles = 0
        articles = []
        if contribution_list:
            article_links = contribution_list.find_all('a')
            number_of_articles = len(article_links)
            for article in article_links:
                article_obj = {'title': article.text,
                               'link': article['href']}
                articles.append(article_obj)

        articles_and_improvements['number_of_articles'] = number_of_articles
        articles_and_improvements['articles'] = articles

        improvement_list = improvement_section.find('ol') if improvement_section else None
        number_of_improvements = 0
        improvements = []
        if improvement_list:
            improvement_links = improvement_list.find_all('a')
            # Count the links, not the raw children of the <ol> tag.
            number_of_improvements = len(improvement_links)
            for improvement in improvement_links:
                improvement_obj = {'title': improvement.text,
                                   'link': improvement['href']}
                improvements.append(improvement_obj)

        articles_and_improvements['number_of_improvements'] = number_of_improvements
        articles_and_improvements['improvements'] = improvements

        return articles_and_improvements
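If the tags were hard to spot, the sketch below shows the structure the function above relies on: a section with id contribute and one with id improvement, each optionally holding an ordered list of links. The sample markup, titles, and example.com links are hypothetical, and the standard library's `html.parser` stands in for BeautifulSoup so the snippet runs offline.

```python
from html.parser import HTMLParser

# Hypothetical markup: one section with a list of links, one without a list.
SAMPLE_HTML = """
<section id="contribute">
  <ol>
    <li><a href="https://example.com/first-article/">First article</a></li>
    <li><a href="https://example.com/second-article/">Second article</a></li>
  </ol>
</section>
<section id="improvement">
  <p>No improvements yet.</p>
</section>
"""


class SectionLinkParser(HTMLParser):
    def __init__(self):
        super().__init__()
        self.section = None   # id of the <section> currently open
        self.in_list = False  # True while inside an <ol>
        self.in_link = False  # True while inside a collected <a>
        self.links = {}       # section id -> list of {'title', 'link'} dicts

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == 'section':
            self.section = attrs.get('id')
            self.links.setdefault(self.section, [])
        elif tag == 'ol':
            self.in_list = True
        elif tag == 'a' and self.section and self.in_list:
            self.in_link = True
            self.links[self.section].append(
                {'title': '', 'link': attrs.get('href')})

    def handle_data(self, data):
        if self.in_link:
            self.links[self.section][-1]['title'] += data

    def handle_endtag(self, tag):
        if tag == 'a':
            self.in_link = False
        elif tag == 'ol':
            self.in_list = False
        elif tag == 'section':
            self.section = None


parser = SectionLinkParser()
parser.feed(SAMPLE_HTML)
articles = parser.links.get('contribute', [])
improvements = parser.links.get('improvement', [])
result = {'number_of_articles': len(articles), 'articles': articles,
          'number_of_improvements': len(improvements),
          'improvements': improvements}
print(result)
```

A section without an ordered list simply yields an empty list and a count of zero, which matches the defaults used in the scraping function.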

Step #4: Setup Flask

The web scraping code is complete. Now it’s time to set up our Flask server. Here’s the setup for the Flask app, along with the libraries needed for the entire script.

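    from bs4 import BeautifulSoup
    import requests
    from flask import Flask, jsonify, make_response

    app = Flask(__name__)
    # Keep JSON keys in insertion order instead of sorting them alphabetically.
    # On Flask 2.3 and later, set app.json.sort_keys = False instead.
    app.config['JSON_SORT_KEYS'] = False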

Step #5: Setting Up for API

Now that we’ve got the appropriate functions, our only task is to combine both of their results, convert the dictionary to JSON and then serve it on the server.
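The combine-and-convert step can be sketched with plain dictionaries and the standard `json` module. The two input dictionaries below are hypothetical placeholders that only imitate the shape of what the scraping functions return.

```python
import json

# Hypothetical stand-ins for the results of the two scraping functions.
profile = {'user profile': {'Institution': 'Example University'}}
extras = {'number_of_articles': 0, 'articles': [],
          'number_of_improvements': 0, 'improvements': []}

# Copy the profile first so the original dictionary is left untouched.
combined = dict(profile)
combined.update(extras)

# json.dumps keeps insertion order unless sort_keys=True is passed.
body = json.dumps(combined)
print(body)
```

Keeping the keys unsorted means the profile comes first in the output, followed by the article and improvement data, in the order they were added.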

Here’s the code for an endpoint that serves the API based on the user handle it receives. Remember that the endpoint can receive an invalid user handle at any time, so we need to handle that case too.

    @app.route('/<user_handle>/')
    def home(user_handle):
        response = get_profile_detail(user_handle)
        if response:
            # Known user: merge the article data into the profile data.
            response.update(get_articles_and_improvements(user_handle))
            api_response = make_response(jsonify(response), 200)
        else:
            # Unknown handle: reply with a 404 and an explanatory message.
            response = {'message': 'No such user with the specified handle'}
            api_response = make_response(jsonify(response), 404)

        api_response.headers['Content-Type'] = 'application/json'
        return api_response
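The two branches of the endpoint can also be checked without starting Flask. The sketch below pulls the decision into a plain function; the name `build_api_result` and the `extras_loader` callback are hypothetical and introduced only for this illustration.

```python
def build_api_result(profile, extras_loader):
    """Return (payload, status_code), mirroring the endpoint's two branches."""
    if profile:
        # Known user: merge the extra data into a copy of the profile.
        payload = dict(profile)
        payload.update(extras_loader())
        return payload, 200
    # Unknown handle: same message and status code as the endpoint.
    return {'message': 'No such user with the specified handle'}, 404


# A missing profile (None) leads to the 404 branch.
print(build_api_result(None, lambda: {}))

# A found profile is merged with the extra data and returned with 200.
print(build_api_result({'user profile': {}}, lambda: {'articles': []}))
```

Passing the second lookup as a callback means it only runs when the profile was found, just as the endpoint only calls get_articles_and_improvements for a valid handle.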

Combining all the code, you’ve got a fully functioning server that serves a dynamic API.
