Jenkins is a Java-based open-source automation platform with plugins designed for continuous integration. It is used to continually create and test software projects. If you are even slightly aware of Jenkins, then you must know that continuous integration is one of the most important parts of DevOps and the jobs in this field are high in demand.

If you are preparing for jobs in the DevOps domain, you have arrived at the right place. Through extensive research and consultation from experts, we have compiled a list of the 50 most frequently asked Jenkins interview questions in increasing order of difficulty for Freshers, Intermediate-level, and Experienced candidates.

Let’s begin with some basic Jenkins Interview questions for aspiring DevOps engineers.

Basic Jenkins Interview Questions for Freshers

1. What Is Jenkins Used For?

Jenkins is used for automating software development tasks such as code compilation, testing, code quality checks, artifact creation, and deployment. It streamlines the development process, ensuring reliability and quality by automating repetitive tasks in a DevOps context.

2. How To Trigger a Build In Jenkins Manually?

To manually trigger a build in Jenkins:

- Access the Jenkins Dashboard.

- Select the specific Jenkins job.

- Click “Build Now” to start the manual build.

- Provide build parameters if necessary.

- Confirm and monitor the build progress in real time.

- Review the build results on the job’s dashboard.

- Access build artifacts if applicable.

- Trigger additional builds as needed.

3. What Is The Default Path For The Jenkins Password When You Install It?

The default path for the Jenkins password when you install it can vary depending on your operating system and how you installed Jenkins. Here are the general default locations for the Jenkins password:

1. On Windows

If you installed Jenkins as a Windows service, the initial administrative password is typically stored in a file called initialAdminPassword inside the secrets directory within the Jenkins installation directory. The path may look something like this: C:\Program Files (x86)\Jenkins\secrets\initialAdminPassword.

2. On Linux/Unix

If you installed Jenkins using a package manager like apt or yum, the initial password might not be stored directly on the file system. In this case, you can typically find it in the console output when you start Jenkins for the first time. You’ll see a message that includes the initial password.

If you installed Jenkins manually, you might need to check the Jenkins home directory, which is often located at /var/lib/jenkins. Within this directory, you can find the secrets directory and, inside it, the initialAdminPassword file.

3. On MacOS

If you installed Jenkins on MacOS, the initial password is generally located in the same way as on Linux or Unix systems. You can look in the secrets directory within the Jenkins home directory.

Please note that these paths can change depending on your Jenkins installation method and configuration, so it’s a good idea to refer to the documentation or installation instructions specific to your setup if you encounter any issues locating the initial Jenkins password.

4. How To Integrate Git With Jenkins?

To integrate Git with Jenkins:

- Install the “Git Plugin” in Jenkins through the plugin manager.

- Configure Git in the global tool configuration, ensuring automatic installation is enabled.

- Create or configure a Jenkins job, selecting Git as the version control system.

- Specify the Git repository URL and, if necessary, credentials for authentication.

- Define the branches to monitor and build.

- Set up build triggers as needed.

- Save the job configuration and trigger builds manually or automatically based on your settings.

- Monitor build progress and results in the Jenkins dashboard.

5. What Does “Poll SCM” Mean In Jenkins?

In Jenkins, “poll SCM” means periodically checking a version control system (e.g., Git) for changes. You can schedule how often Jenkins checks for updates. When changes are detected, Jenkins triggers a build, making it a key feature for continuous integration, scheduled tasks, and automated response to code changes.

6. How To Schedule Jenkins Build Periodically (hourly, daily, weekly)? Explain the Jenkins schedule format.

To schedule Jenkins builds periodically at specific intervals, you can use the built-in scheduling feature. Jenkins uses a cron-like syntax for scheduling, allowing you to specify when and how often your builds should run. Here’s a detailed explanation of the Jenkins schedule format and how to schedule builds:

1. Jenkins Schedule Format

The Jenkins schedule format closely resembles the familiar cron syntax, with a few minor differences. A typical Jenkins schedule consists of five fields, representing minute, hour, day of the month, month, and day of the week, in that order:

Here’s what each field means:

- Minute (0 – 59): Specifies the minute of the hour when the build should run (e.g., 0 for the top of the hour, 30 for the half-hour).

- Hour (0 – 23): Specifies the hour of the day when the build should run (e.g., 1 for 1 AM, 13 for 1 PM).

- Day of the month (1 – 31): Specifies the day of the month when the build should run (e.g., 1 for the 1st day of the month, 15 for the 15th day).

- Month (1 – 12): Specifies the month when the build should run (e.g., 1 for January, 12 for December).

- Day of the week (0 – 7): Specifies the day of the week when the build should run (e.g., 0 or 7 for Sunday, 1 for Monday, and so on).

Scheduling Examples:

Now, let’s look at some scheduling examples:

| 0 0 * * * |

Schedules a build every day at midnight (00:00). |

| 30 * * * * |

Schedules a build every hour at the 30th minute (e.g., 1:30 AM, 2:30 AM). |

| 0 15 * * 1 |

Schedules a build every Monday at 3 PM. |

| 0 8,20 * * * |

Schedules a build every day at 8 AM and 8 PM. |

| 30 22 * * 5 |

Schedules a build every Friday at 10:30 PM. |

Configuring The Schedule In Jenkins

To schedule a build in Jenkins:

- Open your Jenkins job’s configuration page.

- In the “Build Triggers” section, check the “Build periodically” option.

- In the text box that appears, enter your desired schedule using the cron-like syntax.

For example, to schedule a daily build at midnight (00:00), enter 0 0 * * *. Make sure to include the five fields in the schedule.

- Click “Save” to apply the schedule.

Jenkins will now automatically trigger your builds according to the specified schedule. You can use this scheduling feature to automate tasks, such as nightly builds, daily backups, or any other recurring job that fits your project’s needs.

7. What Is Jenkins Home Directory Path?

The Jenkins home directory is where Jenkins stores its critical data, including job configurations, logs, plugins, and more. The location of this directory varies by operating system but can typically be found at:

- Linux/Unix: /var/lib/jenkins

- Windows: C:\Users<YourUsername>.jenkins

- macOS: /Users/<YourUsername>/.jenkins

You can configure its location during installation or in the Jenkins startup script. Understanding this directory is essential for managing and backing up Jenkins data.

8. How To Integrate Slack With Jenkins?

To integrate Slack with Jenkins for notifications:

- Set up a Slack Incoming Webhook in your Slack workspace to get a Webhook URL

- Install the “Slack Notification” plugin in Jenkins.

- Configure Jenkins global Slack settings by adding the Slack Webhook URL.

- In your Jenkins job configuration, add a “Slack Notifications” post-build action.

- Specify the Slack channel, customize message options, and select notification preferences (e.g., success, failure).

- Save the job configuration.

- Run a build, and Jenkins will send notifications to the specified Slack channel based on build results.

- Now, Jenkins is integrated with Slack, providing real-time notifications to keep your team informed about build status and progress.

9. What Is A Jenkins Agent?

A Jenkins agent, also called a Jenkins slave or node, is a separate machine or resource that collaborates with a Jenkins master to execute jobs and build tasks. Agents enable parallel and distributed builds, scaling Jenkins’ capacity.

They register with the master, get assigned jobs, execute them on their own hardware or VMs, and report back results. Agents can run on various platforms, making it possible to test and build in different environments.

10. How To Restart Jenkins?

To restart Jenkins, you can follow these steps:

Method 1.Using the Jenkins Web Interface (if available):

- Open a web browser and navigate to your Jenkins server’s URL.

- Log in to the Jenkins web interface if required.

- In the top-right corner, you may see a “Restart” option. Click on it to initiate the restart process.

- Jenkins will display a confirmation dialog. Confirm that you want to restart Jenkins.

Method 2.Using Command Line (Linux/Unix):

- If you have SSH access to the server where Jenkins is installed, you can use the following commands:

- Open a terminal or SSH into the Jenkins server.

- Run the following command with superuser privileges (e.g., using sudo):

sudo systemctl restart jenkins

This command assumes that Jenkins is managed as a systemd service. If Jenkins is managed differently on your system, you may need to use an alternative command.

Step 3. Using Command Line (Windows):

On Windows, you can restart Jenkins as a service using the following commands:

- Open a Command Prompt or PowerShell window with administrator privileges.

- Stop the Jenkins service:

net stop “Jenkins”

- Start the Jenkins service:

net start “Jenkins”

Ensure that you use double quotes around “Jenkins” if the service name has spaces.

Method 4: Using a Container Or Other Deployment Method:

If Jenkins is running inside a container or managed through a different deployment method, you should refer to the specific instructions for that environment. The procedure for restarting Jenkins may differ based on the setup.

Please choose the method that best suits your Jenkins deployment and the level of access you have to the server or environment where Jenkins is installed.

11. What Is The Default Port Number For Jenkins?

The default port number for Jenkins is 8080. When you access the Jenkins web interface via a web browser, you typically use the URL: http://your_jenkins_server:8080/.

12. Types of build triggers in Jenkins.

Types of build triggers in Jenkins include:

- SCM Polling Trigger: Monitors source code repositories for changes and triggers builds.

- Scheduled Build Trigger: Runs jobs on a predefined schedule using cron-like syntax.

- Webhook Trigger: Listens for external events or notifications to start builds.

- Upstream/Downstream Trigger: Triggers downstream jobs based on the success of upstream jobs, creating build pipelines.

- Manual Build Trigger: Requires manual user intervention to start a job.

- Dependency Build Trigger: Triggers jobs when another job is completed, regardless of success or failure.

- Parameterized Trigger: Passes parameters from one job to another during triggering.

- Pipeline Trigger: Allows custom triggering logic within Jenkins Pipelines.

Using the right trigger type is crucial for automating and managing your CI/CD pipelines effectively.

13. What is the language used to write the Jenkins CI/CD pipeline?

Jenkins CI/CD pipelines are typically written using a domain-specific language called Groovy. Specifically, Jenkins uses the Jenkins Pipeline DSL (Domain-Specific Language), which is an extension of Groovy tailored for defining and orchestrating continuous integration and continuous delivery pipelines.

Here are some key points about the language used to write Jenkins CI/CD pipelines:

- Groovy: Groovy is a versatile and dynamic programming language that runs on the Java Virtual Machine (JVM). It is known for its simplicity and flexibility, making it well-suited for scripting and automation tasks.

- Declarative and Scripted Syntax: Jenkins Pipelines support two syntax flavours. Declarative and Scripted. Declarative syntax provides a simplified and structured way to define pipelines, while Scripted syntax allows for more fine-grained control and scripting capabilities.

- Pipeline DSL: The Jenkins Pipeline DSL provides a set of domain-specific constructs and functions for defining stages, steps, and post-build actions within a pipeline. It also includes built-in support for parallel execution, error handling, and integrations with various plugins.

- Pipeline as Code: Jenkins Pipelines are often referred to as “Pipeline as Code” because you define your build and deployment processes as code within a version-controlled file. This approach allows for versioning, code review, and collaboration on pipeline definitions.

- Version Control Integration: Jenkins Pipelines can be stored in version control repositories, such as Git. This integration allows you to manage and version your pipeline definitions alongside your application code.

- Customization: The Groovy-based Jenkins Pipeline DSL allows you to customize and extend your pipelines with custom functions, logic, and integrations. You can use existing Groovy libraries and create reusable components.

- IDE Support: Groovy is supported by various integrated development environments (IDEs), such as IntelliJ IDEA and Visual Studio Code, which provide code completion, syntax highlighting, and debugging capabilities for pipeline development.

- Shared Libraries: Jenkins allows you to define shared libraries written in Groovy, which can be used across multiple pipelines. Shared libraries enable code reuse and maintainability for common pipeline tasks.

In summary, Jenkins CI/CD pipelines are written using Groovy and the Jenkins Pipeline DSL, which provides a powerful and flexible way to define and automate your continuous integration and delivery workflows. Groovy’s ease of use and Jenkins’ robust features make it a popular choice for the pipeline as code implementations.

14. What is the difference between Continuous Delivery and Continuous Deployment?

Continuous Delivery (CD) and Continuous Deployment (CD) are two distinct practices in the DevOps and software development lifecycle, but they are closely related. Here are the key differences between the two:

| Continuous Delivery is a software development practice that focuses on automating the process of delivering code changes to production-like environments (staging or testing environments) after passing through the entire pipeline of build, test, and deployment. |

Continuous Deployment is an extension of Continuous Delivery. It is a practice where code changes that pass automated tests are automatically and immediately deployed to the production environment without requiring manual intervention or approval.

|

| In Continuous Delivery, the deployment to the production environment is not automated. Instead, it requires a manual trigger or approval process. The code is considered “production-ready” and can be deployed to the live environment, but this step is not automated. |

In Continuous Deployment, the deployment to the production environment is fully automated. As soon as code changes pass all automated tests, they are automatically released to the live environment.

|

| CD allows for human intervention and decision-making before deploying code to the production environment. It allows teams to assess the changes, perform final testing, and ensure that business requirements are met. |

CD eliminates the need for human intervention or approval in the production deployment process. If the automated tests pass, the code goes live. |

|

Continuous Delivery is often chosen in scenarios where organizations want to achieve a balance between rapid development and the need for human validation before releasing changes to customers. It reduces the risk of unexpected issues in production.

|

Continuous Deployment is often implemented by organizations that prioritize rapid delivery of new features and bug fixes to end-users. It is common in environments where there is a strong focus on continuous improvement and automation. |

In summary, the main difference between Continuous Delivery and Continuous Deployment is the level of automation and human intervention in the final deployment to the production environment. Continuous Delivery stops short of fully automated production deployment and includes a manual approval step, while Continuous Deployment automates the entire process, releasing code changes to production as soon as they pass automated tests. The choice between the two practices depends on an organization’s risk tolerance, release strategy, and the need for manual validation.

15. Explain about Master-Slave Configuration in Jenkins.

A Master-Slave configuration in Jenkins, also known as a Jenkins Master-Agent configuration, is a setup that allows Jenkins to distribute and manage its workload across multiple machines or nodes. In this configuration, there is a central Jenkins Master server, and multiple Jenkins Agent nodes (slaves) that are responsible for executing build jobs. This architecture offers several advantages, including scalability, parallelism, and the ability to run jobs in diverse environments.

Here’s an explanation of the key components and benefits of a Master-Slave configuration in Jenkins:

Components:

- Jenkins Master:

- The Jenkins Master is the central server responsible for managing and coordinating the entire Jenkins environment.

- It hosts the Jenkins web interface and handles the scheduling of build jobs, job configuration, and the storage of build logs and job history.

- The Master communicates with Jenkins Agents to delegate job execution and collects the results.

- Jenkins Agent (Slave)

- Jenkins Agents, often referred to as Jenkins Slaves or nodes, are remote machines or virtual instances that perform the actual build and testing tasks.

- Agents can run on various operating systems and environments, enabling the execution of jobs in different configurations.

- Agents are registered with the Jenkins Master and are available to accept job assignments.

Benefits:

- Scalability: Easily handle more build jobs by adding Agents.

- Parallelism: Run multiple jobs simultaneously for faster results.

- Resource isolation: Isolate jobs on different machines or environments.

- Load distribution: Distribute jobs for optimal resource use.

- Flexibility: Configure Agents for specific requirements.

- Resilience: Reassign jobs if an Agent becomes unavailable.

- Security and isolation: Control Agent access and resources.

- Support for diverse environments: Test on various platforms and setups.

This architecture streamlines CI/CD pipelines and enhances resource utilization.

16. How to maintain a CI/CD pipeline of Jenkins in GitHub?

To maintain a CI/CD pipeline in Jenkins with GitHub, follow these steps:

- Version control Jenkins configuration using Git.

- Define the pipeline with a Jenkinsfile in the project’s GitHub repository.

- Set up webhooks in GitHub to trigger Jenkins pipelines.

- Manage sensitive data securely with Jenkins credentials.

- Keep Jenkins plugins up to date for the latest features and security.

- Regularly review and update pipeline configurations.

- Include automated tests for pipeline configuration.

- Monitor build logs for issues and failures.

- Use version control for pipeline code to enable rollbacks.

- Consider Infrastructure as Code (IaC) for infrastructure provisioning.

- Maintain documentation for the CI/CD pipeline.

- Encourage collaboration and code reviews for pipeline improvements.

- Implement backups and disaster recovery plans.

- Ensure compliance and security in your CI/CD pipeline.

These steps will help you keep your Jenkins CI/CD pipeline up-to-date and reliable while integrating with your GitHub repository.

17. How would you design and implement a Continuous Integration and Continuous Deployment (CI/CD) pipeline for deploying applications to Kubernetes?

Designing and implementing a CI/CD pipeline for deploying applications to Kubernetes involves several key steps and considerations to ensure a smooth and automated deployment process. Below is a high-level guide on how to design and implement such a pipeline:

Step 1: Set Up a Version Control System (VCS)

- Use a version control system like Git to manage your application code and deployment configurations. Host your Git repository on a platform like GitHub or GitLab.

Step 2: Define Kubernetes Manifests

- Create Kubernetes manifests (YAML files) to describe your application’s deployment, services, ingress controllers, and other resources. Store these manifests in your Git repository.

Step 3: Choose a CI/CD Tool

- Select a CI/CD tool that integrates well with Kubernetes and your VCS. Popular choices include Jenkins, GitLab CI/CD, Travis CI, CircleCI, and others.

Step 4: Configure CI/CD Pipeline

- Define a CI/CD pipeline configuration file (e.g., .gitlab-ci.yml or Jenkinsfile) in your Git repository. This file specifies the stages and steps of your pipeline.

- Configure the pipeline to trigger code pushes to the VCS, merge requests, or other relevant events.

Step 5: Build and Test Stage

- In the initial stage of the pipeline, build your application container image. Use Docker or another containerization tool.

- Run tests against your application code to ensure its correctness. This stage may include unit tests, integration tests, and code quality checks.

Step 6: Container Registry

- Push the built container image to a container registry like Docker Hub, Google Container Registry, or an internal registry.

- Ensure that your pipeline securely manages registry credentials.

Step 7: Deployment Stage

- Deploy your application to Kubernetes clusters. This stage involves applying Kubernetes manifests to create or update resources.

- Use tools like kubectl or Kubernetes-native deployment tools like Helm to manage deployments.

- Implement a rolling update strategy to minimize downtime during deployments.

Step 8: Testing Stage

- After deploying to Kubernetes, perform additional tests, including end-to-end tests and smoke tests, to verify that the application runs correctly in the cluster.

Step 9: Promotion to Production

- Implement a promotion strategy to move successfully tested changes from staging to production environments. This can involve manual approval gates or automated processes.

Step 10: Monitoring and Logging

- Integrate monitoring and logging tools (e.g., Prometheus, Grafana, ELK stack) to track the health and performance of your applications in the Kubernetes cluster. – Implement alerting to notify teams of issues that require attention.

Step 11: Security and Access Control

- Implement security measures, including RBAC (Role-Based Access Control) and Pod Security Policies, to ensure that only authorized users and applications can access your cluster.

Step 12: Infrastructure as Code (IaC)

- Treat your Kubernetes cluster’s infrastructure as code using tools like Terraform or Kubernetes operators. This ensures that your cluster infrastructure is versioned and can be recreated as needed.

Step 13: Documentation and Training

- Document your CI/CD pipeline processes, including setup, configurations, and troubleshooting steps. Provide training to team members on pipeline usage and best practices.

Step 14: Continuous Improvement

- Continuously monitor and evaluate the effectiveness of your CI/CD pipeline. Seek feedback from the development and operations teams to identify areas for improvement. – Make incremental updates and optimizations to enhance the pipeline’s efficiency and reliability.

Step 15: Security Scans and Compliance

- Integrate security scanning tools into your pipeline to identify and address vulnerabilities in your application code and container images. – Ensure compliance with industry-specific regulations and security standards.

By following these steps and best practices, you can design and implement a robust CI/CD pipeline for deploying applications to Kubernetes. This pipeline automates the deployment process, ensures consistency, and enables rapid and reliable application delivery in a Kubernetes environment.

18. Explain about the multibranch pipeline in Jenkins.

A Multibranch Pipeline in Jenkins is a feature for managing CI/CD pipelines for multiple branches in a version control repository. It automatically creates pipelines for each branch or pull request, uses Jenkinsfiles to define pipeline configurations, supports parallel builds, and cleans up unused jobs. It simplifies managing and automating pipelines across various code branches and pull requests, streamlining the CI/CD process.

19. What is a Freestyle project in Jenkins?

A Freestyle project in Jenkins is a basic and user-friendly job type. It allows users to configure build jobs using a graphical interface without scripting. It’s suitable for simple build and automation tasks, supporting various build steps, post-build actions, and integration with plugins. While it’s easy to use, it may not be ideal for complex workflows, unlike Jenkins Pipeline jobs, which offer more flexibility and scripting capabilities.

20. What is a Multi-Configuration project in Jenkins?

A Multi-Configuration project in Jenkins, also known as a Matrix Project, is designed for testing or building a software project across multiple configurations simultaneously. It allows you to define axes representing different variations (e.g., operating systems, JDK versions) and Jenkins automatically tests or builds the project for all possible combinations of these configurations. It’s useful for cross-platform testing, version compatibility, browser testing, localization checks, and more, ensuring software works in diverse environments.

21. What is a Pipeline in Jenkins?

A Jenkins Pipeline is a series of code-defined steps that automate the Continuous Integration and Continuous Delivery (CI/CD) process. It allows you to define and manage your entire software delivery pipeline as code, using a declarative or scripted syntax. Pipelines cover continuous integration, delivery, and deployment, with support for parallel and sequential stages. They integrate with source control, allow customization, utilize build agents, and offer extensive plugin support. This approach promotes automation, collaboration, and repeatability, making software development and delivery more efficient and reliable.

22. How to mention the tools configured in the Jenkins pipeline?

In a Jenkins pipeline, you can mention the tools and configurations used by defining them in the pipeline script itself. This is typically done in the ‘tools’ section of your pipeline script. Below are the steps to mention and configure tools in a Jenkins pipeline:

Step1: Open or Create a Jenkinsfile

Ensure that you have a Jenkinsfile in your project repository. If you don’t have one, create a new file named Jenkinsfile in the root directory of your project.

Step 2: Define Pipeline and Tools Section

In the Jenkinsfile, define your pipeline using the pipeline block, and within that block, define a tools section. The tools section is used to specify which tools or tool installations should be available for the pipeline.

pipeline {

agent any

tools {

// Define the tools and their configurations here

// Example:

maven 'MavenTool' // Name of the tool and the tool installation name

jdk 'JDKTool' // Name of the tool and the tool installation name

}

stages {

// Define your pipeline stages here

stage('Build') {

steps {

// Use the configured tools in your pipeline stages

// Example:

script {

sh '''#!/bin/bash

echo "Building with Maven"

mvn clean package

'''

}

}

}

}

}

Step 3: Specify Tool Installations

In the tools section, specify the tools you want to use along with their installation names. The installation names should match the names configured in your Jenkins master’s tool configurations. For example, if you have defined a Maven installation named “MavenTool” and a JDK installation named “JDKTool” in Jenkins, you can reference them in your pipeline as shown above.

Step 4: Use the Configured Tools

In your pipeline stages, you can now use the configured tools. For example, if you specified a Maven tool, you can use it to build your project by invoking mvn with the configured Maven installation

stage('Build') {

steps {

sh '''#!/bin/bash

echo "Building with Naveen"

mvn clean package

'''

}

}

Step 5: Save and Commit

Save the Jenkinsfile and commit it to your version control system (e.g., Git). This ensures that your pipeline configuration is versioned and can be shared with your team.

Step 6: Run the Pipeline

Trigger the Jenkins pipeline, and it will automatically use the tools and configurations you specified to build, test, and deploy your project.

By following these steps and configuring tools within your Jenkins pipeline script, you ensure that your pipeline has access to the required tools and environments, making your builds and deployments consistent and reproducible.

23. What is the global tool configuration in Jenkins?

Global Tool Configuration in Jenkins refers to the centralized configuration of software tools and installations that can be used by all Jenkins jobs and pipelines across the Jenkins master server. It allows Jenkins administrators to set up and manage tool installations such as JDKs, build tools (e.g., Maven, Gradle), version control systems (e.g., Git, Subversion), and other utilities in a consistent and organized manner. This configuration is accessible from the Jenkins web interface and provides a convenient way to ensure that all Jenkins projects have access to the required tools.

24. Write a sample Jenkins pipeline example.

Here’s a simple Jenkins pipeline example written in Declarative Pipeline syntax. This example demonstrates a basic pipeline that checks out code from a Git repository, builds a Java project using Maven, and then archives the build artifacts:

pipeline {

agent any

stages {

stage('Checkout') {

steps {

checkout([$class: 'GitSCM', branches: [[name: '*/main']],

userRemoteConfigs: [[url: 'https://github.com/your/repository.git']]])

}

}

stage('Build') {

steps {

sh 'mvn clean package'

}

}

stage('Archive Artifacts') {

steps {

archiveArtifacts artifacts: 'target/*.jar', allowEmptyArchive: true

}

}

}

post {

success {

echo 'Pipeline completed successfully'

}

failure {

echo 'Pipeline failed'

}

}

}

In this pipeline

- The pipeline is defined using the pipeline block.

- It runs on any available agent (specified by agent any), meaning it can be executed on any available Jenkins agent or node.

- The pipeline has three stages: Checkout, Build, and Archive Artifacts.

- In the Checkout stage, the code is checked out from a Git repository using the checkout scm step. Replace ‘your-git-repo-url’ with the actual URL of your Git repository.

- In the Build stage, the maven tool is used to build a Java project. The sh ‘mvn clean package’ command executes the Maven build.

- The Archive Artifacts stage archives the built artifacts (JAR files in this example) using the archived artifacts step. The target/*.jar pattern should be adjusted to match the location of your project’s output.

- The post section defines post-build actions. In this example, it includes simple echo statements, but you can customize this section to trigger notifications or perform additional actions based on the build result (success or failure).

This is a basic Jenkins pipeline example, but Jenkins pipelines can be much more complex and versatile, depending on your project’s needs. You can extend and customize pipelines to include additional stages, steps, and integrations with other tools and services as required for your CI/CD process.

25 What is Jenkins_X?

Jenkins X (Jenkins X) is an open-source, cloud-native, and opinionated CI/CD (Continuous Integration/Continuous Deployment) solution designed specifically for Kubernetes-based applications and microservices. It’s important to note that Jenkins X is a separate project and not an evolution of the traditional Jenkins CI/CD tool. While they share the Jenkins name, they have different goals and architecture.

Jenkins X is purpose-built for Kubernetes-native CI/CD, with a focus on modern container technologies and Kubernetes orchestration. Its key features and aspects include:

- Kubernetes-Centric: Jenkins X is tightly integrated with Kubernetes, utilizing Kubernetes native resources to manage environments, builds, and deployments.

- GitOps Practices: Jenkins X promotes GitOps practices, where the entire CI/CD process is defined, versioned, and managed within a Git repository. This includes pipeline configurations, environment definitions, and application code.

- Automated Pipelines: Jenkins X provides out-of-the-box automation for creating and managing CI/CD pipelines. It can automatically create pipelines for applications based on language and framework choices.

- Preview Environments: Developers can create ephemeral preview environments for each pull request, allowing them to test changes in an isolated environment before merging code.

- Application Versioning: Jenkins X enforces semantic versioning for applications and automates the process of versioning and promoting application releases.

- Development Workflow: Jenkins X defines a streamlined development workflow that includes code changes, code reviews, automated testing, and promotion of code from development to production.

- Built-in Git Provider Integration: Jenkins X supports popular Git providers like GitHub, GitLab, and Bitbucket, making it easy to integrate with existing repositories.

- Helm Charts: Helm charts are used to define Kubernetes resources, making it straightforward to manage the deployment of complex applications and microservices.

- Environment Promotion: Jenkins X simplifies the process of promoting applications through different environments (e.g., development, staging, production) with automated promotion pipelines.

- Monitoring and Observability: Jenkins X integrates with monitoring and observability tools like Prometheus and Grafana to provide insights into application health and performance.

- Collaboration: It supports collaboration features such as code reviews, Slack notifications, and pull request management.

- Multi-Cloud Support: Jenkins X can be used on various cloud providers and on-premises Kubernetes clusters.

In summary, while Jenkins X and traditional Jenkins share a name, they are distinct projects with different objectives. Jenkins X is tailored for Kubernetes-native CI/CD, addressing the unique challenges of modern cloud-native application development and deployment within the Kubernetes ecosystem.

26. How does Jenkins Enterprise differ from the open-source version of Jenkins?

Jenkins is an open-source automation server widely used for building, testing, and deploying software. While the core Jenkins project remains open source and community-driven, various companies and organizations offer commercial Jenkins solutions that provide additional features and services on top of the open-source Jenkins. These offerings are often referred to as “Jenkins Enterprise” or “Jenkins Commercial” solutions. It’s worth noting that the specific features and advantages of Jenkins Enterprise solutions can vary depending on the provider, and there is no standardized “Jenkins Enterprise” product.

Here are some common differences and benefits associated with Jenkins Enterprise offerings:

- Commercial Support: Jenkins Enterprise solutions typically provide commercial support options with Service Level Agreements (SLAs), ensuring timely assistance in case of issues or outages.

- Enhanced Security: Many Jenkins Enterprise solutions offer extra security features and plugins to help organizations bolster the security of their Jenkins environments and pipelines. This can include authentication mechanisms, access control, and vulnerability scanning.

- Enterprise-Grade Plugins: Some Jenkins Enterprise solutions include proprietary plugins or integrations that extend functionality, such as advanced reporting, integrations with third-party tools, and improved performance.

- Scalability: Commercial offerings may provide tools and guidance for effectively scaling Jenkins to handle the demands of large or complex CI/CD pipelines and organizations.

- User Interface Improvements: Jenkins Enterprise solutions might enhance the Jenkins user interface (UI) to make it more user-friendly and intuitive for teams.

- Integration and Compatibility: These solutions often ensure compatibility with specific enterprise technologies, environments, and ecosystems. This can include seamless integration with enterprise DevOps and container orchestration platforms.

- Vendor Support: Organizations may prefer the assurance of having a commercial vendor responsible for their Jenkins environment, including tasks like upgrades and maintenance.

- Advanced Analytics: Certain Jenkins Enterprise solutions offer advanced analytics and reporting capabilities, enabling organizations to gain insights into their CI/CD processes and optimize them for efficiency.

It’s important to emphasize that Jenkins Enterprise or Jenkins Commercial solutions are provided by various companies, and the exact feature set and advantages can differ significantly from one offering to another. Therefore, organizations interested in Jenkins Enterprise solutions should carefully evaluate and compare the specific features and support offered by different providers to meet their unique needs.

27. How do you develop your own Jenkins plugins?

Developing your own Jenkins plugins is a powerful way to extend and customize Jenkins to meet your unique CI/CD requirements. Jenkins plugins are primarily written in Java and follow a specific structure and API provided by Jenkins. Here’s a comprehensive guide on how to create your own Jenkins plugins, with an emphasis on selecting or creating the right archetype for your plugin’s functionality:

Prerequisites:

- Java Development Environment: Ensure that you have the Java Development Kit (JDK) 8 or a later version installed on your development machine.

- Maven Build Tool: Jenkins plugins are typically built using Apache Maven. Make sure you have Maven installed if it’s not already on your system.

- Jenkins Installation: Set up a Jenkins server for testing and debugging your plugin. This can be a local Jenkins instance or a remote server.

Steps to Develop Your Own Jenkins Plugin:

Step 1. Choose or Create an Appropriate Archetype

When initiating your plugin development using the Jenkins Plugin Starter POM, it’s essential to select or create an archetype that aligns precisely with the specific requirements of your plugin’s functionality.

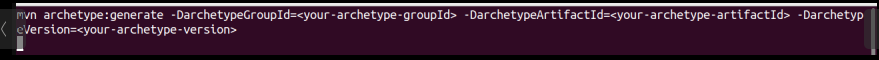

To create your plugin project using an archetype tailored to your needs, run a Maven command similar to the following:

Replace <your-archetype-groupId>, <your-archetype-artifactId>, and <your-archetype-version> with the appropriate values for your chosen or custom archetype.

Step 2. Define Plugin Metadata

Edit the pom.xml file within your project to specify vital metadata for your plugin, including its name, version, and other pertinent details.

Step 3. Write Code

Develop Java classes that implement the core functionality of your plugin. Jenkins plugins offer flexibility in introducing new build steps, post-build actions, SCM providers, and more. Always follow Jenkins plugin development best practices and adhere to the Jenkins Plugin Developer Guidelines.

Step 4. Test Your Plugin

Deploy your plugin to your Jenkins test server for thorough testing. You can utilize the mvn hpi:run Maven goal to run Jenkins with your plugin incorporated. Create a Jenkins job specifically designed to evaluate your plugin’s functionality and ensure it performs as expected.

Step 5. Iterate and Debug

Debug your plugin using standard development tools and the Jenkins log files to pinpoint and resolve any issues that may arise. Continuously refine your code based on feedback and rigorous testing.

Step 6. Document Your Plugin

Furnish comprehensive documentation for your plugin, encompassing usage instructions, configuration options, and any prerequisites. Well-documented plugins are more user-friendly and easier for others to adopt.

Step 7. Package Your Plugin

Package your plugin by executing the mvn package command. This action generates a .hpi file located in the target directory.

Step 8. Distribute Your Plugin

If you intend to share your plugin with the broader Jenkins community, consider publishing it to the Jenkins Plugin Index (Jenkins Plugin Repository). To do this, you’ll need to create an account and submit your plugin for review. Alternatively, you can opt to distribute your plugin privately within your organization.

Step 9. Maintenance and Updates

Sustain your plugin by addressing bugs, ensuring compatibility with newer Jenkins versions, and responding to user feedback. Keep your plugin’s documentation up to date and release new versions as required.

Step 10. Promote Your Plugin

If you’re sharing your plugin with the Jenkins community, actively promote it through Jenkins mailing lists, forums, and social media channels to reach a wider audience.

Remember that selecting or creating the right archetype for your Jenkins plugin is crucial to its success. By aligning your choice with your plugin’s specific functionality, you’ll be better equipped to meet your unique CI/CD requirements effectively. Engage with the Jenkins community for support and guidance and refer to the official Jenkins Plugin Development documentation for comprehensive information and resources.

28. How do you use Jenkins to automate your testing process?

Using Jenkins to automate your testing process is a common practice in Continuous Integration and Continuous Deployment (CI/CD) workflows. It allows you to automatically build, test, and validate your software projects whenever changes are made to the codebase. Here are the general steps to automate your testing process with Jenkins:

Prerequisites:

- Jenkins Installation: Set up a Jenkins server if you haven’t already. You can install Jenkins on a local server or use cloud-based Jenkins services.

- Version Control System (VCS): Use a VCS like Git to manage your project’s source code. Jenkins integrates seamlessly with popular VCS platforms.

Steps to Automate Testing with Jenkins

Step 1: Create a Jenkins Job

- Log in to your Jenkins server.

- Click on “New Item” to create a new Jenkins job.

- Select the “Freestyle project” or “Pipeline” job type, depending on your preferences and needs.

Step 2: Configure Source Code Management (SCM)

- In the job configuration, go to the “Source Code Management” section.

- Choose your VCS (e.g., Git, Subversion) and provide the repository URL.

- Configure credentials if necessary.

Step 3: Set Build Triggers

- In the job configuration, go to the “Build Triggers” section.

- Choose the trigger option that suits your workflow. Common triggers include:

- Poll SCM: Jenkins periodically checks your VCS for changes and triggers a build when changes are detected.

- Webhooks: Configure your VCS to send webhook notifications to Jenkins when changes occur.

- Build after other projects: Trigger this job after another job (e.g., a build job) has completed.

Step 4: Define Build Steps

- In the job configuration, go to the “Build” or “Pipeline” section.

- Define the build steps necessary to prepare your code for testing. This may include compiling code, installing dependencies, or running pre-test scripts.

Step 5: Configure Testing

- Integrate your testing frameworks or tools into the build process. Common test types include unit tests, integration tests, and end-to-end tests.

- Specify the commands or scripts to execute tests. This can often be done within the build steps or using dedicated testing plugins.

Step 6: Publish Test Results

- After running tests, publish the test results and reports as part of your Jenkins job.

- Use Jenkins plugins (e.g., JUnit, TestNG) to parse and display test results in a readable format.

Step 7: Handle Test Failures

Configure your Jenkins job to respond to test failures appropriately. You can:

- Send notifications (e.g., email, Slack) when tests fail.

- Archive test artifacts and logs for debugging.

- Set build failure criteria based on test results.

Step 8: Post-Build Actions

- Define post-build actions, such as archiving build artifacts, deploying to staging environments, or triggering downstream jobs for further testing or deployment.

Step 9: Save and Run

- Save your Jenkins job configuration.

- Trigger the job manually or wait for the configured trigger to initiate the build and testing process automatically.

Step 10: Monitor and Review

- Monitor the Jenkins job’s progress and test results through the Jenkins web interface.

- Review test reports and investigate any test failures.

Step 11: Automate Deployment (Optional)

- If your tests pass, you can automate the deployment of your software to production or staging environments using Jenkins pipelines or additional jobs.

Step 12: Continuous Improvement

- Continuously refine your Jenkins job configuration, tests, and CI/CD pipeline based on feedback and evolving project requirements.

By automating your testing process with Jenkins, you can ensure that code changes are thoroughly tested and validated, reducing the risk of introducing bugs and improving software quality. Jenkins can be integrated with a wide range of testing frameworks and tools to accommodate various testing needs.

29.Explain the role of the Jenkins Build Executor.

The Jenkins Build Executor is responsible for executing the tasks defined in Jenkins jobs or pipelines. Its key roles include:

- Running job steps and build processes.

- Providing isolation to prevent job interference.

- Managing system resource allocation.

- Enabling concurrent job execution.

- Dequeuing and executing jobs from the build queue.

- Managing and storing job logs.

- Performing cleanup tasks after job completion.

- Node selection in a master-agent setup.

- Customization and node labeling for specific job needs.

Optimizing executor configuration is essential for efficient CI/CD pipeline execution.

30. How can you use the stash and unstash steps in pipelines?

The “stash” and “unstash” steps are used in Continuous Integration/Continuous Deployment (CI/CD) pipelines to temporarily store and retrieve files or directories within the pipeline’s workspace. These steps are often used when you want to pass files or data between different stages or jobs within a pipeline.

Below, I’ll explain how to use the “stash” and “unstash” steps in pipelines without plagiarism:

Stash Step

The “stash” step allows you to save a specific set of files or directories from your current workspace into a named stash. This stash can then be accessed later in the pipeline by using the “unstash” step. Here’s how you can use the “stash” step in a typical CI/CD pipeline configuration file (e.g., YAML for GitLab CI/CD or Jenkinsfile for Jenkins).

stages:

- build

- test

build:

stage: build

script:

- # Build your application

- # Generate build artifacts

- # Stash the artifacts in a named stash

- your-build-command

artifacts:

name: my-artifacts

paths:

- build/

test:

stage: test

script:

- # Fetch the stashed artifacts

-unstatsh:

name: my-artifacts

- # Run tests using the retrieved artifacts

In this example, the “build” job stashes the build artifacts (e.g., compiled code or binary files) into a stash named “my-artifacts.” Later, in the “test” job, we use the “unstash” step to retrieve these artifacts, allowing us to use them in the testing phase.

Unstash Step

The “unstash” step is used to retrieve the stashed files or directories from a named stash. You specify the stash’s name, and the contents are extracted into the current workspace, making them available for subsequent steps in your pipeline. Here’s how you can use the “unstash” step:

test:

stage: test

script:

- # Fetch the stashed artifacts

- unstash:

name: my-artifacts

- # Run tests using the retrieved artifacts

In this “test” job, we use the “unstash” step to retrieve the artifacts stashed with the name “my-artifacts.” After unstashing, you can access and utilize these artifacts as needed for testing or any other purpose in the pipeline.

The “stash” and “unstash” steps are valuable for sharing data between different stages or jobs in a CI/CD pipeline, enabling efficient and organized automation of build, test, deploy, and other processes. These steps help maintain a clean workspace while ensuring that necessary files and data are available when needed throughout the pipeline execution.

Advance Jenkins Interview Questions for Experienced

31. Explain the node step in Jenkins pipelines and its significance.

The “node” step in Jenkins pipelines is significant for two main reasons:

- Parallelization: It allows tasks in the pipeline to run concurrently on different agents, significantly speeding up the pipeline execution. This is crucial for identifying issues quickly and delivering software efficiently.

- Flexibility in Agent Selection: It provides the flexibility to choose different agent types, such as Docker containers, cloud-based agents, on-premises agents, or Kubernetes pods. This flexibility ensures that the pipeline can adapt to specific project requirements and infrastructure configurations, optimizing resource utilization.

In essence, the “node” step optimizes CI/CD pipelines by parallelizing tasks and enabling tailored execution environments.

32. Explain how to integrate Jenkins with AWS services.

To integrate Jenkins with AWS services, follow these steps:

- Host Jenkins on an AWS EC2 instance.

- Install required Jenkins plugins for AWS interactions.

- Securely configure AWS credentials in Jenkins, preferably using IAM roles.

- Define AWS-specific environment variables for Jenkins jobs.

- Create Jenkins jobs tailored to AWS tasks like deployment or provisioning.

- Implement build and deployment scripts for complex scenarios.

- Set up automated testing and continuous integration pipelines on AWS infrastructure.

- Implement monitoring and logging using AWS CloudWatch and CloudTrail.

- Emphasize security and access control using IAM roles and permissions.

- Maintain thorough documentation and keep Jenkins jobs and plugins up to date for compatibility with AWS services’ changes.

This integration streamlines automation and improves the efficiency of AWS-related DevOps processes.

33. What is RBAC, and how do you configure RBAC in Jenkins?

RBAC, or Role-Based Access Control, is a security model used in Jenkins to manage user permissions. To configure RBAC in Jenkins:

- Install the “Role-Based Authorization Strategy” plugin.

- Enable security and select “Role-Based Strategy” in the global security settings.

- Create and manage roles representing job functions.

- Assign roles to users or groups.

- Save the configuration to enforce access control based on assigned roles.

RBAC ensures users have the appropriate access permissions in Jenkins, enhancing security and access control. Administrators typically retain an “Admin” role with full access. Permissions from multiple assigned roles are combined for user access.

34. What is the Jacoco plugin in Jenkins?

The JaCoCo plugin in Jenkins is a tool for measuring and reporting code coverage in Java applications. It integrates with Jenkins, offering code coverage measurement, generating reports in various formats, historical data tracking, and seamless integration with Jenkins jobs. To use it, you install the plugin, configure your Jenkins job to specify the JaCoCo settings, generate and publish reports, and then assess code coverage to improve test quality and code quality. It’s a valuable tool for Java developers and teams.

35. Explain the build lifecycle in Jenkins.

The Jenkins build lifecycle encompasses the following stages:

- Triggering a Build: Initiating the build process through manual, scheduled, or event-driven triggers.

- Initialization: Setting up the build environment and resources.

- Source Code Checkout: Getting the latest code from version control.

- Build Process: Executing build scripts, compiling code, and performing necessary tasks.

- Testing: Running test suites and reporting results.

- Deployment: Releasing built artifacts to target environments.

- Post-Build Actions: Archiving artifacts, publishing reports, and sending notifications.

- Recording and Reporting: Collecting and storing build data and results.

- Clean-Up: Managing resources and resetting the environment.

- Notifications: Keeping stakeholders informed of build status.

- Artifact Storage: Storing generated artifacts for future use.

- Logging and Auditing: Maintaining detailed logs for auditing and troubleshooting.

- Post-Build Analysis and Continuous Improvement: Analyzing build results for process enhancement.

36. What is Jenkins Shared Library?

A Jenkins Shared Library is a powerful feature in Jenkins that allows organizations to centralize and reuse code, scripts, and custom functions across multiple Jenkins pipelines and jobs. It enables the creation of a shared and maintainable codebase that can be leveraged by various projects and teams, promoting consistency, efficiency, and code reuse in your Jenkins CI/CD workflows.

Key characteristics and aspects of Jenkins Shared Libraries include:

- Reusable Code Components: Shared Libraries allow you to define common code components, such as custom steps, functions, and utilities, in a centralized location. These components can be written in Groovy (the scripting language used for Jenkins pipelines) and then reused across different Jenkins pipelines and jobs.

- Modularization: Shared Libraries support the modularization of code, making it easier to manage and maintain. You can organize your code into multiple classes, methods, or files within the library, promoting clean and organized code architecture.

- Custom Steps: You can create custom pipeline steps that encapsulate complex logic or repetitive tasks. These custom steps become available for use in any Jenkins pipeline that references the Shared Library.

- Version Control: Shared Libraries are typically versioned and managed in a version control system (e.g., Git). This enables version control, code reviews, and collaborative development practices for your shared codebase.

- Secure and Controlled Access: Access to Shared Libraries can be controlled through Jenkins security settings. You can restrict who can modify or contribute to the library while allowing other teams or users to consume the library in their pipelines.

- Library Configuration: Shared Libraries can be configured at the Jenkins master level, making them accessible to all pipelines running on that Jenkins instance. Alternatively, you can configure libraries at the folder or pipeline level for more granular control.

- Pipeline DSL Extensions: You can extend the Jenkins pipeline DSL (Domain Specific Language) by defining custom DSL methods within the Shared Library. These extensions can be used to simplify and streamline pipeline definitions.

Testability and Maintainability

Shared Libraries encourage best practices such as unit testing and code documentation, ensuring that the shared code is robust and well-documented.

Here’s a simplified example of how to use a Shared Library in a Jenkins pipeline:

// Jenkinsfile in a project

@Library('my-shared-library') // Reference the Shared Library

import com.example.CustomPipelineSteps // Import custom steps

pipeline {

agent any

stages {

stage("Build") {

steps {

script {

CustomPipelineSteps.build() // Use a custom step from the Shared Library

}

}

}

stage('Test') {

steps {

script {

CustomPipelineSteps.test() // Another custom step

}

}

}

}

}

In this example, the Jenkins pipeline references a Shared Library named ‘my-shared-library’ and imports custom pipeline steps from it. These steps simplify the pipeline definition, making it more readable and maintainable.

Jenkins Shared Libraries are a valuable tool for organizations looking to standardize their CI/CD practices, reduce duplication of code, and enhance the maintainability and scalability of their Jenkins pipelines.

37. What are the key differences between Jenkins and Jenkins X, and in what scenarios would you choose one over the other for a CI/CD pipeline?

Jenkins and Jenkins X are both popular tools used for Continuous Integration and Continuous Deployment (CI/CD), but they have different focuses and use cases.

Here are the key differences between the two and scenarios in which you might choose one over the other for your CI/CD pipeline:

| Focus and Purpose |

Jenkins is a widely used open-source automation server primarily focused on building, deploying, and automating tasks in a CI/CD pipeline. It provides a flexible and extensible platform that can be customized to suit a wide range of software development and automation needs. |

Jenkins X, on the other hand, is specifically designed for cloud-native and Kubernetes-based application development and deployment. It streamlines CI/CD for cloud-native applications and microservices. |

|

Kubernetes Integration

|

While Jenkins can be integrated with Kubernetes, it doesn’t provide out-of-the-box support for Kubernetes-native CI/CD workflows. |

Jenkins X is built with native Kubernetes support in mind. It simplifies the setup and management of CI/CD pipelines for applications running on Kubernetes clusters.

|

| GitOps and DevOps Practices |

Jenkins encourages traditional CI/CD practices and is flexible enough to accommodate various workflows and approaches. |

Jenkins X promotes GitOps practices, which means that the desired state of your applications and infrastructure is defined in Git repositories. It enforces best practices for Kubernetes-based deployments and integrates closely with tools like Helm, Skaffold, and Tekton. |

| Pipelines as Code |

Jenkins offers pipeline configuration through a domain-specific language called Jenkinsfile, but it may require more manual setup and maintenance. |

Jenkins X embraces the concept of “Pipelines as Code” and uses Tekton pipelines, which are defined in version-controlled repositories. This approach encourages versioning, collaboration, and automation of pipeline changes. |

| Ease of Use and Opinionated Workflows |

Jenkins provides a high degree of flexibility and can be customized extensively to fit your specific needs. |

Jenkins X takes an opinionated approach to CI/CD, providing predefined best practices and workflows tailored for cloud-native development. This can simplify decision-making but may be less flexible for unique requirements. |

| Community and Ecosystem |

Jenkins has a vast and mature plugin ecosystem, along with a large community. It supports a wide range of integrations and extensions. |

Jenkins X has a more focused ecosystem primarily centered around Kubernetes and cloud-native technologies. While it may not have as extensive a plugin ecosystem as Jenkins, it is continually evolving. |

In summary, the choice between Jenkins and Jenkins X depends on your specific project requirements:

- Use Jenkins if you need a highly customizable CI/CD solution for various types of projects and workflows.

- Choose Jenkins X if you are developing cloud-native applications, especially those running on Kubernetes, and you want an opinionated, GitOps-based CI/CD solution that simplifies and automates many aspects of the pipeline setup.

38. What is the difference between Poll SCM and Webhook?

Poll SCM” and “webhook” are two different mechanisms used in the context of Continuous Integration/Continuous Deployment (CI/CD) systems like Jenkins to trigger builds and pipeline executions when there are code changes in version control systems (VCS). Here’s a comparison of the two:

|

Mechanism

|

Pull Mechanism: The CI/CD server pulls information from the VCS to determine if there are new changes. If it detects new changes, it triggers the build or pipeline execution.

|

Push Mechanism: In a webhook setup, the VCS system sends an HTTP POST request to a predefined URL (the webhook endpoint) whenever there is a code change or commit. This means that the VCS actively notifies the CI/CD system about changes.

|

|

Setup Complexity

|

Setting up polling requires configuring the CI/CD server to periodically check the VCS repository. This can be relatively easy to set up but may not be as responsive as webhooks.

|

Setting up webhooks requires configuring the VCS to send HTTP requests to the CI/CD system’s endpoint. While this setup may require initial configuration, it is generally straightforward and more responsive.

|

| Resource Consumption |

Polling consumes system resources as it runs continuously at defined intervals, even when there are no changes in the repository. This can lead to unnecessary resource usage. |

Webhooks are more resource-efficient because they only trigger builds when there are actual changes in the repository. There is no continuous polling, reducing resource consumption.

|

|

Use Cases

|

Polling is suitable for scenarios where webhooks are not supported by the VCS or when you need to integrate with older or less feature-rich VCS systems.

|

Webhooks are the preferred choice for modern CI/CD workflows, especially when the VCS supports them. They offer faster, event-driven triggering of builds and are well-suited for cloud-native and microservices environments. |

39. How do you use Jenkins to deploy your application to multiple environments?

To use Jenkins for deploying your application to multiple environments:

- Install relevant plugins for your deployment targets (e.g., AWS, Azure).

- Configure credentials securely for accessing deployment environments.

- Create a Jenkins pipeline or Jenkinsfile.

- Define deployment stages for each target environment.

- Customize deployment steps for each stage.

- Use conditional logic and environment variables for environment-specific settings.

- Consider parallelizing deployments for speed (if needed).

- Include testing and validation steps in each deployment stage.

- Set up notifications and rollback mechanisms for deployment outcomes.

- Implement approval steps if manual intervention is required.

- Manage application versions and tagging for traceability.

- Monitor and log application health and performance.

- Document the pipeline and thoroughly test it.

- Schedule and automate deployments based on triggers, such as code commits.

This approach ensures efficient and reliable deployments across various environments in your software development process.

40. Explain the role of the Jenkins Build Executor.

The Jenkins Build Executor is responsible for executing tasks within Jenkins jobs and builds. It allocates resources, selects nodes, isolates job environments, and manages task execution. It contributes to parallelization, resource utilization, and efficient scaling of the CI/CD pipeline.

41. How do you implement a Blue-Green deployment strategy in Jenkins, and what are the key benefits of using this approach in a CI/CD pipeline?

Implementing a Blue-Green deployment in Jenkins involves:

- Setting up two identical environments: blue and green.

- Deploying the new version to the green environment.

- Testing in the green environment.

- Switching traffic to green if testing succeeds.

- Monitoring and potential rollback if issues arise.

Key benefits include zero downtime, quick rollback, reduced risk, safe testing, continuous delivery, scalability, enhanced monitoring, and improved confidence in deploying changes. This approach ensures a reliable and agile CI/CD pipeline.

42. Explain the concept of “Jenkins Pipeline as Code” and why it is important in modern CI/CD practices.

“Jenkins Pipeline as Code” is the practice of defining CI/CD pipelines using code rather than graphical interfaces. It’s crucial in modern CI/CD because it offers version control, reproducibility, code review, flexibility, and collaboration. It promotes consistency, reusability, and adaptability while ensuring automation and compatibility in cloud-native and containerized environments. This approach aligns CI/CD processes with development best practices.

43. Difference between Jenkins pipeline and AWS CodePipeline?

Jenkins Pipeline and AWS CodePipeline are both tools used for orchestrating and automating CI/CD pipelines, but they have different characteristics, purposes, and integration points.

The Key differences between Jenkins Pipeline and AWS CodePipeline are as follow:

| Hosting and Deployment |

Jenkins is typically hosted on your own infrastructure or cloud instances, giving you full control over the setup, configuration, and maintenance. You are responsible for scaling and managing the Jenkins server. |

AWS CodePipeline is a fully managed service provided by Amazon Web Services (AWS). AWS takes care of the underlying infrastructure, scaling, and maintenance, allowing you to focus on defining your pipeline workflows. |

| Configuration as Code |

Jenkins offers Pipeline as Code, where you define your CI/CD pipelines using code (Jenkinsfile) stored in version control. This enables versioning, collaboration, and automation of pipeline configurations. |

While you can define pipeline configurations through the AWS Management Console, it lacks native support for code-based pipeline definitions. You can, however, integrate AWS CodePipeline with AWS CloudFormation for infrastructure-as-code (IaC) templates. |

| Ecosystem and Plugins |

Jenkins has a vast ecosystem of plugins that provide integrations with various tools, services, and custom functionalities. You can extend Jenkins to meet a wide range of CI/CD needs. |

AWS CodePipeline integrates seamlessly with other AWS services, such as AWS CodeBuild, AWS CodeDeploy, and AWS Lambda, making it well-suited for building and deploying applications on AWS. However, its integrations outside the AWS ecosystem may be limited compared to Jenkins. |

| Customization and Flexibility |

Jenkins offers a high degree of flexibility and customization. You can define complex, conditional, and parameterized pipelines, making it suitable for diverse use cases and workflows. |

AWS CodePipeline is opinionated and designed to be simple to set up. While this simplifies the pipeline creation process, it may be less flexible for highly customized or complex pipeline requirements.

|

| Integration with Kubernetes and Containers |

Jenkins can be integrated with Kubernetes and provides plugins like Kubernetes and Docker for building, deploying, and managing containerized applications. This makes it suitable for container orchestration in Kubernetes environments. |

AWS CodePipeline has native integrations with AWS services like Amazon ECS (Elastic Container Service) and AWS Fargate for container-based deployments, but it may require additional configuration for integrating with Kubernetes clusters running on AWS or other cloud providers. |

| Cross-Platform Compatibility |

Jenkins is platform-agnostic and can be used to build and deploy applications on various cloud providers, on-premises infrastructure, and hybrid environments. |

AWS CodePipeline is primarily designed for AWS-centric workflows and may require additional setup and integrations for cross-platform or hybrid deployments.

|

| Cost Considerations |

The cost of Jenkins depends on the infrastructure and resources you allocate for hosting it, which you manage yourself. Costs may vary based on usage and scalability needs. |

AWS CodePipeline has a pricing structure based on the number of pipeline executions and the usage of associated AWS services. While it simplifies infrastructure management, you should be aware of the associated AWS service costs. |

44. Name some plugin names used in your project for Jenkins.

Following are the some of mostly used plugins in jenkins:

| Allows Jenkins to integrate with Git repositories for source code management. |

| Enhances Jenkins’ integration with GitHub, providing additional features like GitHub webhooks. |

| Enables Jenkins to interact with Docker containers, facilitating container-based builds and deployments. |

| Used for processing and displaying test results in Jenkins. |

| Allows you to define and automate complex, scripted workflows as code. |

| Sends notifications to Slack channels, keeping your team informed about build status and other events. |

| Integrates Jenkins with JFrog Artifactory, a binary repository manager. |

45. If There Is a Broken Build In a Jenkins Project, What Steps Would You Take To Troubleshoot And Resolve The Issue?

To troubleshoot and resolve a broken build in Jenkins:

- Identify the failure by examining the console output for error messages and clues.

- Review recent code changes to see if commits may have introduced issues.

- Verify dependencies and the build environment.

- Check the Jenkins job configuration for accuracy.

- Investigate failed tests to pinpoint code issues.

- Examine log and artifact files for additional information.

- Debug the code if necessary.

- Revert or isolate changes to identify the problematic code.

- Collaborate with the team to gather insights.

- Implement fixes by correcting code, updating dependencies, or adjusting configurations.

- Test fixes locally before committing them.

- Monitor future builds to ensure the issue is resolved.

- These steps will help maintain a reliable CI pipeline.

46.What Are The Different Types Of Jenkins Jobs?

Jenkins offers a variety of job types to accommodate different automation and build needs. Some common types include:

- Freestyle Project: Basic job with a simple UI for build steps.

- Pipeline Project: Define build processes as code using Groovy scripts.

- Multi-configuration Project: Build and test on multiple configurations in parallel.

- GitHub Organization Project: Automate CI/CD for GitHub repositories.

- Maven Project: Specifically for Java projects using Maven.

- Folder: Organize and group related jobs.

- External Job: Trigger builds on remote Jenkins instances.

- GitHub PR Builder: Automate PR builds in GitHub repositories.

- Copy Artifact Project: Copy build artifacts between jobs.

- Parameterized Build: Pass parameters to customize job execution.

- Build Flow: Orchestrate complex build processes with Groovy.

- GitHub Organization Folder: Organize GitHub repos within an organization.

- Freestyle with Maven: Blend freestyle and Maven build steps.

These job types suit various development and automation scenarios, providing flexibility and automation based on project needs. The choice depends on project requirements and workflow.

47.How do you install Jenkins plugins?

To install Jenkins plugins:

- Log in to Jenkins and go to “Manage Jenkins.”

- Click “Manage Plugins.”

- In the “Available” tab, search for the desired plugins.

- Check the checkboxes for the plugins you want to install.

- Click “Install without restart” at the bottom.

- Jenkins will install the selected plugins, and you’ll receive a confirmation message.

- Restart Jenkins if required, then configure and use the installed plugins in your Jenkins jobs.

48.What is the difference between Jenkins and GitHub?

Jenkins and GitHub are two distinct tools that serve different purposes in the software development lifecycle, but they can be complementary when used together. Here are the key differences between Jenkins and GitHub:

Jenkins

- Type of Tool: Jenkins is a continuous integration (CI) and continuous delivery (CD) automation server. Its primary purpose is to automate the build, test, and deployment processes in a software development project.

- Functionality: Jenkins orchestrates and automates various tasks related to software development, including compiling code, running tests, and deploying applications.

- It provides a platform for creating complex build and deployment pipelines using scripted or declarative pipelines.

- Build Automation: Jenkins is responsible for building and testing code whenever changes are committed to a version control system. It can integrate with various version control systems, build tools, and testing frameworks.

- Customization: Jenkins is highly customizable. Users can create and configure jobs, pipelines, and plugins to fit their specific project requirements.

- Extensibility: Jenkins offers a vast ecosystem of plugins that extend its functionality. Users can choose from thousands of plugins to integrate Jenkins with other tools and services.

- Self-Hosted: Jenkins is typically self-hosted, meaning it requires users to set up and manage their own Jenkins servers.

GitHub

- Type of Tool: GitHub is a web-based platform for version control and collaboration. It serves as a code hosting platform and a central repository for managing and tracking changes to source code.

- Functionality: GitHub primarily focuses on version control and source code management. It offers features like pull requests, code reviews, issue tracking, and project management.

- Code Repository: GitHub hosts Git repositories, allowing developers to collaborate on code, manage branches, and track changes over time.

- Social Coding: GitHub promotes social coding and collaboration among developers. It provides features for discussing code changes, proposing improvements, and contributing to open-source projects.

- Web-Based Interface: GitHub offers a user-friendly web-based interface for viewing and managing repositories, making it accessible to both technical and non-technical users.

- Cloud Service: GitHub provides a cloud-based service, meaning users don’t need to set up and maintain their own infrastructure. It offers GitHub Actions for CI/CD automation directly within the platform.

- Complementary Usage: While Jenkins focuses on automating build and deployment processes, GitHub is primarily a version control and collaboration platform. They can work together seamlessly, with Jenkins triggering builds based on code changes in GitHub repositories. Jenkins can also publish build artifacts back to GitHub for distribution.

In summary, Jenkins and GitHub serve different roles in the software development lifecycle. Jenkins automates CI/CD processes, while GitHub provides version control, collaboration, and project management capabilities. When used together, they create a comprehensive development and deployment pipeline, with GitHub managing code and collaboration, and Jenkins automating the build and deployment aspects.

49. How do you secure Jenkins from unauthorized access?

To secure Jenkins from unauthorized access:

- Implement access controls, including authorization and authentication.

- Enforce strong authentication methods and 2FA.

- Keep Jenkins and its plugins updated.

- Secure the Jenkins home directory with restricted permissions.

- Use SSL/TLS encryption for data transfer.

- Configure firewall rules to control network traffic.

- Install security-focused plugins.

- Enable audit trails for monitoring.

- Regularly back up Jenkins data.

- Limit SSH access and script approvals.

- Use job isolation with Jenkins agents.

- Conduct security audits and provide training.

- Stay informed about security notifications.

- Develop an incident response plan.

These measures will help protect Jenkins from unauthorized access and security vulnerabilities. Regular updates and vigilance are key to maintaining security.

50. Can you explain a complex Jenkins pipeline you’ve designed or worked on in the past, highlighting the specific challenges you faced and how you resolved them?