How to duplicate a row N times in a PySpark DataFrame?

Last Updated : 05 Apr, 2022

In this article, we are going to learn how to duplicate a row N times in a PySpark DataFrame.

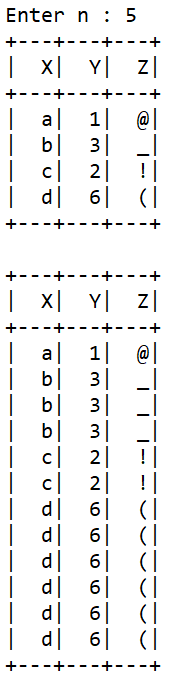

Method 1: Repeating rows based on column value

In this method, we first create a PySpark DataFrame using createDataFrame(). In our example, “Y” is the only numeric column, so its value is used as the number of times each row is repeated. We then use the withColumn() function; the column expression passed to it is explained below.

Syntax :

DataFrame.withColumn(colName,col)

Parameters :

- colName : str name of the new column

- col : Column(DataType) a column expression of the new column

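The colName here is “Y”, so the result overwrites the existing column “Y”. The col expression we will be using is :

explode(array_repeat(Y,int(Y)))

- array_repeat(Y, int(Y)) creates an array containing the value of Y repeated int(Y) times. int(Y) casts Y to an integer, which is the type array_repeat expects for its count.

- explode returns a new row for each element in the given array or map, copying the other columns of the row unchanged. Since every element of the array equals Y, a row with Y = 3 simply appears three times.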
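To make the two steps concrete, here is a plain-Python model of what they do to the rows. It is only an illustration and does not use Spark; the helper names array_repeat_model and explode_model are hypothetical stand-ins, and the rows are written as dictionaries for readability.

```python
# Plain-Python stand-ins for the two Spark SQL functions (illustration only)
def array_repeat_model(value, count):
    """Return a list holding `value` repeated `count` times."""
    return [value] * count


def explode_model(rows, column, arrays):
    """Emit one output row per array element, copying the other columns."""
    out = []
    for row, arr in zip(rows, arrays):
        for element in arr:
            new_row = dict(row)       # copy X, Y, Z from the input row
            new_row[column] = element  # overwrite the target column
            out.append(new_row)
    return out


rows = [{"X": "a", "Y": 1, "Z": "@"},
        {"X": "b", "Y": 3, "Z": "_"}]

# One array per row: Y repeated Y times
arrays = [array_repeat_model(r["Y"], int(r["Y"])) for r in rows]
result = explode_model(rows, "Y", arrays)

for r in result:
    print(r)
```

The row with Y = 1 is emitted once and the row with Y = 3 three times, which is the behaviour the Spark expression relies on.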

Example:

Python

    from pyspark.sql import SparkSession
    from pyspark.sql.functions import expr

    # Create (or reuse) a Spark session
    Spark_Session = SparkSession.builder.appName('Spark Session').getOrCreate()

    # Sample data: column Y holds the repeat count for each row
    rows = [['a', 1, '@'],
            ['b', 3, '_'],
            ['c', 2, '!'],
            ['d', 6, '(']]
    columns = ['X', 'Y', 'Z']

    df = Spark_Session.createDataFrame(rows, columns)
    df.show()

    # Build an array holding Y copies of Y, then explode it
    # into one row per array element
    new_df = df.withColumn(
        "Y", expr("explode(array_repeat(Y,int(Y)))"))
    new_df.show()

Output :

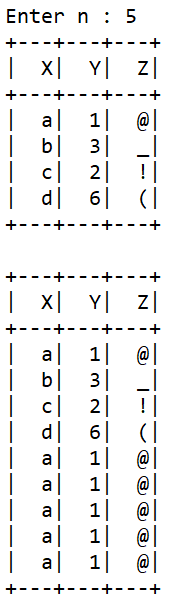

Method 2: Using collect() and appending a random row to the list

In this method, we first accept N from the user and create a PySpark DataFrame using createDataFrame(). We then call collect(), which returns the rows of the DataFrame as a list of Row objects, and store that list in a variable. The syntax is :

DataFrame.collect()

We then pick one row from the list at random and use the Python list append() method in a loop of N iterations to append that Row object to the list. Finally, the list of Row objects is converted back to a PySpark DataFrame. Note that collect() brings every row to the driver, so this approach is suitable only for small DataFrames.

Example:

Python

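    import random

    from pyspark.sql import SparkSession

    # Create (or reuse) a Spark session
    Spark_Session = SparkSession.builder.appName('Spark Session').getOrCreate()

    # Number of extra copies to append
    n = int(input('Enter n : '))

    rows = [['a', 1, '@'],
            ['b', 3, '_'],
            ['c', 2, '!'],
            ['d', 6, '(']]
    columns = ['X', 'Y', 'Z']

    df = Spark_Session.createDataFrame(rows, columns)
    df.show()

    # Bring all rows to the driver as a list of Row objects
    row_list = df.collect()

    # Pick one row at random and append it n times
    repeated = random.choice(row_list)
    for _ in range(n):
        row_list.append(repeated)

    # Row objects carry their field names, so no column list is needed here
    df = Spark_Session.createDataFrame(row_list)
    df.show()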

Output :

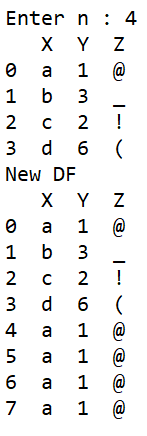

Method 3: Convert the PySpark DataFrame to a Pandas DataFrame

In this method, we first accept N from the user and create a PySpark DataFrame using createDataFrame(). We then convert the PySpark DataFrame to a Pandas DataFrame using toPandas(), which collects all the data to the driver and is therefore suitable only for small DataFrames. Next, we get the first row of the Pandas DataFrame by slicing with the syntax DataFrame[:1] and use the append() function in a loop to add that row to the end of the Pandas DataFrame N times. append() was removed in pandas 2.0, where pd.concat() does the same job. The syntax of append() is :

Syntax : DataFrame.append(other, ignore_index=False, verify_integrity=False, sort=False)

Parameters :

- other : DataFrame, Series or dict-like object, or a list of these. The data to be appended

- ignore_index : bool, default : False. If True, the result is relabeled 0, 1, ..., n - 1 instead of keeping the original index labels

- verify_integrity : bool, default : False. If True, raise a ValueError when the resulting index contains duplicates

- sort : bool, default : False. Sort the columns if the columns of the two DataFrames are not aligned
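On pandas 2.0 and later, where DataFrame.append() is no longer available, the same appending step can be written with pd.concat(). The sketch below is one way to do it, not the only one: it uses a small hand-built Pandas DataFrame as a stand-in for the result of toPandas(), assumes n = 2 for illustration, and builds all the copies in a single concat call instead of a loop.

```python
import pandas as pd

n = 2  # assumed number of extra copies (illustrative)

# Stand-in for the DataFrame returned by toPandas()
df_pandas = pd.DataFrame(
    [['a', 1, '@'], ['b', 3, '_'], ['c', 2, '!'], ['d', 6, '(']],
    columns=['X', 'Y', 'Z'],
)

first_row = df_pandas[:1]  # one-row DataFrame holding the first row

# Concatenate the original frame with n copies of the first row in one call;
# ignore_index=True relabels the rows 0, 1, 2, ...
new_df = pd.concat([df_pandas] + [first_row] * n, ignore_index=True)
print(new_df)
```

Concatenating once avoids copying the whole DataFrame on every iteration, which is what repeated appends in a loop would do.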

Example:

Python

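    import pandas as pd
    from pyspark.sql import SparkSession

    # Create (or reuse) a Spark session
    Spark_Session = SparkSession.builder.appName('Spark Session').getOrCreate()

    # Number of extra copies to append
    n = int(input('Enter n : '))

    rows = [['a', 1, '@'],
            ['b', 3, '_'],
            ['c', 2, '!'],
            ['d', 6, '(']]
    columns = ['X', 'Y', 'Z']

    df = Spark_Session.createDataFrame(rows, columns)

    # Convert the PySpark DataFrame to a Pandas DataFrame
    df_pandas = df.toPandas()
    print('First DF')
    print(df_pandas)

    # Slice out the first row (a one-row DataFrame)
    first_row = df_pandas[:1]

    # Add the first row to the end n times.
    # DataFrame.append() was removed in pandas 2.0, so pd.concat() is used.
    # On older pandas versions this line was:
    # df_pandas = df_pandas.append(first_row, ignore_index=True)
    for _ in range(n):
        df_pandas = pd.concat([df_pandas, first_row], ignore_index=True)

    print('New DF')
    print(df_pandas)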

Output :
