Pipelining and Addressing modes

Question 1

Question 2

ADD, MUL, ADD, MUL, ADD, MUL, ADD, MUL

Assume that every MUL instruction is data-dependent on the ADD instruction just before it, and every ADD instruction (except the first ADD) is data-dependent on the MUL instruction just before it. The speedup is defined as follows.

Speedup = (Execution time without operand forwarding) / (Execution time with operand forwarding)

The speedup achieved in executing the given instruction sequence on the pipelined processor (rounded to 2 decimal places) is _____________.

Question 3

Consider an instruction pipeline with five stages without any branch prediction: Fetch Instruction (FI), Decode Instruction (DI), Fetch Operand (FO), Execute Instruction (EI) and Write Operand (WO). The stage delays for FI, DI, FO, EI and WO are 5 ns, 7 ns, 10 ns, 8 ns and 6 ns, respectively. There are intermediate storage buffers after each stage and the delay of each buffer is 1 ns. A program consisting of 12 instructions I1, I2, I3, …, I12 is executed in this pipelined processor. Instruction I4 is the only branch instruction and its branch target is I9. If the branch is taken during the execution of this program, the time (in ns) needed to complete the program is
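The arithmetic behind this question can be sketched as follows. This is a minimal sketch under one stated assumption that the question does not spell out: the branch target becomes known only once I4 completes its EI stage, so three fetch slots after I4 are wasted. The variable names are illustrative only.

```python
# Stage delays in ns, taken from the question: FI, DI, FO, EI, WO.
stage_delays_ns = [5, 7, 10, 8, 6]
buffer_delay_ns = 1

# The clock period is set by the slowest stage plus its buffer delay.
cycle_ns = max(stage_delays_ns) + buffer_delay_ns

num_stages = len(stage_delays_ns)

# With the branch at I4 taken to I9, instructions I5..I8 never complete.
executed = [1, 2, 3, 4, 9, 10, 11, 12]

# ASSUMPTION: the branch is resolved at the end of EI (stage 4), so the
# fetch of I9 is delayed by (4 - 1) = 3 cycles relative to an ideal pipeline.
resolve_stage = 4
stall_cycles = resolve_stage - 1

# Ideal pipeline: k + n - 1 cycles for n instructions on k stages,
# plus the stall cycles caused by the taken branch.
total_cycles = (num_stages + len(executed) - 1) + stall_cycles
total_ns = total_cycles * cycle_ns

print(cycle_ns, total_cycles, total_ns)
```

If the branch were assumed to resolve at a different stage, only `resolve_stage` would change; the rest of the calculation stays the same.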

Question 5

Consider a hypothetical processor with an instruction of type LW R1, 20(R2), which during execution reads a 32-bit word from memory and stores it in a 32-bit register R1. The effective address of the memory location is obtained by the addition of a constant 20 and the contents of register R2. Which of the following best reflects the addressing mode implemented by this instruction for operand in memory?
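The effective-address computation described above can be sketched in a few lines. This is an illustration only: the `load_word` helper, the dictionary-based register file and memory, and the sample values are all hypothetical and not part of the question.

```python
def load_word(registers, memory, dest, offset, base):
    """Model LW dest, offset(base) on a toy machine (hypothetical helper)."""
    # Effective address = constant offset + contents of the base register.
    effective_address = offset + registers[base]
    # Read the word and keep it within 32 bits, as the question describes.
    registers[dest] = memory[effective_address] & 0xFFFFFFFF
    return effective_address


# Sample state, chosen arbitrarily for illustration.
registers = {"R1": 0, "R2": 1000}
memory = {1020: 0xDEADBEEF}

# LW R1, 20(R2)
ea = load_word(registers, memory, "R1", 20, "R2")
print(ea, hex(registers["R1"]))
```

The point of the sketch is that the address comes from two sources: a constant encoded in the instruction and a value held in a register.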

Question 6

Question 7

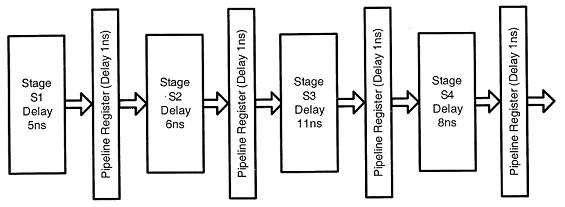

What is the approximate speed up of the pipeline in steady state under ideal conditions when compared to the corresponding non-pipeline implementation?

Question 8

| Instruction | Meaning of instruction |
|---|---|
| I0: MUL R2, R0, R1 | R2 ← R0 * R1 |
| I1: DIV R5, R3, R4 | R5 ← R3 / R4 |
| I2: ADD R2, R5, R2 | R2 ← R5 + R2 |
| I3: SUB R5, R2, R6 | R5 ← R2 - R6 |

Question 9

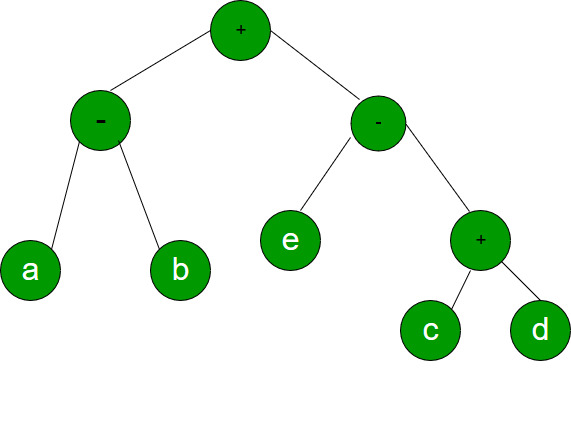

The program below uses six temporary variables a, b, c, d, e, f.

a = 1

b = 10

c = 20

d = a+b

e = c+d

f = c+e

b = c+e

e = b+f

d = 5+e

return d+f

Assuming that all operations take their operands from registers, what is the minimum number of registers needed to execute this program without spilling?
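One way to reason about this question is a backward liveness scan that counts how many values must be held at the same time. The sketch below makes several assumptions: the program is executed exactly as written (no reordering or constant folding), integer literals such as `5` do not occupy a register, and a result may reuse the register of an operand that is no longer needed. For straight-line code like this, the peak number of simultaneously live values is a lower bound on the register count, and it is also achievable because each live range is a single interval. The `max_live` function and the tuple encoding of the program are illustrative, not from the source.

```python
# Each statement is (destination, [variables read]); literals are omitted.
program = [
    ("a", []),            # a = 1
    ("b", []),            # b = 10
    ("c", []),            # c = 20
    ("d", ["a", "b"]),    # d = a + b
    ("e", ["c", "d"]),    # e = c + d
    ("f", ["c", "e"]),    # f = c + e
    ("b", ["c", "e"]),    # b = c + e
    ("e", ["b", "f"]),    # e = b + f
    ("d", ["e"]),         # d = 5 + e
    (None, ["d", "f"]),   # return d + f
]


def max_live(statements):
    """Return the peak number of simultaneously live variables."""
    live = set()
    peak = 0
    # Walk backwards: a variable is live from its last use up to its definition.
    for dest, uses in reversed(statements):
        if dest is not None:
            # Values that must be held right after this statement executes.
            peak = max(peak, len(live | {dest}))
            live.discard(dest)
        live.update(uses)
        # Values that must be held right before this statement executes.
        peak = max(peak, len(live))
    return peak


print(max_live(program))
```

The peak occurs at two points: just before `d = a+b`, where a, b and c are live, and just before `b = c+e`, where c, e and f are live.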

Question 10

| | S1 | S2 | S3 | S4 |
|---|---|---|---|---|
| I1 | 2 | 1 | 1 | 1 |
| I2 | 1 | 3 | 2 | 2 |
| I3 | 2 | 1 | 1 | 3 |
| I4 | 1 | 2 | 2 | 2 |
