Underflow and Overflow with Numerical Computation

Last Updated: 17 Apr, 2023

Most machine learning algorithms require a large amount of numerical computation. This usually refers to algorithms that solve mathematical problems by iteratively updating estimates of the solution, rather than analytically deriving a formula that gives a symbolic expression for the correct solution. Optimization (finding the value of an argument that minimizes or maximizes a function) and solving systems of linear equations are two common operations. Even evaluating a mathematical function on a digital computer can be difficult when the function involves real numbers, which cannot be represented exactly using a finite amount of memory.

Continuous math is difficult on a digital computer because we must represent infinitely many real numbers with a finite number of bit patterns. This means that for almost all real numbers, we incur some approximation error when we represent the number in the computer. In many cases, this is just rounding error. Rounding error is a concern, especially when it accumulates across many operations, and it can cause algorithms that work in theory to fail in practice if they are not designed to limit its accumulation.
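As a small illustration of accumulated rounding error, the sketch below adds 0.1 ten times with a plain loop and compares the result with `math.fsum` from the Python standard library, which compensates for the lost low-order bits. The variable names are illustrative only, and the exact digits depend on IEEE 754 double precision, which CPython uses on common platforms.

```python
import math

# 0.1 has no exact binary representation, so every addition rounds a little.
values = [0.1] * 10

# Plain left-to-right accumulation: one rounding step per addition.
naive_total = 0.0
for v in values:
    naive_total += v

# math.fsum tracks the rounding error of each partial sum internally.
careful_total = math.fsum(values)

print("loop total equals 1.0:", naive_total == 1.0)
print("fsum total equals 1.0:", careful_total == 1.0)
print("absolute error of loop total:", abs(naive_total - 1.0))
```

The error here is tiny, but the same effect grows with the number of operations, which is why summation order and compensated summation matter in long-running numerical code.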

Underflow

Underflow is a form of rounding error that can be particularly damaging. Underflow occurs when numbers near zero are rounded to zero. Many functions behave qualitatively differently when their argument is zero rather than a small positive number. For example, we usually want to avoid division by zero (some software environments raise an exception when this occurs, while others return a placeholder not-a-number (NaN) value) or taking the logarithm of zero (this is usually treated as $-\infty$, which then becomes NaN if it is used in further arithmetic operations).
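The behaviour described above can be observed with a few lines of standard-library Python. This is only a sketch of one environment: Python's `math` module raises exceptions for division by zero and for `log(0)`, whereas other environments return infinity or NaN instead. The variable names are illustrative.

```python
import math

# exp(-1000) is far smaller than the smallest positive double,
# so the result is rounded to exactly zero (underflow).
tiny = math.exp(-1000.0)
underflowed = (tiny == 0.0)

# Python raises an exception when a float is divided by zero.
try:
    1.0 / tiny
    division_outcome = "returned a value"
except ZeroDivisionError:
    division_outcome = "ZeroDivisionError"

# math.log rejects zero with ValueError; some other environments return -inf.
try:
    math.log(tiny)
    log_outcome = "returned a value"
except ValueError:
    log_outcome = "ValueError"

# Once an infinity is present, further arithmetic can yield NaN.
neg_inf = float("-inf")
nan_result = neg_inf - neg_inf

print(underflowed, division_outcome, log_outcome, math.isnan(nan_result))
```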

Overflow

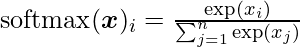

Overflow is another highly damaging form of numerical error. Overflow occurs when numbers of large magnitude are approximated as $\infty$ or $-\infty$. Further arithmetic usually turns these infinite values into NaN values. The softmax function is one example of a function that must be stabilized against underflow and overflow. It is often used to predict the probabilities associated with a multinomial distribution. The softmax function is defined as:

$$\text{softmax}(x)_i = \frac{\exp(x_i)}{\sum_{j=1}^{n} \exp(x_j)}$$

Consider what happens if all  are equal to a constant

are equal to a constant  . Analytically, we can see that each of the outputs should be

. Analytically, we can see that each of the outputs should be  . When

. When  has a large magnitude, this may not happen numerically.

has a large magnitude, this may not happen numerically.  will underflow if c is highly negative. As a result, the softmax’s denominator will become 0 and the final result will be indeterminate. When

will underflow if c is highly negative. As a result, the softmax’s denominator will become 0 and the final result will be indeterminate. When  is really large and positive,

is really large and positive,  will overflow, leaving the expression undefined once again. Instead of performing

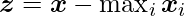

will overflow, leaving the expression undefined once again. Instead of performing  , where

, where  , each of these problems can be handled by evaluating

, each of these problems can be handled by evaluating  . Adding or deleting a scalar from the input vector does not modify the value of the softmax function analytically, as shown using simple algebra. When you subtract

. Adding or deleting a scalar from the input vector does not modify the value of the softmax function analytically, as shown using simple algebra. When you subtract  from exp, the greatest argument is 0, ruling off the chance of overflow. Similarly, at least one phrase in the denominator has a value of 1, ruling out the possibility of denominator underflow, which would result in a division by zero.

from exp, the greatest argument is 0, ruling off the chance of overflow. Similarly, at least one phrase in the denominator has a value of 1, ruling out the possibility of denominator underflow, which would result in a division by zero.
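The following is a minimal sketch of the max-subtraction trick, written with only the Python standard library. The function names `softmax_naive`, `softmax_stable`, and `outcome` are hypothetical helpers introduced for this illustration. Note that `math.exp` raises `OverflowError` on overflow rather than returning infinity, and a zero denominator raises `ZeroDivisionError`; array libraries typically return `inf` or NaN in the same situations.

```python
import math

def softmax_naive(x):
    """Direct translation of the formula, with no stabilization."""
    exps = [math.exp(v) for v in x]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_stable(x):
    """Evaluate softmax(z) with z = x - max(x)."""
    m = max(x)
    # Every shifted argument is <= 0, so exp cannot overflow.
    exps = [math.exp(v - m) for v in x]
    # The largest element contributes exp(0) = 1, so total >= 1.
    total = sum(exps)
    return [e / total for e in exps]

def outcome(fn, x):
    """Return fn(x), or the name of the exception it raised."""
    try:
        return fn(x)
    except (OverflowError, ZeroDivisionError) as err:
        return type(err).__name__

# All inputs equal to a constant c of large magnitude.
for c in (1000.0, -1000.0):
    x = [c] * 4
    print(c, outcome(softmax_naive, x), outcome(softmax_stable, x))
```

For both constants the stabilized version returns 0.25 for each of the four outputs, matching the analytical value of $\frac{1}{n}$, while the unstabilized version fails.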

There is still one minor issue. Underflow in the numerator can still cause the expression as a whole to evaluate to zero. This means that if we implement $\log \text{softmax}(x)$ by running the softmax subroutine first and then passing the result to the log function, we can erroneously obtain $-\infty$. Instead, a separate function that calculates log softmax in a numerically stable manner must be implemented. The same approach that we used to stabilize the softmax function can be used to stabilize the log softmax function.
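One common way to apply the same shift to log softmax is to use the identity $\log \text{softmax}(x)_i = (x_i - m) - \log \sum_j \exp(x_j - m)$ with $m = \max_j x_j$. The sketch below assumes this formulation; `log_softmax_stable` and `softmax_stable` are hypothetical names, not functions from any particular library. In standard-library Python, `math.log(0.0)` raises `ValueError` where other environments would return $-\infty$.

```python
import math

def softmax_stable(x):
    """Softmax with the maximum subtracted from every input."""
    m = max(x)
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]

def log_softmax_stable(x):
    """Log softmax computed directly, without forming the probabilities."""
    m = max(x)
    shifted = [v - m for v in x]
    # The sum is at least 1 (from exp(0)), so the log is finite.
    log_total = math.log(sum(math.exp(s) for s in shifted))
    return [s - log_total for s in shifted]

x = [0.0, 1000.0]

# Two-step approach: the first probability underflows to 0.0,
# so taking its log fails (or yields -inf in other environments).
probs = softmax_stable(x)
try:
    two_step = [math.log(p) for p in probs]
except ValueError:
    two_step = None

# Direct approach keeps the information that was lost to underflow.
direct = log_softmax_stable(x)

print(probs, two_step, direct)
```

Here the direct computation returns a finite log probability of -1000.0 for the first element, which the two-step approach cannot recover.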

Most of the numerical considerations involved in implementing deep learning algorithms are not detailed here. Developers of low-level libraries should keep numerical issues in mind when implementing deep learning algorithms. Most readers will be able to rely on low-level libraries that provide stable implementations. In some cases, it may be possible to implement a new algorithm and have the implementation stabilized automatically.
