StyleGAN – Style Generative Adversarial Networks

Last Updated: 04 Aug, 2021

Generative Adversarial Networks (GANs) were proposed by Ian Goodfellow in 2014. Since then, many improvements have been proposed, making GANs a state-of-the-art method for generating synthetic data, including synthetic images. However, most of these improvements were made to the discriminator, which only indirectly refines the generation ability of the generator. As a result, the generator itself received little attention, which left little control over the generation process. Several properties of an image can be varied during generation, such as background, foreground and style; for human faces, these include features such as pose, hair color and eye color.

Style GAN proposes several changes to the generator that allow it to generate photo-realistic, high-quality images and to control specific aspects of the generated image.

Architecture:

Style GAN builds on the baseline progressive GAN architecture and proposes changes to its generator. The discriminator architecture, however, remains quite similar to that of the baseline progressive GAN. Let’s look at these architectural changes one by one.

Style GAN architecture

- Baseline Progressive Growing GANs: Style GAN uses the baseline progressive GAN architecture, which means the size of the generated image increases gradually from a very low resolution (4×4) to a high resolution (1024×1024). This is done by adding a new block to both models to support the larger resolution after the model has been fitted at the smaller resolution, which makes training more stable.

- Bi-linear Sampling: The authors use bi-linear sampling instead of the nearest-neighbor up/down sampling used in the baseline progressive GAN, in both the generator and the discriminator. They implement it by low-pass filtering the activations with a separable 2nd-order binomial filter after each upsampling layer and before each downsampling layer.

- Mapping Network and Style Network: The goal of the mapping network is to transform the input latent vector into an intermediate vector whose elements control different visual features. Instead of feeding the latent vector directly to the input layer, the generator first passes it through this mapping. In the paper, the latent vector (z) of size 512 is mapped to another vector of size 512 (w). The mapping function is implemented as an 8-layer MLP (8 fully connected layers). The output of the mapping network (w) is then passed through a learned affine transformation (A) before it enters the synthesis network through the AdaIN (Adaptive Instance Normalization) modules. The synthesis network converts this encoded mapping into the generated image.
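The bi-linear sampling item in the list above can be illustrated with a small NumPy sketch. It assumes that the "separable 2nd-order binomial filter" is the kernel [1, 2, 1] / 4 applied along each axis, that upsampling is nearest-neighbor repetition followed by the filter, and that downsampling is the filter followed by 2×2 average pooling. The function names and the edge padding are illustrative choices, not taken from the paper.

```python
import numpy as np

def blur_binomial(x):
    """Low-pass filter a 2-D map with the separable kernel [1, 2, 1] / 4."""
    k = np.array([1.0, 2.0, 1.0]) / 4.0
    # Edge padding (an assumption) keeps the output the same size as the input.
    p = np.pad(x, 1, mode="edge")
    # Horizontal pass, then vertical pass: the filter is separable.
    h = k[0] * p[:, :-2] + k[1] * p[:, 1:-1] + k[2] * p[:, 2:]
    return k[0] * h[:-2, :] + k[1] * h[1:-1, :] + k[2] * h[2:, :]

def upsample_2x(x):
    """Nearest-neighbor 2x upsampling followed by the low-pass filter."""
    up = np.repeat(np.repeat(x, 2, axis=0), 2, axis=1)
    return blur_binomial(up)

def downsample_2x(x):
    """Low-pass filter followed by 2x2 average pooling."""
    b = blur_binomial(x)
    h, w = b.shape
    return b.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

feature_map = np.arange(16.0).reshape(4, 4)  # toy 4x4 activation map
up = upsample_2x(feature_map)                # shape (8, 8)
down = downsample_2x(up)                     # shape (4, 4)
```

Because the kernel sums to one, a constant activation map passes through both operations unchanged; only high-frequency content is smoothed.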

Generator Architecture of Style GAN vs Traditional Architecture

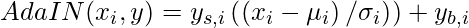
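As a rough illustration of the mapping network, the following NumPy sketch maps a 512-dimensional z to a 512-dimensional w through 8 fully connected layers. The weights are random and untrained, and the leaky ReLU activation, the weight scaling and the normalization of z are assumptions made for this sketch; the text above only fixes the vector sizes and the number of layers.

```python
import numpy as np

LATENT_DIM = 512  # size of z and w, as stated in the text
NUM_LAYERS = 8    # depth of the mapping MLP, as stated in the text

def init_mapping(rng, dim=LATENT_DIM, num_layers=NUM_LAYERS):
    """Random (untrained) weights and zero biases for the fully connected layers."""
    scale = np.sqrt(2.0 / dim)  # assumed initialization scale
    return [(rng.standard_normal((dim, dim)) * scale, np.zeros(dim))
            for _ in range(num_layers)]

def leaky_relu(x, slope=0.2):
    """Assumed activation function; not specified in the text."""
    return np.where(x > 0, x, slope * x)

def mapping_network(z, params):
    """Map latent codes z of shape (batch, 512) to intermediate codes w."""
    # Normalizing z to unit average magnitude is an assumption of this sketch.
    x = z / np.sqrt(np.mean(z ** 2, axis=1, keepdims=True) + 1e-8)
    for weight, bias in params:
        x = leaky_relu(x @ weight + bias)
    return x

rng = np.random.default_rng(0)
params = init_mapping(rng)
z = rng.standard_normal((4, LATENT_DIM))  # a batch of 4 latent vectors
w = mapping_network(z, params)            # shape (4, 512)
```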

- The input to AdaIN is y = (ys, yb), which is generated by applying (A) to (w). The AdaIN operation is defined by the following equation:

$$\mathrm{AdaIN}(x_i, y) = y_{s,i}\,\frac{x_i - \mu(x_i)}{\sigma(x_i)} + y_{b,i}$$

where each feature map x_i is normalized separately, and then scaled and biased using the corresponding scalar components of the style y. Thus the dimensionality of y is twice the number of feature maps (x) on that layer. The synthesis network contains 18 convolutional layers, 2 for each of the resolutions (4×4 – 1024×1024).
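A minimal NumPy sketch of this step is given below: the affine transformation A turns w into a style y = (ys, yb), and AdaIN normalizes each feature map before applying its own scale and bias. The array names, the toy layer with 4 feature maps, and the random weights of A are hypothetical; in a trained model A is learned.

```python
import numpy as np

def style_from_w(w, A_weight, A_bias):
    """Affine transform A: w of shape (512,) -> y = (y_s, y_b), 2 * channels values."""
    y = w @ A_weight + A_bias
    y_s, y_b = np.split(y, 2)
    return y_s, y_b

def adain(x, y_s, y_b, eps=1e-8):
    """AdaIN(x_i, y) = y_s[i] * (x_i - mean(x_i)) / std(x_i) + y_b[i].

    x has shape (channels, H, W); y_s and y_b have shape (channels,).
    """
    mu = x.mean(axis=(1, 2), keepdims=True)    # one mean per feature map
    sigma = x.std(axis=(1, 2), keepdims=True)  # one std per feature map
    x_norm = (x - mu) / (sigma + eps)
    return y_s[:, None, None] * x_norm + y_b[:, None, None]

rng = np.random.default_rng(0)
channels = 4                                   # toy layer with 4 feature maps
x = rng.standard_normal((channels, 8, 8))
w = rng.standard_normal(512)
A_weight = rng.standard_normal((512, 2 * channels)) * 0.01
A_bias = np.concatenate([np.ones(channels), np.zeros(channels)])
y_s, y_b = style_from_w(w, A_weight, A_bias)
out = adain(x, y_s, y_b)
```

After the operation, the mean of each output feature map equals its bias yb and its standard deviation equals the magnitude of its scale ys, which is why y needs two values per feature map.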

- Removing traditional (Latent) input: Most previous generator models feed the random latent code into the first layer of the generator, i.e. the input of the 4×4 level. However, the Style GAN authors concluded that the image generation features are controlled by w and AdaIN. They therefore replace the initial input with a learned constant tensor of size 4×4×512. This also improved the performance of the network.

- Addition of Noisy Input: Gaussian noise (represented by B) is added to each of the activation maps before the AdaIN operations. A different noise sample is generated for each block and is scaled by the learned scaling factors of that layer.

- Many aspects of people’s faces are small and can be regarded as stochastic, such as freckles, the exact placement of hairs and wrinkles. These features make the image more realistic and increase the variety of outputs. The common method to insert these small features into GAN images is to add random noise to the input vector.

- Mixing Regularization: The same intermediate vector is used at every level of the synthesis network, which may cause the network to learn correlations between levels. To reduce this correlation, the model randomly selects two input vectors (z1 and z2) and generates the intermediate vectors (w1 and w2) for them. It then trains some of the levels with the first vector and switches, at a random split point, to the second vector for the remaining levels. Switching at a random split point discourages the network from assuming that adjacent levels are correlated.
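The mixing regularization item above can be sketched as follows. The sketch assumes 18 style inputs (two per resolution) and that w1 and w2 have already been produced by the mapping network; here they are random placeholders. The function name `mix_styles` is hypothetical.

```python
import numpy as np

NUM_LAYERS = 18  # two style inputs per resolution, from 4x4 to 1024x1024

def mix_styles(w1, w2, rng, num_layers=NUM_LAYERS):
    """Return one style vector per layer: w1 before a random split point, w2 after."""
    # The split point lies in 1 .. num_layers - 1, so both vectors are used.
    split = int(rng.integers(1, num_layers))
    use_first = np.arange(num_layers)[:, None] < split
    per_layer = np.where(use_first, w1, w2)  # shape (num_layers, 512)
    return per_layer, split

rng = np.random.default_rng(0)
w1 = rng.standard_normal(512)  # placeholder for the mapping of z1
w2 = rng.standard_normal(512)  # placeholder for the mapping of z2
per_layer, split = mix_styles(w1, w2, rng)
```

Each row of `per_layer` would feed the affine transformation of the corresponding synthesis layer, so layers before the split point see w1 and the remaining layers see w2.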

Style on the Basis of Resolution:

Style changes in Coarse, Middle and Fine Images. Here A and B are the results generated from style mixing

To give more control over the style of the generated image, the synthesis network lets the style be controlled at different levels of detail (or resolution). These levels are defined as:

- Coarse – resolution of (4×4 – 8×8) – affects pose, general hair style, face shape, etc.

- Middle – resolution of (16×16 – 32×32) – affects finer facial features, hair style, eyes open/closed, etc.

- Fine – resolution of (64×64 – 1024×1024) – affects colors (eye, hair and skin) and micro features.
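The three levels above can be mapped onto the 18 style inputs of the synthesis network (two per resolution). The sketch below builds that mapping and copies the styles of one level from a source B into a source A. The names and the placeholder style arrays are hypothetical and only serve to show the indexing.

```python
import numpy as np

RESOLUTIONS = [4 * 2 ** i for i in range(9)]  # 4, 8, 16, ..., 1024

def level_for_resolution(res):
    """Assign a resolution to the coarse, middle or fine level."""
    if res <= 8:
        return "coarse"
    if res <= 32:
        return "middle"
    return "fine"

# Two style inputs per resolution give 18 layers in total.
LAYER_RESOLUTIONS = [res for res in RESOLUTIONS for _ in range(2)]
LAYER_LEVELS = [level_for_resolution(res) for res in LAYER_RESOLUTIONS]

def copy_level(styles_a, styles_b, level):
    """Take the styles of the given level from B and all other styles from A."""
    mask = np.array([lv == level for lv in LAYER_LEVELS])[:, None]
    return np.where(mask, styles_b, styles_a)

styles_a = np.zeros((18, 512))  # placeholder per-layer styles of source A
styles_b = np.ones((18, 512))   # placeholder per-layer styles of source B
mixed = copy_level(styles_a, styles_b, "coarse")
```

With this split, layers 0 to 3 are coarse, layers 4 to 7 are middle, and layers 8 to 17 are fine.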

The authors also varied the noise at these levels. The noise then controls the stochastic changes at the corresponding level. For example, noise at the coarse level causes changes in the broader structure, while noise at the fine level causes changes in the finer details of the image.
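The noise input described earlier can be sketched as follows. The sketch assumes that a single-channel Gaussian noise image is drawn for a layer and added to every feature map after multiplication by a per-feature-map scaling factor; the array of ones stands in for the learned 4×4×512 constant input, and the scaling factors are placeholders for learned values.

```python
import numpy as np

def add_noise(x, noise_scale, rng):
    """Add scaled Gaussian noise to activations x of shape (channels, H, W).

    noise_scale has shape (channels,) and stands in for learned factors.
    """
    # One single-channel noise image, shared by all feature maps of the layer.
    noise = rng.standard_normal((1,) + x.shape[1:])
    return x + noise_scale[:, None, None] * noise

rng = np.random.default_rng(0)
const_input = np.ones((512, 4, 4))  # placeholder for the learned constant input
noise_scale = np.full(512, 0.1)     # placeholder scaling factors
out = add_noise(const_input, noise_scale, rng)
```

Applying the same function at a 4×4 layer perturbs large regions of the final image, while applying it at a 1024×1024 layer only perturbs individual pixels, which matches the coarse versus fine behavior described above.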

Feature Disentanglement Studies:

The aim of the feature disentanglement study is to measure how well the features are separated. The authors present two separate metrics for feature disentanglement:

- Perceptual path length: This metric measures the weighted difference between the VGG embeddings of two consecutive images when interpolating between two random inputs.

- The average perceptual path length in the latent space Z, over all possible endpoints, is defined as

$$l_{Z} = \mathbb{E}\left[\frac{1}{\epsilon^{2}}\, d\Big(G\big(\operatorname{slerp}(z_{1}, z_{2}; t)\big),\; G\big(\operatorname{slerp}(z_{1}, z_{2}; t+\epsilon)\big)\Big)\right]$$

- where z1, z2 ∼ P(z), t ∼ U(0, 1), G is the generator (i.e., g ∘ f for style-based networks), and d(·, ·) evaluates the perceptual distance between the resulting images. Here slerp stands for spherical interpolation. Drastic changes in perceptual distance mean that multiple features have changed together and that they might be entangled.

- Linear separability: This method looks at how well the latent-space points can be separated into two distinct sets by a linear hyperplane, so that each set corresponds to a specific binary attribute of the image. For example, each face image belongs to either the male or the female class.
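The perceptual path length from the list above can be estimated by Monte Carlo sampling, as in the sketch below. The generator and the distance function are passed in as arguments; the toy identity generator and squared Euclidean distance used here are stand-ins for a trained generator and the VGG-based perceptual distance, and normalizing the endpoints inside `slerp` is an assumption of this sketch.

```python
import numpy as np

def slerp(a, b, t):
    """Spherical interpolation between the directions of a and b."""
    a_n = a / np.linalg.norm(a)
    b_n = b / np.linalg.norm(b)
    omega = np.arccos(np.clip(np.dot(a_n, b_n), -1.0, 1.0))
    if np.isclose(omega, 0.0):
        return a_n  # the two directions coincide
    return (np.sin((1.0 - t) * omega) * a_n + np.sin(t * omega) * b_n) / np.sin(omega)

def perceptual_path_length(generator, distance, dim, rng, num_samples=1000, eps=1e-4):
    """Average of d(G(slerp(z1, z2; t)), G(slerp(z1, z2; t + eps))) / eps^2."""
    total = 0.0
    for _ in range(num_samples):
        z1 = rng.standard_normal(dim)
        z2 = rng.standard_normal(dim)
        t = rng.uniform(0.0, 1.0)
        img_a = generator(slerp(z1, z2, t))
        img_b = generator(slerp(z1, z2, t + eps))
        total += distance(img_a, img_b) / eps ** 2
    return total / num_samples

def toy_generator(z):
    """Stand-in for a trained generator: returns the latent vector itself."""
    return z

def toy_distance(a, b):
    """Stand-in for the perceptual distance: squared Euclidean distance."""
    return float(np.sum((a - b) ** 2))

rng = np.random.default_rng(0)
ppl = perceptual_path_length(toy_generator, toy_distance, dim=512, rng=rng, num_samples=200)
```

For the toy generator the estimate is close to the squared angle between two random directions, roughly (π/2)² in high dimensions. With a real generator, a lower value indicates a smoother and less entangled latent space.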

The authors applied these metrics to both w (the intermediate latent space) and z (the input latent space) and concluded that w is more separable. This also underscores the importance of the 8-layer mapping network.

Results:

The paper reports state-of-the-art results on the CelebA-HQ dataset. It also proposes a new dataset of human faces called Flickr-Faces-HQ (FFHQ), which has considerably more variation than CelebA-HQ. The Style GAN architecture also generates considerably good results on FFHQ. The results on these two datasets are reported as FID scores.

The FID score is calculated using 50,000 randomly chosen images from the training set, and the lowest distance encountered over the course of training is reported.
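For reference, the Fréchet distance behind the FID score can be sketched in NumPy as below. It compares the mean and covariance of two sets of feature vectors. In practice the features come from a pretrained Inception network; the random arrays here are hypothetical stand-ins, and the eigenvalue-based trace of the matrix square root is one of several possible ways to compute that term.

```python
import numpy as np

def frechet_distance(feats_real, feats_fake):
    """Frechet distance between Gaussians fitted to two (n, d) feature sets."""
    mu1 = feats_real.mean(axis=0)
    mu2 = feats_fake.mean(axis=0)
    s1 = np.cov(feats_real, rowvar=False)
    s2 = np.cov(feats_fake, rowvar=False)
    # Tr((S1 S2)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of S1 S2, which are real and non-negative in theory.
    eigvals = np.linalg.eigvals(s1 @ s2)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    mean_term = np.sum((mu1 - mu2) ** 2)
    return float(mean_term + np.trace(s1) + np.trace(s2) - 2.0 * tr_sqrt)

rng = np.random.default_rng(0)
real = rng.standard_normal((500, 8))  # stand-in for features of real images
fake = real + 3.0                     # same spread, shifted mean
fid_same = frechet_distance(real, real)
fid_shift = frechet_distance(real, fake)
```

Identical feature sets give a distance of zero, and a pure shift of the mean contributes the squared length of that shift, so lower values mean the generated images are statistically closer to the real ones.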
