robots.txt File

Last Updated: 04 Nov, 2018

What is robots.txt File?

The surface web is an open place. Almost every website on it can be reached by search engines: when we search for something on Google, a vast number of results come back. But what if web designers create something on their website and don’t want Google or other search engines to access it? This is where the robots.txt file comes into play. A robots.txt file is a plain text file that the site owner creates to tell search engines and other bots which parts of the site they should not crawl. It lists the allowed and disallowed paths, and whenever a well-behaved bot wants to access the website, it checks the robots.txt file first and fetches only the paths that are allowed. Disallowed pages are not crawled, so their content normally does not show up in search results. Compliance is voluntary, so a badly behaved bot can ignore the file.

Need for a robots.txt file: The most important reason is to keep entire sections of a website away from crawlers. It also helps to keep search engines from crawling certain files. Moreover, it can specify the location of the sitemap.

How to create robots.txt file?

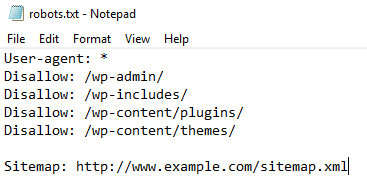

The robots.txt file is a simple text file placed on your web server which tells web crawlers such as Googlebot whether they may access a file or not. It can be created in any plain text editor, such as Notepad. The syntax is:

User-agent: {name of the crawler, without braces}

Disallow: {path disallowed by the owner, i.e. crawlers should not fetch it}

Sitemap: {the sitemap location of the website}

Description of components:

-

User-agent: *

Disallow:

The website is open to all crawlers (the asterisk matches every user-agent) and none of its content is disallowed.

-

User-agent: Googlebot

Disallow: /

Googlebot, Google's crawler, is disallowed from crawling any of the site's content.

-

User-agent: *

Disallow: /file.html

This is partial access. Everything except file.html can be accessed.

-

Visit-time: 0200-0300

This bounds the time for the crawler. The content can be crawled only during the given interval (here 02:00 to 03:00). Visit-time is a non-standard directive, and many crawlers ignore it.

-

Crawl-delay: 20

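Asks crawlers to wait between successive requests (the value is conventionally interpreted as seconds), so that frequent hits do not slow the site down. Crawl-delay is also non-standard, and some crawlers, including Googlebot, ignore it.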
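The Disallow rules described above can be tried out with Python's standard `urllib.robotparser` module. This is a minimal sketch, not a model of any particular search engine: the helper `is_allowed`, the `example.com` URLs, and the bot names `AnyBot` and `Bingbot` are assumptions made for illustration, and real crawlers may match rules somewhat differently. Note that the lines of one rule group are written without blank lines between them, because this parser treats a blank line as the end of a group.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(rules: str, agent: str, url: str) -> bool:
    """Parse robots.txt text and report whether `agent` may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(agent, url)


# The three rule sets described in the list above.
OPEN_TO_ALL = "User-agent: *\nDisallow:"
BLOCK_GOOGLEBOT = "User-agent: Googlebot\nDisallow: /"
BLOCK_ONE_FILE = "User-agent: *\nDisallow: /file.html"

# Empty Disallow: every crawler may fetch everything.
print(is_allowed(OPEN_TO_ALL, "AnyBot", "https://example.com/page.html"))

# "Disallow: /" for Googlebot only: Googlebot is blocked, other bots are not.
print(is_allowed(BLOCK_GOOGLEBOT, "Googlebot", "https://example.com/page.html"))
print(is_allowed(BLOCK_GOOGLEBOT, "Bingbot", "https://example.com/page.html"))

# Partial access: only /file.html is off limits.
print(is_allowed(BLOCK_ONE_FILE, "AnyBot", "https://example.com/file.html"))
print(is_allowed(BLOCK_ONE_FILE, "AnyBot", "https://example.com/index.html"))
```

The five calls print `True`, `False`, `True`, `False`, `True` in that order.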

Once the file is complete and ready, save it with the name “robots.txt” (this is important, don’t use another name) and upload it to the root directory of the website. Crawlers look for the file only at that location, so this is what allows the robots.txt file to do its work.
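For sites where the file is generated rather than typed by hand, the same steps can be scripted. The following is a rough sketch under stated assumptions: the function names `build_robots_txt` and `save_robots_txt` and the sitemap URL are hypothetical, and a temporary directory stands in for the real web root, which depends on your server.

```python
import tempfile
from pathlib import Path
from typing import Optional, Sequence


def build_robots_txt(user_agent: str, disallowed: Sequence[str],
                     sitemap: Optional[str] = None) -> str:
    """Return robots.txt content for a single user-agent group."""
    lines = [f"User-agent: {user_agent}"]
    # An empty Disallow value means that nothing is disallowed.
    lines += [f"Disallow: {path}" for path in disallowed] or ["Disallow:"]
    if sitemap:
        lines += ["", f"Sitemap: {sitemap}"]
    return "\n".join(lines) + "\n"


def save_robots_txt(site_root: Path, content: str) -> Path:
    """Write the content to <site_root>/robots.txt (the name must be exact)."""
    target = site_root / "robots.txt"
    target.write_text(content, encoding="utf-8")
    return target


# Hypothetical sitemap URL, used only for illustration.
content = build_robots_txt("*", ["/file.html"],
                           "https://www.example.com/sitemap.xml")

# A temporary directory stands in for the real web root in this sketch.
with tempfile.TemporaryDirectory() as tmp:
    saved = save_robots_txt(Path(tmp), content)
    saved_name = saved.name
    round_trip = saved.read_text(encoding="utf-8")

print(content)
```

In a real deployment, `site_root` would be whatever directory your server maps to the site's top-level URL.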

Note: The robots.txt file is accessible to everyone on the internet. Anyone can see the names of the allowed and disallowed user agents and files. The file reveals only those names, not the content, but listing a path in robots.txt does not by itself stop anyone from opening it.

To check a website’s robots.txt file, append "/robots.txt" to the website's address:

"website name" + "/robots.txt"

e.g. https://www.geeksforgeeks.org/robots.txt
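The "website name plus /robots.txt" rule can be expressed as a small helper that works from any page URL on the site. This is a sketch using only the standard library; the function name `robots_url` and the sample article path are assumptions made for the example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that hosts `page_url`."""
    parts = urlsplit(page_url)
    # Keep the scheme and host; replace the path, query, and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.geeksforgeeks.org/some/article/?ref=home"))
# prints https://www.geeksforgeeks.org/robots.txt
```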

How does robots.txt file work?

Search engines run crawler programs (search bots, which act as the user-agent) that discover and index websites so that their pages can later be displayed in search results. Before crawling a website, the bot looks for the site's robots.txt file. If there is one, the bot reads it to find the allowed and disallowed paths. It skips all the disallowed paths listed in the file and goes on to crawl the allowed content, which can then appear in the results. Thus, a compliant bot sees only the content that the owner of the website allows.

Example:

User-agent: Googlebot

Disallow: /wp-admin/

Disallow: /wp-includes/

Disallow: /wp-content/plugins/

Disallow: /wp-content/themes/

Crawl-delay: 20

Visit-time: 0200-0300

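This asks Googlebot to crawl the site only between 02:00 and 03:00 UTC, to wait 20 seconds (not milliseconds) between successive requests, and to skip the disallowed URLs. Crawl-delay and Visit-time are non-standard directives; in practice Googlebot ignores both, so these two lines matter only for crawlers that support them.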
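To see how one parser reads this example, it can be fed to Python's standard `urllib.robotparser`. This is only a sketch: the `example.com` URLs are assumptions, and the result shows how this particular library behaves, not how Googlebot itself behaves. The library understands Crawl-delay but does not recognize Visit-time and silently skips that line.

```python
from urllib.robotparser import RobotFileParser

# The example rules, written without blank lines inside the group.
EXAMPLE = """\
User-agent: Googlebot
Disallow: /wp-admin/
Disallow: /wp-includes/
Disallow: /wp-content/plugins/
Disallow: /wp-content/themes/
Crawl-delay: 20
Visit-time: 0200-0300
"""

parser = RobotFileParser()
parser.parse(EXAMPLE.splitlines())

# A URL under a disallowed directory is rejected.
blocked = parser.can_fetch("Googlebot", "https://example.com/wp-admin/options.php")
# Any other URL is accepted.
allowed = parser.can_fetch("Googlebot", "https://example.com/blog/post-1/")
# Crawl-delay is exposed as an integer; a client would treat it as seconds.
delay = parser.crawl_delay("Googlebot")

print(blocked, allowed, delay)
# prints False True 20
```

A crawler built on this library would still have to enforce the delay itself, for example by sleeping between requests, and would need its own logic for Visit-time.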

Reason to use robots.txt file:

People have different opinions about having a robots.txt file on their website.

Reasons to have robots.txt file:

- It blocks content from search engines.

- It tunes access to the site for reputable robots.

- It is useful for a website that is still under development and should not yet appear in search engines.

- It can make content available only to specific search engines.

Use of robots.txt file?

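The most important use of a robots.txt file is to keep content out of search engines. Not everything on a website should be shown to the open world, and the robots.txt file handles this concern easily for compliant crawlers. Because the file is only a request, content that must stay truly private also needs real access control, such as authentication.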
