Propositional Logic Hybrid Agent and Logical State

Last Updated :

28 Feb, 2022

Prerequisite: Wumpus World in Artificial Intelligence

To create a hybrid agent for the wumpus world, the ability to deduce various aspects of the state of the world can be combined fairly simply with condition–action rules and problem-solving algorithms. The agent program maintains and updates a knowledge base and a current plan. The initial knowledge base contains the atemporal axioms, which are those that do not depend on $t$, such as the axiom relating the breeziness of squares to the presence of pits. At each time step, the new percept sentence is added, along with all the axioms that depend on $t$, such as the successor-state axioms. (The agent does not need axioms for future time steps, as explained in the next section.) The agent then uses logical inference, by ASKing questions of the knowledge base, to work out which squares are safe and which have yet to be visited.
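The ASK queries described above can be illustrated with a minimal sketch. It assumes a tiny knowledge base checked by truth-table enumeration; a real agent would typically use a more efficient inference procedure. Sentences are represented as Python predicates over a model, and the symbol names `B11`, `P12`, `P21` and the function `ask` are hypothetical illustrations, not part of the original text.

```python
from itertools import product
from typing import Callable, Dict, List

# A model assigns True/False to each proposition symbol.
Model = Dict[str, bool]
# Assumption for brevity: a sentence is a Python predicate over a model.
Sentence = Callable[[Model], bool]


def ask(kb: List[Sentence], query: Sentence, symbols: List[str]) -> bool:
    """Return True if every model of the KB also satisfies the query (KB |= query).

    Truth-table model checking: exponential in len(symbols), fine for a toy KB.
    """
    for values in product([True, False], repeat=len(symbols)):
        model = dict(zip(symbols, values))
        if all(s(model) for s in kb) and not query(model):
            return False  # counter-model found: the KB does not entail the query
    return True


symbols = ["B11", "P12", "P21"]
kb: List[Sentence] = [
    # Atemporal axiom: [1,1] is breezy iff a neighbouring square has a pit.
    lambda m: m["B11"] == (m["P12"] or m["P21"]),
    # Percept: no breeze in [1,1].
    lambda m: not m["B11"],
]

print(ask(kb, lambda m: not m["P12"], symbols))  # True: [1,2] is provably pit-free
print(ask(kb, lambda m: m["P12"], symbols))      # False
```

The only model consistent with the axiom and the percept has no pit in either neighbour, so both "no pit" queries succeed while the "pit" query fails.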

The main body of the agent program constructs a plan based on a decreasing priority of goals. First, if there is a glitter, the program constructs a plan to grab the gold, follow a route back to the initial location, and climb out of the cave. Otherwise, if there is no current plan, the program plans a route to the closest safe square it has not yet visited, making sure the route passes only through safe squares. Route planning is done with A* search, not with an ASK. If there are no safe squares to explore and the agent still has an arrow, the next step is to try to make a safe square by shooting at one of the possible wumpus locations. These are found by asking where $\text{ASK}(KB, \neg W_{x,y})$ is false, that is, where it is not known that there is no wumpus. The function PLAN-SHOT (not shown) uses PLAN-ROUTE to plan a sequence of actions that will line up this shot. If this fails, the program looks for a square to explore that is not provably unsafe, that is, a square for which $\text{ASK}(KB, \neg OK^t_{x,y})$ returns false. If there is no such square, the mission is impossible, and the agent retreats to [1, 1] and climbs out of the cave.
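The route-planning step can be sketched as A* search restricted to squares the agent has already proven safe. This is a minimal sketch assuming 4-connected movement with a unit cost per move and a Manhattan-distance heuristic; it ignores the turning actions of the full wumpus world, and `plan_route` and the `safe` set are hypothetical names.

```python
import heapq
from typing import Dict, List, Optional, Set, Tuple

Square = Tuple[int, int]


def plan_route(start: Square, goal: Square, safe: Set[Square]) -> Optional[List[Square]]:
    """A* search that only expands squares in `safe`; returns the path or None."""

    def h(sq: Square) -> int:
        # Manhattan distance: admissible, since each move changes one coordinate by 1.
        return abs(sq[0] - goal[0]) + abs(sq[1] - goal[1])

    frontier: List[Tuple[int, int, Square]] = [(h(start), 0, start)]
    came_from: Dict[Square, Optional[Square]] = {start: None}
    cost: Dict[Square, int] = {start: 0}
    while frontier:
        _, g, current = heapq.heappop(frontier)
        if current == goal:
            # Walk the parent links back to the start, then reverse.
            path: List[Square] = []
            node: Optional[Square] = current
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if g > cost[current]:
            continue  # stale queue entry; a cheaper path was already found
        x, y = current
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if nxt not in safe:
                continue  # never route through a square not proven safe
            new_cost = g + 1
            if new_cost < cost.get(nxt, new_cost + 1):
                cost[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(frontier, (new_cost + h(nxt), new_cost, nxt))
    return None


safe = {(1, 1), (2, 1), (1, 2), (1, 3), (2, 3)}
print(plan_route((2, 1), (2, 3), safe))
# [(2, 1), (1, 1), (1, 2), (1, 3), (2, 3)]: detours around the unproven (2, 2)
```

Because every square pushed onto the frontier must be in `safe`, the returned route never passes through a square the knowledge base has not proven safe; `None` signals that no such route exists.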

Logical State Estimation

The agent program performs well, but it has one major weakness: the computational cost of the calls to ASK keeps growing over time. This is mainly because the required inferences have to reach further and further back in time and involve more and more proposition symbols. Obviously, this is unsustainable; we cannot have an agent whose processing time for each percept grows in proportion to the length of its life! What we really need is a constant update time, that is, one that is independent of $t$. The obvious solution is to save, or cache, the results of inference so that the inference process at the next time step can build on the results of earlier steps instead of starting again from scratch. The belief state, that is, some representation of the set of all possible current states of the world, can replace the history of percepts and all their ramifications.

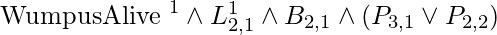

State estimation is the process of updating the belief state as new percepts arrive. Instead of an explicit list of states as in Section 4.4, we can use a logical sentence involving the proposition symbols associated with the current time step, as well as the atemporal symbols. For instance, the logical sentence

$$\mathit{WumpusAlive}^1 \land L^1_{2,1} \land B_{2,1} \land (P_{3,1} \lor P_{2,2})$$

describes the set of all states at time 1 in which the wumpus is alive, the agent is at [2, 1], that square is breezy, and there is a pit in [3, 1] or [2, 2], or both.
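To make the idea of a belief state as a set of states concrete, the sketch below enumerates the truth assignments that satisfy the sentence for the situation described above (wumpus alive, agent at [2, 1], breeze, pit in [3, 1] or [2, 2] or both). It assumes only these five symbols matter and uses hypothetical names such as `L1_21` for the agent being at [2, 1] at time 1; a full wumpus-world encoding would have many more symbols.

```python
from itertools import product
from typing import Callable, Dict, List

Model = Dict[str, bool]

# Hypothetical names for the time-1 fluents and the atemporal symbols.
SYMBOLS = ["WumpusAlive1", "L1_21", "B21", "P31", "P22"]


def belief_sentence(m: Model) -> bool:
    # WumpusAlive^1 ∧ L^1_{2,1} ∧ B_{2,1} ∧ (P_{3,1} ∨ P_{2,2})
    return m["WumpusAlive1"] and m["L1_21"] and m["B21"] and (m["P31"] or m["P22"])


def models(sentence: Callable[[Model], bool], symbols: List[str]) -> List[Model]:
    """Enumerate every truth assignment that satisfies the sentence."""
    result = []
    for values in product([True, False], repeat=len(symbols)):
        m = dict(zip(symbols, values))
        if sentence(m):
            result.append(m)
    return result


belief_state = models(belief_sentence, SYMBOLS)
print(len(belief_state))  # 3: pit in [3,1] only, in [2,2] only, or in both
```

A single compact sentence thus stands for the whole set of three states, without listing them explicitly.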

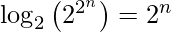

It turns out that maintaining an exact belief state as a logical formula is not straightforward. If there are $n$ fluent symbols for time $t$, there are $2^n$ possible states, that is, assignments of truth values to those symbols. The set of belief states is the powerset (set of all subsets) of the set of physical states. Since there are $2^n$ physical states, there are $2^{2^n}$ belief states. Even if we used the most compact possible encoding of logical formulas, with each belief state represented by a distinct binary number, we would need numbers with $\log_2\left(2^{2^n}\right) = 2^n$ bits to label the current belief state. In other words, exact state estimation may require logical formulas whose size is exponential in the number of symbols.
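Plugging small values of $n$ into the counts $2^n$ (physical states), $2^{2^n}$ (belief states), and $2^n$ (bits per label) shows how quickly the label size grows:

$$
\begin{aligned}
n = 3:&\quad 2^{3} = 8 \text{ states}, \quad 2^{2^{3}} = 2^{8} = 256 \text{ belief states}, \quad \log_2 256 = 8 \text{ bits}\\
n = 10:&\quad 2^{10} = 1024 \text{ states}, \quad 2^{2^{10}} = 2^{1024} \text{ belief states}, \quad \log_2 2^{1024} = 1024 \text{ bits}
\end{aligned}
$$

Each additional fluent symbol doubles the number of bits needed to label a belief state.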

A very common and natural scheme for approximate state estimation is to represent belief states as conjunctions of literals, that is, 1-CNF formulas. Given the belief state at $t-1$, the agent program simply tries to prove $X^t$ and $\neg X^t$ for each symbol $X^t$ (as well as for each atemporal symbol whose truth value is not yet known). The conjunction of provable literals becomes the new belief state, and the previous belief state at $t-1$ is discarded.
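The 1-CNF update can be sketched as follows, under stated assumptions: entailment is checked by truth-table enumeration, time steps are encoded in symbol names (e.g. `HaveArrow0`, `HaveArrow1`), and the two successor-state axioms are simplified, hypothetical versions written only for illustration. For each symbol to be estimated, the hypothetical function `one_cnf_update` tries to prove the positive literal and then the negative one, and keeps whichever is provable.

```python
from itertools import product
from typing import Callable, Dict, List

Model = Dict[str, bool]
Sentence = Callable[[Model], bool]


def entails(kb: List[Sentence], query: Sentence, symbols: List[str]) -> bool:
    """Truth-table check that every model of the KB satisfies the query."""
    for values in product([True, False], repeat=len(symbols)):
        m = dict(zip(symbols, values))
        if all(s(m) for s in kb) and not query(m):
            return False
    return True


def one_cnf_update(prev: Dict[str, bool], constraints: List[Sentence],
                   symbols: List[str], targets: List[str]) -> Dict[str, bool]:
    """Return the new 1-CNF belief state as {symbol: value}, provable literals only.

    prev:        previous 1-CNF belief state (a conjunction of literals).
    constraints: successor-state axioms, the action taken, and the new percept.
    targets:     symbols to estimate (current fluents plus unknown atemporal symbols).
    """
    kb = [lambda m, s=s, v=v: m[s] == v for s, v in prev.items()] + constraints
    belief: Dict[str, bool] = {}
    for x in targets:
        if entails(kb, lambda m, x=x: m[x], symbols):
            belief[x] = True
        elif entails(kb, lambda m, x=x: not m[x], symbols):
            belief[x] = False
        # If neither literal is provable, x is simply left out of the belief state.
    return belief


SYMBOLS = ["HaveArrow0", "WumpusAlive0", "Shoot0", "Scream1", "HaveArrow1", "WumpusAlive1"]
AXIOMS: List[Sentence] = [
    # Simplified, hypothetical successor-state axioms.
    lambda m: m["HaveArrow1"] == (m["HaveArrow0"] and not m["Shoot0"]),
    lambda m: m["WumpusAlive1"] == (m["WumpusAlive0"] and not m["Scream1"]),
]
prev_belief = {"HaveArrow0": True, "WumpusAlive0": True}
action: Sentence = lambda m: m["Shoot0"]        # the agent shot at time 0
percept: Sentence = lambda m: not m["Scream1"]  # ...and heard no scream at time 1

new_belief = one_cnf_update(prev_belief, AXIOMS + [action, percept], SYMBOLS,
                            ["HaveArrow1", "WumpusAlive1"])
print(new_belief)  # {'HaveArrow1': False, 'WumpusAlive1': True}
```

Only the time-1 literals are kept; the time-0 literals in `prev_belief` are dropped, matching the step where the previous belief state is discarded. Without the percept, `WumpusAlive1` would be provable neither way and would be omitted.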

It is important to keep in mind that this scheme may lose information as time passes. For example, if the sentence above were the true belief state, neither $P_{3,1}$ nor $P_{2,2}$ would be provable individually, so neither would appear in the 1-CNF belief state. On the other hand, every literal in the 1-CNF belief state is proved from the previous belief state, and the initial belief state is a true assertion, so we know the entire 1-CNF belief state must be true. As a result, the set of possible states represented by the 1-CNF belief state includes all states that are in fact possible given the full percept history. The 1-CNF belief state thus acts as a simple outer envelope, or conservative approximation, around the exact belief state. This idea of conservative approximations to complicated sets is a recurring theme in many areas of AI.
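Both the information loss and the outer-envelope property can be checked directly on the example belief state (wumpus alive, agent at [2, 1], breeze, pit in [3, 1] or [2, 2] or both). This sketch assumes five hypothetical symbol names (for example `L1_21` for the agent being at [2, 1] at time 1) and computes the 1-CNF approximation by keeping every literal that is true in all models of the exact belief state, which for a single literal is the same as being entailed.

```python
from itertools import product
from typing import Dict, List

Model = Dict[str, bool]
SYMBOLS = ["WumpusAlive1", "L1_21", "B21", "P31", "P22"]


def exact(m: Model) -> bool:
    # WumpusAlive^1 ∧ L^1_{2,1} ∧ B_{2,1} ∧ (P_{3,1} ∨ P_{2,2})
    return m["WumpusAlive1"] and m["L1_21"] and m["B21"] and (m["P31"] or m["P22"])


def all_models() -> List[Model]:
    return [dict(zip(SYMBOLS, v)) for v in product([True, False], repeat=len(SYMBOLS))]


def one_cnf(models: List[Model]) -> Dict[str, bool]:
    """Keep each literal that is true in every given model (i.e. is entailed)."""
    literals: Dict[str, bool] = {}
    for s in SYMBOLS:
        if all(m[s] for m in models):
            literals[s] = True
        elif all(not m[s] for m in models):
            literals[s] = False
    return literals


exact_set = [m for m in all_models() if exact(m)]
approx = one_cnf(exact_set)
approx_set = [m for m in all_models() if all(m[s] == v for s, v in approx.items())]

print(approx)                                   # no literal for P31 or P22
print(len(exact_set), len(approx_set))          # 3 4
print(all(m in approx_set for m in exact_set))  # True: the approximation is a superset
```

The one extra state admitted by the approximation is the one with no pit in either [3, 1] or [2, 2], which the exact belief state rules out.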
