Prior and Posterior Gaussian Process for Different kernels in Scikit Learn

Last Updated: 09 Jan, 2023

In this article, we will learn about the prior and posterior Gaussian processes for different kernels. First, we look at what the prior and posterior Gaussian processes are. After that, we use the scikit-learn library to implement them in Python.

What are the Prior and Posterior Gaussian Processes?

In Gaussian process regression, the concept of a prior and posterior distribution is used to make predictions about the function that generated the data. The prior distribution is the initial belief about the function before any data is observed, and the posterior distribution is the updated belief about the function after the data is observed.

The prior distribution is defined by the mean function and covariance function (also known as the kernel) of the Gaussian process. These parameters can be specified by the user, or they can be estimated from the data. The posterior distribution is then computed using Bayesian inference, based on the observed data and the prior distribution.
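For a zero-mean prior with Gaussian noise, this Bayesian update can be written down directly. The following is a minimal sketch, assuming a zero mean function, an RBF kernel with length scale 1, and a noise variance of 0.01. The toy data and these values are assumptions made for illustration and are not part of the article's dataset. It computes the posterior mean and standard deviation with NumPy and compares them with what GaussianProcessRegressor returns when hyperparameter optimization is switched off.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF

# Toy 1-D data, made up for illustration only
X_obs = np.array([[-2.0], [-0.5], [1.0], [2.5]])
y_obs = np.sin(X_obs).ravel()
X_new = np.linspace(-3, 3, 7).reshape(-1, 1)

kernel = RBF(length_scale=1.0)
noise = 1e-2  # assumed noise variance, added to the diagonal of K

# Posterior of a zero-mean GP prior, written out with linear algebra
K = kernel(X_obs) + noise * np.eye(len(X_obs))   # train/train covariance
K_s = kernel(X_new, X_obs)                       # new/train covariance
K_ss = kernel(X_new)                             # new/new covariance
mean_manual = K_s @ np.linalg.solve(K, y_obs)
cov_manual = K_ss - K_s @ np.linalg.solve(K, K_s.T)
std_manual = np.sqrt(np.diag(cov_manual))

# Same model in scikit-learn; optimizer=None keeps the kernel fixed
gp = GaussianProcessRegressor(kernel=kernel, alpha=noise, optimizer=None)
gp.fit(X_obs, y_obs)
mean_sk, std_sk = gp.predict(X_new, return_std=True)

print(np.allclose(mean_manual, mean_sk, atol=1e-6))
print(np.allclose(std_manual, std_sk, atol=1e-6))
```

Here the `alpha` argument plays the role of the noise variance: scikit-learn adds it to the diagonal of the training covariance matrix.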

The posterior distribution represents the updated belief about the function based on the observed data, and it can be used to make predictions about the function at new input points. For a Gaussian process, the posterior at any set of new points is itself Gaussian, so its mean and variance can be computed directly: the mean is used as the predicted output value, and the variance measures the uncertainty of the prediction. Sampling from the posterior distribution gives a set of possible functions that could have generated the observed data.
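The difference between the two distributions can be seen by drawing samples before and after fitting. The sketch below assumes a small made-up 1-D dataset and an RBF kernel with a fixed length scale of 1. It relies on the fact that GaussianProcessRegressor answers `predict` and `sample_y` from the prior when it has not been fitted yet.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF

# Toy 1-D data, made up for illustration only
X_obs = np.array([[-2.0], [-0.5], [1.0], [2.5]])
y_obs = np.sin(X_obs).ravel()
X_grid = np.linspace(-3, 3, 50).reshape(-1, 1)

gp = GaussianProcessRegressor(kernel=RBF(length_scale=1.0), optimizer=None)

# Before fit(): predictions and samples come from the prior
prior_mean, prior_std = gp.predict(X_grid, return_std=True)
prior_samples = gp.sample_y(X_grid, n_samples=5, random_state=0)

# After fit(): predictions and samples come from the posterior
gp.fit(X_obs, y_obs)
post_mean, post_std = gp.predict(X_grid, return_std=True)
post_samples = gp.sample_y(X_grid, n_samples=5, random_state=0)

# Each column of the sample arrays is one function drawn on the grid
print(prior_samples.shape, post_samples.shape)
print(prior_std.max(), post_std.max())
```

The prior has zero mean and the same standard deviation everywhere, while the posterior standard deviation shrinks near the observed points. Plotting the columns of `prior_samples` and `post_samples` against `X_grid` shows this visually.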

Kernels in Scikit Learn

In scikit-learn, the GaussianProcessRegressor class can be used to implement Gaussian process regression. This class allows you to specify the covariance function (also known as a kernel) that you want to use. Some common kernels available in scikit-learn are the squared exponential kernel (also known as the Radial Basis Function or RBF kernel), the Matern kernel, and the periodic kernel (called ExpSineSquared in scikit-learn).

Each of these kernels has its own characteristics and can be more or less appropriate for different types of data and prediction tasks. The squared exponential kernel is a popular choice for many regression problems: it produces very smooth functions whose rate of variation is set by a single length scale, which makes it well-suited for modeling functions that vary smoothly. The Matern kernel is a generalization of the squared exponential kernel with an extra smoothness parameter, and can be used for problems where the underlying function may not be smooth. The periodic kernel is useful for modeling periodic functions.

It is also possible to combine multiple kernels before passing them to the GaussianProcessRegressor class. Adding or multiplying two kernel objects with the + and * operators creates a Sum or Product kernel, which can be useful for modeling more complex functions.
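As a small illustration of combining kernels, the sketch below builds a sum and a product of an RBF kernel and an ExpSineSquared kernel. The parameter values and the input grid are arbitrary choices made for this example.

```python
import numpy as np
from sklearn.gaussian_process.kernels import RBF, ExpSineSquared, Sum, Product

X = np.linspace(0, 5, 6).reshape(-1, 1)

smooth = RBF(length_scale=2.0)
periodic = ExpSineSquared(length_scale=1.0, periodicity=1.5)

# The + and * operators build Sum and Product kernel objects
k_sum = smooth + periodic
k_prod = smooth * periodic
print(isinstance(k_sum, Sum), isinstance(k_prod, Product))

# The combined kernel matrices are the element-wise sum and product
print(np.allclose(k_sum(X), smooth(X) + periodic(X)))
print(np.allclose(k_prod(X), smooth(X) * periodic(X)))
```

Either combined kernel can then be passed to GaussianProcessRegressor through its `kernel` argument, just like a single kernel.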

Squared Exponential

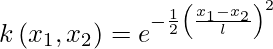

The squared exponential kernel, also known as the Radial Basis Function (RBF) kernel, is a popular choice for many regression problems. It is a smooth, stationary kernel that is defined as follows:

$$k(x_1, x_2) = \exp\left(-\frac{\lVert x_1 - x_2 \rVert^2}{2 l^2}\right)$$

where x1 and x2 are input points, and l is the length scale of the kernel.

The squared exponential kernel is well-suited for modeling functions that vary smoothly, and its length scale controls how far apart two inputs can be while their outputs remain strongly correlated. It is also a stationary kernel, which means that it does not depend on the absolute values of the input points, only on the distances between them.
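Both properties can be checked numerically. The sketch below uses random 2-D points and a length scale of 1.5, both arbitrary choices for illustration. It evaluates the squared exponential kernel by hand, compares it with scikit-learn's RBF class, and then shifts all inputs to confirm stationarity.

```python
import numpy as np
from scipy.spatial.distance import cdist
from sklearn.gaussian_process.kernels import RBF

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 2))
length_scale = 1.5

# k(x1, x2) = exp(-||x1 - x2||^2 / (2 * l^2))
sq_dists = cdist(X, X, metric="sqeuclidean")
K_manual = np.exp(-sq_dists / (2 * length_scale**2))

rbf = RBF(length_scale=length_scale)
print(np.allclose(K_manual, rbf(X)))

# Stationarity: shifting every input by the same vector leaves K unchanged
shift = np.array([10.0, -3.0])
print(np.allclose(rbf(X), rbf(X + shift)))
```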

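The examples in this article use a heart disease dataset, which is loaded and split into training and test sets as follows:

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.metrics import roc_auc_score as ras

# Load the heart disease dataset
data = pd.read_csv(
    "100DaysOfML/main/Day14%3A%20Logistic_Regression"
    "_Metric_and_practice/heart_disease.csv")

# Separate the features from the target column
X = data.drop("target", axis=1)
y = data["target"]

# Hold out 25% of the rows for evaluation
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42)
```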

In scikit-learn, the squared exponential kernel can be used with the GaussianProcessRegressor class by using the RBF kernel class, as shown in the following example:

```python
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF

# RBF() starts from length_scale=1.0
kernel = RBF()
gp = GaussianProcessRegressor(kernel=kernel)
gp.fit(X_train, y_train)

# Use the predicted mean as the score for ROC AUC
y_pred = gp.predict(X_test)
ras(y_test, y_pred)
```

Output:

0.705226480836237

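Note that by default GaussianProcessRegressor tunes the length scale during fit() by maximizing the log marginal likelihood, starting from the initial value of the kernel. The initial value and the bounds of the length scale may still need to be adjusted to achieve the best performance on your specific data and prediction task.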
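One way to see the effect of this tuning is to compare the kernel before and after fitting. The sketch below is a hedged illustration on made-up noisy sine data rather than the heart disease dataset. The names `X_toy`, `y_toy` and `gp_toy`, the bounds, the noise level `alpha=0.01` and the number of optimizer restarts are all assumptions chosen for this example.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF

# Made-up noisy sine data for illustration only
rng = np.random.default_rng(0)
X_toy = np.sort(rng.uniform(0, 10, size=30)).reshape(-1, 1)
y_toy = np.sin(X_toy).ravel() + 0.1 * rng.normal(size=30)

# length_scale=1.0 is only a starting value; fit() searches within the bounds
kernel = RBF(length_scale=1.0, length_scale_bounds=(1e-2, 1e2))
gp_toy = GaussianProcessRegressor(kernel=kernel, alpha=0.01,
                                  n_restarts_optimizer=5, random_state=0)
gp_toy.fit(X_toy, y_toy)

# kernel is the initial kernel, kernel_ holds the optimized hyperparameters
print("initial kernel:", gp_toy.kernel)
print("fitted kernel: ", gp_toy.kernel_)
print("log marginal likelihood:",
      gp_toy.log_marginal_likelihood(gp_toy.kernel_.theta))
```

Setting `optimizer=None` instead keeps the length scale at the value passed to the kernel, which is useful when it is tuned by other means such as cross-validation.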

Matern Kernel

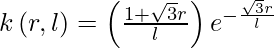

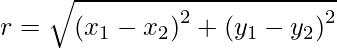

The Matern kernel is a generalization of the squared exponential kernel, which can be used for problems where the underlying function may not be smooth. It is defined as follows:

$$k(x_1, x_2) = \frac{1}{\Gamma(\nu)\, 2^{\nu - 1}} \left(\frac{\sqrt{2\nu}\, r}{l}\right)^{\nu} K_{\nu}\left(\frac{\sqrt{2\nu}\, r}{l}\right)$$

where x1 and x2 are input points, r is the Euclidean distance between the points, l is the length scale of the kernel, Γ is the gamma function, and K_ν is the modified Bessel function of the second kind.

The Matern kernel has two parameters, nu and l. The parameter nu controls the smoothness: larger values of nu correspond to more differentiable functions, and as nu tends to infinity the Matern kernel becomes the squared exponential kernel. The parameter l is the length scale of the kernel, which controls how quickly the kernel decays to zero as the distance between the input points increases.
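The role of nu can be checked on two special cases. The sketch below uses an arbitrary 1-D grid and a length scale of 1 as illustrative assumptions. It compares nu = 0.5 with the exponential kernel exp(-r / l) and nu = infinity with the RBF kernel, then prints the kernel value at one fixed distance for several values of nu.

```python
import numpy as np
from scipy.spatial.distance import cdist
from sklearn.gaussian_process.kernels import Matern, RBF

X = np.linspace(0, 3, 7).reshape(-1, 1)
length_scale = 1.0
r = cdist(X, X)  # Euclidean distances between all pairs of points

# nu = 0.5 gives the exponential kernel exp(-r / l), the roughest case
k_rough = Matern(length_scale=length_scale, nu=0.5)(X)
print(np.allclose(k_rough, np.exp(-r / length_scale)))

# nu = inf recovers the squared exponential (RBF) kernel
k_smooth = Matern(length_scale=length_scale, nu=np.inf)(X)
print(np.allclose(k_smooth, RBF(length_scale=length_scale)(X)))

# Kernel value between the first two grid points for several values of nu
for nu in (0.5, 1.5, 2.5, np.inf):
    value = Matern(length_scale=length_scale, nu=nu)(X[:1], X[1:2])[0, 0]
    print(nu, value)
```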

In scikit-learn, the Matern kernel can be used with the GaussianProcessRegressor class by using the Matern kernel class, as shown in the following example:

```python
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern

# Matern() defaults to length_scale=1.0 and nu=1.5
kernel = Matern()
gp = GaussianProcessRegressor(kernel=kernel)
gp.fit(X_train, y_train)

# Use the predicted mean as the score for ROC AUC
y_pred = gp.predict(X_test)
ras(y_test, y_pred)
```

Output:

0.7226480836236934

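Note that the parameters of the Matern kernel (nu and l) may need to be tuned to achieve the best performance on your specific data and prediction task. In scikit-learn, nu is kept fixed at the value you pass in and is not optimized during fitting, so it has to be chosen by hand or by comparing models with different values.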

Periodic Kernel or ExpSineSquared

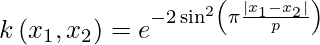

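The periodic kernel, called ExpSineSquared in scikit-learn, is useful for modeling periodic functions. It is defined as follows:

$$k(x_1, x_2) = \exp\left(-\frac{2 \sin^2\left(\pi\, d(x_1, x_2) / p\right)}{l^2}\right)$$

where x1 and x2 are input points, d(x1, x2) is the Euclidean distance between them, p is the period of the kernel, and l is its length scale.

The parameter p controls the period of the kernel, which determines the length of the cycle of the periodic function that the kernel can model. The length scale l controls how smoothly the function varies within one cycle.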
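The effect of the period can be checked by evaluating the kernel at points that are a full period and half a period apart. The sketch below assumes a period of 2 and a length scale of 1, both arbitrary values for illustration.

```python
import numpy as np
from sklearn.gaussian_process.kernels import ExpSineSquared

p = 2.0
periodic = ExpSineSquared(length_scale=1.0, periodicity=p)

x0 = np.array([[0.3]])
x_one_period = x0 + p        # one full period away
x_half_period = x0 + p / 2   # half a period away

k_full = periodic(x0, x_one_period)[0, 0]
k_half = periodic(x0, x_half_period)[0, 0]
print(k_full, k_half)
```

Points one full period apart are perfectly correlated, so `k_full` is 1 up to floating-point error, while points half a period apart are the least correlated, with `k_half` equal to exp(-2), about 0.135, for this length scale.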

In scikit-learn, the periodic kernel can be used with the GaussianProcessRegressor class by using the ExpSineSquared kernel class, as shown in the following example:

```python
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import ExpSineSquared

# ExpSineSquared() defaults to length_scale=1.0 and periodicity=1.0
kernel = ExpSineSquared()
gp = GaussianProcessRegressor(kernel=kernel)
gp.fit(X_train, y_train)

# Use the predicted mean as the score for ROC AUC
y_pred = gp.predict(X_test)
ras(y_test, y_pred)
```

Output:

0.4975609756097561

Note that the period of the periodic kernel (p) may need to be tuned to achieve the best performance on your specific data and prediction task.

In summary, the kernel defines the prior over functions in Gaussian process regression, and through it the shape of the posterior after data is observed. Selecting a kernel that matches your data and prediction task, whether smooth, rough, or periodic, can help improve the accuracy of your model.
