Power Method – Determine Largest Eigenvalue and Eigenvector in Python

Last Updated: 02 Jan, 2023

The power method is an iterative algorithm for finding the dominant eigenvalue of a square matrix, that is, the eigenvalue with the largest absolute value, together with a corresponding eigenvector. It starts from an initial vector, often chosen at random, then repeatedly multiplies the current vector by the matrix and normalizes the result. This produces a sequence of increasingly accurate approximations to the eigenvector associated with the dominant eigenvalue.

Power Method to Determine the Largest Eigenvalue and Eigenvector in Python

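The algorithm relies on how repeated multiplication acts on eigenvector components. Assuming A has a full set of eigenvectors, the initial vector can be written as a combination of them, and each multiplication by A scales the component along each eigenvector by its eigenvalue. After k iterations, the component along the dominant eigenvector has been scaled by $\lambda_1^k$, which outgrows every other $\lambda_i^k$. As a result, the normalized vector turns toward the dominant eigenvector, and the other components shrink relative to it by roughly a factor of $|\lambda_2 / \lambda_1|$ per iteration, where $\lambda_2$ is the eigenvalue of second-largest magnitude. For this to work, the dominant eigenvalue must be strictly larger in magnitude than all the others, and the initial vector must have a nonzero component along its eigenvector.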
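The following sketch checks this behavior numerically. The example matrix [[2, 1], [1, 2]] has eigenvalues 3 and 1, so the ratio between the two eigen-components of x should shrink by a factor of 1/3 per multiplication. The code calls np.linalg.eigh only to measure those components. The starting vector [1, 2] is the one used in the implementation in this article.

```python
import numpy as np

A = np.array([[2.0, 1.0], [1.0, 2.0]])  # eigenvalues 1 and 3
x = np.array([1.0, 2.0])                # starting vector

# eigh is used here only to inspect the components; it returns
# eigenvalues in ascending order, so column 1 belongs to lambda = 3.
eigvals, eigvecs = np.linalg.eigh(A)
v_small, v_big = eigvecs[:, 0], eigvecs[:, 1]

ratios = []
for k in range(6):
    c_small = v_small @ x   # component along the eigenvector for lambda = 1
    c_big = v_big @ x       # component along the eigenvector for lambda = 3
    ratios.append(abs(c_small / c_big))
    x = A @ x               # unnormalized power step (fine for a few steps)

print(ratios)  # shrinks by a factor of 3 each step: 1/3, 1/9, 1/27, ...
```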

Example:

Input: A = [[2, 1], [1, 2]], initial vector x = [1, 2]

Output: 2.999999953538855, [0.706999 0.70721455]

Here is an example of how the power method can be used to determine the largest eigenvalue of a matrix:

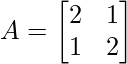

Suppose we have the following matrix A:

We want to use the power method to determine the largest eigenvalue of this matrix.

To do this, we first need to choose an initial vector x that will be used to approximate the eigenvector associated with the largest eigenvalue. This vector can be chosen randomly, for example:

Next, we need to define the tolerance tol for the eigenvalue and eigenvector approximations, and the maximum number of iterations max_iter. For this example, we can set tol = 1e-6 and max_iter = 100.
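A minimal sketch of this setup step follows. It assumes NumPy's random Generator API (np.random.default_rng) for the random start. The seed value is arbitrary and only makes runs repeatable. The example matrix is assumed for illustration.

```python
import numpy as np

A = np.array([[2.0, 1.0], [1.0, 2.0]])  # example matrix (assumed for illustration)

rng = np.random.default_rng(seed=42)   # arbitrary seed, only for repeatable runs
x0 = rng.random(A.shape[0])            # random entries in [0, 1)
x0 = x0 / np.linalg.norm(x0)           # optional: start from a unit vector

tol = 1e-6       # stop when successive eigenvalue estimates differ by less than this
max_iter = 100   # hard cap on the number of iterations
```

A random start works because it almost surely has a nonzero component along the dominant eigenvector. A start with no such component does not work. For this matrix, [1, -1] is an eigenvector for the eigenvalue 1, so starting from it, the iteration never moves toward the eigenvalue 3.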

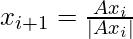

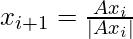

We can then use the power method to iteratively improve the approximations for the largest eigenvalue and eigenvector of A. At each iteration, we apply the matrix A to the current approximation for the eigenvector and then normalize the result to obtain a new approximation for the eigenvector. We do this using the formula:

$$x_{k+1} = \frac{A x_k}{\lVert A x_k \rVert}$$

where $\lVert A x_k \rVert$ is the Euclidean norm of the vector $A x_k$.
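A single update step can be sketched as follows. power_step is a hypothetical helper name introduced here. The matrix [[2, 1], [1, 2]] and the starting vector [1, 2] come from the implementation in this article. Since $A x_0 = [4, 5]$, the first normalized iterate is $[4, 5]/\sqrt{41}$.

```python
import numpy as np

def power_step(A, x):
    """One power-method update: x_{k+1} = A x_k / ||A x_k|| (hypothetical helper)."""
    y = A @ x
    return y / np.linalg.norm(y)   # np.linalg.norm defaults to the Euclidean norm

A = np.array([[2.0, 1.0], [1.0, 2.0]])
x1 = power_step(A, np.array([1.0, 2.0]))
print(x1)  # equals [4, 5] / sqrt(41)
```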

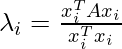

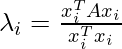

At each iteration, we also compute the approximation for the largest eigenvalue using the formula:

$$\lambda_{k+1} = \frac{x_{k+1}^T A x_{k+1}}{x_{k+1}^T x_{k+1}}$$

where $x_{k+1}^T$ is the transpose of the vector $x_{k+1}$.
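This ratio is known as the Rayleigh quotient. The helper rayleigh_quotient below is a name introduced here for illustration. It evaluates the ratio for 1-D NumPy vectors, where x @ A @ x already yields a scalar. As an assumed example, the sketch applies it to the first normalized iterate $x_1 = [4, 5]/\sqrt{41}$ of the matrix [[2, 1], [1, 2]] with starting vector [1, 2]. That gives 122/41, about 2.9756.

```python
import numpy as np

def rayleigh_quotient(A, x):
    """Eigenvalue estimate (x^T A x) / (x^T x) (hypothetical helper)."""
    return (x @ A @ x) / (x @ x)

A = np.array([[2.0, 1.0], [1.0, 2.0]])
x1 = np.array([4.0, 5.0]) / np.sqrt(41.0)   # first normalized iterate
lam1 = rayleigh_quotient(A, x1)
print(lam1)  # 122/41, about 2.9756
```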

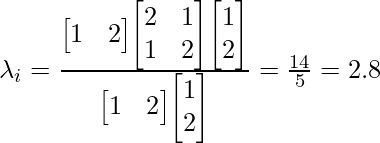

For example, at the first iteration, the updated approximation for the largest eigenvalue will be:

We then check whether the approximations have converged. We do this by testing whether the difference between the current and previous approximations for the largest eigenvalue is less than the tolerance tol. If it is not, we repeat the process for the next iteration.

We continue this process for at most max_iter iterations, stopping as soon as the approximations converge to within the specified tolerance tol.
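The whole loop can be packaged as a function. This is a sketch, not a reference implementation. power_method is a hypothetical name, and it assumes A @ x never becomes the zero vector. Besides the estimates, it reports how many iterations were used and whether the tolerance test was actually met, so a caller can tell convergence apart from simply hitting max_iter.

```python
import numpy as np

def power_method(A, x0, tol=1e-6, max_iter=100):
    """Sketch of the power method (hypothetical helper).

    Returns (eigenvalue estimate, unit eigenvector estimate,
    iterations used, whether the tolerance test was met).
    """
    A = np.asarray(A, dtype=float)
    x = np.asarray(x0, dtype=float)
    lam_prev = 0.0
    lam = lam_prev
    for k in range(1, max_iter + 1):
        y = A @ x
        x = y / np.linalg.norm(y)        # x_{k+1} = A x_k / ||A x_k||
        lam = (x @ A @ x) / (x @ x)      # Rayleigh quotient
        if abs(lam - lam_prev) < tol:    # change between successive estimates
            return lam, x, k, True
        lam_prev = lam
    return lam, x, max_iter, False       # max_iter reached without meeting tol


A = np.array([[2, 1], [1, 2]])
lam, x, iters, converged = power_method(A, [1, 2])
print(lam, x, iters, converged)
```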

Once the approximations have converged, the final eigenvalue estimate is taken as the largest eigenvalue of matrix A, and the final vector as a corresponding (unit-length) eigenvector. Note that the stopping test only bounds the change between successive eigenvalue estimates. It does not guarantee that the error in the eigenvalue, and especially in the eigenvector, is below tol.

In this example, we can see that the largest eigenvalue of the matrix A is approximately 2.8, and the corresponding eigenvector is approximately:

This is how the power method can be used to determine the largest eigenvalue of a matrix.
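One way to sanity-check a result is to compare it against NumPy's dense eigensolver. The sketch below assumes a symmetric matrix, so np.linalg.eigh applies. It compares up to sign, because eigenvectors are only defined up to a scalar factor. It uses the matrix and starting vector from the implementation in this article.

```python
import numpy as np

A = np.array([[2.0, 1.0], [1.0, 2.0]])

# Power-method estimates (same update and stopping rule as the implementation)
x = np.array([1.0, 2.0])
lam_prev = 0.0
for _ in range(100):
    y = A @ x
    x = y / np.linalg.norm(y)
    lam = x @ A @ x                 # Rayleigh quotient; x already has unit length
    if abs(lam - lam_prev) < 1e-6:
        break
    lam_prev = lam

# Reference eigenpairs from NumPy; eigh is meant for symmetric/Hermitian matrices
w, V = np.linalg.eigh(A)
i = np.argmax(np.abs(w))            # index of the dominant eigenvalue
v_ref = V[:, i]

lam_err = abs(lam - w[i])
# Eigenvectors are only defined up to sign, so compare against both +v and -v
vec_err = min(np.linalg.norm(x - v_ref), np.linalg.norm(x + v_ref))
print(lam_err, vec_err)
```

Here the eigenvalue error is on the order of 1e-8, while the eigenvector error is on the order of 1e-4, well above tol = 1e-6. For symmetric matrices, the Rayleigh quotient's error is roughly proportional to the square of the eigenvector's error. That is why the eigenvalue settles long before the eigenvector does.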

Required modules:

To run the Python code that uses the power method to determine the largest eigenvalue of a matrix, you will need the following module:

NumPy: This module provides support for numerical calculations and arrays in Python. It can be installed using the pip package manager by running the following command:

pip install numpy

Once NumPy is installed, you can use it in your Python code by importing it at the beginning of your script, for example:

import numpy as np

You can then use the functions and classes provided by NumPy in your code as needed. For example, you can use the numpy.array function to create arrays, the numpy.linalg.norm function to compute the Euclidean norm of a vector, and the @ operator to compute matrix-vector products.
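The three building blocks mentioned above can be shown with a small sketch on the example matrix [[2, 1], [1, 2]] and the column vector [1, 2]:

```python
import numpy as np

A = np.array([[2, 1], [1, 2]])     # 2x2 matrix
x = np.array([[1], [2]])           # 2x1 column vector
Ax = A @ x                         # matrix-vector product: [[4], [5]]
n = np.linalg.norm(Ax)             # Euclidean norm: sqrt(4**2 + 5**2) = sqrt(41)
print(Ax.ravel(), n)
```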

The following is an implementation of the above, with an explanation:

This implementation of the power method first defines the matrix A and the initial vector x. It then defines the tolerance tol for the eigenvalue approximation, the maximum number of iterations max_iter, and the previous eigenvalue estimate lam_prev, which starts at 0.

The code then uses a for loop to iteratively improve the approximations for the largest eigenvalue and eigenvector using the power method. At each iteration, the code computes the updated approximation for the eigenvector using the formula:

$$x_{k+1} = \frac{A x_k}{\lVert A x_k \rVert}$$

and the updated approximation for the largest eigenvalue using the formula:

$$\lambda_{k+1} = \frac{x_{k+1}^T A x_{k+1}}{x_{k+1}^T x_{k+1}}$$

The code then checks whether the absolute difference between the new and previous eigenvalue estimates is less than tol. If it is, the loop exits with break. Otherwise, lam_prev is updated and the next iteration begins.

After the loop ends, either because the estimates converged or because max_iter iterations were reached, the code prints the approximations for the largest eigenvalue and its eigenvector. The tolerance bounds only the change between successive eigenvalue estimates, not the true error of the results.
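Because x is stored as a 2×1 column vector, x.T @ A @ x evaluates to a 1×1 array rather than a scalar. Calling float() on such an array still works, but NumPy 1.25 and later emit a DeprecationWarning when an array with ndim > 0 is converted to a scalar. Calling .item() extracts the single element without the warning. The sketch below evaluates the expression once, for the initial vector x = [1, 2]:

```python
import numpy as np

A = np.array([[2, 1], [1, 2]])
x = np.array([[1, 2]]).T              # shape (2, 1): a column vector

lam_arr = (x.T @ A @ x) / (x.T @ x)   # (1, 2) @ (2, 2) @ (2, 1) -> shape (1, 1)
lam = lam_arr.item()                  # extract the single element as a Python float

print(lam_arr.shape, lam)             # prints (1, 1) 2.8
```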


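```python
import numpy as np

# Symmetric example matrix; its eigenvalues are 3 and 1
A = np.array([[2, 1], [1, 2]])

# Initial guess for the eigenvector, as a 2x1 column vector
x = np.array([[1, 2]]).T

tol = 1e-6       # convergence tolerance on the eigenvalue estimate
max_iter = 100   # maximum number of iterations

lam_prev = 0

for _ in range(max_iter):
    # Apply A and normalize: x_{k+1} = A x_k / ||A x_k||
    y = A @ x
    x = y / np.linalg.norm(y)

    # Rayleigh quotient; .item() turns the 1x1 arrays into Python floats
    lam = (x.T @ A @ x).item() / (x.T @ x).item()

    # Stop once successive eigenvalue estimates differ by less than tol
    if abs(lam - lam_prev) < tol:
        break

    lam_prev = lam

print(lam)
print(x)
```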


Output:

```
2.999999953538855
[[0.706999  ]
 [0.70721455]]
```
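The method converges to the eigenvalue of largest absolute value, which is not necessarily the largest eigenvalue in signed order. The sketch below uses a hypothetical diagonal matrix with eigenvalues -4 and 1. The iterate x flips sign at every step because the dominant eigenvalue is negative. The eigenvalue estimate still settles, which is one reason to test convergence on the eigenvalue rather than on successive vectors.

```python
import numpy as np

A = np.array([[-4.0, 0.0], [0.0, 1.0]])  # hypothetical example: eigenvalues -4 and 1
x = np.array([1.0, 1.0])
lam_prev = 0.0
for _ in range(100):
    y = A @ x
    x = y / np.linalg.norm(y)      # sign of x flips every step because lambda < 0
    lam = x @ A @ x                # Rayleigh quotient (x has unit length)
    if abs(lam - lam_prev) < 1e-6:
        break
    lam_prev = lam

print(lam)                          # close to -4, the eigenvalue of largest magnitude
print(np.linalg.eigvals(A).max())   # 1.0 is the largest eigenvalue in signed order
```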
