Pagination using Scrapy – Web Scraping with Python

Last Updated: 14 Dec, 2023

Web scraping is a technique for fetching information from websites, and Scrapy is a Python framework for web scraping. Getting data from a simple, single-page website is fairly easy: pull the page's HTML and extract the data by filtering tags. But what if the data you are trying to fetch is paginated, i.e. split across several pages? For example, Amazon's product search results can span multiple pages, so to scrape all products successfully you need to handle pagination.

What is Pagination?

Pagination, also known as paging, is the process of dividing content into discrete pages, so that a bundle of data is spread across several pages, each with its own URL. To scrape all of it, we need to take these URLs one by one and scrape each page. The important thing to keep in mind is when to stop. Generally, paginated pages have a "Next" button that links to the URL of the following page; this button is enabled while more pages exist and becomes disabled once the last page is reached. So the approach is to keep following the "Next" link while it is available, and to stop when it is disabled, because then no page is left to scrape.
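The stopping rule described above can be sketched in plain Python. This is a minimal offline illustration, assuming a hypothetical in-memory PAGES dictionary in place of real web pages, where None stands for a disabled "Next" button. The seen set and the max_pages limit are extra safeguards of this sketch (against link cycles), not part of the article's spider.

```python
from typing import Optional

# Hypothetical in-memory site: page URL -> (items on that page, URL behind "Next").
# None plays the role of a disabled "Next" button on the last page.
PAGES = {
    "/page1": (["Phone A", "Phone B"], "/page2"),
    "/page2": (["Phone C"], "/page3"),
    "/page3": (["Phone D"], None),
}


def scrape_all(start_url: str, max_pages: int = 100) -> list[str]:
    """Follow "Next" links until none is left (or the safety limit is hit)."""
    items: list[str] = []
    url: Optional[str] = start_url
    seen: set[str] = set()
    while url is not None and url not in seen and len(seen) < max_pages:
        seen.add(url)
        page_items, next_url = PAGES[url]
        items.extend(page_items)
        url = next_url  # None when "Next" is disabled, which ends the loop
    return items


print(scrape_all("/page1"))
```

Scrapy itself also drops duplicate requests by default, which serves a similar purpose in real crawls.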

Web scraping pagination with Scrapy in Python

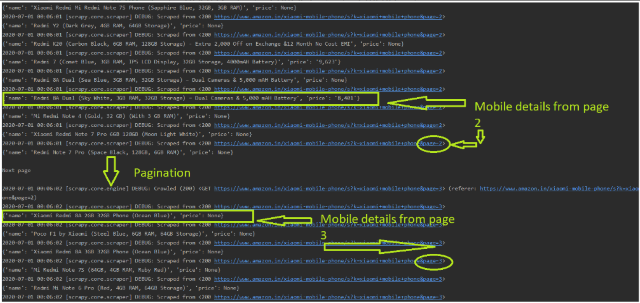

In the following project, we scrape mobile phone details from the Amazon site and apply pagination. The scraped details are the name and price of each mobile, and pagination is used to scrape all the results of the search.

Logic behind Pagination

Here, the next_page variable gets the URL of the next page only if a next page is available. If no page is left, the XPath matches nothing, .get() returns None, and the if condition evaluates to false, so no further request is made.

Python3

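# Inside the spider's parse() method
next_page = response.xpath("//div/div/ul/li[@class='a-last']/a/@href").get()

if next_page:
    # next_page is a relative path, so build the absolute URL first
    abs_url = f"https://www.amazon.in{next_page}"
    yield scrapy.Request(
        url=abs_url,
        callback=self.parse
    )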


Note:

abs_url = f"https://www.amazon.in{next_page}"

The prefix https://www.amazon.in is needed because next_page holds only a relative path such as /page2, which is incomplete on its own; the complete URL is https://www.amazon.in/page2.
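Building the URL with an f-string works as long as next_page is always root-relative, as it is here. A more general option, sketched below with the standard library's urllib.parse.urljoin, resolves the href against the URL of the current page, so it also copes with hrefs that are already absolute. The page URL below is a hypothetical example, and the two helper functions are illustrative names. Inside a Scrapy callback, response.urljoin(next_page) performs the same resolution, and response.follow(next_page, callback=self.parse) accepts relative URLs directly.

```python
from urllib.parse import urljoin

PAGE_URL = "https://www.amazon.in/s?k=mobiles"  # hypothetical current page URL


def with_fstring(next_page: str) -> str:
    # The article's approach: prepend the domain to the href
    return f"https://www.amazon.in{next_page}"


def with_urljoin(page_url: str, next_page: str) -> str:
    # Resolve the href relative to the page it was found on
    return urljoin(page_url, next_page)


# Root-relative href: both approaches agree
print(with_fstring("/page2"))
print(with_urljoin(PAGE_URL, "/page2"))

# Already-absolute href: the f-string produces a broken URL, urljoin keeps it intact
print(with_fstring("https://www.amazon.in/page3"))
print(with_urljoin(PAGE_URL, "https://www.amazon.in/page3"))
```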

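Fetch the XPaths of the details to be scraped

Find the XPath of each element to be scraped, for example by inspecting it in the browser's developer tools. The XPaths used in this project are:

XPath of items: //div[@class='s-include-content-margin s-border-bottom s-latency-cf-section']

XPath of name: .//span[@class='a-size-medium a-color-base a-text-normal']/text()

XPath of price: .//span[@class='a-price-whole']/text()

XPath of next page: //div/div/ul/li[@class='a-last']/a/@href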
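To see how these XPaths work together, here is an offline sketch that applies them to a small, hypothetical HTML snippet reusing the same class names (real Amazon markup is far larger and changes over time). Scrapy's response.xpath() supports full XPath 1.0; this standard-library sketch swaps in xml.etree.ElementTree, which understands only a limited path subset and requires well-formed markup. So the paths must start with ".", and the text and href are read with .text and .get("href") instead of /text() and /@href. Note that the name and price paths are relative to each product element, just like product.xpath(".//...") in the spider.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Hypothetical, heavily simplified markup reusing the class names from the XPaths above
SAMPLE_PAGE = """
<html><body>
  <div class="s-include-content-margin s-border-bottom s-latency-cf-section">
    <span class="a-size-medium a-color-base a-text-normal">Phone A</span>
    <span class="a-price-whole">9,999</span>
  </div>
  <div class="s-include-content-margin s-border-bottom s-latency-cf-section">
    <span class="a-size-medium a-color-base a-text-normal">Phone B</span>
    <span class="a-price-whole">12,499</span>
  </div>
  <div><div><ul><li class="a-last"><a href="/page2">Next</a></li></ul></div></div>
</body></html>
"""

# ElementTree paths must be relative to an element, hence the leading "."
ITEMS = ".//div[@class='s-include-content-margin s-border-bottom s-latency-cf-section']"
NAME = ".//span[@class='a-size-medium a-color-base a-text-normal']"
PRICE = ".//span[@class='a-price-whole']"
NEXT = ".//div/div/ul/li[@class='a-last']/a"


def text_of(element: Optional[ET.Element]) -> Optional[str]:
    # Like .get() in Scrapy: None when nothing matched
    return element.text if element is not None else None


def parse_page(html: str) -> tuple[list[dict], Optional[str]]:
    root = ET.fromstring(html.strip())
    # NAME and PRICE are searched inside each product element only
    items = [
        {"name": text_of(p.find(NAME)), "price": text_of(p.find(PRICE))}
        for p in root.findall(ITEMS)
    ]
    next_link = root.find(NEXT)
    next_page = next_link.get("href") if next_link is not None else None
    return items, next_page


print(parse_page(SAMPLE_PAGE))
```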

Spider Code

In this example, the code below defines a Scrapy spider that extracts product names and prices from Amazon's mobile phone search results. The `start_requests` method sends a request to the Amazon search URL, and the `parse` method extracts the product details from the HTML response. If a next page of search results is available, `parse` also requests it with itself as the callback, so scraping continues page by page until no next page is left.

Python3

import scrapy


class MobilesSpider(scrapy.Spider):
    name = 'mobiles'

    def start_requests(self):
        # Amazon search-results URL for the mobiles query; its beginning is elided ('…')
        yield scrapy.Request(
            url='…' + '=2AT2IRC7IKO1K&sprefix=xiome%2Caps%2C302&ref=nb_sb_ss_i_1_5',
            callback=self.parse
        )

    def parse(self, response):
        # Each search result is a div with these classes
        products = response.xpath("//div[@class='s-include-content-margin s-border-bottom s-latency-cf-section']")

        for product in products:
            # Relative XPaths (starting with '.') search only inside the current product
            yield {
                'name': product.xpath(".//span[@class='a-size-medium a-color-base a-text-normal']/text()").get(),
                'price': product.xpath(".//span[@class='a-price-whole']/text()").get()
            }

        print("\nNext page\n")

        # href of the "Next" button; None when there is no next page
        next_page = response.xpath("//div/div/ul/li[@class='a-last']/a/@href").get()

        if next_page:
            # next_page is a relative path such as /page2, so prepend the domain
            abs_url = f"https://www.amazon.in{next_page}"
            yield scrapy.Request(
                url=abs_url,
                callback=self.parse
            )
        else:
            print("\nNo Page Left\n")

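The spider never loops explicitly: parse yields items and, when a next page exists, a new Request whose callback is parse itself; Scrapy's engine schedules that request and calls parse again on the new response. The sketch below imitates that mechanism offline. FakeRequest, SITE, parse and run are hypothetical stand-ins (not Scrapy APIs), the example.com URLs are made up, and Scrapy's real engine is asynchronous rather than a simple queue.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Iterator, Union
from urllib.parse import urljoin

# Hypothetical site: page URL -> (product names on the page, href of "Next" or None)
SITE = {
    "https://example.com/s?page=1": (["Phone A", "Phone B"], "/s?page=2"),
    "https://example.com/s?page=2": (["Phone C"], "/s?page=3"),
    "https://example.com/s?page=3": (["Phone D"], None),
}


@dataclass
class FakeRequest:
    """Stand-in for scrapy.Request: a URL plus the callback that will parse it."""
    url: str
    callback: Callable[[str], Iterator]


def parse(url: str) -> Iterator[Union[dict, FakeRequest]]:
    names, next_page = SITE[url]
    for name in names:
        yield {"name": name}  # an item, like the dicts the spider yields
    if next_page:
        # Same pattern as the spider: absolute URL, with parse as the callback again
        yield FakeRequest(url=urljoin(url, next_page), callback=parse)


def run(start_url: str) -> list[dict]:
    """Tiny stand-in for the engine: schedule requests and collect items."""
    queue = deque([FakeRequest(url=start_url, callback=parse)])
    items: list[dict] = []
    while queue:
        request = queue.popleft()
        for result in request.callback(request.url):
            if isinstance(result, FakeRequest):
                queue.append(result)  # another page to crawl
            else:
                items.append(result)  # a scraped item
    return items


print(run("https://example.com/s?page=1"))
```

The crawl ends on its own when the last page yields no request, which is exactly how the spider stops once the "Next" button is disabled.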

Scraped Results

Output
