Music Recommendation System Using Machine Learning

Last Updated: 01 Nov, 2022

Have you ever watched a funny video on YouTube and then, the next time you opened the app, found more funny videos recommended in your feed? Ever wondered how that works? This is an application of Machine Learning: recommender systems are built to provide a personalized experience and increase customer engagement.

In this article, we will build a very basic recommender system that recommends songs based on the songs you listen to.

Importing Libraries & Dataset

Python libraries make it very easy for us to handle the data and perform typical and complex tasks with a single line of code.

- Pandas – This library helps to load the data frame in a 2D array format and has multiple functions to perform analysis tasks in one go.

- Numpy – Numpy arrays are very fast and can perform large computations in a very short time.

- Matplotlib/Seaborn – These libraries are used to draw visualizations.

- Sklearn – This library contains multiple modules with pre-implemented functions for tasks ranging from data preprocessing to model development and evaluation.

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sb

from sklearn.metrics.pairwise import cosine_similarity
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.manifold import TSNE

import warnings
warnings.filterwarnings('ignore')
```

The dataset we are going to use contains data about songs released over a span of around 100 years. Along with general information about each song, it also provides some scientific measures of sound, such as loudness, acoustics, and speechiness.

```python
tracks = pd.read_csv('tracks_records.csv')
tracks.head()
```

Output:

First five rows of the dataset

Data Cleaning

Data cleaning is one of the most important steps. Raw data contains a lot of noise that must be removed, otherwise the observations made from it will be inaccurate, and any model built on it will perform poorly as well. Typical data cleaning steps include outlier removal, null value imputation, and fixing skewness in the data.

Output:

(586672, 19)

Output:

Basic information about the columns of the dataset

Now, let’s check if there are null values in the columns of our data frame.

Output:

Number of null values in each column

The genre is a very important indicator of the type of music, which is why we will remove the rows where it is null. We could have imputed these values instead, but the dataset is huge (around 600,000 rows), so removing about 50,000 of them won’t have much effect (though this depends on the use case).
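The outputs shown above come from inspecting the shape, the column information, and the per-column null counts of the data frame. The article does not show the exact calls. A minimal sketch of such checks, using standard pandas methods on a small hypothetical data frame that stands in for `tracks`, might look like this:

```python
import numpy as np
import pandas as pd

# hypothetical stand-in for the real `tracks` data frame
df = pd.DataFrame({
    'name': ['Song A', 'Song B', 'Song C', 'Song D'],
    'genres': ['pop', None, 'rock', None],
    'popularity': [80, 65, 40, 72],
    'loudness': [-5.2, -7.1, np.nan, -6.3],
})

print(df.shape)                  # (number of rows, number of columns)
df.info()                        # column names, dtypes and non-null counts

null_counts = df.isnull().sum()  # number of null values in each column
print(null_counts)

# drop every row that contains at least one null value
cleaned = df.dropna()
print(cleaned.shape)
```

On the real data, calling `.plot.bar()` on the null counts gives a bar chart like the one shown above.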

```python
tracks.dropna(inplace=True)

# confirm that no null values remain
tracks.isnull().sum().plot.bar()
plt.show()
```

Output:

After removing rows containing null values

Now let’s remove some columns which we won’t be using to build our recommender system.

```python
tracks = tracks.drop(['id', 'id_artists'], axis=1)
```

Exploratory Data Analysis

EDA is an approach to analyzing data using visual techniques. It is used to discover trends and patterns, or to check assumptions, with the help of statistical summaries and graphical representations.

Our dataset contains around 14 numerical columns, and data with that many dimensions cannot be visualized directly. This is where t-SNE comes to the rescue. t-SNE is an algorithm that maps high-dimensional data to a low-dimensional space (here, two dimensions) using a non-linear method whose details are beyond the scope of this article.

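```python
# t-SNE needs a purely numeric matrix, so keep only the numeric columns
# (the variable `a` was undefined in the original snippet; this definition is an assumption)
a = tracks.select_dtypes(include=np.number)

model = TSNE(n_components=2, random_state=0)
# embed only the first 500 rows to keep the computation time low
tsne_data = model.fit_transform(a.head(500))

plt.figure(figsize=(7, 7))
plt.scatter(tsne_data[:, 0], tsne_data[:, 1])
plt.show()
```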

Output:

Scatter plot of the output of t-SNE

Here we can observe some clusters.

Formation of clusters in 2-D space

Multiple versions of the same song are often released, so we need to remove these duplicates. Our content-based recommender system relies on the cosine similarity function, so if several versions of a song were present, it would recommend the other versions of the input song, which is not what we want.

```python
tracks['name'].nunique(), tracks.shape
```

Output:

(408902, (536847, 17))

So our concern was valid. Let’s remove the duplicate rows based on the song names, keeping only the most popular version of each song.

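```python
# sort so that the most popular version of each song comes first ...
tracks = tracks.sort_values(by=['popularity'], ascending=False)
# ... and keep only that first occurrence of every song name
tracks.drop_duplicates(subset=['name'], keep='first', inplace=True)
```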
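To see why sorting by popularity before calling `drop_duplicates` keeps the most popular version of each song, here is the same two-step operation on a tiny hypothetical data frame:

```python
import pandas as pd

# hypothetical tracks: two versions of 'Song A' with different popularity
songs = pd.DataFrame({
    'name': ['Song A', 'Song B', 'Song A'],
    'artists': ['Artist 1', 'Artist 2', 'Artist 1 (Live)'],
    'popularity': [35, 50, 90],
})

# most popular rows first, so keep='first' retains the most popular version
songs = songs.sort_values(by=['popularity'], ascending=False)
songs = songs.drop_duplicates(subset=['name'], keep='first')
print(songs)
```

Only the live version of "Song A" (popularity 90) and "Song B" survive. Without the sort, `keep='first'` would simply keep whichever version happened to appear first in the file.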

Let’s visualize the number of songs released each year.

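```python
plt.figure(figsize=(10, 5))
# one bar per release year; the column is passed as x so seaborn counts its values
sb.countplot(x=tracks['release_year'])
# hide the axes
plt.axis('off')
plt.show()
```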

Output:

Countplot of the number of songs in subsequent years

Here we can see a boom in the music industry from the year 1900 to somewhere around 1990.
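Because the axes are hidden in the plot above, the exact number of songs per year cannot be read from it. A sketch of getting those counts as numbers with `value_counts`, using a hypothetical series in place of `tracks['release_year']`:

```python
import pandas as pd

# hypothetical release years standing in for tracks['release_year']
years = pd.Series([1990, 1985, 1990, 2001, 1985, 1990], name='release_year')

# number of songs per year, ordered chronologically
per_year = years.value_counts().sort_index()
print(per_year)

# the year with the most releases
print(per_year.idxmax())
```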

```python
# collect the names of all columns that hold floating-point values
floats = []
for col in tracks.columns:
    if tracks[col].dtype == 'float':
        floats.append(col)

len(floats)
```

Output:

10
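The list of float columns can also be built in a single call with pandas’ `select_dtypes`. Note that `include='float'` matches every floating-point dtype, while the loop above only matches `float64`. For float64 data the two give the same result. A small sketch on a hypothetical frame:

```python
import pandas as pd

df = pd.DataFrame({
    'name': ['Song A', 'Song B'],    # object column
    'popularity': [80, 65],          # integer column
    'danceability': [0.71, 0.43],    # float column
    'loudness': [-5.2, -7.1],        # float column
})

# names of all floating-point columns, in their original order
floats = df.select_dtypes(include='float').columns.tolist()
print(floats, len(floats))
```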

There are 10 columns with float values in total. Let’s draw their distribution plots to get insights into how the data is distributed.

```python
plt.figure(figsize=(15, 5))
for i, col in enumerate(floats):
    plt.subplot(2, 5, i + 1)
    # histogram with a KDE curve (sb.distplot is deprecated in recent seaborn versions)
    sb.histplot(tracks[col], kde=True)
plt.tight_layout()
plt.show()
```

Output:

Distribution plot of the continuous features

Some of the features are roughly normally distributed, while others are skewed.
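Skewness can also be measured rather than judged by eye. A sketch using pandas’ `skew()`, which returns the sample skewness of each column, on hypothetical data. The cut-off of 1 used here is a common rule of thumb, not a value the article specifies:

```python
import pandas as pd

df = pd.DataFrame({
    'symmetric': [1, 2, 3, 4, 5],      # evenly spread values
    'right_skewed': [1, 1, 1, 2, 10],  # long tail to the right
})

skewness = df.skew()
print(skewness)

# columns whose absolute skewness exceeds the assumed threshold of 1
skewed_cols = skewness[skewness.abs() > 1].index.tolist()
print(skewed_cols)
```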

```python
%%capture
# learn the vocabulary of genre words; each song's genres become a vector of word counts
song_vectorizer = CountVectorizer()
song_vectorizer.fit(tracks['genres'])
```
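`CountVectorizer` turns each song’s genre string into a vector of word counts over the vocabulary learned by `fit`. By default it lowercases the text and keeps tokens of two or more word characters (its default `token_pattern` is `(?u)\b\w\w+\b`). The pure-Python sketch below imitates that default behaviour to show what the vectors look like. It is not scikit-learn’s implementation, and the genre strings are hypothetical, so the real column’s format may differ:

```python
import re
from collections import Counter

# imitates CountVectorizer's defaults: lowercase, tokens of 2+ word characters
TOKEN_PATTERN = re.compile(r"(?u)\b\w\w+\b")

def tokenize(text):
    return TOKEN_PATTERN.findall(text.lower())

# hypothetical genre strings for three songs
genres = ['dance pop, pop', 'Rock, hard rock', 'pop rock']

# "fit": the vocabulary is the sorted set of all tokens
vocabulary = sorted({tok for g in genres for tok in tokenize(g)})

# "transform": one count vector per song, in vocabulary order
vectors = []
for g in genres:
    counts = Counter(tokenize(g))
    vectors.append([counts[word] for word in vocabulary])

print(vocabulary)
for g, v in zip(genres, vectors):
    print(g, '->', v)
```

One consequence of the default pattern is that single-character tokens are dropped. For example, the "r" and "b" in "r&b" do not become vocabulary words.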

As the dataset is very large, the computation cost and time would be too high, so we will demonstrate the recommender system using only the 10,000 most popular songs.

```python
tracks = tracks.sort_values(by=['popularity'], ascending=False).head(10000)
```

Below is a helper function to get similarities for the input song with each song in the dataset.

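```python
def get_similarities(song_name, data):
    # numeric feature columns; 'similarity_factor' is excluded because recommend_songs()
    # adds it to the data, and it must not leak into the features on later calls
    num_cols = data.select_dtypes(include=np.number).columns.drop('similarity_factor', errors='ignore')

    # genre vector and numeric feature vector of the input song
    text_array1 = song_vectorizer.transform(data[data['name'] == song_name]['genres']).toarray()
    num_array1 = data[data['name'] == song_name][num_cols].to_numpy()

    sim = []
    for idx, row in data.iterrows():
        name = row['name']

        # genre vector and numeric feature vector of the current song
        text_array2 = song_vectorizer.transform(data[data['name'] == name]['genres']).toarray()
        num_array2 = data[data['name'] == name][num_cols].to_numpy()

        # combined score: genre similarity + numeric-feature similarity
        text_sim = cosine_similarity(text_array1, text_array2)[0][0]
        num_sim = cosine_similarity(num_array1, num_array2)[0][0]
        sim.append(text_sim + num_sim)

    return sim
```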
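`get_similarities` transforms and compares one song at a time inside `iterrows()`, which is slow. Because cosine similarity works on whole matrices, the same combined score can be computed for all songs at once. The NumPy sketch below shows the idea on small hypothetical matrices (one row per song). It computes row-wise cosine similarity directly rather than calling scikit-learn, and, like scikit-learn, it returns 0 for all-zero vectors:

```python
import numpy as np

def cosine_to_all(query, matrix):
    """Cosine similarity between one vector and every row of a matrix."""
    query = np.asarray(query, dtype=float)
    matrix = np.asarray(matrix, dtype=float)
    norms = np.linalg.norm(matrix, axis=1) * np.linalg.norm(query)
    # avoid division by zero: all-zero vectors get a similarity of 0
    norms = np.where(norms == 0, 1.0, norms)
    return matrix @ query / norms

# hypothetical genre count vectors (rows = songs)
genre_vectors = np.array([[1, 0, 2, 0],
                          [0, 1, 0, 2],
                          [1, 0, 1, 0]])
# hypothetical numeric feature vectors for the same songs
numeric_vectors = np.array([[0.8, 0.6],
                            [0.1, 0.9],
                            [0.8, 0.6]])

query_idx = 0  # score every song against song 0
scores = (cosine_to_all(genre_vectors[query_idx], genre_vectors)
          + cosine_to_all(numeric_vectors[query_idx], numeric_vectors))
print(scores)
```

With the real data, `genre_vectors` could be `song_vectorizer.transform(data['genres']).toarray()` and `numeric_vectors` the numeric columns as a NumPy array. This wiring is an untested assumption. As with the loop-based version, the query song scores highest against itself.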

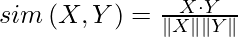

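To calculate the similarity between two songs’ vectors, we use cosine similarity. The score for a pair of songs is the cosine similarity of their genre vectors plus the cosine similarity of their numeric feature vectors.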
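For reference, the cosine similarity of two vectors and the combined score that `get_similarities` computes for songs $a$ and $b$ can be written as follows. Here $\mathbf{t}$ denotes a song’s genre count vector and $\mathbf{n}$ its vector of numeric features:

$$
\cos(\mathbf{x}, \mathbf{y}) = \frac{\mathbf{x} \cdot \mathbf{y}}{\lVert \mathbf{x} \rVert \, \lVert \mathbf{y} \rVert} = \frac{\sum_{i} x_i y_i}{\sqrt{\sum_{i} x_i^2} \, \sqrt{\sum_{i} y_i^2}}
$$

$$
s(a, b) = \cos(\mathbf{t}_a, \mathbf{t}_b) + \cos(\mathbf{n}_a, \mathbf{n}_b)
$$

Genre count vectors have no negative entries, so the genre term lies between 0 and 1. The numeric features can take negative values, so that term can in general range from -1 to 1.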

```python
def recommend_songs(song_name, data=tracks):
    # if the song is not in the (top-10,000) data, suggest a few random songs instead
    if data[data['name'] == song_name].shape[0] == 0:
        print('This song is either not so popular or you '
              'have entered an invalid name.\nSome songs you may like:\n')
        for song in data.sample(n=5)['name'].values:
            print(song)
        return

    # score every song against the input song, then rank by score and popularity
    data['similarity_factor'] = get_similarities(song_name, data)
    data.sort_values(by=['similarity_factor', 'popularity'],
                     ascending=[False, False],
                     inplace=True)

    # display() is available in Jupyter/IPython; row 0 is the input song itself
    display(data[['name', 'artists']][2:7])
```
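One design caveat: the numeric cosine similarity is computed on raw values. Columns with large magnitudes (for example, a duration measured in milliseconds, if the data has one) can therefore dominate the score and hide differences in 0-to-1 features such as danceability. A common remedy, which is not part of the article’s method, is to rescale each numeric column before comparing songs. A minimal min-max scaling sketch in pandas, on hypothetical columns:

```python
import numpy as np
import pandas as pd

def cosine(x, y):
    return float(np.dot(x, y) / (np.linalg.norm(x) * np.linalg.norm(y)))

# hypothetical numeric features for three songs
features = pd.DataFrame({
    'duration_ms': [200000, 201000, 240000],
    'danceability': [0.9, 0.1, 0.5],
    'energy': [0.8, 0.2, 0.6],
})

# raw values: duration dominates, so songs 0 and 1 look almost identical
raw_sim = cosine(features.iloc[0], features.iloc[1])

# min-max scaling maps every column to the range [0, 1]
scaled = (features - features.min()) / (features.max() - features.min())
scaled_sim = cosine(scaled.iloc[0], scaled.iloc[1])

print(round(raw_sim, 6), round(scaled_sim, 6))
```

Songs 0 and 1 have opposite danceability and energy. On raw values they still look almost identical, but after scaling their numeric similarity drops to 0. Whether scaling improves the recommendations on the real data has not been evaluated here.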

Now it’s time to see the recommender system at work. Let’s see which songs it recommends to someone who listens to the famous song ‘Shape of You’.

```python
recommend_songs('Shape of You')
```

Output:

Recommended songs if you hear ‘Shape of You’

Let’s try this on one more song.

```python
recommend_songs('Love Someone')
```

Output:

Recommended songs if you hear ‘Love Someone’

Below is what happens if the entered song name is incorrect or not among the songs in our data.

```python
recommend_songs('Love me like you do')
```

Output:

If the input song name is not in the dataset

Conclusion

This model would require a lot of changes before it could be used in a real-world music app or website, but it gives an overview of how recommendation systems are built and used.
