Lex code to count total number of tokens

Last Updated : 21 May, 2019

Lex is a computer program that generates lexical analyzers; it was written by Mike Lesk and Eric Schmidt. Lex reads an input stream that specifies the lexical analyzer (a set of patterns, each paired with C code to run when it matches) and outputs C source code implementing that lexer.

Tokens: A token is a group of characters forming a basic, atomic unit of syntax; that is, a token is a class of lexemes that match a pattern. Examples: keywords, identifiers, operators and separators.

Example:

Input: int p=0, d=1, c=2;

Output:

total no. of tokens = 13

Below is a Lex program that prints each token with its class and counts the total:

%{
#include <stdio.h>
int n = 0;  /* running count of tokens */
%}

%%
"while"|"if"|"else"             {n++; printf("\t keyword : %s\n", yytext);}
"int"|"float"                   {n++; printf("\t keyword : %s\n", yytext);}
[a-zA-Z_][a-zA-Z0-9_]*          {n++; printf("\t identifier : %s\n", yytext);}
"<="|"=="|"="|"++"|"-"|"*"|"+"  {n++; printf("\t operator : %s\n", yytext);}
[(){},;]                        {n++; printf("\t separator : %s\n", yytext);}
[0-9]*"."[0-9]+                 {n++; printf("\t float : %s\n", yytext);}
[0-9]+                          {n++; printf("\t integer : %s\n", yytext);}
[ \t\n]+                        ;  /* whitespace separates tokens but is not counted */
.                               ;  /* ignore any other character */
%%

/* stop after the single input stream (stdin) */
int yywrap(void)
{
    return 1;
}

int main()
{
    yylex();
    printf("\n total no. of tokens = %d\n", n);
    return 0;
}


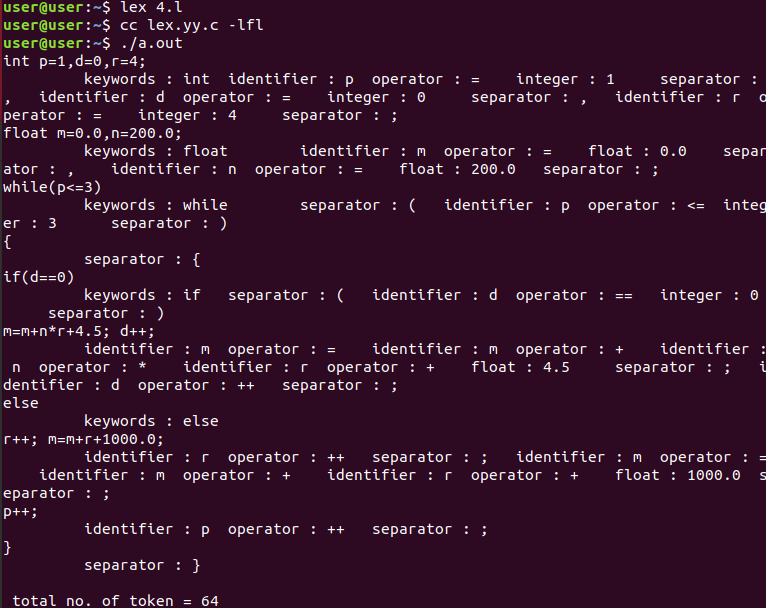

Output:
