K-Medoids is a partitional clustering algorithm that is a slight modification of the k-means algorithm. Both split the data into k clusters by minimizing an error, but k-means minimizes the sum of squared distances to the cluster means, while k-medoids minimizes the sum of dissimilarities between each point and its medoid. This makes k-medoids more robust to noise and outliers than k-means. In k-means, the centroids are the means of the clusters; in k-medoids, actual data points, called medoids, are chosen as the cluster centers. A medoid can be defined as the object of a cluster whose average dissimilarity to all the objects in the cluster is minimal.

Algorithm:

Step 1: Initialize: select k random points out of the n data points as the medoids.

Step 2: Associate each data point with the closest medoid, using any common distance metric.

Step 3: While the cost decreases, for each medoid m and for each data point o that is not a medoid:

- Swap m and o, associate each data point with the closest medoid, and recompute the total cost.

- If the total cost is greater than in the previous step, undo the swap.
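The steps above can be sketched in Python as a naive swap-based loop in the style of PAM. This is a minimal, non-authoritative sketch that assumes Manhattan distance as the dissimilarity (it reproduces the costs in the example below) and a seeded random initialization; the names `manhattan`, `total_cost` and `k_medoids` are hypothetical.

```python
import random
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, ...]


def manhattan(a: Sequence[float], b: Sequence[float]) -> float:
    """Dissimilarity |a - b|: sum of absolute coordinate differences.

    Assumption: Manhattan distance; any other metric could be swapped in.
    """
    return sum(abs(x - y) for x, y in zip(a, b))


def total_cost(points: List[Point], medoids: List[Point]) -> float:
    """Sum of the distances from every point to its closest medoid."""
    return sum(min(manhattan(p, m) for m in medoids) for p in points)


def k_medoids(
    points: List[Point], k: int, seed: int = 0
) -> Tuple[List[Point], Dict[Point, List[Point]], float]:
    """Naive swap-based k-medoids (hypothetical helper, PAM-style).

    Assumes the points are distinct. Returns (medoids, clusters, cost).
    """
    rng = random.Random(seed)
    # Step 1: choose k distinct data points at random as the initial medoids.
    medoids = rng.sample(points, k)
    # Step 2: cost of associating each point with its closest medoid.
    cost = total_cost(points, medoids)

    # Step 3: repeat passes over all (medoid, non-medoid) swaps while the cost drops.
    improved = True
    while improved:
        improved = False
        for i in range(k):
            for o in points:
                if o in medoids:
                    continue
                # Swap medoid i with the non-medoid o and recompute the cost.
                candidate = medoids[:i] + [o] + medoids[i + 1:]
                new_cost = total_cost(points, candidate)
                if new_cost < cost:
                    # Keep the swap: it lowers the total cost.
                    medoids, cost = candidate, new_cost
                    improved = True
                # Otherwise the swap is simply discarded ("undone").

    # Final assignment of each point to its closest medoid.
    clusters: Dict[Point, List[Point]] = {m: [] for m in medoids}
    for p in points:
        nearest = min(medoids, key=lambda m: manhattan(p, m))
        clusters[nearest].append(p)
    return medoids, clusters, cost


if __name__ == "__main__":
    data = [(7, 6), (2, 6), (3, 8), (8, 5), (7, 4),
            (4, 7), (6, 2), (7, 3), (6, 4), (3, 4)]
    medoids, clusters, cost = k_medoids(data, k=2)
    print("medoids:", medoids, "total cost:", cost)
    for medoid, members in clusters.items():
        print(medoid, "->", members)
```

This sketch keeps a swap only when it strictly lowers the total cost, which guarantees that the loop terminates. It recomputes the full cost for every candidate swap, so it is only practical for small datasets.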

In this image, all diamonds are black except three colored diamonds, which act as the medoids.

Each black diamond takes the color of its nearest colored diamond, based on the minimal distance, so the image shows 3 clusters.

When the positions of the colored diamonds change, the cluster groups change as well, again according to the minimal distance.

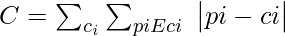

Cost Calculation:

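The dissimilarity between a medoid $c_i$ and an object $p_i$ is calculated as $E = |p_i - c_i|$. In the example below, $|p_i - c_i|$ is the Manhattan distance, i.e. the sum of the absolute differences of the coordinates. The cost in the k-medoids algorithm is given as:

$$C = \sum_{c_i} \sum_{p_i \in c_i} |p_i - c_i|$$

where the outer sum runs over the medoids $c_i$ and the inner sum over the objects $p_i$ assigned to the cluster of $c_i$.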
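As a worked instance of the dissimilarity, assuming Manhattan distance as in the example below, the cost between the medoid $c_i = (3,4)$ and the object $p_i = (2,6)$ is:

$$\text{cost}((3,4),(2,6)) = |2-3| + |6-4| = 1 + 2 = 3$$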

Example:

| x-axis | y-axis |
|---|---|
| 7 | 6 |
| 2 | 6 |
| 3 | 8 |
| 8 | 5 |
| 7 | 4 |
| 4 | 7 |
| 6 | 2 |
| 7 | 3 |
| 6 | 4 |
| 3 | 4 |

Let us randomly choose 2 medoids: (3,4) and (7,4). Now calculate the distance from each remaining point to each medoid:

- (7,6) is closest to (7,4), distance 2

- (2,6) is closest to (3,4), distance 3

- (3,8) is closest to (3,4), distance 4

- (8,5) is closest to (7,4), distance 2

- (4,7) is closest to (3,4), distance 4

- (6,2) is closest to (7,4), distance 3

- (7,3) is closest to (7,4), distance 1

- (6,4) is closest to (7,4), distance 1
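As a quick check of these assignments, a short Python sketch can compute each non-medoid point's closest medoid and the distance to it. It assumes Manhattan distance (consistent with the costs used below); the helper `assign` is a hypothetical name.

```python
from typing import Dict, List, Tuple

Point = Tuple[int, int]


def manhattan(a: Point, b: Point) -> int:
    # Assumption: Manhattan distance |x1 - x2| + |y1 - y2|.
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def assign(points: List[Point], medoids: List[Point]) -> Dict[Point, Tuple[Point, int]]:
    """Map each non-medoid point to (closest medoid, distance to that medoid)."""
    result: Dict[Point, Tuple[Point, int]] = {}
    for p in points:
        if p in medoids:
            continue  # medoids are not reassigned
        best = min(medoids, key=lambda m: manhattan(p, m))
        result[p] = (best, manhattan(p, best))
    return result


data = [(7, 6), (2, 6), (3, 8), (8, 5), (7, 4), (4, 7), (6, 2), (7, 3), (6, 4), (3, 4)]
for point, (medoid, dist) in assign(data, [(3, 4), (7, 4)]).items():
    print(point, "-> nearest medoid", medoid, "distance", dist)
```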

After this assignment, the clusters formed are:

{(3,4); (2,6); (3,8); (4,7)}

{(7,4); (6,2); (6,4); (7,3); (8,5); (7,6)}

The cost is the sum of the distances from each non-medoid point to the medoid of its cluster.

Total cost = cost((3,4),(2,6)) + cost((3,4),(3,8)) + cost((3,4),(4,7)) + cost((7,4),(6,2)) + cost((7,4),(6,4)) + cost((7,4),(7,3)) + cost((7,4),(8,5)) + cost((7,4),(7,6))

= 3 + 4 + 4 + 3 + 1 + 1 + 2 + 2

= 20

Now let us choose some other point to be a medoid instead of (7,4). Let us randomly choose (7,3), so (3,4) and (7,3) are the new medoids.

| x-axis | y-axis |
|---|---|
| 7 | 6 |
| 2 | 6 |
| 3 | 8 |
| 8 | 5 |
| 7 | 4 |
| 4 | 7 |
| 6 | 2 |
| 7 | 3 |
| 6 | 4 |
| 3 | 4 |

Repeating the same procedure, calculate the distance from each point to each medoid:

- (7,6) is closest to (7,3), distance 3

- (2,6) is closest to (3,4), distance 3

- (3,8) is closest to (3,4), distance 4

- (8,5) is closest to (7,3), distance 3

- (4,7) is closest to (3,4), distance 4

- (6,2) is closest to (7,3), distance 2

- (7,4) is closest to (7,3), distance 1

- (6,4) is closest to (7,3), distance 2

The clusters are {(3,4); (2,6); (3,8); (4,7)} and {(7,3); (7,6); (8,5); (6,2); (7,4); (6,4)}.

Calculating the total cost:

Total cost = cost((3,4),(2,6)) + cost((3,4),(3,8)) + cost((3,4),(4,7)) + cost((7,3),(7,6)) + cost((7,3),(8,5)) + cost((7,3),(6,2)) + cost((7,3),(7,4)) + cost((7,3),(6,4))

= 3 + 4 + 4 + 3 + 3 + 2 + 1 + 2

= 22

The total cost with (7,3) as a medoid (22) is greater than the total cost with (7,4) as a medoid (20), so the swap is undone and (7,4) remains the medoid. The clusters after this step are therefore {(3,4); (2,6); (3,8); (4,7)} and {(7,4); (6,2); (6,4); (7,3); (8,5); (7,6)}. A full run of Step 3 would go on to try the remaining medoid/non-medoid swaps in the same way.
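The swap test from Step 3 can be reproduced on this data with a minimal Python sketch, again assuming Manhattan distance: it computes the total cost for both medoid pairs and keeps the swap only if it lowers the cost. `total_cost` is a hypothetical helper name.

```python
from typing import List, Tuple

Point = Tuple[int, int]


def total_cost(points: List[Point], medoids: List[Point]) -> int:
    """Sum of Manhattan distances (assumed metric) from each point to its nearest medoid.

    Medoids contribute 0, since their distance to themselves is 0.
    """
    return sum(
        min(abs(p[0] - m[0]) + abs(p[1] - m[1]) for m in medoids) for p in points
    )


data = [(7, 6), (2, 6), (3, 8), (8, 5), (7, 4), (4, 7), (6, 2), (7, 3), (6, 4), (3, 4)]

current = [(3, 4), (7, 4)]
candidate = [(3, 4), (7, 3)]  # swap medoid (7, 4) with non-medoid (7, 3)

before = total_cost(data, current)
after = total_cost(data, candidate)

# Keep the swap only if it lowers the total cost; otherwise undo it.
medoids = candidate if after < before else current
print(before, after, medoids)  # 20 22 [(3, 4), (7, 4)]
```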

Advantages:

- It is simple to understand and easy to implement

- The k-medoids algorithm converges in a finite number of steps, since each accepted swap lowers the total cost and there are only finitely many sets of k medoids

Disadvantages:

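- It is applicable only when a dissimilarity (distance) between data points is defined.

- It requires specifying the number of clusters (k) in advance.

- Although it is more robust than k-means, noisy data and outliers can still affect the result.

- It does not work well when clusters have non-linear (arbitrary, non-convex) shapes.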
