Julia is a relatively new entrant in data science that is fast and easy to learn and work with. It is a promising language focused mainly on scientific computing, with execution speed comparable to C/C++ and built-in support for parallelism. Julia is a good fit for deep learning code because most deep learning frameworks use C++ at the backend (where performance matters) and Python at the frontend (for ease of use), whereas Julia aims to offer both in a single language. Parallelism plays a big part in writing non-trivial deep learning code. Julia's syntax is also similar to MATLAB's, which makes the transition easier for many programmers.

Julia is an open-source programming language that has gained popularity in recent years for combining ease of use with high performance. It is particularly well-suited for scientific computing, machine learning, and deep learning applications.

There are a few popular Julia libraries for deep learning, such as Flux.jl and Mocha.jl.

Flux.jl is a machine learning library for Julia that provides a high-level interface for building and training deep learning models. It is built on top of the popular Julia library, Zygote.jl, which provides automatic differentiation. This makes it easy to define and train complex neural networks in Julia.
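The automatic differentiation mentioned above can be illustrated with a minimal sketch. It assumes Flux.jl is installed and relies on the `gradient` function that Flux re-exports from Zygote.jl; the function `f` is an arbitrary example, not something from a real model.

```julia
using Flux  # assumption: Flux.jl is installed; it re-exports Zygote's `gradient`

# An ordinary Julia function; nothing about it is specific to Flux.
f(x) = 3x^2 + 2x + 1

# `gradient` returns a tuple with one entry per argument of `f`.
df(x) = gradient(f, x)[1]

println(df(2.0))  # analytically, f'(x) = 6x + 2
```

The same mechanism is what computes the gradients of a loss with respect to the model parameters during training.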

Mocha.jl is a deep learning framework for Julia, inspired by the C++ framework Caffe, that provides a simple and efficient way to train deep learning models. It offers a pure-Julia CPU backend as well as a GPU backend built on CUDA, NVIDIA’s parallel computing platform, which makes it possible to train deep learning models on GPUs. Mocha.jl is no longer actively maintained, so Flux.jl or Knet are usually better choices for new projects.

Here’s a general overview of how to use Julia for deep learning:

Install Julia and the required packages: Julia can be installed from the official website, and the required packages such as Flux.jl or Mocha.jl can be installed using the Julia package manager.

Define the model: The model can be defined using the Flux.jl or Mocha.jl library.

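Define the loss function: The loss function measures how far the model's predictions are from the true labels; for classification, cross-entropy is a common choice.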

The main deep learning library for Julia is Flux.jl. Frameworks such as PyTorch and TensorFlow implement their performance-critical parts in C++ and CUDA to get good CPU and GPU performance, which makes it difficult to write custom code for experimental purposes. This is where Julia comes into the picture: with Flux.jl, custom components such as a loss function can be written directly in Julia.
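As a rough illustration of writing a custom loss directly in Julia, the sketch below defines cross-entropy and a class-weighted variant using only the base language. The function names, the weights `w`, and the small constant `ϵ` are assumptions made for this example; they are not part of Flux's API.

```julia
# Cross-entropy between predicted probabilities ŷ and one-hot targets y,
# both stored as (classes x batch) matrices. ϵ guards against log(0).
function cross_entropy(ŷ, y; ϵ = 1f-7)
    return -sum(y .* log.(ŷ .+ ϵ)) / size(y, 2)
end

# Hypothetical custom variant: weight each class differently, for example
# to penalise mistakes on a rare class more heavily.
function weighted_cross_entropy(ŷ, y, w; ϵ = 1f-7)
    return -sum(w .* y .* log.(ŷ .+ ϵ)) / size(y, 2)
end

# Two samples, three classes (made-up numbers).
ŷ = Float32[0.7 0.2; 0.2 0.5; 0.1 0.3]
y = Float32[1 0; 0 1; 0 0]
w = Float32[1, 2, 1]

println(cross_entropy(ŷ, y))
println(weighted_cross_entropy(ŷ, y, w))
```

Because both functions are plain Julia, they can be passed to a training routine in place of a built-in loss without any extra glue code.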

Packages and dataset

We will import Flux, Statistics, and MLDatasets.
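A minimal sketch of this setup follows. The installation lines are commented out because they need network access, and a random array stands in for the MNIST images so that the snippet runs offline; the array name `raw_images` and the sample count of 100 are assumptions made for illustration only.

```julia
# One-time installation (uncomment to run; requires network access):
# using Pkg
# Pkg.add(["Flux", "MLDatasets"])   # Statistics ships with Julia

using Statistics

# Stand-in for the MNIST images: 28x28 grayscale, 100 samples.
# These are random values, NOT real data.
raw_images = rand(Float32, 28, 28, 100)

# Flux's convolution layers expect width x height x channels x batch,
# so a singleton channel dimension is added.
x_train = reshape(raw_images, 28, 28, 1, :)

println(size(x_train))   # (28, 28, 1, 100)
println(mean(x_train))   # average pixel intensity of the stand-in data
```

With the real dataset, the images loaded through MLDatasets would take the place of `raw_images`; the reshape step stays the same.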

The Model:

    model = Chain(
        # 28x28 => 14x14
        Conv((5, 5), 1 => 8, relu; pad = 2, stride = 2),
        # 14x14 => 7x7
        Conv((3, 3), 8 => 16, relu; pad = 1, stride = 2),
        # 7x7 => 4x4
        Conv((3, 3), 16 => 32, relu; pad = 1, stride = 2),
        # 4x4 => 2x2
        Conv((3, 3), 32 => 32, relu; pad = 1, stride = 2),
        # Average-pool each feature map over its width and height
        GlobalMeanPool(),
        Flux.flatten,
        Dense(32, 10),
        softmax,
    )
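The feature map sizes noted in the model's comments can be checked with the usual convolution output formula, floor((n + 2·pad − k) / stride) + 1. The sketch below is plain Julia and does not call Flux; the helper names `conv_out` and `feature_map_sizes` are made up for this example.

```julia
# Output size of a convolution along one spatial dimension.
conv_out(n, k; pad = 0, stride = 1) = fld(n + 2pad - k, stride) + 1

# Apply a sequence of (kernel, pad, stride) layers to an input of size n
# and record the size after every layer.
function feature_map_sizes(n, layers)
    sizes = [n]
    for (k, pad, stride) in layers
        n = conv_out(n, k; pad = pad, stride = stride)
        push!(sizes, n)
    end
    return sizes
end

# The four Conv layers of the model, as (kernel, pad, stride).
layers = [(5, 2, 2), (3, 1, 2), (3, 1, 2), (3, 1, 2)]
sizes = feature_map_sizes(28, layers)

println(sizes)  # [28, 14, 7, 4, 2]
```

After the last convolution, the 2x2 maps are averaged by the global pooling layer, which is why the final dense layer receives 32 inputs (one per channel).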

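Now we can use the onecold() function to decode the predictions, that is, to turn each column of class probabilities into a class label:

    # Fetch the predictions (one column of 10 class probabilities per image)
    y = model(x_train)
    # Decode the predictions into digit labels 0-9
    y = onecold(y, 0:9)
    println("Prediction for image 1: $(y[1])")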
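To make the decoding step concrete, the sketch below mimics what a one-cold decoding does: for every column it picks the label with the highest probability. It is a plain-Julia illustration with made-up numbers, not Flux's implementation, and the helper name `decode` is hypothetical.

```julia
# For each column of `probs`, return the label at the position of the
# largest entry.
decode(probs, labels) = [labels[argmax(col)] for col in eachcol(probs)]

# Three classes (labels 0, 1, 2) and two samples.
probs = [0.1 0.8;
         0.7 0.1;
         0.2 0.1]

println(decode(probs, 0:2))  # [1, 0]
```

The first column peaks in its second row, which maps to label 1; the second column peaks in its first row, which maps to label 0.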

Training The Model:

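    number_epochs = 10
    # loss, ps (e.g. Flux.params(model)), train_data, opt and accuracy
    # must be defined before this point
    @epochs number_epochs Flux.train!(loss, ps, train_data, opt)
    accuracy(model(x_train), y_train)

The @epochs macro runs the expression that follows it number_epochs times. It comes from older Flux releases (imported with `using Flux: @epochs`) and is deprecated in newer ones in favor of a plain for loop over the epochs.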
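For newer Flux releases, the same training step can be sketched with an explicit optimiser state and a plain loop in place of `@epochs`. This is a minimal sketch, assuming a recent Flux version that provides `Flux.setup` and the four-argument `Flux.train!`; the tiny random dataset, the small dense model, and the `accuracy` helper are stand-ins invented for the example, not the MNIST setup above.

```julia
using Flux, Statistics
using Flux: onehotbatch, onecold

# Tiny stand-in data (random, NOT MNIST): 4 features, 3 classes, 64 samples.
x = rand(Float32, 4, 64)
labels = rand(1:3, 64)
y = onehotbatch(labels, 1:3)

# Small model that outputs raw scores (logits), one row per class.
model = Chain(Dense(4 => 16, relu), Dense(16 => 3))

# In the explicit style, the loss receives the model as its first argument.
loss(m, x, y) = Flux.logitcrossentropy(m(x), y)

# Fraction of samples whose decoded prediction matches the label.
accuracy(m, x, labels) = mean(onecold(m(x), 1:3) .== labels)

opt_state = Flux.setup(Flux.Adam(0.01), model)
train_data = [(x, y)]            # one full batch per epoch

number_epochs = 10
for epoch in 1:number_epochs     # plain loop instead of @epochs
    Flux.train!(loss, model, train_data, opt_state)
end

println("training accuracy: ", accuracy(model, x, labels))
```

Since the labels here are random, the reported accuracy is not meaningful; the point is only the shape of the training loop.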

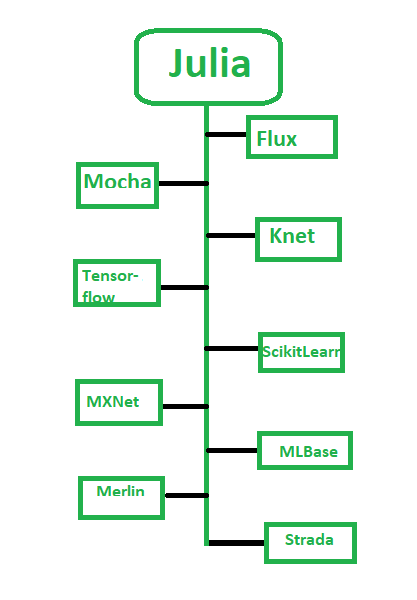

Best Frameworks for Julia

Multiple frameworks can be used with Julia, depending on the user’s needs. Some of the most commonly used are:

Flux

Flux is a deep learning and machine learning library. It provides a simple and intuitive way to define models, close to mathematical notation. Because existing Julia libraries are differentiable, they can be incorporated directly into Flux models.

Mocha.jl

- It is a deep learning library for Julia.

- Mocha.jl is written entirely in Julia. It is designed around Julia interfaces and can interact with core Julia functionality and other packages.

- With the Julia backend, the library has minimal dependencies. There is no need for root privileges or for installing any external dependencies.

- It promotes modularity and correctness.

Knet

Knet is a deep learning framework implemented in Julia. It allows models to be defined by describing their forward computation in plain Julia, using conditionals, loops, recursion, tuples, closures, dictionaries, array indexing, concatenation, and other high-level language features. The library supports GPU operations and automatic differentiation using dynamic computational graphs for models defined in plain Julia.

Knet documentation has detailed instructions for handling major deep learning building blocks, some of which are listed below:

- Backpropagation

- Convolutional Neural Networks

- RNN

- Reinforcement learning

TensorFlow.jl

TensorFlow.jl is a Julia wrapper for TensorFlow, the popular open-source machine learning framework. The wrapper is useful for tasks such as fast ingestion of data, especially data in uncommon formats, and fast post-processing of inference results, such as calculating statistics and visualizations that do not have a canned vectorized implementation.

ScikitLearn.jl

ScikitLearn.jl is a Julia wrapper for the popular Python library Scikit-learn. It implements the Scikit-learn interface and algorithms in Julia. It provides a uniform interface for training and using models, as well as a set of tools for chaining (pipelines), evaluating, and tuning model hyperparameters. It supports both models from the Julia ecosystem and those of the Scikit-learn library.

The major features of ScikitLearn.jl are listed below:

- Support for DataFrames

- Hyperparameter tuning

- Feature unions and pipelines

- Cross-validation

MXNet.jl

MXNet.jl is the Apache MXNet Julia package, which brings flexible and efficient GPU computing and state-of-the-art deep learning to Julia. Its features include efficient tensor and matrix computation across multiple devices, including multiple CPUs, GPUs, and distributed server nodes. It also offers flexible symbolic manipulation for composing and constructing state-of-the-art deep learning models.

MLBase.jl

MLBase.jl is a Julia package that provides a collection of useful tools to support machine learning programs, including data manipulation and preprocessing, score-based classification, performance evaluation, cross-validation, and model tuning.

MLBase.jl may be used for the following tasks:

- Preprocessing and data manipulation

- Performance evaluation

- Cross-validation

- Model tuning
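To show what the cross-validation task in this list involves, here is a minimal k-fold split written in plain Julia with only the standard library. It deliberately does not use MLBase.jl's own API; the function name `kfold_indices` and the seed are assumptions made for the example.

```julia
using Random

# Split the indices 1:n into k validation folds of roughly equal size.
# Every index appears in exactly one fold.
function kfold_indices(n, k; rng = Random.default_rng())
    perm = randperm(rng, n)
    return [perm[i:k:n] for i in 1:k]
end

folds = kfold_indices(10, 3; rng = MersenneTwister(42))

for (i, val_idx) in enumerate(folds)
    # Everything outside the validation fold is used for training.
    train_idx = setdiff(1:10, val_idx)
    println("fold $i: train on $(length(train_idx)), validate on $(length(val_idx))")
end
```

A model would be trained once per fold on `train_idx` and scored on `val_idx`, and the scores averaged to estimate its performance.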

Merlin

Merlin is a deep learning framework written in Julia. It aims to be a fast, flexible, and compact deep learning library for machine learning. It requires Julia 0.6 and, on OSX or Linux, g++. The library runs on CPUs and CUDA GPUs.

Strada

Strada is an open-source deep learning library for Julia, based on the popular Caffe framework. The library supports convolutional and recurrent neural network training, both on CPUs and GPUs. Its features include flexibility, support for Caffe features, and integration with Julia.

Application Areas of Julia

- Parallel Supercomputing for Astronomy: The Celeste research team spent three years developing and testing a new parallel computing method that was used to process the Sloan Digital Sky Survey dataset and produce the most accurate catalog of 188 million astronomical objects in just 14.6 minutes with state-of-the-art point and uncertainty estimates.

- Tangent Works: Tangent Works uses Julia to build a comprehensive analytics solution that blurs the barrier between prototyping done by data scientists and product development done by developers.

- Diabetic Retinopathy: Diabetic retinopathy is an eye disease that affects more than 126 million diabetics and accounts for more than 5% of blindness cases worldwide. Timely screening and diagnosis can help prevent vision loss for millions of diabetics worldwide. IBM and Julia Computing analyzed eye fundus images provided by Drishti Eye Hospitals and built a deep learning solution that provides eye diagnosis and care to thousands of rural Indians.

- Artificial intelligence: Julia plays an important role in artificial intelligence because it is well suited to deep learning and is designed to implement basic mathematical and scientific computations quickly.

Julia is a versatile programming language that is well-suited for a wide range of applications. Here are some of the main application areas of Julia:

- Scientific computing: Julia is well-suited for scientific computing applications such as numerical analysis, optimization, and simulation, due to its high performance and ease of use.

- Machine learning and deep learning: Julia has a growing ecosystem of machine learning and deep learning libraries such as Flux.jl and Mocha.jl, which make it easy to implement and train complex neural networks.

- Data Science: Julia has powerful libraries for data manipulation, visualization, and statistics such as DataFrames.jl, Gadfly.jl, and Statistics.jl. It’s also a great choice for big data processing and distributed computing with libraries like JuliaDB and Distributed.jl.

- Optimization: Julia has powerful optimization libraries such as JuMP.jl and Optim.jl, which can be used to solve a wide range of optimization problems.

- Financial modeling: Julia is well-suited for financial modeling applications such as risk management and portfolio optimization, due to its high performance and support for parallel computing.

- High-Performance Computing: Julia has built-in support for parallel and distributed computing, which makes it an attractive option for high-performance computing tasks such as image processing, simulations, and data analysis.

- Web development: Julia has libraries such as HTTP.jl (the successor to the older HttpServer.jl) and Genie.jl for building web applications, and Gumbo.jl for parsing HTML when web scraping.

- Robotics and control systems: Julia has packages such as ControlSystems.jl and RigidBodyDynamics.jl that help implement control systems and robotic algorithms.
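The built-in parallelism mentioned under High-Performance Computing can be sketched with Julia's standard multi-threading tools. The example below splits an array into chunks and sums the squares of each chunk in its own task; the function name and the chunking strategy are choices made for this illustration, and real speed-ups require starting Julia with more than one thread (for example `julia -t 4`).

```julia
# Sum of squares computed in parallel: one task per chunk of the input.
function parallel_sum_of_squares(xs; ntasks = Threads.nthreads())
    # Split the index range into at most `ntasks` contiguous chunks.
    chunk_len = cld(length(xs), ntasks)
    chunks = Iterators.partition(eachindex(xs), chunk_len)

    # Each task reduces its own chunk; no shared mutable state is needed.
    tasks = [Threads.@spawn sum(abs2, view(xs, idx)) for idx in chunks]

    # Wait for all tasks and combine the partial sums.
    return sum(fetch.(tasks))
end

xs = collect(1.0:1000.0)
println(parallel_sum_of_squares(xs))
println("threads available: ", Threads.nthreads())
```

With a single thread the tasks simply run one after another, so the function gives the same result either way.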
