PyTorch is an open-source machine-learning framework based on the Torch library. It was originally developed by Facebook's AI Research lab (now Meta AI).

It is widely used for computer vision and natural language processing applications. PyTorch represents data as tensors, which can run on GPUs to accelerate computation.

Differentiation is a core part of calculus. In this article, we will see how to calculate the derivative of a function with PyTorch, using examples.

In machine learning, the gradient is the vector of partial derivatives of a function (e.g. a cost function) that has more than one input variable.
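As a compact way to read the definition above, the gradient can be written as follows. The symbols $x_1, \dots, x_n$ for the input variables are an assumed notation, not something the article defines.

```latex
\nabla f(x_1, \dots, x_n) =
\left( \frac{\partial f}{\partial x_1}, \; \dots, \; \frac{\partial f}{\partial x_n} \right)
```

For a function of a single variable this reduces to the ordinary derivative $f'(x)$.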

Example 1:

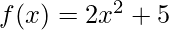

Calculate the derivative of f(x) = 2x^2 + 5 for x = 5.0.

Method 1: By using the backward function

- First, import the torch library.

- Then create the input tensor with requires_grad=True, which tells autograd to record the operations applied to it.

- Define the function f = 2x^2 + 5.

- Call f.backward() to run the backward pass, which computes the gradients automatically.

- Read the derivative of the function at the given input from x.grad.

Note: The input must be a floating-point value; integer tensors cannot require gradients.

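Python3

    import torch

    # The input must be a float tensor that tracks gradients
    x = torch.tensor(5.0, requires_grad=True)

    # f(x) = 2x^2 + 5
    f = 2 * (x ** 2) + 5

    # Backward pass: fills x.grad with df/dx
    f.backward()

    print(x.grad)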

Output:

tensor(20.)

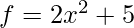

Explanation: Differentiating f(x) = 2x^2 + 5 gives f'(x) = 4x, so f'(5) = 4 * 5 = 20, which matches the output.
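The note above about float inputs can be checked with a short sketch. It assumes a recent PyTorch release, where asking an integer tensor to track gradients raises a RuntimeError; the variable name `int_allowed` is only illustrative.

```python
import torch

# Integer tensors cannot track gradients, so this call is expected to fail.
try:
    torch.tensor(5, requires_grad=True)
    int_allowed = True
except RuntimeError as err:
    int_allowed = False
    print("integer input rejected:", err)

# A floating-point tensor works, as in Method 1.
x = torch.tensor(5.0, requires_grad=True)
f = 2 * (x ** 2) + 5
f.backward()
print(x.grad)
```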

Method 2: By using torch.autograd

torch.autograd is PyTorch’s automatic differentiation engine, which powers neural network training.

It performs backpropagation starting from a variable, which often holds the value of the cost function. To compute the gradient of a tensor with respect to some parameter, you can use the torch.autograd.grad function. It takes the tensor to differentiate and the parameter with respect to which the gradient is computed, and returns a tuple of gradients.

Python3

    import torch
    from torch.autograd import grad

    def f(x):
        return 2 * (x ** 2) + 5

    x = torch.tensor([5.0], requires_grad=True)

    # grad() returns a tuple with one gradient per input; take the first
    grad_f = grad(f(x), x)[0]

    print(grad_f)

Output :

tensor([20.])
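One practical difference between the two methods is worth a sketch: torch.autograd.grad returns the gradients as a tuple and, unlike backward(), does not store anything in x.grad. The variable names below are illustrative.

```python
import torch
from torch.autograd import grad

x = torch.tensor([5.0], requires_grad=True)
y = 2 * (x ** 2) + 5

# grad() returns a tuple with one entry per input tensor.
(dy_dx,) = grad(y, x)

print(dy_dx)    # gradient has the same shape as x
print(x.grad)   # nothing was accumulated on the leaf tensor
```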

Example 2:

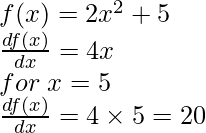

Calculate the derivative of f(x) = 2x^3 + 5x^2 + 7x + 10 for x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]].

Step 1: Import the torch library.

Step 2: Create the input x as a tensor, and make sure requires_grad=True.

Python3

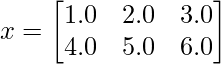

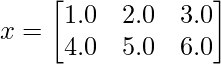

x = torch_input=torch.tensor([[1.0,2.0,3.0],[4.0,5.0,6.0]],requires_grad=True)

x

|

Output:

    tensor([[1., 2., 3.],
            [4., 5., 6.]], requires_grad=True)

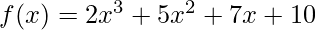

Step 3: Define the function for the given equation, f(x) = 2x^3 + 5x^2 + 7x + 10.

Python3

    def f(x):
        return 2 * (x ** 3) + 5 * (x ** 2) + 7 * x + 10

    print(f(x))

Output:

    tensor([[ 24.,  60., 130.],
            [246., 420., 664.]], grad_fn=<AddBackward0>)

Step 4: Use torch.autograd.functional.jacobian() to compute the derivative. Because it differentiates every output element with respect to every input element, the result has shape torch.Size([2, 3, 2, 3]).

Python3

    # Jacobian of f at x: output shape followed by input shape
    jac = torch.autograd.functional.jacobian(f, x)

    print(jac.shape)
    print(jac)

Output:

    torch.Size([2, 3, 2, 3])
    tensor([[[[ 23.,   0.,   0.],
              [  0.,   0.,   0.]],

             [[  0.,  51.,   0.],
              [  0.,   0.,   0.]],

             [[  0.,   0.,  91.],
              [  0.,   0.,   0.]]],


            [[[  0.,   0.,   0.],
              [143.,   0.,   0.]],

             [[  0.,   0.,   0.],
              [  0., 207.,   0.]],

             [[  0.,   0.,   0.],
              [  0.,   0., 283.]]]])

Here the output tensor has shape [2, 3, 2, 3]. There are alternative methods that return the same derivative values in the same shape as the input.
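The non-zero entries of that [2, 3, 2, 3] tensor are exactly the elementwise derivatives, because each output element of f depends only on the matching input element. A minimal sketch of pulling them out, assuming the same f and x as above (the names `jac`, `n`, and `elementwise` are illustrative):

```python
import torch
from torch.autograd.functional import jacobian

x = torch.tensor([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])

def f(x):
    return 2 * (x ** 3) + 5 * (x ** 2) + 7 * x + 10

jac = jacobian(f, x)   # shape [2, 3, 2, 3]: output dims, then input dims
n = x.numel()

# View the Jacobian as a 6 x 6 matrix (flattened output x flattened input).
# f acts elementwise, so only the diagonal is non-zero.
elementwise = jac.reshape(n, n).diagonal().reshape(x.shape)
print(elementwise)
```

This only works because f is elementwise; for a function that mixes input elements, the off-diagonal entries carry information and cannot be discarded.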

Alternate Method 1: By using torch.autograd.grad()

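Steps 1 to 3 are the same as above.

Step 4: Create a variable z holding the sum of f(x), because torch.autograd.grad() by default requires a scalar output. Since f is applied elementwise, the gradient of the sum with respect to each entry of x is the derivative of f at that entry.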
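The listing for this step is not shown in the text; a minimal sketch consistent with the later listings (which use z = f(x).sum()) would be:

```python
import torch

x = torch.tensor([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], requires_grad=True)

def f(x):
    return 2 * (x ** 3) + 5 * (x ** 2) + 7 * x + 10

# Reduce the 2 x 3 output to a single scalar so grad() can be applied.
z = f(x).sum()
print(z)
```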

Output:

tensor(1544., grad_fn=<SumBackward0>)

Step 5: Use torch.autograd.grad(z, x) to get the derivative value of f(x) with respect to x.

Python3

    grad_f = torch.autograd.grad(z, x)

    print(grad_f)

Output:

    (tensor([[ 23.,  51.,  91.],
             [143., 207., 283.]]),)

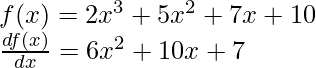

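Explanation: The analytic derivative of f(x) = 2x^3 + 5x^2 + 7x + 10 is f'(x) = 6x^2 + 10x + 7, which we can evaluate directly at x: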

Python3

    # Analytic derivative f'(x) = 6x^2 + 10x + 7
    df = 6 * x ** 2 + 10 * x + 7

    print(df)

Output:

    tensor([[ 23.,  51.,  91.],
            [143., 207., 283.]], grad_fn=<AddBackward0>)

As we can see above, grad_f and df hold the same values.
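Rather than comparing the printed tensors by eye, the agreement can be checked programmatically. A small sketch using torch.allclose, with the same f and x as above (the name `same` is illustrative):

```python
import torch

x = torch.tensor([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], requires_grad=True)

def f(x):
    return 2 * (x ** 3) + 5 * (x ** 2) + 7 * x + 10

z = f(x).sum()
(grad_f,) = torch.autograd.grad(z, x)

# Analytic derivative, detached so it is a plain tensor of values.
df = (6 * x ** 2 + 10 * x + 7).detach()

same = torch.allclose(grad_f, df)
print(same)
```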

Alternate Method 2: By using the backward() function

All steps are the same as above, except that we call z.backward() in place of torch.autograd.grad(z, x) and read the result from the x.grad attribute.

Python3

    import torch

    x = torch.tensor([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], requires_grad=True)

    def f(x):
        return 2 * (x ** 3) + 5 * (x ** 2) + 7 * x + 10

    # Sum to a scalar, then backpropagate; the result lands in x.grad
    z = f(x).sum()
    z.backward()

    print(x.grad)

Output:

    tensor([[ 23.,  51.,  91.],
            [143., 207., 283.]])
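One thing to keep in mind with backward() is that it adds to x.grad instead of overwriting it, so repeated calls accumulate. The sketch below illustrates this and resets the gradient with x.grad.zero_(); the names `first`, `accumulated`, and `fresh` are illustrative.

```python
import torch

x = torch.tensor([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], requires_grad=True)

def f(x):
    return 2 * (x ** 3) + 5 * (x ** 2) + 7 * x + 10

f(x).sum().backward()
first = x.grad.clone()

# A second backward pass adds to the existing gradient.
f(x).sum().backward()
accumulated = x.grad.clone()

# Reset in place before computing a fresh gradient.
x.grad.zero_()
f(x).sum().backward()
fresh = x.grad.clone()

print(torch.allclose(accumulated, 2 * first))
print(torch.allclose(fresh, first))
```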

Example 3: Plot the gradient of sin(x)

Let’s plot sin(x) together with its derivative, cos(x).

Python3

    import torch
    import matplotlib.pyplot as plt

    x = torch.linspace(-5, 5, steps=200, requires_grad=True)

    def f(x):
        return torch.sin(x)

    # Summing gives a scalar, so backward() fills x.grad with cos(x)
    z = f(x).sum()
    z.backward()

    # detach() returns tensors without gradient tracking, safe to plot
    plt.plot(x.detach(), f(x).detach(), label="f(x) = sin(x)")
    plt.plot(x.detach(), x.grad, label="f'(x) = cos(x)")
    plt.ylabel("f(x) or f'(x)")
    plt.xlabel('x')
    plt.legend()
    plt.show()

Outputs:

Sin(x) and derivative of Sin(x)

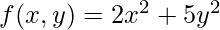

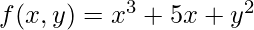

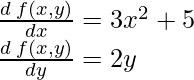
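Besides the plot, the claim that the computed gradient of sin(x) equals cos(x) can be checked numerically. A short sketch, where `max_err` is an illustrative name for the largest absolute difference:

```python
import torch

x = torch.linspace(-5, 5, steps=200, requires_grad=True)

# Same reduction as in the plotting example.
torch.sin(x).sum().backward()

# Largest absolute difference between autograd's result and cos(x).
max_err = (x.grad - torch.cos(x.detach())).abs().max().item()
print(max_err)
```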

Partial Derivative

The partial derivative of a function of several variables is its derivative with respect to one of those variables, with the others held constant.
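To make "with the others held constant" concrete, the sketch below approximates each partial derivative with a central finite difference that moves only one variable, and compares it with autograd. The function f(x, y) = 2x^2 + 5y^2, the point (1, 5), and the step size h are choices made here for illustration.

```python
import torch

def f(x, y):
    return 2 * (x ** 2) + 5 * (y ** 2)

x0, y0, h = 1.0, 5.0, 1e-3

# Move x only (y fixed), then y only (x fixed).
df_dx_num = (f(x0 + h, y0) - f(x0 - h, y0)) / (2 * h)
df_dy_num = (f(x0, y0 + h) - f(x0, y0 - h)) / (2 * h)

# The same partial derivatives from autograd.
x = torch.tensor(x0, requires_grad=True)
y = torch.tensor(y0, requires_grad=True)
df_dx, df_dy = torch.autograd.grad(f(x, y), (x, y))

print(df_dx_num, df_dx.item())
print(df_dy_num, df_dy.item())
```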

Example 1:

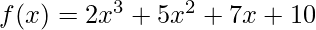

Calculate df/dx and df/dy of f(x, y) = 2x^2 + 5y^2 for x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]] and y = [[5.0, 6.0, 7.0], [8.0, 9.0, 10.0]].

Let’s use jacobian() from torch.autograd.functional

Python3

import torch

from torch.autograd.functional import jacobian

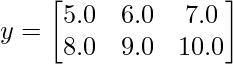

x = torch_input=torch.tensor([[1.0,2.0,3.0],[4.0,5.0,6.0]],requires_grad=True)

y = torch_input=torch.tensor([[5.0,6.0,7.0],[8.0,9.0,10.0]],requires_grad=True)

def f(x,y):

return 2*(x**2) + 5*(y**2)

gred = jacobian(func = f,inputs=(x,y))

print('df(x,y)/dx = ',gred[0])

print('\ndf(x,y)/dy =',gred[1])

|

Output:

    df(x,y)/dx = tensor([[[[ 4.,  0.,  0.],
              [ 0.,  0.,  0.]],

             [[ 0.,  8.,  0.],
              [ 0.,  0.,  0.]],

             [[ 0.,  0., 12.],
              [ 0.,  0.,  0.]]],


            [[[ 0.,  0.,  0.],
              [16.,  0.,  0.]],

             [[ 0.,  0.,  0.],
              [ 0., 20.,  0.]],

             [[ 0.,  0.,  0.],
              [ 0.,  0., 24.]]]])

    df(x,y)/dy = tensor([[[[ 50.,   0.,   0.],
              [  0.,   0.,   0.]],

             [[  0.,  60.,   0.],
              [  0.,   0.,   0.]],

             [[  0.,   0.,  70.],
              [  0.,   0.,   0.]]],


            [[[  0.,   0.,   0.],
              [ 80.,   0.,   0.]],

             [[  0.,   0.,   0.],
              [  0.,  90.,   0.]],

             [[  0.,   0.,   0.],
              [  0.,   0., 100.]]]])

Alternate method: By using grad() from torch.autograd

Python3

    import torch
    from torch.autograd import grad

    x = torch.tensor([[1.0, 2.0, 3.0],
                      [4.0, 5.0, 6.0]],
                     requires_grad=True)
    y = torch.tensor([[5.0, 6.0, 7.0],
                      [8.0, 9.0, 10.0]],
                     requires_grad=True)

    def f(x, y):
        return 2 * (x ** 2) + 5 * (y ** 2)

    # Sum to a scalar, then take the gradient with respect to both inputs
    z = f(x, y).sum()
    grad_f = grad(z, inputs=(x, y))

    print('df(x,y)/dx =', grad_f[0])
    print('\ndf(x,y)/dy =', grad_f[1])

Output:

    df(x,y)/dx = tensor([[ 4.,  8., 12.],
            [16., 20., 24.]])

    df(x,y)/dy = tensor([[ 50.,  60.,  70.],
            [ 80.,  90., 100.]])

Example 2: Plot the graph

Find the partial derivatives of f(x, y) = x^3 + 5x + y^2 and plot the graph.

Solution:

Python3

    import torch
    import matplotlib.pyplot as plt

    x = torch.linspace(-5, 5, steps=200, requires_grad=True)
    y = torch.linspace(-5, 5, steps=200, requires_grad=True)

    def f(x, y):
        return (x ** 3) + 5 * x + (y ** 2)

    # Summing gives a scalar, so backward() fills x.grad and y.grad
    z = f(x, y).sum()
    z.backward()

    plt.figure(figsize=(13, 5))

    # Left panel: f and its partial derivative with respect to x
    plt.subplot(1, 2, 1)
    plt.plot(x.detach(), f(x, y).detach(), label="f(x,y) = x^3 +5x + y^2")
    plt.plot(x.detach(), x.grad, label="d(f(x,y))/dx = 3x^2 + 5")
    plt.ylabel('f(x,y) & d(f(x,y))/dx')
    plt.xlabel('x')
    plt.legend()
    plt.title("d(f(x,y))/dx")

    # Right panel: f and its partial derivative with respect to y
    plt.subplot(1, 2, 2)
    plt.plot(y.detach(), f(x, y).detach(), label="f(x,y) = x^3 +5x + y^2")
    plt.plot(y.detach(), y.grad, label="d(f(x,y))/dy = 2y")
    plt.ylabel('f(x,y) & d(f(x,y))/dy')
    plt.xlabel('y')
    plt.legend()
    plt.title("d(f(x,y))/dy")

    plt.show()

Outputs:

Derivative of f(x,y) = x^3 +5x + y^2
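The curves in the plot can also be checked against the analytic partial derivatives 3x^2 + 5 and 2y named in the legend. A minimal sketch, with `ok_x` and `ok_y` as illustrative names:

```python
import torch

x = torch.linspace(-5, 5, steps=200, requires_grad=True)
y = torch.linspace(-5, 5, steps=200, requires_grad=True)

def f(x, y):
    return (x ** 3) + 5 * x + (y ** 2)

f(x, y).sum().backward()

# Compare autograd's results with the hand-derived expressions.
ok_x = torch.allclose(x.grad, 3 * x.detach() ** 2 + 5)
ok_y = torch.allclose(y.grad, 2 * y.detach())
print(ok_x, ok_y)
```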
