How Does a Computer Render 3D Objects on a 2D Screen?

Last Updated: 04 Jan, 2022

Have you ever wondered how a computer renders a 3D object or model on a 2D screen for us to see? Under the hood, a number of GPU- and CPU-intensive operations work hard to imitate the real-life lighting, materials, and textures of a 3D model. In this article, let’s learn the basics of how a 3D object is rendered.

A 3D model

To understand 3D rendering, you should first understand a 3D model. A 3D object represented in a computer is made up of vertices, which are points at arbitrary positions in 3D space. At least 3 vertices are required to represent a face. Why 3? Consider a face made of 4 vertices instead. Initially, all four points may lie on the same plane, but if you move one of them, the four points no longer lie on a single plane and the face is no longer flat. Three points that are not on one line, on the other hand, always lie on exactly one plane, so a triangle is always flat. Hence, the triangle is chosen as the basic face of a 3D model. At its simplest, a 3D file is a list of vertices together with the faces that connect them. Much like images, 3D objects can be stored in various file formats, each with specific advantages.
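The flatness argument can be checked numerically. The sketch below is illustrative (the helper names are hypothetical, not from any library): it builds the plane through the first three points from their cross product and tests whether a fourth point lies on it, using the scalar triple product.

```python
# Minimal sketch: a triangle is always flat, but a quad may not be.
# Vectors are plain (x, y, z) tuples; no external libraries are assumed.

def sub(a, b):
    """Component-wise a - b."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    """Cross product a x b (perpendicular to both a and b)."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def is_coplanar(p0, p1, p2, p3, eps=1e-9):
    """True if p3 lies on the plane through p0, p1, p2 (assumed not collinear).

    The plane's normal is (p1 - p0) x (p2 - p0); p3 is on the plane exactly
    when (p3 - p0) is perpendicular to that normal.
    """
    normal = cross(sub(p1, p0), sub(p2, p0))
    return abs(dot(sub(p3, p0), normal)) < eps

# A flat square: all four corners share z = 0.
quad = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]
print(is_coplanar(*quad))   # True

# Lift one corner: the four points no longer form a single flat face.
quad[3] = (0, 1, 0.5)
print(is_coplanar(*quad))   # False
```

Any three of these points still define a valid plane, which is why splitting the bent quad into two triangles always yields flat faces.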

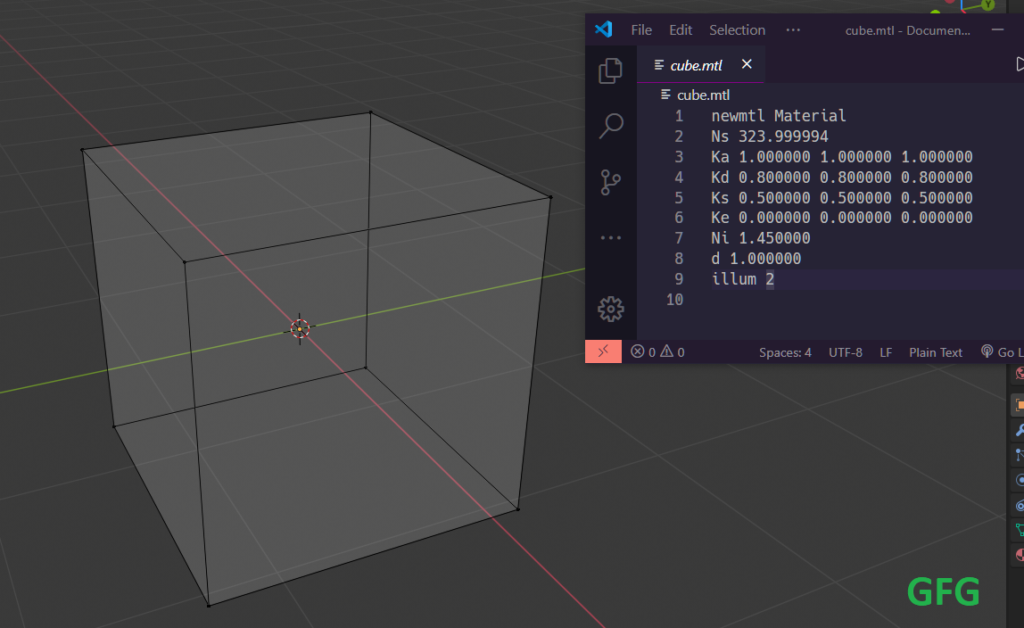

A 3D cube in Blender and its .obj file
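In a Wavefront .obj file, lines starting with `v` list vertex positions and lines starting with `f` list faces as 1-based vertex indices. The sketch below reads only those two line types and splits every polygon into triangles. The cube data is a hand-written example, not the exact file shown in the figure, and the loader ignores normals, texture coordinates, materials, and negative indices, so it is far from a complete .obj parser.

```python
# Minimal sketch: read "v" and "f" lines from .obj text and triangulate faces.
# CUBE_OBJ is a hypothetical hand-written cube made of six quad faces.

CUBE_OBJ = """\
v -1 -1 -1
v  1 -1 -1
v  1  1 -1
v -1  1 -1
v -1 -1  1
v  1 -1  1
v  1  1  1
v -1  1  1
f 1 2 3 4
f 5 8 7 6
f 1 5 6 2
f 2 6 7 3
f 3 7 8 4
f 5 1 4 8
"""

def load_obj(text):
    vertices, triangles = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            vertices.append(tuple(float(c) for c in parts[1:4]))
        elif parts[0] == "f":
            # "f 1/1/1 2/2/2 ..." -> keep only the vertex index; .obj is 1-based.
            idx = [int(p.split("/")[0]) - 1 for p in parts[1:]]
            # Fan triangulation (0,1,2), (0,2,3), ... works for convex polygons.
            for i in range(1, len(idx) - 1):
                triangles.append((idx[0], idx[i], idx[i + 1]))
    return vertices, triangles

verts, tris = load_obj(CUBE_OBJ)
print(len(verts), len(tris))   # 8 12
```

Each quad face becomes two triangles, so the six faces of the cube turn into twelve triangles.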

Rendering 3D objects

Rendering is the process of producing a 2D image from a 3D data file using various computational algorithms. It essentially simulates the geometry and lighting of the 3D scene. Based on the methods and techniques used, rendering can be categorized into the following types:

- Scanline rendering and Rasterization

It is the most basic type of rendering. It projects the geometry of the 3D object onto a 2D plane and fills in the pixels that the projected shapes cover, without taking complex lighting effects such as refraction and scattering into account. One well-known example of this type of rendering is the original DOOM game.

DOOM (game, 1993) using rasterization
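The two core steps of rasterization, projecting 3D points onto a 2D plane and then filling the covered pixels, can be sketched in a few lines. This is a generic triangle rasterizer, not how the DOOM engine itself worked. It assumes a pinhole camera at the origin looking down +z with a focal length of 1, and it draws to a tiny ASCII "screen"; depth testing, lighting, and clipping are left out.

```python
# Minimal sketch: perspective-project one triangle and fill the pixels it covers.
# Assumptions: camera at the origin looking down +z, focal length 1, all z > 0.

WIDTH, HEIGHT = 20, 10

def project(p, width=WIDTH, height=HEIGHT, focal=1.0):
    """Map a 3D point (x, y, z) to 2D pixel coordinates."""
    x, y, z = p
    # Perspective divide: farther points (larger z) move toward the center.
    sx, sy = focal * x / z, focal * y / z
    # Map [-1, 1] to pixel coordinates; screen y grows downward.
    px = (sx + 1) * 0.5 * width
    py = (1 - sy) * 0.5 * height
    return px, py

def edge(a, b, p):
    """Signed area test: which side of the line a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize(tri3d, width=WIDTH, height=HEIGHT):
    a, b, c = (project(v, width, height) for v in tri3d)
    grid = [["." for _ in range(width)] for _ in range(height)]
    for row in range(height):
        for col in range(width):
            p = (col + 0.5, row + 0.5)            # sample at the pixel center
            w0, w1, w2 = edge(b, c, p), edge(c, a, p), edge(a, b, p)
            # Inside if p is on the same side of all three edges
            # (accepts both clockwise and counter-clockwise winding).
            if (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0):
                grid[row][col] = "#"
    return ["".join(r) for r in grid]

triangle = [(-1.0, -1.0, 2.0), (1.0, -1.0, 2.0), (0.0, 1.0, 2.0)]
for line in rasterize(triangle):
    print(line)
```

Moving the triangle farther away (a larger z for every vertex) makes the printed shape smaller, which is the perspective effect produced by the division by z.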

- Ray casting

Ray casting is another early method. It is best known from Wolfenstein 3D: because its algorithm is simple and fairly robust, it was widely used in games that had to render 3D graphics on computers running at speeds as low as 4 MHz.
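A common way to explain Wolfenstein-style ray casting is a 2D grid map: one ray is cast per screen column, it steps from grid line to grid line until it enters a wall cell (the "DDA" technique), and the distance to that wall decides how tall the wall column is drawn. The sketch below is a hypothetical illustration under those assumptions (the map, field of view, and column count are made up) and is not Wolfenstein 3D's actual code.

```python
import math

# Minimal sketch of grid-based ray casting. "1" cells are walls.
MAP = [
    "11111111",
    "10000001",
    "10000001",
    "10000001",
    "11111111",
]

def cast_ray(px, py, angle, grid=MAP, max_steps=64):
    """Distance from (px, py) to the first wall cell along `angle`."""
    dx, dy = math.cos(angle), math.sin(angle)
    map_x, map_y = int(px), int(py)
    # Ray length needed to cross one whole cell in x or in y.
    delta_x = abs(1 / dx) if dx != 0 else math.inf
    delta_y = abs(1 / dy) if dy != 0 else math.inf
    step_x = 1 if dx > 0 else -1
    step_y = 1 if dy > 0 else -1
    # Ray length to the first vertical / horizontal grid line.
    if dx > 0:
        side_x = (map_x + 1 - px) * delta_x
    elif dx < 0:
        side_x = (px - map_x) * delta_x
    else:
        side_x = math.inf
    if dy > 0:
        side_y = (map_y + 1 - py) * delta_y
    elif dy < 0:
        side_y = (py - map_y) * delta_y
    else:
        side_y = math.inf
    for _ in range(max_steps):
        # Advance to whichever grid line the ray reaches first.
        if side_x < side_y:
            dist, side_x, map_x = side_x, side_x + delta_x, map_x + step_x
        else:
            dist, side_y, map_y = side_y, side_y + delta_y, map_y + step_y
        if grid[map_y][map_x] == "1":
            return dist
    return math.inf

def render_columns(px, py, facing, fov=math.pi / 3, columns=8, screen_h=24):
    """Height (in rows) of the wall slice drawn in each screen column."""
    heights = []
    for col in range(columns):
        # Spread the rays evenly across the field of view.
        ray_angle = facing - fov / 2 + fov * (col + 0.5) / columns
        # Perpendicular distance avoids the "fish-eye" distortion.
        perp = cast_ray(px, py, ray_angle) * math.cos(ray_angle - facing)
        heights.append(min(screen_h, int(screen_h / perp)))
    return heights

print(cast_ray(1.5, 2.5, 0.0))        # 5.5
print(render_columns(1.5, 2.5, 0.0))
```

Only one ray per column is needed, instead of one per pixel, which is a large part of why this approach ran on slow hardware.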

- Ray tracing

Ray tracing simulates light and optics through sophisticated algorithms. It renders an image by shooting rays out from the camera (the view source) and recording what happens when they hit different materials at different angles, taking the position of the light sources into account. It can produce far more realistic images than the other methods, simulating reflection, refraction, depth of field, and many other optical effects. The trade-off is rendering time: at least one ray has to be traced for every pixel on the screen, and each ray may bounce many times. This is one reason why VFX-heavy films are expensive to produce; they need enormous computing power, often supplied by render farms (large clusters of computers dedicated to rendering).

A sample scene rendered using Blender (software), showing reflection and refraction
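"Shooting rays out from the camera" means computing one direction per pixel. The sketch below assumes a simple pinhole camera at the origin looking down -z with a vertical field of view in degrees; the function name and the 60-degree default are illustrative choices, not part of any particular renderer.

```python
import math

# Minimal sketch: one primary ("camera") ray per pixel, returned as
# (origin, unit direction). Assumes a pinhole camera at the origin facing -z.

def normalize(v):
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)

def camera_ray(col, row, width, height, fov_deg=60.0):
    aspect = width / height
    scale = math.tan(math.radians(fov_deg) / 2)
    # Pixel center -> coordinates in [-1, 1], with y pointing up.
    x = (2 * (col + 0.5) / width - 1) * aspect * scale
    y = (1 - 2 * (row + 0.5) / height) * scale
    return (0.0, 0.0, 0.0), normalize((x, y, -1.0))

# One ray for each pixel of a tiny 4x3 image.
rays = [camera_ray(c, r, 4, 3) for r in range(3) for c in range(4)]
print(len(rays))   # 12
```

A wider field of view spreads the rays further apart, so the same image width covers more of the scene.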

Basic Ray tracing Algorithm

The basic ray tracing algorithm follows the path of a simple light ray, but traced backwards, starting from the viewer. Imagine a ray projected from a virtual camera. It travels until it hits a solid object and bounces off it; the direction of the bounce depends on the orientation of the surface and on the object's material. The material governs how a light ray behaves when it hits the surface, for example how much of it is reflected. Since a 3D model is made up of tiny triangles, finding where a ray hits a triangle comes down to solving the intersection of a line and a plane, and then checking whether that intersection point lies inside the triangle. If the object is highly detailed (high-poly), the GPU has to perform more calculations to render it; if the model has fewer polygons or triangles (low-poly), the GPU performs fewer calculations. This is one reason why early 3D graphics looked blocky while modern graphics look smooth: older hardware could only afford low-poly models.

Behavior of a single ray on different materials
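A line-plane intersection only tells you where a ray meets the plane of a triangle; a second check decides whether that point is actually inside the triangle. The sketch below does both steps with plain tuples. The function name is hypothetical, and production renderers usually use faster formulations of the same test, such as the Moller-Trumbore algorithm.

```python
# Minimal sketch of a ray/triangle test: line-plane intersection + inside check.

def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def intersect_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Distance t along the ray to the hit point, or None on a miss."""
    normal = cross(sub(v1, v0), sub(v2, v0))
    denom = dot(normal, direction)
    if abs(denom) < eps:              # ray is parallel to the plane
        return None
    # Solve dot(normal, origin + t * direction - v0) = 0 for t.
    t = dot(normal, sub(v0, origin)) / denom
    if t < eps:                       # plane is behind the ray's origin
        return None
    hit = tuple(o + t * d for o, d in zip(origin, direction))
    # Inside test: for every edge, the hit point must be on the inner side,
    # i.e. the edge's cross product points the same way as the normal.
    for a, b in ((v0, v1), (v1, v2), (v2, v0)):
        if dot(cross(sub(b, a), sub(hit, a)), normal) < 0:
            return None
    return t

tri = ((-1, -1, -5), (1, -1, -5), (0, 1, -5))
print(intersect_triangle((0, 0, 0), (0, 0, -1), *tri))   # 5.0
print(intersect_triangle((0, 0, 0), (0, 1, 0), *tri))    # None (parallel)
```

Every extra triangle in a model is another candidate for this test, which is why high-poly models cost more to ray trace unless the renderer adds acceleration structures to skip most triangles.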

As you can see, ray tracing imitates real-world optics. The image above shows the behavior of a single ray, but in practice millions of rays originate from the camera, hitting and bouncing off various objects in cascades. A simple ray tracing algorithm can be expressed as follows:

for every pixel in the image:

- shoot a ray from the camera through the pixel.

- find the first (nearest) object that the ray intersects.

- if the object has a reflective material:

  - recursively shoot out rays from the hit point in the reflected direction, based on the material's reflectivity, until the light bounce limit is reached.

- calculate the light intensity of the pixel from the light sources and the reflected rays.

This is an oversimplified algorithm for ray tracing; in practice, a great deal of additional work goes into optimizing the procedure. In code, each ray is usually represented by an origin point and a direction vector.
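The pixel loop, nearest-hit search, recursive reflection with a bounce limit, and final intensity calculation can be combined into a small runnable program. This is a hypothetical sketch, not a production renderer: to keep it short it uses spheres instead of triangles, a single point light, simple Lambert (diffuse) shading with no shadows, and grayscale output. The scene values (`SPHERES`, `LIGHT`, `MAX_BOUNCES`) are made up for illustration. Each ray is an origin plus a unit direction.

```python
import math

# Minimal recursive ray tracer (illustrative assumptions listed above).
def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def mul(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def norm(a): return mul(a, 1 / math.sqrt(dot(a, a)))

# Each sphere: (center, radius, brightness, reflectivity in [0, 1]).
SPHERES = [
    ((0.0, 0.0, -3.0), 1.0, 0.9, 0.3),
    ((0.0, -101.0, -3.0), 100.0, 0.6, 0.5),   # a huge sphere acts as a floor
]
LIGHT = (5.0, 5.0, 0.0)
MAX_BOUNCES = 3

def hit_sphere(origin, direction, center, radius):
    """Smallest t > 0 where the ray meets the sphere, or None."""
    oc = sub(origin, center)
    b = dot(oc, direction)            # direction is unit length
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    for t in (-b - math.sqrt(disc), -b + math.sqrt(disc)):
        if t > 1e-4:                  # skip hits at or behind the origin
            return t
    return None

def closest_hit(origin, direction):
    best = None
    for sphere in SPHERES:
        t = hit_sphere(origin, direction, sphere[0], sphere[1])
        if t is not None and (best is None or t < best[0]):
            best = (t, sphere)
    return best

def trace(origin, direction, depth=0):
    """Light intensity seen along one ray (0 = black background)."""
    hit = closest_hit(origin, direction)
    if hit is None:
        return 0.0
    t, (center, _radius, brightness, reflectivity) = hit
    point = add(origin, mul(direction, t))
    normal = norm(sub(point, center))
    to_light = norm(sub(LIGHT, point))
    local = brightness * max(0.0, dot(normal, to_light))   # Lambert shading
    if reflectivity > 0 and depth < MAX_BOUNCES:
        # Mirror reflection: r = d - 2 (d . n) n, then recurse.
        reflected = norm(sub(direction, mul(normal, 2 * dot(direction, normal))))
        bounced = trace(point, reflected, depth + 1)
        return (1 - reflectivity) * local + reflectivity * bounced
    return local

def render(width, height, fov_deg=60.0):
    scale = math.tan(math.radians(fov_deg) / 2)
    image = []
    for row in range(height):
        line = []
        for col in range(width):
            # One camera ray through each pixel center.
            x = (2 * (col + 0.5) / width - 1) * (width / height) * scale
            y = (1 - 2 * (row + 0.5) / height) * scale
            line.append(trace((0.0, 0.0, 0.0), norm((x, y, -1.0))))
        image.append(line)
    return image

# Print a small grayscale preview using ASCII characters.
for line in render(32, 16):
    print("".join(" .:-=+*#%@"[min(9, int(v * 10))] for v in line))
```

Raising `MAX_BOUNCES` lets reflections of reflections appear, at the cost of more rays per pixel, which illustrates why the bounce limit matters for rendering time.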

The future of Ray tracing

You might have heard of Nvidia's RTX GPUs, which support real-time ray tracing. Before RTX, real-time ray tracing was largely impractical on consumer hardware because it is so computationally intensive and slow. RTX therefore relies on a hybrid rendering technique that mixes the best of both worlds: fast rasterization for ordinary materials and more advanced ray tracing for reflective materials. GPUs keep getting faster and more parallel, and the realism and clarity that real-time ray tracing can achieve keep improving with them.

