Hadoop Version 3.0 – What’s New?

Last Updated: 10 Sep, 2020

Hadoop is a framework written in Java for solving Big Data problems. Its initial version was released in April 2006, and the Apache community has made many changes since that first release. Work on Hadoop began in 2005 with Doug Cutting and Mike Cafarella, who developed it to support distribution for the Nutch search engine project.

In 2008, Hadoop outperformed supercomputers to become the fastest system of its time at sorting a terabyte of data. Hadoop has come a long way since then and has changed considerably from its previous version, Hadoop 2.x. In this article, we discuss the changes Apache made in Hadoop 3.x to make it faster and more efficient.

What’s New in Hadoop 3.0?

1. JDK 8 is the Minimum Java Version Supported by Hadoop 3.x

Oracle ended public updates for JDK 7 in 2015, so Hadoop 3 users must upgrade to JDK 8 or above to compile and run all the Hadoop files. JDK versions below 8 are no longer supported by Hadoop 3.

2. Erasure Coding is Supported

Erasure coding is a technique for recovering data when a disk fails. It is the same kind of technique used in RAID (Redundant Array of Independent Disks), which many IT companies rely on to recover their data. In Hadoop 3, HDFS (Hadoop Distributed File System) can use erasure coding to provide fault tolerance in the cluster. Because Hadoop clusters are built from commodity hardware, node failures are normal. Hadoop 2 relies on replication to provide a similar kind of fault tolerance.

In Hadoop 2, replicas of each data block are made and stored on different nodes in the cluster. Erasure coding needs roughly half the storage that replication needs to provide a comparable level of fault tolerance: in typical setups its storage overhead is no more than about 50%, whereas the default three-way replication carries a 200% overhead. With the growing amount of data in the industry, this lets developers save a large amount of disk space.
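
The storage comparison can be made concrete with a little arithmetic. The sketch below is only an illustration: it assumes three-way replication and a Reed-Solomon layout with 6 data units and 3 parity units, and the function names and the 100 TB figure are made up for the example.

```python
def replication_overhead(replicas: int) -> float:
    """Extra storage needed, as a fraction of the original data size."""
    # Every copy beyond the first one is pure overhead.
    return float(replicas - 1)


def erasure_coding_overhead(data_units: int, parity_units: int) -> float:
    """Extra storage needed when parity units are added to data units."""
    return parity_units / data_units


# Assumed settings: 3 replicas versus a (6 data, 3 parity) layout.
rep = replication_overhead(3)
ec = erasure_coding_overhead(6, 3)

raw_tb = 100  # hypothetical amount of user data, in terabytes
rep_total = raw_tb * (1 + rep)  # disk space consumed with replication
ec_total = raw_tb * (1 + ec)    # disk space consumed with erasure coding

print(f"replication: {rep:.0%} overhead, {rep_total:.0f} TB on disk")
print(f"erasure coding: {ec:.0%} overhead, {ec_total:.0f} TB on disk")
```

Under these assumptions, the same 100 TB of data occupies half as much disk space with erasure coding as with replication.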

3. More Than Two NameNodes Supported

Hadoop 2.x supports a single active NameNode and a single standby NameNode, with edits replicated to a quorum of three JournalNodes (JNs), so the architecture can tolerate the failure of one node. Hadoop 3.x allows more than one standby NameNode, which makes it better at handling faults than the previous version and useful for Big Data workloads that need high fault tolerance. Users can choose the number of standby NameNodes according to their requirements.

For example, by configuring three NameNodes and five JournalNodes, the cluster can tolerate the failure of two nodes rather than just one.
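
The numbers in this example follow from simple counting, sketched below. The sketch assumes that a JournalNode quorum needs a strict majority to stay available and that one surviving NameNode is enough to serve the cluster; the function names are hypothetical and are not part of any Hadoop API.

```python
def journalnode_failures_tolerated(journal_nodes: int) -> int:
    """A majority quorum of n nodes survives the loss of (n - 1) // 2 nodes."""
    return (journal_nodes - 1) // 2


def namenode_failures_tolerated(name_nodes: int) -> int:
    """One NameNode must remain to act as the active node."""
    return name_nodes - 1


# One active plus one standby NameNode, with three JournalNodes
print(namenode_failures_tolerated(2), journalnode_failures_tolerated(3))

# Three NameNodes with five JournalNodes
print(namenode_failures_tolerated(3), journalnode_failures_tolerated(5))
```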

4. Shell Script Rewriting

Hadoop provides various shell commands that interact directly with HDFS and the other file systems Hadoop supports, such as WebHDFS, Local FS, and S3 FS. Much of Hadoop's functionality is controlled through shell scripts. In Hadoop 3.x these shell scripts have been rewritten, which fixes many long-standing bugs and adds some new features.

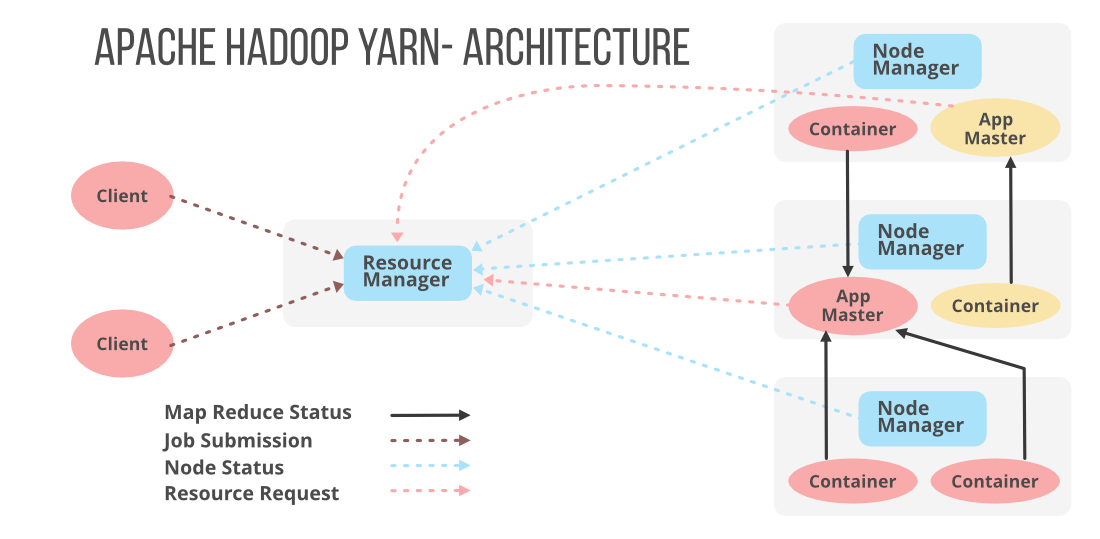

5. Timeline Service v.2 for YARN

The YARN Timeline Service stores and retrieves application information, both current and historical. Timeline Service v.2 is important for improving the reliability and scalability of Hadoop, and it enhances usability through flows and aggregation. Timeline Service v.1 is limited to a single instance of the reader/writer and storage architecture, which cannot be scaled further.

Timeline Service v.2 uses a distributed writer architecture in which data reads and writes are separated. Distributed collectors are provided, one for every YARN (Yet Another Resource Negotiator) application. Timeline Service v.2 uses HBase for storage, which can scale to a massive size while providing good response times for reads and writes.

Timeline Service v.2 stores two major types of information:

A. Generic information about completed applications

- user information

- queue name

- count of attempts made per application

- information about the containers run for each attempt of an application

B. Per-framework information about running and completed applications

- count of Map and Reduce tasks

- counters

- information published by the developer to the Timeline Server through the Timeline client
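
To make the two categories easier to picture, they can be modelled as two small record types. This is only an illustration of the lists above: the class names, field names, and sample values are hypothetical and do not correspond to the Timeline Service's real data model or API.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class GenericAppInfo:
    """Category A: generic information about a completed application."""
    user: str
    queue: str
    attempt_count: int
    # Maps each attempt id to the containers that ran for that attempt.
    containers_per_attempt: Dict[str, List[str]] = field(default_factory=dict)


@dataclass
class FrameworkInfo:
    """Category B: per-framework information (MapReduce used as the example)."""
    map_tasks: int
    reduce_tasks: int
    counters: Dict[str, int] = field(default_factory=dict)
    # Free-form information the developer publishes through the client.
    custom_events: List[str] = field(default_factory=list)


generic = GenericAppInfo(
    user="alice",
    queue="default",
    attempt_count=1,
    containers_per_attempt={"attempt_1": ["container_1", "container_2"]},
)
framework = FrameworkInfo(map_tasks=10, reduce_tasks=2,
                          counters={"RECORDS_READ": 1000})
```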

6. Filesystem Connector Support

Hadoop 3.x now supports Azure Data Lake and the Aliyun Object Storage System as additional Hadoop-compatible filesystem options.

7. Default Ports of Multiple Services Have Been Changed

In previous versions of Hadoop, the default ports of several services fell within the Linux ephemeral port range (32768-61000). With this configuration, a service could sometimes fail to bind to its port because of a conflict with another application. To overcome this problem, Hadoop 3.x moved the conflicting ports out of the Linux ephemeral port range and assigned new ports, as shown below.

// The newly assigned ports

Namenode Ports: 50470 -> 9871, 50070 -> 9870, 8020 -> 9820

Datanode Ports: 50020 -> 9867, 50010 -> 9866, 50475 -> 9865, 50075 -> 9864

Secondary NN Ports: 50091 -> 9869, 50090 -> 9868
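
As a quick sanity check, the mapping above can be written down as data and tested against the ephemeral range quoted in this section. This is a small sketch: the dictionary layout and helper name are chosen only for illustration, and the range bounds are the ones given above.

```python
# Linux ephemeral port range as quoted in this article (inclusive bounds).
EPHEMERAL_RANGE = range(32768, 61001)

# Old default port -> new default port, grouped by service.
PORT_CHANGES = {
    "NameNode": {50470: 9871, 50070: 9870, 8020: 9820},
    "DataNode": {50020: 9867, 50010: 9866, 50475: 9865, 50075: 9864},
    "Secondary NameNode": {50091: 9869, 50090: 9868},
}


def in_ephemeral_range(port: int) -> bool:
    """True if the port could collide with an OS-assigned ephemeral port."""
    return port in EPHEMERAL_RANGE


new_ports = [new for mapping in PORT_CHANGES.values() for new in mapping.values()]
old_in_range = [old for mapping in PORT_CHANGES.values()
                for old in mapping if in_ephemeral_range(old)]

print("old ports inside the ephemeral range:", sorted(old_in_range))
print("any new port inside the range:", any(map(in_ephemeral_range, new_ports)))
```

Every old port in the list except 8020 lies inside the range, and none of the new ports does.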

8. Intra-Datanode Balancer

DataNodes provide storage in the Hadoop cluster, and a single DataNode manages multiple disks. During normal write operations the disks are filled evenly, but adding or removing disks can cause significant skew within a DataNode. The existing HDFS balancer cannot handle this, because it concerns itself with inter-DataNode, not intra-DataNode, skew. The new intra-DataNode balancing feature handles this situation and is invoked through the hdfs diskbalancer CLI.
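
The kind of skew described here can be illustrated with a toy calculation. The sketch below is not the disk balancer's actual algorithm: it simply assumes that skew can be measured as each disk's utilization minus the utilization of the DataNode as a whole, and the function name and disk sizes are hypothetical.

```python
from typing import List


def disk_skew(used: List[float], capacity: List[float]) -> List[float]:
    """Return each disk's utilization minus the node-wide utilization."""
    node_utilization = sum(used) / sum(capacity)
    return [u / c - node_utilization for u, c in zip(used, capacity)]


# Two disks that filled evenly, plus a freshly added empty disk.
used = [900.0, 900.0, 0.0]
capacity = [1000.0, 1000.0, 1000.0]

skew = disk_skew(used, capacity)
worst = max(abs(s) for s in skew)

for index, value in enumerate(skew):
    print(f"disk {index}: {value:+.2f}")
print(f"largest deviation: {worst:.2f}")
```

A positive value means the disk is fuller than the node average, so data would need to move off it. The new empty disk shows the largest deviation, which is the situation the intra-DataNode balancer is meant to correct.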

9. Shaded Client Jars

The new hadoop-client-api and hadoop-client-runtime artifacts available in Hadoop 3.x shade Hadoop's dependencies into a single jar. The hadoop-client-api artifact has compile scope, while hadoop-client-runtime has runtime scope. Both contain the third-party dependencies provided by hadoop-client. Developers can now bundle all the dependencies in a single jar and test it for version conflicts. This keeps Hadoop's dependencies from leaking onto the application's classpath.

10. Task Heap and Daemon Management

In Hadoop 3.x, there are new ways to configure the heap size of Hadoop daemons. Auto-tuning based on the memory size of the host is now possible. HADOOP_HEAPSIZE is deprecated; developers can use the HADOOP_HEAPSIZE_MAX and HADOOP_HEAPSIZE_MIN variables instead. The internal variable JAVA_HEAP_MAX has also been removed. Default heap sizes have been removed as well, which lets the JVM (Java Virtual Machine) auto-tune. If you want the older default, enable it by configuring HADOOP_HEAPSIZE_MAX in the hadoop-env.sh file.
