Difference between Correlation and Regression

Last Updated: 26 Nov, 2023

In data analysis and statistics, two techniques play an important role: correlation and regression. Both help us understand the relationship between variables, make predictions, and generate useful insights from data. They are essential tools for data analysts and researchers across many fields. Let’s study the concepts of correlation and regression and explore their significance in data analysis.

Correlation

Correlation is a statistical technique used to describe the strength and direction of the relationship between two or more variables. It assesses how changes in one variable are associated with changes in another.

Significance of Correlation

Correlation analysis is central to understanding how variables are linked, whether they move together or in opposite directions. It helps with prediction by giving insight into one variable’s behaviour based on another’s value and by guiding the choice of predictive models. It also supports feature selection in machine learning: by revealing how variables are connected, it helps identify relevant or redundant features, which can make algorithms more efficient.
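As a minimal sketch of correlation-based feature screening, the snippet below builds a small synthetic dataset (the feature names and data are hypothetical, made up purely for illustration), computes each feature's Pearson correlation with the target using `np.corrcoef`, and ranks the features by absolute correlation. In practice this is usually only a first filter, since correlation captures linear association only.

```python
import numpy as np

# Hypothetical synthetic data: 200 samples, three candidate features.
rng = np.random.default_rng(0)
n = 200
feature_a = rng.normal(size=n)   # strongly related to the target
feature_b = rng.normal(size=n)   # weakly related to the target
feature_c = rng.normal(size=n)   # unrelated noise
target = 3 * feature_a + 0.5 * feature_b + rng.normal(size=n)

features = {"feature_a": feature_a, "feature_b": feature_b, "feature_c": feature_c}

# Pearson correlation of each feature with the target.
# np.corrcoef returns a 2x2 matrix; element [0, 1] is the coefficient.
scores = {name: np.corrcoef(values, target)[0, 1] for name, values in features.items()}

# Rank features by the strength (absolute value) of their linear association.
ranking = sorted(scores, key=lambda name: abs(scores[name]), reverse=True)

for name in ranking:
    print(f"{name}: r = {scores[name]:+.3f}")
```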

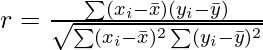

Pearson’s Correlation formula

In statistics, the Pearson correlation coefficient is defined by the formula:

$$r = \frac{\sum_{i=1}^{n}(x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n}(x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n}(y_i - \bar{y})^2}}$$

where,

- $r$: Correlation coefficient

- $x_i$: i^th value of the first dataset X

- $\bar{x}$: Mean of the first dataset X

- $y_i$: i^th value of the second dataset Y

- $\bar{y}$: Mean of the second dataset Y

- $n$: Number of values in each dataset

Condition: The datasets X and Y must have the same length.
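To make the formula concrete, here is a minimal from-scratch sketch that computes each term directly with NumPy. The helper name `pearson_r` is hypothetical (it is not a NumPy function); the sample data is the same as in the `np.corrcoef` example below, so the two results can be compared.

```python
import numpy as np

def pearson_r(x, y):
    """Pearson correlation coefficient computed directly from the formula."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.shape != y.shape:
        raise ValueError("X and Y must have the same length")
    dx = x - x.mean()   # deviations x_i - mean(x)
    dy = y - y.mean()   # deviations y_i - mean(y)
    numerator = np.sum(dx * dy)
    denominator = np.sqrt(np.sum(dx ** 2)) * np.sqrt(np.sum(dy ** 2))
    return numerator / denominator

data1 = [1, 2, 3, 4, 5]
data2 = [3, 5, 7, 8, 10]
r = pearson_r(data1, data2)
print(r)  # should agree with np.corrcoef(data1, data2)[0, 1]
```

For this data the deviation products sum to 17 and the squared deviations sum to 10 and 29.2, so r = 17 / sqrt(292), roughly 0.995.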

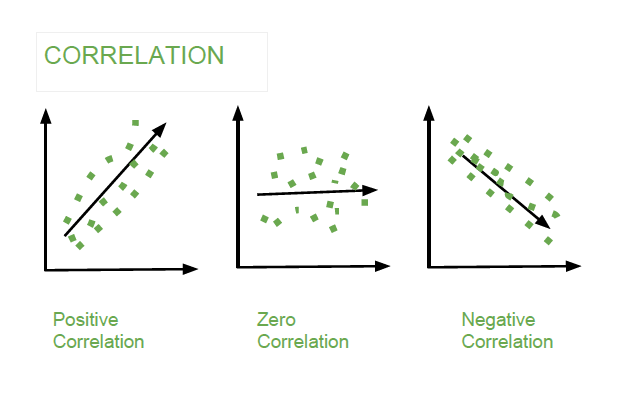

Correlation

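- A positive value indicates a positive correlation: the variables tend to increase together. A value of exactly +1 means the points lie perfectly on an upward-sloping line.

- A negative value indicates a negative correlation: one variable tends to rise as the other falls. A value of exactly -1 means the points lie perfectly on a downward-sloping line.

- A value close to zero indicates a weak or no linear correlation (the variables may still be related in a non-linear way).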
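A small sketch of the three cases, using made-up data chosen only for illustration: an increasing line, a decreasing line, and a curve (y = x squared) over a symmetric range. The last case shows that r near zero means no *linear* relationship, not no relationship at all.

```python
import numpy as np

x = np.array([-2, -1, 0, 1, 2])

# Positive: y increases with x (exact linear relationship, so r = +1).
r_pos = np.corrcoef(x, 3 * x + 1)[0, 1]

# Negative: y decreases as x increases (exact linear relationship, so r = -1).
r_neg = np.corrcoef(x, -2 * x + 5)[0, 1]

# Near zero: y = x**2 depends entirely on x, but on this symmetric range
# the relationship has no linear component, so r is zero.
r_zero = np.corrcoef(x, x ** 2)[0, 1]

print(f"positive: {r_pos:.3f}, negative: {r_neg:.3f}, near zero: {r_zero:.3f}")
```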

Correlation Analysis: Its primary goal is to tell researchers whether or not a relationship exists between two variables. More specifically, it produces a numerical value that summarizes how the variables are connected and how they move together.

Example

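```python
import numpy as np

# Two small sample datasets of equal length
data1 = np.array([1, 2, 3, 4, 5])
data2 = np.array([3, 5, 7, 8, 10])

# np.corrcoef returns the 2x2 correlation matrix;
# element [0, 1] is the correlation between data1 and data2
correlation_coefficient = np.corrcoef(data1, data2)[0, 1]

print(f"Pearson Correlation Coefficient: {correlation_coefficient}")
```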

Output:

Pearson Correlation Coefficient: 0.9948497511671097

Interpretation: The code above calculates the correlation coefficient for the sample data. The np.corrcoef() function returns the correlation matrix of the two arrays, and the index [0, 1] extracts the correlation coefficient between data1 and data2. A value this close to +1 indicates a very strong positive linear relationship.

Regression

Regression analysis is a statistical technique that models the relationship between variables in order to understand and quantify how they interact. Its primary objective is to form an equation relating a dependent variable to one or more independent variables.

Significance of Regression

Regression is essential for making accurate predictions and well-informed decisions based on the relationships between variables. It goes beyond correlation by quantifying how much the dependent variable changes with the independent variables; combined with a suitable study design, this can support (though it does not by itself establish) reasoning about cause and effect. Businesses use regression insights to optimize strategies, scientists use them to test hypotheses, and industries use them to forecast trends. Its many applications across fields make it a crucial tool for making sense of complex data.

Simple Linear Regression: Simple linear regression is the fundamental building block of regression analysis. It models the relationship between two variables: the independent variable, which serves as the predictor, and the dependent variable, which represents the outcome being predicted. The equation establishes a linear relationship between these variables and is usually written as

y = mx + b

where,

- y, x: the dependent and independent variables, respectively

- m: the slope, which indicates the rate of change in y per unit change in x

- b: the y-intercept, the value of y when x = 0
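For simple linear regression, the least-squares estimates of m and b have a closed form: m = Σ(x_i - x̄)(y_i - ȳ) / Σ(x_i - x̄)² and b = ȳ - m·x̄. The sketch below applies these formulas with NumPy to the same small dataset as the correlation example. The helper `fit_line` is a hypothetical name for illustration; this is a from-scratch demonstration, not how scikit-learn is implemented internally.

```python
import numpy as np

def fit_line(x, y):
    """Least-squares slope m and intercept b for y = m*x + b."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx = x - x.mean()
    dy = y - y.mean()
    # Sum of cross-deviations over sum of squared x-deviations
    # (equivalently, covariance of x and y divided by variance of x).
    m = np.sum(dx * dy) / np.sum(dx ** 2)
    # The least-squares line always passes through (mean of x, mean of y).
    b = y.mean() - m * x.mean()
    return m, b

x = [1, 2, 3, 4, 5]
y = [3, 5, 7, 8, 10]
m, b = fit_line(x, y)
print(f"slope m = {m:.3f}, intercept b = {b:.3f}")
```

Here the sums are 17 and 10, so m = 1.7 and b = 6.6 - 1.7 × 3 = 1.5.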

Regression Analysis: Regression analysis identifies the functional relationship between variables so that unknown values can be estimated and future outcomes forecast. Its main goal is to estimate the value of the random (dependent) variable from the values of the known or fixed (independent) variables. In simple linear regression, the fitted model is the line that best fits the data points, usually in the least-squares sense.
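Once the line is fitted, estimating an unknown value amounts to plugging a new x into the equation. A minimal sketch using NumPy's `np.polyfit` (a degree-1 fit is a least-squares straight line) and `np.polyval`; the new x values are arbitrary choices for illustration.

```python
import numpy as np

x = np.array([1, 2, 3, 4, 5], dtype=float)
y = np.array([3, 5, 7, 8, 10], dtype=float)

# Degree-1 polynomial fit = least-squares straight line.
# np.polyfit returns coefficients from the highest degree down: [slope, intercept].
slope, intercept = np.polyfit(x, y, 1)

# Forecast y for x values that were not in the original data.
x_new = np.array([6.0, 7.5])
y_pred = np.polyval([slope, intercept], x_new)

print("slope:", slope, "intercept:", intercept)
print("predictions:", y_pred)
```

Keep in mind that predictions far outside the range of the observed x values (extrapolation) rely on the linear pattern continuing, which the data itself cannot confirm.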

Example:

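```python
import numpy as np
from sklearn.linear_model import LinearRegression
import matplotlib.pyplot as plt  # used for the plot further below

# Fix the random seed so the results are reproducible
np.random.seed(42)

# 50 random x values in [0, 10) and y = 2x + 1 plus Gaussian noise
x = np.random.rand(50) * 10
y = 2 * x + 1 + np.random.randn(50) * 2

# scikit-learn expects a 2D feature array of shape (n_samples, n_features)
x_reshaped = x.reshape(-1, 1)

# Fit the model by ordinary least squares
model = LinearRegression()
model.fit(x_reshaped, y)

# Predicted y values on the fitted regression line
predicted_y = model.predict(x_reshaped)

print("Slope:", model.coef_[0])
print("Intercept:", model.intercept_)
```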

Output:

Slope: 1.9553132007706207

Intercept: 1.1933785489377744

Interpretation: Here, we generate 50 random x values and compute y as 2x + 1 plus random noise. Fitting scikit-learn's LinearRegression model to this data chooses the slope and intercept that best fit it (by least squares), and the estimates come out close to the true values 2 and 1.

- model.coef_ stores the slope of the regression line (one coefficient per feature, so [0] selects the only one here).

- model.intercept_ stores the y-intercept of the line.

We can also plot the data together with the fitted regression line:

```python
# Continues from the previous block (uses x, y, predicted_y and plt)
plt.scatter(x, y, label="Actual Data")
plt.plot(x, predicted_y, color='red', label="Regression Line")
plt.xlabel("X")
plt.ylabel("Y")
plt.legend()
plt.title("Simple Linear Regression")
plt.show()
```

Output:

[Figure: Simple Linear Regression, a scatter plot of the actual data with the fitted regression line overlaid]

Interpretation: Here, matplotlib is used to draw a scatter plot of the actual data points and overlay the predicted regression line. The plot is labeled and titled for clarity, then displayed with plt.show(). This visualization shows how well the regression line fits the data points.

Difference between Correlation and Regression

The differences between correlation and regression are as follows:

| Basis | Correlation | Regression |
|---|---|---|
| 1. Definition | Correlation is a statistical measure of the association, or co-relationship, between two or more variables. | Regression describes how a dependent variable is numerically related to one or more independent variables. |
| 2. Range | Correlation coefficients range from -1.00 to +1.00. | Regression coefficients (slope and intercept) can take any positive or negative value. |
| 3. Dependent and Independent Variables | No distinction is made between dependent and independent variables. | The variables play different roles: one is independent and the other is dependent. |
| 4. Aim | To find a numerical value that expresses the relationship between variables. | To estimate the values of a random variable based on the values of fixed variables. |
| 5. Response to Change of Scale and Origin | The coefficient is independent of both a change of scale and a shift of origin. | The coefficient depends on a change of scale but is independent of a shift of origin. |
| 6. Nature | The coefficient is mutual and symmetric: the correlation of x with y equals that of y with x. | The coefficient is not symmetric: the slope of y on x generally differs from the slope of x on y. |
| 7. Coefficient | The correlation coefficient is a relative (unit-free) measure. | The regression coefficient is an absolute figure, expressed in units of y per unit of x. |
| 8. Variables | Both x and y are random variables. | x is a fixed variable and y is a random variable. At times, both may be random variables. |
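Rows 5 and 6 of the table can be checked numerically. The sketch below uses synthetic data (an assumption for illustration only) and two hypothetical helpers, `slope` and `corr`, built on `np.polyfit` and `np.corrcoef`. It shows that correlation is symmetric while the two regression slopes differ (their product equals r²), and that multiplying x by a positive constant leaves r unchanged but rescales the slope, while shifting y changes neither.

```python
import numpy as np

rng = np.random.default_rng(1)
x = rng.normal(50, 10, size=100)
y = 0.5 * x + rng.normal(0, 5, size=100)

def slope(dep, indep):
    """Least-squares slope of dep regressed on indep (hypothetical helper)."""
    return np.polyfit(indep, dep, 1)[0]

def corr(a, b):
    """Pearson correlation coefficient (hypothetical helper)."""
    return np.corrcoef(a, b)[0, 1]

# Row 6: correlation is symmetric, regression slopes are not.
print("corr(x, y) =", corr(x, y), " corr(y, x) =", corr(y, x))
print("slope of y on x =", slope(y, x), " slope of x on y =", slope(x, y))
print("product of slopes =", slope(y, x) * slope(x, y), " r^2 =", corr(x, y) ** 2)

# Row 5: change the scale of x (e.g. new units) and the origin of y.
x_scaled = 10 * x
y_shifted = y + 100
print("corr after scaling x and shifting y =", corr(x_scaled, y_shifted))
print("slope of y on x after scaling x =", slope(y, x_scaled))
print("slope of y on x after shifting y =", slope(y_shifted, x))
```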

Similarities between Correlation and Regression

- Measure Relationships: Correlation and regression are both used to study the relationship between variables.

- Linear Relationships: Both techniques are most commonly used to assess linear relationships between variables.

- Strength and Direction: Both provide information about the strength and direction of the relationship between variables.

- R-Squared Interpretation: In regression, the coefficient of determination (R²) gives the proportion of the variance in the dependent variable that is explained by the independent variable. Similarly, the square of the correlation coefficient (r²) gives the proportion of variance the two variables share; in simple linear regression, R² equals r².

- Interpretation of Slope: The slope coefficient in linear regression shows how much the dependent variable changes when the independent variable increases by one unit. The slope is closely tied to correlation: it equals r multiplied by the ratio of the standard deviations of y and x, so the two always have the same sign.

- Statistical Techniques: Both techniques are part of the statistical analysis toolkit used to explore and understand relationships within datasets.
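The R-squared and slope links described above can be verified with a short NumPy sketch. The data is synthetic and only mirrors the spirit of the regression example (it uses NumPy's `default_rng` rather than `np.random.seed`, so the numbers differ from the output shown earlier). R² is computed from its definition, 1 - SS_res / SS_tot.

```python
import numpy as np

rng = np.random.default_rng(42)
x = rng.random(50) * 10
y = 2 * x + 1 + rng.normal(0, 2, size=50)

# Least-squares line (degree-1 polynomial): returns [slope, intercept].
slope, intercept = np.polyfit(x, y, 1)
y_hat = slope * x + intercept

# Coefficient of determination: share of the variance in y explained by the line.
ss_res = np.sum((y - y_hat) ** 2)
ss_tot = np.sum((y - y.mean()) ** 2)
r_squared = 1 - ss_res / ss_tot

# Pearson correlation between x and y.
r = np.corrcoef(x, y)[0, 1]

# The slope expressed through r and the standard deviations of y and x.
slope_from_r = r * y.std() / x.std()

print("r^2 =", r ** 2, " R^2 =", r_squared)
print("slope =", slope, " r * s_y / s_x =", slope_from_r)
```

Both pairs of printed values should agree up to floating-point rounding. The identities hold for simple linear regression with an intercept; with several predictors, R² is no longer the square of a single pairwise correlation.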

Conclusion

In summary, although correlation and regression are both important statistical methods for examining relationships between variables, they serve different purposes and yield different results. Correlation makes it easier to understand the degree of covariation between variables by evaluating the direction and strength of their linear relationship. However, it does not imply a cause-and-effect relationship, nor does it make predictions. Regression, on the other hand, goes beyond correlation by modelling the relationship between variables, allowing one variable to be predicted from the other.
