Concept of Cache Memory Design

Last Updated : 12 Jun, 2020

Cache memory plays a significant role in reducing the processing time of a program by providing swift access to data and instructions. Cache memory is small and fast, while the main memory is large and slow.

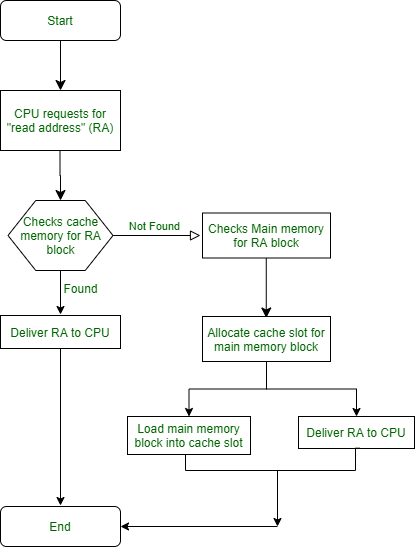

The concept of caching is explained below.

Caching Principle :

The intent of cache memory is to provide the fastest possible access to data without compromising on the size and price of the memory. A processor attempting to read a byte of data first looks in the cache memory. If the byte is not in the cache memory, the processor searches for it in the main memory. Once the byte is found in the main memory, the block containing it, which holds a fixed number of bytes, is read into the cache memory and the requested byte is then passed on to the processor. Because the block read into the cache also holds neighbouring bytes that the process is likely to need soon, the probability of finding subsequent bytes in the cache memory increases. This phenomenon is called Locality of Reference or the Principle of Locality.
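The read path described above can be sketched in a few lines of Python. This is a minimal illustration, not a model of real hardware: the class name `SimpleCache`, the 4-byte `BLOCK_SIZE`, and the 16-byte main memory are assumptions chosen for the example, and the toy cache never fills up, so no replacement is needed.

```python
BLOCK_SIZE = 4  # bytes per block (assumed value, for illustration only)


class SimpleCache:
    """Toy cache that stores whole blocks copied from main memory."""

    def __init__(self, main_memory):
        self.main_memory = main_memory
        self.blocks = {}  # block number -> bytes of that block
        self.hits = 0
        self.misses = 0

    def read_byte(self, address):
        block_number = address // BLOCK_SIZE
        offset = address % BLOCK_SIZE
        if block_number in self.blocks:
            self.hits += 1
        else:
            # Miss: copy the whole block containing the byte into the cache.
            self.misses += 1
            start = block_number * BLOCK_SIZE
            self.blocks[block_number] = self.main_memory[start:start + BLOCK_SIZE]
        return self.blocks[block_number][offset]


main_memory = bytes(range(16))
cache = SimpleCache(main_memory)
values = [cache.read_byte(address) for address in range(8)]  # sequential reads
print(values)                    # [0, 1, 2, 3, 4, 5, 6, 7]
print(cache.hits, cache.misses)  # 6 2
```

Only the first byte of each block misses; the three neighbouring bytes are already in the cache when they are requested, which is the effect of locality of reference.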

Cache Memory Design :

- Cache Size and Block Size –

To keep up with the processor speed, cache memories are kept very small so that it takes less time to find and fetch data. They are usually divided into multiple layers, depending on the architecture. The cache size should accommodate whole blocks, whose size is in turn determined by the processor's architecture. When the block size increases, the hit ratio increases initially because of the principle of locality.

Increasing the block size further, and so bringing more data into the cache with each block, eventually decreases the hit ratio. For a fixed cache size, larger blocks mean that fewer blocks fit in the cache, and beyond a certain point the probability of using the new data brought in by a block is less than the probability of reusing the data that is flushed out to make room for it.
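The trade-off between block size and hit ratio can be explored with a small simulation. The sketch below assumes a fixed 64-byte cache in which any block can occupy any slot and the least recently used block is evicted; the function name `hit_ratio` and both access traces are made up for illustration and are not measurements of real hardware.

```python
from collections import OrderedDict


def hit_ratio(trace, cache_bytes, block_bytes):
    """Return hits / total accesses for a list of byte addresses."""
    num_slots = cache_bytes // block_bytes  # fewer slots as blocks grow
    slots = OrderedDict()                   # block numbers, least recently used first
    hits = 0
    for address in trace:
        block = address // block_bytes
        if block in slots:
            hits += 1
            slots.move_to_end(block)        # mark as most recently used
        else:
            if len(slots) >= num_slots:
                slots.popitem(last=False)   # evict the least recently used block
            slots[block] = None
    return hits / len(trace)


sequential = list(range(64))   # strong spatial locality
ping_pong = [0, 1000] * 8      # two addresses that are far apart

for block_bytes in (4, 16, 32, 64):
    print(block_bytes,
          hit_ratio(sequential, 64, block_bytes),
          hit_ratio(ping_pong, 64, block_bytes))
# 4 0.75 0.875
# 16 0.9375 0.875
# 32 0.96875 0.875
# 64 0.984375 0.0
```

For the sequential trace, larger blocks keep raising the hit ratio. For the ping-pong trace, the largest block size leaves room for only one block, so each access flushes out the data that the next access needs and the hit ratio drops to zero.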

- Mapping function –

When a block of data is read from the main memory, the mapping function decides which location in the cache is occupied by that block. If the cache is full, an existing cache block must be replaced by the incoming main memory block, and this gives rise to complexities. Which cache block should be replaced?

Care should be taken not to replace a cache block that the processor is likely to reference again soon. The replacement algorithm depends directly on the mapping function: the more flexible the mapping function, the more freedom the replacement algorithm has to maximize the hit ratio. However, greater flexibility increases the complexity of the circuitry that searches the cache memory to determine whether a block is in the cache.
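As one concrete illustration, the sketch below uses direct mapping, the least flexible of the common mapping functions, in which every main memory block has exactly one possible cache line. The article does not name a specific mapping function, so the choice of direct mapping, the function name `split_address`, and the sizes used are assumptions made for this example.

```python
def split_address(address, block_size, num_lines):
    """Split a byte address into (tag, line, offset) under direct mapping."""
    block_number = address // block_size
    offset = address % block_size      # position of the byte inside its block
    line = block_number % num_lines    # the only cache line this block may use
    tag = block_number // num_lines    # identifies which block occupies the line
    return tag, line, offset


# Assumed geometry: 16-byte blocks, 8 cache lines.
print(split_address(0x1234, 16, 8))  # (36, 3, 4)

# Two different blocks that compete for the same line:
print(split_address(0, 16, 8))       # (0, 0, 0)
print(split_address(128, 16, 8))     # (1, 0, 0)
```

Under this scheme the lookup is simple, because only one line has to be checked, but the blocks at addresses 0 and 128 evict each other even if the rest of the cache is empty. More flexible mappings avoid such conflicts at the cost of searching more lines.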

- Replacement Algorithm –

It decides which block in the cache is replaced by the block read in from main memory when the cache is full, subject to the constraints imposed by the mapping function. Ideally, the block that will not be referenced in the near future should be replaced, but it is generally not possible to know in advance which block that is. Hence, the block in the cache that has not been referenced for the longest time is replaced by the new block read in from main memory. This is called the Least-Recently-Used (LRU) algorithm.
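A minimal software sketch of the Least-Recently-Used idea is shown below. It assumes a cache in which any block may occupy any slot; the class name `LRUCache` and the capacity of three blocks are chosen only for illustration, and hardware caches typically approximate LRU rather than tracking an exact order like this.

```python
from collections import OrderedDict


class LRUCache:
    """Toy cache of block numbers with Least-Recently-Used replacement."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.blocks = OrderedDict()  # least recently used block comes first

    def access(self, block_number):
        """Reference a block; return the evicted block number, or None."""
        if block_number in self.blocks:
            self.blocks.move_to_end(block_number)  # now the most recently used
            return None
        evicted = None
        if len(self.blocks) >= self.capacity:
            evicted, _ = self.blocks.popitem(last=False)  # drop the oldest
        self.blocks[block_number] = True
        return evicted


cache = LRUCache(capacity=3)
evictions = [cache.access(block) for block in [1, 2, 3, 1, 4, 5]]
print(evictions)  # [None, None, None, None, 2, 3]
```

Block 1 survives the arrival of blocks 4 and 5 because it was referenced again just before the cache overflowed, whereas blocks 2 and 3 had gone unused the longest.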

- Write Policy –

This is one of the most important aspects of memory caching. A cache block that has been modified and is chosen to be replaced by a new block read in from main memory must first be written back to the main memory. This is to prevent loss of data. A decision must be made about when the cache memory block is written back to the main memory.

The two available options are as follows:

1. Place the cache memory block in the main memory when it is chosen to be replaced by a new read-in block from main memory.

2. Place the cache memory block in the main memory after every update to the block.

The write policy decides when the cache block is written back to the main memory. If the block is written only when it is replaced (option 1, commonly called write-back), then in a multiprocessor system the copy in main memory can be obsolete, because the block has been changed in the cache but not yet written back. If the block is written after every update (option 2, commonly called write-through), a large number of write operations are performed on the main memory.
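The difference in main memory traffic between the two options can be illustrated with a toy model of a single cache block. The class `WritePolicyCache`, the helper `count_memory_writes`, and the figure of ten updates are hypothetical names and values used only for this sketch.

```python
class WritePolicyCache:
    """Single-block toy cache that counts writes reaching main memory."""

    def __init__(self, write_through):
        self.write_through = write_through
        self.dirty = False       # True if the block changed since the last write
        self.memory_writes = 0

    def update(self):
        if self.write_through:
            self.memory_writes += 1  # write to main memory after every update
        else:
            self.dirty = True        # defer the write until replacement

    def replace(self):
        if self.dirty:
            self.memory_writes += 1  # write the modified block back once
            self.dirty = False


def count_memory_writes(write_through, updates):
    cache = WritePolicyCache(write_through)
    for _ in range(updates):
        cache.update()
    cache.replace()
    return cache.memory_writes


print(count_memory_writes(write_through=False, updates=10))  # 1
print(count_memory_writes(write_through=True, updates=10))   # 10
```

Writing only on replacement needs a single memory write for ten updates, but main memory holds an out-of-date copy until that write happens. Writing after every update keeps main memory current at the cost of ten writes.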

Examples :

- What is Hit Ratio ?

Number of hits (successful searches in cache memory) / Total number of attempts (all searches).

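- What is LRU algorithm ?

Least-Recently-Used: when the cache is full, the block that has not been referenced for the longest time is replaced.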

- What are the different cache memory layers ?

Caches are commonly organized into layers named L1, L2 and L3, with L1 the smallest and fastest and closest to the processor.

- How to check the different layers of cache memory in my Windows PC ?

Open Task Manager -> Performance -> CPU (Examples: L1, L2, L3)
