Clustering in Julia

Last Updated: 29 Dec, 2022

Clustering is a very commonly used method in unsupervised learning, and Julia supports it through packages such as Clustering.jl. In this method, data points are grouped so that points with similar feature values end up in the same cluster. The number of clusters is chosen based on the size and complexity of the dataset. The points in a cluster share certain similarities, and how strong those similarities are depends on which and how many features are selected before training starts.
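
Similarity between data points is usually measured as a distance between their feature vectors. The sketch below assumes Euclidean distance (other metrics are also common) and a small made-up dataset with one column per data point; `pairwise_distances` is a hypothetical helper, not part of any package.

```julia
using LinearAlgebra  # for norm

# Hypothetical toy data: each column is one data point, each row one feature.
X = [1.0 1.5 8.0 9.0;
     2.0 1.8 8.0 9.5]

# Euclidean distance between every pair of columns; a smaller value means "more similar".
function pairwise_distances(X::AbstractMatrix)
    n = size(X, 2)
    D = zeros(n, n)
    for i in 1:n, j in 1:n
        D[i, j] = norm(X[:, i] - X[:, j])
    end
    return D
end

D = pairwise_distances(X)
```

Points 1 and 2 are much closer to each other than to points 3 and 4, so a clustering method would be expected to place them in the same cluster.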

Applications of Clustering in Julia

- Marketing: Clustering is useful for finding the potential buyers of a product. It reveals patterns in the market, such as groups of customers with similar buying behavior, that help a company make better decisions for the future.

- Medical Science: Science advances quickly and new discoveries are made frequently. Clustering helps identify and classify new species of micro-organisms: grouping an organism with others that share its features indicates the family it belongs to.

- Websites: Many websites use clustering to promote themselves by finding the users for whom their content is likely to be relevant. Clustering also informs website design by revealing which types of design attract users.

Types of Clustering

Clustering can be divided into two major types:

Hard Clustering: In this type of clustering, each data point belongs to exactly one cluster; it either belongs to a cluster completely or not at all. No probabilities are used to assign a data point to a cluster. The most common example of hard clustering is K-means clustering.
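
A hard assignment can be sketched as picking, for each point, the single nearest centroid. The centroids below are made up for illustration, Euclidean distance is assumed, and `hard_assign` is a hypothetical helper.

```julia
using LinearAlgebra  # for norm

# Hard clustering: each point (column of X) gets exactly one label,
# the index of its nearest centroid (column of centers).
function hard_assign(X::AbstractMatrix, centers::AbstractMatrix)
    n, k = size(X, 2), size(centers, 2)
    labels = Vector{Int}(undef, n)
    for i in 1:n
        dists = [norm(X[:, i] - centers[:, j]) for j in 1:k]
        labels[i] = argmin(dists)   # a single cluster, no probabilities involved
    end
    return labels
end

X = [1.0 1.5 8.0 9.0;
     2.0 1.8 8.0 9.5]
centers = [1.2 8.5;
           1.9 8.7]
labels = hard_assign(X, centers)
```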

Soft Clustering: In this type of clustering, a data point can belong to several or all clusters, each with some probability (or degree of membership). A data point is never placed into a single cluster completely. This type of clustering is used in fuzzy logic and soft computing; fuzzy c-means is a common example.
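
For contrast, a soft assignment gives every point a degree of membership in every cluster. The sketch below assumes the fuzzy c-means membership rule, u[i, j] = 1 / sum over l of (d[i, j] / d[i, l])^(2 / (m - 1)), where d[i, j] is the distance from point i to centroid j and m > 1 is the "fuzzifier" (m = 2 is an assumed default). The centroids are kept fixed for simplicity, and `soft_memberships` and the data are hypothetical.

```julia
using LinearAlgebra  # for norm

# Soft clustering: fuzzy c-means style membership degrees for fixed centroids.
# Row i of U holds point i's membership in each cluster; each row sums to 1.
function soft_memberships(X::AbstractMatrix, centers::AbstractMatrix; m::Real = 2.0)
    n, k = size(X, 2), size(centers, 2)
    U = zeros(n, k)
    for i in 1:n
        d = [norm(X[:, i] - centers[:, j]) for j in 1:k]
        z = findfirst(iszero, d)
        if z !== nothing
            U[i, z] = 1.0   # point sits exactly on a centroid: full membership there
            continue
        end
        for j in 1:k
            U[i, j] = 1.0 / sum((d[j] / d[l])^(2 / (m - 1)) for l in 1:k)
        end
    end
    return U
end

X = [1.0 1.5 8.0 9.0 5.0;
     2.0 1.8 8.0 9.5 5.0]
centers = [1.2 8.5;
           1.9 8.7]
U = soft_memberships(X, centers)
```

The fifth point lies between the two groups and receives roughly equal membership in both clusters, whereas a hard method would force it into just one.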

K-means Clustering in Julia

K-means clustering is an unsupervised learning method. It is iterative: each data point is assigned to exactly one of K predefined clusters based on the similarity of its features. The number of clusters K is set by the user before training. Along with the assignments, the method computes the centroid (mean point) of each cluster.
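
The centroid of a cluster is the mean of the points assigned to it, and K-means looks for assignments that make the within-cluster sum of squared distances (WCSS) as small as possible. A minimal sketch of both quantities, assuming one data point per column and no empty clusters; the helper names and data are hypothetical.

```julia
using Statistics  # for mean

# Centroid of cluster j = mean of the columns of X labelled j.
# Assumes every cluster has at least one member (an empty one would give NaN).
function cluster_centroids(X::AbstractMatrix, labels::AbstractVector{<:Integer}, k::Integer)
    return hcat([vec(mean(X[:, labels .== j], dims = 2)) for j in 1:k]...)
end

# Within-cluster sum of squared distances: the quantity K-means tries to minimize.
function wcss(X::AbstractMatrix, labels::AbstractVector{<:Integer}, centers::AbstractMatrix)
    return sum(sum(abs2, X[:, i] - centers[:, labels[i]]) for i in 1:size(X, 2))
end

X = [1.0 3.0 8.0 10.0;
     2.0 2.0 8.0  8.0]
labels = [1, 1, 2, 2]
C = cluster_centroids(X, labels, 2)
J = wcss(X, labels, C)
```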

Algorithm:

- Specify the number of clusters (K): As an example, take K = 2 clusters and 6 data points.

- Randomly assign each data point to a cluster: In the example, each point is randomly colored red or blue, which defines the two initial clusters.

- Calculate the cluster centroids: The centroid of each cluster is the mean of the points currently assigned to it.

- Reassign every data point to the cluster with the nearest centroid: For example, a blue point that lies closer to the red centroid than to the blue centroid moves to the red cluster.

- Re-calculate the centroid of every cluster: After the reassignment, each centroid shifts slightly.

- Repeat the last two steps until no data point changes cluster (or a maximum number of iterations is reached).
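
The steps above can be sketched in plain Julia as follows. This is an illustrative implementation of Lloyd's algorithm with random initial labels (matching the second step); it is not how Clustering.jl's `kmeans` works internally, and library implementations commonly use k-means++ seeding instead. `simple_kmeans` and the toy data are hypothetical, and reseeding an empty cluster with a random data point is an assumption, since the steps above do not cover that case.

```julia
using LinearAlgebra, Random, Statistics

# From-scratch K-means sketch: X holds one data point per column, k is the number of clusters.
function simple_kmeans(X::AbstractMatrix, k::Integer; maxiter::Integer = 100,
                       rng::AbstractRNG = Random.default_rng())
    n = size(X, 2)
    labels = rand(rng, 1:k, n)                  # random initial assignment
    centers = zeros(size(X, 1), k)
    for _ in 1:maxiter
        # centroid of each cluster = mean of the points currently assigned to it
        for j in 1:k
            members = labels .== j
            if any(members)
                centers[:, j] = vec(mean(X[:, members], dims = 2))
            else
                centers[:, j] = X[:, rand(rng, 1:n)]   # reseed an empty cluster
            end
        end
        # move every point to the cluster with the nearest centroid
        new_labels = [argmin([norm(X[:, i] - centers[:, j]) for j in 1:k]) for i in 1:n]
        new_labels == labels && break           # stop when no point changes cluster
        labels = new_labels
    end
    return labels, centers
end

# Toy data matching the example: 6 points, 2 clusters.
X = [1.0 1.5 2.0 8.0 8.5 9.0;
     1.0 2.0 1.5 8.0 9.0 8.5]
labels, centers = simple_kmeans(X, 2; rng = MersenneTwister(1))
```

The cluster numbers themselves are arbitrary and may swap between runs; what matters is which points share a label.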

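Syntax: kmeans(X, k)

where,

X: the feature matrix, with one column per data point (d features × n data points)

k: the number of clusters

The function comes from the Clustering.jl package and returns a result object; for example, result.assignments holds the cluster label of each data point and result.centers holds the cluster centroids.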
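
Data is often stored with one row per sample, so it must be transposed before being passed to `kmeans`. A minimal sketch with made-up values, using `permutedims` from Base; the iris example does the same conversion with `collect(Matrix(...)')`.

```julia
# Hypothetical table: one row per sample, one column per feature (n × d).
samples = [5.1 3.5;
           4.9 3.0;
           6.3 3.3]

# kmeans expects the transpose: one column per sample (d × n).
X = permutedims(samples)

d, n = size(X)   # d = 2 features, n = 3 samples
```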

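```julia
# Load the datasets package, the Clustering.jl package and the Plots plotting package
using RDatasets, Clustering, Plots

iris = dataset("datasets", "iris");

# kmeans expects one column per data point, so turn the 150×4 feature table into a 4×150 matrix
features = collect(Matrix(iris[:, 1:4])');

# Group the flowers into 3 clusters (the initialization is random,
# so cluster labels can differ between runs)
result = kmeans(features, 3);

# Plot petal length against petal width, coloring each point by its cluster label
scatter(iris.PetalLength, iris.PetalWidth,
        marker_z = result.assignments,
        color = :lightrainbow, legend = false)

# Save the figure in the current working directory
savefig("iris.png")
```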

Output:

Similarity Aggregation Clustering

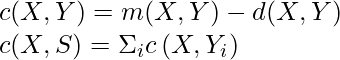

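This is another type of clustering in which every data point is compared with every other data point, one pair at a time. It is also known as the Condorcet method or relational clustering. For a pair of data points X and Y, two values are computed: m(X, Y), the number of features on which X and Y have the same value, and d(X, Y), the number of features on which their values differ. The score of placing X in a cluster S is

$$c(X, S) = \sum_{Y \in S,\; Y \neq X} \big( m(X, Y) - d(X, Y) \big)$$

where S is the cluster. X is assigned to the cluster with the largest c(X, S); if c(X, S) < 0 for every existing cluster, a new cluster is created for X. The global Condorcet criterion adds up this score over all data points, each taken in its own cluster S_X:

$$C = \sum_{X} c(X, S_X)$$

The first condition is used to create clusters. The second is the global Condorcet criterion, which measures the quality of the whole clustering. The process is iterative: the iterations continue until a stopping condition (such as a maximum number of iterations) is met or the global Condorcet criterion shows no further improvement.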
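
A single assignment pass of this method can be sketched as follows, assuming categorical features stored one row per data point. The sketch scores a candidate cluster S for a point X as c(X, S), the sum over the other members Y of S of m(X, Y) - d(X, Y), and opens a new cluster when every score is negative. The data and helper names are hypothetical, and a full implementation would repeat passes (moving points between clusters) until the global criterion stops improving.

```julia
# Hypothetical categorical records: each row is one data point, each column one feature.
records = ["red"  "small" "round";
           "red"  "small" "round";
           "red"  "large" "round";
           "blue" "large" "square";
           "blue" "large" "square"]

# m(X, Y): number of features with equal values; d(X, Y): number with different values.
agree(x, y)    = count(x .== y)
disagree(x, y) = count(x .!= y)

# c(X, S): sum of m - d between row i and every other row j in cluster S (a vector of row indices).
function condorcet(R, i, S)
    c = 0
    for j in S
        j == i && continue
        c += agree(R[i, :], R[j, :]) - disagree(R[i, :], R[j, :])
    end
    return c
end

# One greedy pass: put each point in the cluster with the largest c(X, S),
# or open a new cluster when c(X, S) < 0 for every existing cluster.
function condorcet_pass(R)
    clusters = Vector{Vector{Int}}()
    for i in 1:size(R, 1)
        scores = [condorcet(R, i, S) for S in clusters]
        if isempty(scores) || maximum(scores) < 0
            push!(clusters, [i])
        else
            push!(clusters[argmax(scores)], i)
        end
    end
    return clusters
end

# Global Condorcet criterion: sum of c(X, S_X) over every point in its own cluster.
global_criterion(R, clusters) = sum(condorcet(R, i, S) for S in clusters for i in S)

clusters = condorcet_pass(records)
score = global_criterion(records, clusters)
```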
