Averaged One-Dependence Estimators (AODE) is an ensemble classifier that averages the predictions of several one-dependence classifiers. It is a variation of the Naive Bayes classifier that relaxes Naive Bayes's assumption that all features are independent given the class, and it is widely used in machine learning because of its simplicity and effectiveness.

AODE uses Bayes' theorem to estimate the probability of each possible class for a given input data point. In each one-dependence classifier, every feature depends on the class and on one shared "super-parent" feature. AODE builds one such classifier per feature and averages their probability estimates to make a final prediction. This lets AODE capture some dependencies between features that Naive Bayes ignores, and it avoids having to select a single one-dependence model, while keeping the simplicity of the underlying model.
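To make "one one-dependence classifier per feature" concrete, the hypothetical helper below lists, for each choice of super-parent, the parents of every feature in that classifier's Bayesian network. It is only a sketch of the structure; no probabilities are involved.

```python
def spode_parents(n_features):
    """For each super-parent i, return a dict mapping feature j -> its parents.

    'Y' stands for the class variable; integers are feature indices.
    """
    models = []
    for i in range(n_features):
        parents = {}
        for j in range(n_features):
            # The super-parent depends only on the class; every other
            # feature depends on the class and on the super-parent.
            parents[j] = ('Y',) if j == i else ('Y', i)
        models.append(parents)
    return models


for i, parents in enumerate(spode_parents(3)):
    print(f"super-parent X{i}: {parents}")
```

With n features there are n such models, and AODE averages their estimates instead of choosing one of them.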

What is the use of Averaged One-Dependence Estimators (AODE)?

The main use of Averaged One-Dependence Estimators (AODE) is classification: predicting the class of a given data point. Because it averages several one-dependence classifiers, it is often more accurate than Naive Bayes or any single one-dependence classifier, which makes it a useful tool for machine learning applications where the goal is to assign data points to classes.

AODE is also cheap to train. Learning consists of counting how often feature values, and pairs of feature values, occur together with each class, in a single pass over the training data and with no search over model structures. This combination of simplicity and accuracy is the main reason it is used in practice.

Features of the AODE classifier

The AODE (Averaged One-Dependence Estimators) classifier is a probabilistic classifier that uses a collection of simple Bayesian networks to model the relationship between the features of a dataset and the class. Some key features of the AODE classifier include:

- It models the dependencies between features with one-dependence Bayesian networks: in each network, every feature depends on the class and on one shared super-parent feature, so predictions can take some of the interdependence between features into account.

- It estimates the probability of each class based on the training data and then uses these probabilities to make predictions on new data.

- It is a generative model: it estimates the joint distribution of the class and the features, so in principle it can also be used to generate new samples from that distribution.

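- It copes reasonably well with many features without overfitting, because it performs no model selection and each of its components estimates only low-order probabilities from counts. Its probability tables do, however, grow quadratically with the number of features.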

- It is relatively easy to implement. It works directly with categorical features; numeric features are usually discretized first.
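Because AODE's probability tables are indexed by discrete feature values, numeric features have to be discretized before training. Equal-width binning, sketched below with a hypothetical helper, is only one simple option; the discretization method and the number of bins are assumptions here, not part of AODE itself.

```python
def equal_width_bins(values, n_bins):
    """Map numeric values to bin indices 0..n_bins-1 using equal-width bins."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical: put everything into a single bin.
        return [0 for _ in values]
    width = (hi - lo) / n_bins
    # min(..., n_bins - 1) keeps the maximum value inside the last bin.
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


ages = [18, 25, 31, 47, 52, 60, 74]
print(equal_width_bins(ages, 3))  # [0, 0, 0, 1, 1, 2, 2]
```

The resulting bin indices can then be used as categorical feature values.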

Breaking down the Math behind AODE

The AODE classifier uses Bayesian networks to model the dependencies between different features in a dataset. In a Bayesian network, the nodes represent random variables and the edges represent the relationships between these variables. The AODE classifier uses this network to model the relationship between the features in a dataset and the target variable (i.e. the class that each data point belongs to).

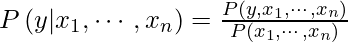

To make predictions with the AODE classifier, we first estimate the required probabilities from the training data by counting. For a new data point, these estimates give the joint probability of each class together with the point's feature values. Normalizing these joint probabilities over all classes (Bayes' theorem) gives the posterior probability that the data point belongs to each class, and the class with the highest posterior probability is chosen as the predicted class.
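The last step, normalizing joint estimates into posteriors and picking the most probable class, can be sketched as follows. The function name and the example numbers are hypothetical, and the uniform fallback for an all-zero input is an assumed design choice.

```python
def posterior_and_prediction(joint):
    """Turn unnormalized joint estimates P(y, x) into posteriors P(y | x).

    joint: dict mapping each class label to its estimated joint probability.
    Returns (posteriors, predicted_label).
    """
    total = sum(joint.values())
    if total == 0:
        # Assumed fallback: no class has any support, so use a uniform posterior.
        posteriors = {y: 1 / len(joint) for y in joint}
    else:
        posteriors = {y: p / total for y, p in joint.items()}
    # Dividing every score by the same total does not change their order,
    # so the argmax of the posteriors equals the argmax of the joint estimates.
    predicted = max(posteriors, key=posteriors.get)
    return posteriors, predicted


# Hypothetical joint estimates for a two-class problem.
posteriors, label = posterior_and_prediction({'spam': 0.03, 'ham': 0.01})
print(posteriors, label)
```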

In more mathematical terms, let’s say we have a dataset with n features, represented by the random variables X1, X2, …, Xn, and a target variable Y representing the class that each data point belongs to. The AODE classifier uses a Bayesian network to model the relationship between these variables, and estimates the probabilities of each class based on the training data.

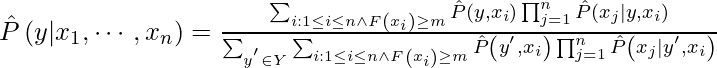

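To make a prediction for a new data point with features x1, x2, …, xn, AODE estimates the joint probability of each class y and the features x1, x2, …, xn by averaging over the one-dependence models, letting each feature value xi in turn act as the super-parent of all the other features:

$$\hat{P}(y, x_1, \ldots, x_n) = \frac{1}{|S|} \sum_{i \in S} \hat{P}(y, x_i) \prod_{j=1}^{n} \hat{P}(x_j \mid y, x_i), \qquad S = \{\, i : F(x_i) \ge m \,\}$$

Here F(xi) is the number of training examples in which feature i takes the value xi, and m is a small frequency threshold, so that values seen too rarely in the training data are not used as super-parents. The factor for j = i equals 1. If no feature value passes the threshold, AODE falls back to Naive Bayes.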
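A direct, unoptimized transcription of this estimate into Python, computing every probability from raw frequency counts, might look like this. It is a sketch under stated assumptions: discrete features, no smoothing, and a hypothetical function name aode_joint. It rescans the data for every estimate, which is fine for illustration but slow in practice.

```python
def aode_joint(dataset, x, y, m=1):
    """Estimate P(y, x) with the AODE formula from raw frequency counts.

    dataset: list of (features, label) pairs with discrete feature values.
    x: feature vector to score; y: candidate class; m: frequency threshold.
    Returns 0.0 when no value of x reaches the threshold (full AODE would
    fall back to Naive Bayes in that case).
    """
    N, n = len(dataset), len(x)
    total, used = 0.0, 0
    for i in range(n):
        # F(x_i): number of training examples where feature i equals x[i].
        if sum(1 for feats, _ in dataset if feats[i] == x[i]) < m:
            continue
        used += 1
        # Training examples matching both the class y and the super-parent value.
        rows = [feats for feats, label in dataset if label == y and feats[i] == x[i]]
        if not rows:
            continue  # P(y, x_i) = 0, so this super-parent contributes 0
        term = len(rows) / N  # P(y, x_i)
        for j in range(n):
            # P(x_j | y, x_i): fraction of those rows where feature j equals x[j].
            term *= sum(1 for feats in rows if feats[j] == x[j]) / len(rows)
        total += term
    return total / used if used else 0.0


xor_data = [([0, 0], 'A'), ([0, 1], 'B'), ([1, 0], 'B'), ([1, 1], 'A')]
for label in ('A', 'B'):
    print(label, aode_joint(xor_data, [0, 0], label))
# A 0.25
# B 0.0
```

Here the estimate for class A, 0.25, equals the observed frequency of ([0, 0], 'A') among the four training examples.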

How do Averaged One-Dependence Estimators (AODE) compare to other estimators?

Averaged One-Dependence Estimators (AODE) is a simple and effective machine learning algorithm that is often compared to other estimators, such as Naive Bayes and decision trees. Here are some ways in which AODE is compared to these other estimators:

- Compared to Naive Bayes: AODE is a variation of Naive Bayes that averages several one-dependence classifiers instead of assuming that all features are independent given the class. It is often more accurate than Naive Bayes, but it is also more expensive: it must store counts for every pair of feature values within each class, and classifying a data point takes time quadratic rather than linear in the number of features.

- Compared to Decision Trees: Both AODE and decision trees handle binary and multi-class classification. Decision trees work directly with numeric features and produce interpretable rules, whereas AODE needs categorical features (numeric ones are usually discretized first). On the other hand, AODE performs no search over tree structures, so training requires only a single counting pass over the data.

- Compared to other Ensemble Classifiers: AODE is an ensemble classifier, but unlike random forests or gradient boosting it does not build its members by random resampling or sequential fitting. Its members are fixed in advance (one one-dependence classifier per feature, each feature acting once as the super-parent), they are all estimated from the same frequency counts, and their probability estimates are simply averaged. This makes AODE simpler and cheaper to train than these ensembles, but it may be less accurate on some tasks.
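A rough way to see AODE's extra cost relative to Naive Bayes is to count the frequency tables each model stores. The helper table_sizes is hypothetical and assumes every feature has the same number of values v; real implementations may lay out their tables differently.

```python
def table_sizes(k, n, v):
    """Rough number of stored frequency counts (hypothetical accounting).

    k classes, n features, each feature with v distinct values.
    """
    # Naive Bayes: one count per class plus one per (class, feature, value).
    naive_bayes = k + k * n * v
    # AODE additionally counts (class, value of feature i, value of feature j)
    # for every unordered pair of features i < j.
    aode = naive_bayes + k * (n * (n - 1) // 2) * v * v
    return naive_bayes, aode


print(table_sizes(4, 4, 2))    # (36, 132)
print(table_sizes(2, 100, 5))  # (1002, 248502)
```

With 100 features the pairwise counts dominate. Classification time grows in a similar way: per class, Naive Bayes multiplies about n factors, while AODE multiplies about n × n.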

Overall, AODE is often compared to other estimators in terms of its accuracy, computational efficiency, and versatility. It is a simple and effective algorithm that can be a useful tool for certain machine-learning tasks, but it may not be the best choice for all applications.

Averaged One-Dependence Estimators (AODE) in Python:

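Here is a simple step-by-step implementation in Python. Note that the train() and predict() functions below estimate only the class probabilities P(y) and the per-class feature probabilities P(x_i | y) and multiply them together; this is the Naive Bayes core of AODE. A full AODE additionally estimates P(x_j | y, x_i) for every pair of features, using each feature in turn as the super-parent, and averages the resulting estimates.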

Step 1: Import the necessary libraries, including defaultdict from the collections module.

```python
from collections import defaultdict
```

Step 2: Define a train() function that takes a dataset (a list of (features, label) pairs) as input. Inside train(), create a dictionary called probabilities that will store the probabilities of the different classes and feature values. Making it a defaultdict whose default value is another dictionary lets us assign nested entries such as probabilities[y][i][x] without creating the inner dictionaries first. To calculate the probability of each class, we first create a class_counts dictionary that stores the number of data points in each class.

```python
def train(dataset):
    # probabilities[y]['total'] will hold P(y);
    # probabilities[y][i][v] will hold P(x_i = v | y).
    probabilities = defaultdict(lambda: defaultdict(dict))
    # Number of data points in each class.
    class_counts = defaultdict(int)
```

Step 3: We then iterate through the dataset and count the number of data points in each class, using the class_counts dictionary.

```python
    for data in dataset:
        x, y = data
        class_counts[y] += 1
```

Step 4: We then calculate the probability of each class by dividing the number of data points in that class by the total number of data points in the dataset.

```python
    for y, count in class_counts.items():
        probabilities[y]['total'] = count / len(dataset)
```

Step 5: Calculate the probability of each feature value for each class. To do this, we create a feature_counts dictionary that stores the number of times each feature value appears in each class:

```python
    # feature_counts[i][(v, y)] = number of class-y points whose feature i equals v
    feature_counts = defaultdict(lambda: defaultdict(int))
```

Step 6: We then iterate through the dataset and count the number of times each feature value appears in each class, using the feature_counts dictionary:

```python
    for data in dataset:
        x, y = data
        for i in range(len(x)):
            feature_counts[i][(x[i], y)] += 1
```

Step 7: Then, we calculate the probability of each feature value for each class by dividing the number of times that feature value appears in that class by the total number of data points in that class:

```python
    for i, counts in feature_counts.items():
        for (x, y), count in counts.items():
            probabilities[y][i][x] = count / class_counts[y]
```

Step 8: After calculating the probabilities of different classes and feature values, we return the probabilities dictionary from the train() function.

Step 9: At this point, the train() function is complete, and we can use it to train the AODE classifier on the dataset. For example, we can train the classifier and print the resulting probabilities dictionary as follows.

```python
model = train(dataset)
print(model)
```
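Printing the model directly also shows the default factories of the nested defaultdicts (something like defaultdict(<function train.<locals>.<lambda> at 0x...>, {...})), which is hard to read. A small hypothetical helper can convert them to ordinary dictionaries before printing:

```python
from collections import defaultdict


def to_plain(d):
    """Recursively convert nested defaultdicts into ordinary dicts for printing."""
    if isinstance(d, dict):  # defaultdict is a subclass of dict
        return {key: to_plain(value) for key, value in d.items()}
    return d


# A tiny nested structure shaped like the one train() builds.
nested = defaultdict(lambda: defaultdict(dict))
nested['A']['total'] = 0.25
nested['A'][0][1] = 1.0
print(to_plain(nested))  # {'A': {'total': 0.25, 0: {1: 1.0}}}
```

With the train() function above, print(to_plain(model)) prints the same probabilities without the factory functions.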

Complete Code Implementation (Naive Bayes core of AODE):

```python
from collections import defaultdict


def train(dataset):
    # probabilities[y]['total'] = P(y); probabilities[y][i][v] = P(x_i = v | y)
    probabilities = defaultdict(lambda: defaultdict(dict))

    # Count the data points in each class and turn the counts into P(y).
    class_counts = defaultdict(int)
    for data in dataset:
        x, y = data
        class_counts[y] += 1
    for y, count in class_counts.items():
        probabilities[y]['total'] = count / len(dataset)

    # Count how often each value of each feature occurs in each class.
    feature_counts = defaultdict(lambda: defaultdict(int))
    for data in dataset:
        x, y = data
        for i in range(len(x)):
            feature_counts[i][(x[i], y)] += 1

    # Turn the counts into conditional probabilities P(x_i = v | y).
    for i, counts in feature_counts.items():
        for (x, y), count in counts.items():
            probabilities[y][i][x] = count / class_counts[y]

    return probabilities


def predict(model, data):
    # Joint score P(y) * product of P(x_i | y) for every class y.
    joint_probabilities = defaultdict(int)
    for y, class_probabilities in model.items():
        joint_probabilities[y] = class_probabilities['total']
        for i, feature_probabilities in class_probabilities.items():
            if i != 'total':
                # A feature value never seen with class y gets probability 0.
                joint_probabilities[y] *= feature_probabilities.get(data[i], 0)

    # Normalize the joint scores into posterior probabilities.
    total = sum(joint_probabilities.values())
    if total == 0:
        raise ValueError("every class has zero probability for this data point")
    likelihoods = {y: p / total for y, p in joint_probabilities.items()}
    return max(likelihoods, key=likelihoods.get)


dataset = [
    ([1, 1, 1, 1], 'A'), ([1, 1, 1, 0], 'A'), ([1, 1, 0, 1], 'A'), ([1, 1, 0, 0], 'A'),
    ([1, 0, 1, 1], 'B'), ([1, 0, 1, 0], 'B'), ([1, 0, 0, 1], 'B'), ([1, 0, 0, 0], 'B'),
    ([0, 1, 1, 1], 'C'), ([0, 1, 1, 0], 'C'), ([0, 1, 0, 1], 'C'), ([0, 1, 0, 0], 'C'),
    ([0, 0, 1, 1], 'D'), ([0, 0, 1, 0], 'D'), ([0, 0, 0, 1], 'D'), ([0, 0, 0, 0], 'D'),
]

model = train(dataset)
print(predict(model, [0, 0, 0, 0]))
```

Output:

D
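The train() and predict() functions above multiply the class prior P(y) by the per-class probabilities P(x_i | y), so they compute Naive Bayes scores. The sketch below illustrates full AODE on the same data under stated assumptions: discrete features, a frequency threshold m = 1, and Laplace smoothing of the form commonly used with AODE. The names train_aode and predict_aode are hypothetical, not part of any library.

```python
from collections import Counter


def train_aode(dataset):
    """Collect the frequency counts AODE needs (discrete features assumed)."""
    model = {
        'N': len(dataset),
        'classes': Counter(),  # y -> F(y)
        'values': Counter(),   # (i, x_i) -> F(x_i), used for the threshold m
        'pairs': Counter(),    # (y, i, x_i) -> F(y, x_i)
        'triples': Counter(),  # (y, i, x_i, j, x_j) -> F(y, x_i, x_j)
    }
    for x, y in dataset:
        model['classes'][y] += 1
        for i, xi in enumerate(x):
            model['values'][(i, xi)] += 1
            model['pairs'][(y, i, xi)] += 1
            for j, xj in enumerate(x):
                model['triples'][(y, i, xi, j, xj)] += 1
    # Number of distinct observed values of each feature, for smoothing.
    model['card'] = Counter(i for i, _ in model['values'])
    return model


def predict_aode(model, x, m=1):
    """Return the class with the highest averaged one-dependence estimate."""
    N, classes, card = model['N'], model['classes'], model['card']
    k = len(classes)
    scores = {}
    for y in classes:
        total, used = 0.0, 0
        for i, xi in enumerate(x):
            if model['values'][(i, xi)] < m:
                continue  # skip super-parent values seen fewer than m times
            used += 1
            f_yxi = model['pairs'][(y, i, xi)]
            p = (f_yxi + 1) / (N + k * card[i])  # smoothed P(y, x_i)
            for j, xj in enumerate(x):
                if j != i:  # P(x_i | y, x_i) = 1, so j == i is skipped
                    p *= (model['triples'][(y, i, xi, j, xj)] + 1) / (f_yxi + card[j])
            total += p
        if used == 0:
            # No value passed the threshold: fall back to smoothed Naive Bayes.
            total, used = (classes[y] + 1) / (N + k), 1
            for j, xj in enumerate(x):
                total *= (model['pairs'][(y, j, xj)] + 1) / (classes[y] + card[j])
        scores[y] = total / used
    return max(scores, key=scores.get)


# The 16-point dataset from above, generated compactly:
# the first two features decide the class, the last two are noise.
dataset = [([a, b, c, d], 'ABCD'[2 * (1 - a) + (1 - b)])
           for a in (1, 0) for b in (1, 0) for c in (1, 0) for d in (1, 0)]
print(predict_aode(train_aode(dataset), [0, 0, 0, 0]))  # D

# XOR: the class depends only on whether the two features are equal.
xor_data = [([0, 0], 'A'), ([0, 1], 'B'), ([1, 0], 'B'), ([1, 1], 'A')]
xor_model = train_aode(xor_data)
print([predict_aode(xor_model, x) for x, _ in xor_data])  # ['A', 'B', 'B', 'A']
```

On the XOR data, the Naive Bayes scores from predict() tie at 0.125 for both classes at every point, because neither feature on its own says anything about the class. The pairwise estimates P(x_j | y, x_i) are what allow AODE to separate the classes.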

Limitations of Averaged One-Dependence Estimators (AODE)

Averaged One-Dependence Estimators (AODE) is a simple and effective machine learning algorithm, but it does have some limitations. The main limitations of AODE include:

- AODE assumes that each feature is independent of the other features given the class and a single super-parent feature. This is weaker than the Naive Bayes independence assumption, but it still ignores dependencies that involve several features at once, which can lead to inaccurate predictions when such dependencies matter.

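- AODE can be sensitive to noise and sparse data, because all of its probabilities are estimated from frequency counts. The pairwise estimates P(x_j | y, x_i) in particular rely only on the training examples that share both the class y and the super-parent value x_i, so rare combinations give unreliable estimates. Smoothing and a minimum-frequency threshold for super-parent values reduce this problem but do not remove it.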

- AODE assigns exactly one class to each input data point. It handles binary and multi-class problems directly, but multi-label tasks, where a data point can belong to several classes at once, require an adaptation such as training a separate classifier for each label.

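- AODE works only with discrete feature values, so numeric features must be discretized, which can discard information. And because each component captures dependencies on just one super-parent feature, complex non-linear interactions among many features may be missed. In these cases, other algorithms such as decision trees or neural networks may be more effective.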
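The sensitivity to sparse counts is easy to see numerically: a conditional probability estimated from a handful of examples can be exactly zero, and a single zero factor wipes out the whole product for that class (this is what feature_probabilities.get(data[i], 0) does in the predict() function above). Additive (Laplace) smoothing is a common remedy. A minimal sketch, with hypothetical helper names and counts:

```python
def conditional(count, parent_count, n_values, alpha=0.0):
    """Estimate P(value | parent) from counts with additive smoothing.

    alpha = 0 gives the raw relative frequency; alpha = 1 is Laplace smoothing.
    n_values is the number of distinct values the feature can take.
    """
    denominator = parent_count + alpha * n_values
    if denominator == 0:
        return 0.0  # no data and no smoothing: nothing to estimate from
    return (count + alpha) / denominator


def product_of_conditionals(counts, parent_count, n_values, alpha):
    """Multiply the estimates for several features that share one parent."""
    product = 1.0
    for c in counts:
        product *= conditional(c, parent_count, n_values, alpha)
    return product


# Hypothetical counts for three binary features given a parent seen 3 times;
# the second feature value was never observed together with that parent.
print(product_of_conditionals([2, 0, 3], 3, 2, alpha=0.0))  # 0.0
print(product_of_conditionals([2, 0, 3], 3, 2, alpha=1.0))  # about 0.096
```

Without smoothing, the unseen combination forces the whole estimate to 0; with alpha = 1 it only lowers it.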

Overall, while AODE is a simple and effective algorithm, it does have some limitations that should be considered when deciding whether to use it for a particular machine-learning task.
