A 2D convolution is a widely used operation in computer vision and deep learning. It applies a filter (kernel) to an image and produces a filtered output, also called a feature map. In this article, we will look at how to apply a 2D convolution in PyTorch.

PyTorch provides a convenient and efficient way to apply 2D convolutions. It offers functions for operating on tensors (PyTorch's multi-dimensional arrays) as well as modules for building deep learning models.

Convolutions are a fundamental concept in computer vision and image processing. They are mathematical operations that take an input signal (such as an image) and produce a transformed output signal that highlights certain features of the input. Convolutional neural networks (ConvNets or CNNs) are deep learning models that are built using convolutions as a core component.

In PyTorch, the terms 1D, 2D, and 3D convolution refer to the number of spatial dimensions the kernel slides over. That number is determined by the kind of input data the convolution is applied to.

1D convolutions are applied to 1D signals such as sequences or time series. The convolution kernel (or filter) slides along the signal. At each position it multiplies element-wise and accumulates the result to produce the output signal.

2D convolutions are applied to 2D signals such as grayscale or color images. The kernel slides over the height and width of the input, multiplying element-wise and accumulating at each position, and produces a 2D output. For a color image the kernel also spans all color channels, but it only slides along the two spatial axes.

3D convolutions are applied to 3D signals such as video or volumetric data. The kernel slides along all three axes (for example depth, height, and width) and produces a 3D output.
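As a rough illustration of the three cases, the sketch below passes random tensors through `nn.Conv1d`, `nn.Conv2d`, and `nn.Conv3d`. The channel counts, kernel size, and input sizes are arbitrary values chosen for the example. Only the number of spatial axes changes between the three layers.

```python
import torch
import torch.nn as nn

# Batch of 8 sequences, 4 channels, length 100: (N, C_in, L)
x1 = torch.randn(8, 4, 100)
y1 = nn.Conv1d(in_channels=4, out_channels=16, kernel_size=3)(x1)

# Batch of 8 RGB images, 32x32: (N, C_in, H, W)
x2 = torch.randn(8, 3, 32, 32)
y2 = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3)(x2)

# Batch of 8 single-channel volumes, 16 slices of 32x32: (N, C_in, D, H, W)
x3 = torch.randn(8, 1, 16, 32, 32)
y3 = nn.Conv3d(in_channels=1, out_channels=16, kernel_size=3)(x3)

print(y1.shape)  # torch.Size([8, 16, 98])
print(y2.shape)  # torch.Size([8, 16, 30, 30])
print(y3.shape)  # torch.Size([8, 16, 14, 30, 30])
```

No padding is used, so every sliding axis shrinks by kernel_size - 1 = 2.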

A convolution operation is a mathematical operation that is widely used in image processing and computer vision. It involves applying a convolution kernel, also known as a filter, to an image. The filter acts as a sliding window over the image, computing the dot product of its values with the underlying image pixels at each step.

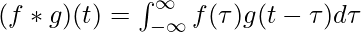

Mathematically, a discrete 2D convolution can be represented as:

$$(f * g)(x, y) = \sum_{m} \sum_{n} f(m, n)\, g(x - m, y - n)$$

where f and g are functions representing the image and the filter respectively, and * denotes the convolution operator.
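The sketch below evaluates this definition directly and compares it with `torch.nn.functional.conv2d`. It only computes the positions where the filter fits entirely inside the image, often called "valid" mode. In that case, the backwards index g(x - m, y - n) amounts to flipping the kernel in both axes. PyTorch's convolution layers compute cross-correlation, which skips the flip, so the two agree only after flipping the kernel by hand. The helper name `conv2d_true` and the asymmetric test kernel are made up for illustration.

```python
import numpy as np
import torch
import torch.nn.functional as F

def conv2d_true(f, g):
    """Hypothetical helper: 'valid' 2D convolution following the definition,
    i.e. a sliding dot product with the kernel flipped in both axes."""
    kh, kw = g.shape
    g_flipped = g[::-1, ::-1]
    out_h, out_w = f.shape[0] - kh + 1, f.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(f[i:i + kh, j:j + kw] * g_flipped)
    return out

f = np.arange(1, 17, dtype=np.float64).reshape(4, 4)      # 4x4 "image"
g = np.array([[1., 2., 0.], [0., 1., 0.], [0., 0., -1.]])  # asymmetric kernel

true_conv = conv2d_true(f, g)

# F.conv2d expects (N, C, H, W) input and (C_out, C_in, kH, kW) weight
ft = torch.from_numpy(f).reshape(1, 1, 4, 4)
gt = torch.from_numpy(g).reshape(1, 1, 3, 3)
xcorr = F.conv2d(ft, gt)[0, 0].numpy()                              # no flip
flipped = F.conv2d(ft, torch.flip(gt, dims=[2, 3]))[0, 0].numpy()   # flip first

print(np.allclose(true_conv, xcorr))    # False for this asymmetric kernel
print(np.allclose(true_conv, flipped))  # True
```

The flip does not matter for symmetric kernels such as the sharpening kernel used later in this article. It also rarely matters in practice, because the kernel weights are learned.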

2D convolution in PyTorch

Syntax of Conv2d():

```python
torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)
```

The number of output channels, Cout, equals the number of filters in the convolutional layer, which is set by out_channels. The main parameters are:

in_channels (int) – Number of channels in the input image.

out_channels (int) – Number of channels produced by the convolution.

kernel_size (int or tuple) – Size of the convolving kernel.

bias (bool, optional) – If True, adds a learnable bias to the output. Default: True.

stride (int or tuple, optional) – Stride of the convolution, i.e. how many pixels the kernel moves at each step, given as a single number or a tuple. Default: 1.

padding (int, tuple or str, optional) – Controls the amount of padding applied to the input. It can be either a string {'valid', 'same'} or an int or a tuple of ints giving the amount of implicit padding applied on both sides. padding='same' is only supported with stride=1. Default: 0.

dilation (int or tuple, optional) – Controls the spacing between the kernel points; also known as the à trous algorithm. With dilation d, a kernel of size k covers d × (k − 1) + 1 input pixels along each axis. Default: 1.

groups (int, optional) – Controls the connections between inputs and outputs. in_channels and out_channels must both be divisible by groups. Default: 1.
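To see how these parameters affect a layer, the sketch below builds a few `nn.Conv2d` layers on a random input and prints the resulting shapes. The input size (1, 4, 28, 28) and the channel counts are arbitrary choices. The shapes in the comments follow from the output-size formula with floor division.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 4, 28, 28)  # (N, C_in, H, W); sizes chosen for illustration

# out_channels = number of filters; each filter has shape (in_channels, kH, kW)
conv = nn.Conv2d(in_channels=4, out_channels=8, kernel_size=3)
print(conv.weight.shape)  # torch.Size([8, 4, 3, 3])
print(conv.bias.shape)    # torch.Size([8])

# stride=2: (28 - 3) // 2 + 1 = 13
y_stride = nn.Conv2d(4, 8, kernel_size=3, stride=2)(x)
print(y_stride.shape)  # torch.Size([1, 8, 13, 13])

# padding='same' keeps H and W unchanged (stride must be 1)
y_same = nn.Conv2d(4, 8, kernel_size=3, padding='same')(x)
print(y_same.shape)  # torch.Size([1, 8, 28, 28])

# dilation=2 spreads the 3x3 kernel over a 5x5 area: 28 - 5 + 1 = 24
y_dil = nn.Conv2d(4, 8, kernel_size=3, dilation=2)(x)
print(y_dil.shape)  # torch.Size([1, 8, 24, 24])

# groups=4: each filter only sees in_channels // groups = 1 input channel
grouped = nn.Conv2d(4, 8, kernel_size=3, groups=4)
print(grouped.weight.shape)  # torch.Size([8, 1, 3, 3])
```

The weight always has shape (out_channels, in_channels // groups, kH, kW). This is why in_channels must be divisible by groups.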

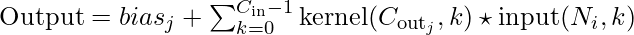

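PyTorch computes each output channel of a 2D convolution with the following formula:

$$\text{out}(N_i, C_{\text{out}_j}) = \text{bias}(C_{\text{out}_j}) + \sum_{k=0}^{C_{\text{in}}-1} \text{weight}(C_{\text{out}_j}, k) \star \text{input}(N_i, k)$$

where ⋆ is the 2D cross-correlation operator, N is the batch size, C denotes a number of channels, H is the height of the input in pixels, and W is the width in pixels.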

The input shape is the shape of the whole batch tensor passed to the layer, not of a single sample. It is defined as (N, Cin, Hin, Win), where:

- N is the batch size or number of samples in the batch

- Cin is the number of channels in the input data

- Hin is the height of the input data

- Win is the width of the input data

The output shape refers to the dimensions of the output from a convolutional layer. The shape is defined as (N, Cout, Hout, Wout), where:

- N is the batch size or number of samples in the batch

- Cout is the number of channels in the output data

- Hout is the height of the output data

- Wout is the width of the output data
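PyTorch's documentation describes each output channel j as follows. Take the bias for channel j. Then add, for every input channel k, the 2D cross-correlation of input channel k with the matching kernel slice weight[j, k]. The loop-based sketch below spells that out on tensors of shape (N, Cin, H, W) and checks the result against `F.conv2d`. The tensor sizes are arbitrary, and the loops are written for clarity, not speed.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
N, C_in, C_out, H, W, K = 2, 3, 4, 6, 6, 3  # arbitrary sizes for illustration
x = torch.randn(N, C_in, H, W)
weight = torch.randn(C_out, C_in, K, K)
bias = torch.randn(C_out)

H_out, W_out = H - K + 1, W - K + 1  # no padding, stride 1
manual = torch.zeros(N, C_out, H_out, W_out)
for n in range(N):
    for j in range(C_out):
        # each output channel starts from its bias ...
        manual[n, j] += bias[j]
        # ... plus the cross-correlation of every input channel with its kernel slice
        for k in range(C_in):
            for r in range(H_out):
                for c in range(W_out):
                    patch = x[n, k, r:r + K, c:c + K]
                    manual[n, j, r, c] += (patch * weight[j, k]).sum()

reference = F.conv2d(x, weight, bias)
print(reference.shape)                               # torch.Size([2, 4, 4, 4])
print(torch.allclose(manual, reference, atol=1e-5))  # True
```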

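These shapes can be determined mathematically from the kernel size, stride, and padding of the convolutional layer. The formulas below assume dilation=1, and the square brackets denote rounding down (the floor function). The formula for Hout is: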

![Rendered by QuickLaTeX.com h_{out} = \left[\frac{h_{in} - \text{kernel\_size[0]}+ 2 * \text{padding[0]}}{\text{stride[0]}} + 1 \right]](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-f3964a14ef4e50f7bca17b68a048c71d_l3.png)

Similarly, the formula for Wout is:

![Rendered by QuickLaTeX.com w_{out} = \left[\frac{w_{in} - \text{kernel\_size[1]}+ 2 * \text{padding[1]}}{\text{stride[1]}} + 1 \right]](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-293b7b0202c651d1c1e25903624bb560_l3.png)
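The formulas above cover the case dilation=1. PyTorch's `nn.Conv2d` documentation gives the general form:

H_out = floor((H_in + 2 × padding[0] − dilation[0] × (kernel_size[0] − 1) − 1) / stride[0] + 1)

W_out follows the same pattern. With dilation=1 this reduces to the formula above. The hypothetical helper `conv2d_output_size` below implements it for integer padding and checks it against `nn.Conv2d` over a small grid of settings.

```python
import itertools
import torch
import torch.nn as nn

def conv2d_output_size(h_in, w_in, kernel_size, stride=(1, 1), padding=(0, 0), dilation=(1, 1)):
    """Hypothetical helper: (H_out, W_out) of nn.Conv2d for integer padding."""
    def one_dim(size, k, s, p, d):
        # floor((size + 2p - d*(k - 1) - 1) / s + 1); Python's // already floors
        return (size + 2 * p - d * (k - 1) - 1) // s + 1
    return (one_dim(h_in, kernel_size[0], stride[0], padding[0], dilation[0]),
            one_dim(w_in, kernel_size[1], stride[1], padding[1], dilation[1]))

# compare with the real layer on a non-square input
x = torch.randn(1, 1, 17, 23)
for k, s, p, d in itertools.product([1, 3, 5], [1, 2, 3], [0, 1, 2], [1, 2]):
    conv = nn.Conv2d(1, 1, kernel_size=k, stride=s, padding=p, dilation=d)
    actual = tuple(conv(x).shape[-2:])
    assert actual == conv2d_output_size(17, 23, (k, k), (s, s), (p, p), (d, d))

print(conv2d_output_size(4, 4, (3, 3)))                     # (2, 2)
print(conv2d_output_size(460, 460, (3, 3), stride=(2, 2)))  # (229, 229)
```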

Let's work through an example. Here we define a custom 4×4 image, a 3×3 kernel, and a single-element bias. Then we find the output shape using the formula above.

```python
import numpy as np
import torch

# 3x3 sharpening kernel
kernel = torch.tensor(
    [[0, -1, 0],
     [-1, 5, -1],
     [0, -1, 0]], dtype=torch.float32)

# single-element bias
bias = torch.tensor([5], dtype=torch.float32)

# 4x4 input image
image = torch.tensor(
    [[1, 2, 3, 4],
     [5, 6, 7, 8],
     [9, 10, 11, 12],
     [13, 14, 15, 16]], dtype=torch.float32)

def Output_shape(image, kernel, padding, stride):
    # spatial size of the image and of the kernel (last two dimensions)
    h, w = image.shape[-2], image.shape[-1]
    k_h, k_w = kernel.shape[-2], kernel.shape[-1]
    # floor((size - kernel_size + 2 * padding) / stride) + 1
    h_out = (h - k_h + 2 * padding) // stride[0] + 1
    w_out = (w - k_w + 2 * padding) // stride[1] + 1
    return h_out, w_out

Output_shape(image, kernel, padding=0, stride=(1, 1))
```

Output:

(2, 2)

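Now let's apply the convolution manually. We slide the kernel over the image, take the dot product at each position, and add the bias:

```python
output_shape = Output_shape(image, kernel, padding=0, stride=(1, 1))
k_h, k_w = kernel.shape
output = np.zeros(output_shape)

for i in range(output_shape[0]):
    for j in range(output_shape[1]):
        # dot product of the kernel with the patch under it, plus the bias
        patch = image[i:i + k_h, j:j + k_w]
        output[i, j] = torch.tensordot(patch, kernel).item() + bias.item()

output
```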

Output:

```
array([[11., 12.],
       [15., 16.]])
```

Example 1:

We'll start by creating a 2D convolution layer that applies a filter to an image.

The code defines the filter as a 3×3 tensor and the input image as a 4×4 tensor. It reshapes both to the 4D layouts that nn.Conv2d expects: (out_channels, in_channels, kH, kW) for the weight and (N, C, H, W) for the input. The nn.Conv2d class creates the 2D convolution layer. We specify the number of input and output channels, the kernel size, and whether to include a bias term; here we set bias=True.

We then replace the layer's randomly initialized weight and bias with our own values through conv.weight and conv.bias. Next, we apply the layer to the input image by calling conv(image). The result is a tensor that represents the filtered image.

```python
import torch
import torch.nn as nn

# 3x3 kernel reshaped to (out_channels, in_channels, kH, kW)
kernel = torch.tensor(
    [[0, -1, 0],
     [-1, 5, -1],
     [0, -1, 0]], dtype=torch.float32)
kernel = kernel.reshape(1, 1, 3, 3)

# one bias value per output channel
bias = torch.tensor([5], dtype=torch.float32)

# 4x4 image reshaped to (N, C, H, W)
image = torch.tensor(
    [[1, 2, 3, 4],
     [5, 6, 7, 8],
     [9, 10, 11, 12],
     [13, 14, 15, 16]], dtype=torch.float32)
image = image.reshape(1, 1, 4, 4)

conv = nn.Conv2d(in_channels=1, out_channels=1, kernel_size=3, bias=True)

# overwrite the random initial parameters with our kernel and bias
conv.weight = nn.Parameter(kernel)
conv.bias = nn.Parameter(bias)

output = conv(image)

print('Output Shape :', output.shape)
print('Output \n', output)
```

Output:

```
Output Shape : torch.Size([1, 1, 2, 2])
Output 
 tensor([[[[11., 12.],
          [15., 16.]]]], grad_fn=<ConvolutionBackward0>)
```

As you can see, the 2D convolution layer has produced a filtered output. It is a tensor of shape (1, 1, 2, 2) whose values match the manual computation above.
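The two nested loops of the manual version can be replaced by a single matrix product. `torch.nn.functional.unfold` extracts every 3×3 patch as a column. Multiplying those columns by the flattened kernel then gives all output values at once. This "im2col" formulation is a common way to express convolution. The sketch below is only an illustration, not a description of how PyTorch's own backends compute it. It uses the same kernel, bias, and 4×4 image as Example 1 and compares the result with `F.conv2d`.

```python
import torch
import torch.nn.functional as F

# same kernel, bias, and 4x4 image as in Example 1
kernel = torch.tensor([[0., -1., 0.],
                       [-1., 5., -1.],
                       [0., -1., 0.]])
bias = torch.tensor([5.])
image = torch.arange(1., 17.).reshape(1, 1, 4, 4)

# every 3x3 patch becomes one column: (N, C*3*3, number_of_patches) = (1, 9, 4)
patches = F.unfold(image, kernel_size=3)

# (1, 9) @ (1, 9, 4) -> (1, 1, 4): one dot product per patch, plus the bias
out = kernel.reshape(1, -1) @ patches + bias
# arrange the 4 patch results back into the 2x2 output grid
out = out.reshape(1, 1, 2, 2)

reference = F.conv2d(image, kernel.reshape(1, 1, 3, 3), bias)
print(out)                             # values 11, 12, 15, 16
print(torch.allclose(out, reference))  # True
```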

Example 2:

Let's try it on a real image.

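```python
import torch
import torch.nn as nn
import torchvision.transforms as T
from PIL import Image

# load the image and make sure it has 3 (RGB) channels
image = Image.open('pawan.jpeg').convert('RGB')

# (C, H, W) float tensor in [0, 1], then add a batch dimension -> (1, C, H, W)
Input = T.ToTensor()(image)
Input = Input.unsqueeze(0)
print('Input Tensor :', Input.shape)

# weight and bias are randomly initialized
conv = nn.Conv2d(in_channels=3, out_channels=3, kernel_size=3, stride=2, bias=True)
output = conv(Input)
print('Output Tensor :', output.shape)

# drop the batch dimension, detach from autograd, and clamp to [0, 1] for display
Out_img = output.squeeze(0).detach().clamp(0, 1)
Out_img = T.ToPILImage()(Out_img)
Out_img  # displays the image in a Jupyter notebook
```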

Output:

Input Tensor : torch.Size([1, 3, 460, 460])

Output Tensor : torch.Size([1, 3, 229, 229])

Output Image

Here, the output pixel values change on every run. We did not set the weight and bias, so PyTorch initializes them with random values. The output shape, however, is the same every time.
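To make the random result reproducible, one option is to seed PyTorch's random number generator with `torch.manual_seed` before creating the layer. The sketch below uses a random tensor of the same shape as a stand-in for the image, so it runs without `pawan.jpeg`. `make_conv` is a hypothetical helper name.

```python
import torch
import torch.nn as nn

def make_conv(seed):
    """Hypothetical helper: same layer as Example 2, with seeded initialization."""
    torch.manual_seed(seed)  # fixes the random initial weight and bias
    return nn.Conv2d(in_channels=3, out_channels=3, kernel_size=3, stride=2, bias=True)

x = torch.rand(1, 3, 460, 460)  # random stand-in for the image tensor

a = make_conv(0)(x)
b = make_conv(0)(x)
c = make_conv(1)(x)

print(torch.allclose(a, b))  # True: same seed, same weights, same output
print(torch.allclose(a, c))  # False: different seed, different weights
print(a.shape, c.shape)      # both torch.Size([1, 3, 229, 229])
```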

We can also calculate the output shape using the formula:

$$h_{out} = \left\lfloor \frac{h_{in} - \text{kernel\_size}[0] + 2 \times \text{padding}[0]}{\text{stride}[0]} + 1 \right\rfloor = \left\lfloor \frac{460 - 3 + 2 \times 0}{2} + 1 \right\rfloor = \left\lfloor \frac{457}{2} + 1 \right\rfloor = \lfloor 229.5 \rfloor = 229$$

$$w_{out} = \left\lfloor \frac{w_{in} - \text{kernel\_size}[1] + 2 \times \text{padding}[1]}{\text{stride}[1]} + 1 \right\rfloor = \left\lfloor \frac{460 - 3 + 2 \times 0}{2} + 1 \right\rfloor = \left\lfloor \frac{457}{2} + 1 \right\rfloor = \lfloor 229.5 \rfloor = 229$$

In this article, we looked at how to apply a 2D convolution in PyTorch. We defined a filter and an input image and created a 2D convolution layer with PyTorch's nn.Conv2d class. We then set the filter for the layer and applied it to the input image to produce a filtered output.

By using PyTorch’s convenient and efficient functions for performing 2D Convolution operations, we can easily build deep learning models that incorporate this important operation.
